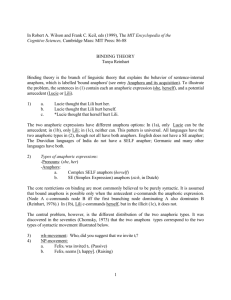

c. Only Lucie (λx (x respects x's husband))
