A3_survey1


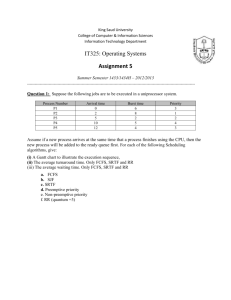

Adapting UNIX For A Multiprocessor Environment Using Threads

Group A3
Jahanzeb Faizan
Jonathan Sippel
Ka Hou Wong

October 15, 2001

Introduction
The UNIX process model
Limitations of the UNIX process model
Improving the UNIX process model
Threads
    Figure 1
    Figure 2
    Figure 3
Multithreaded systems
Kernel threads
Lightweight processes
    Figure 4
User threads
    Figure 5
    Figure 6
SunOS 5.0: A case study
    Figure 7
Summary
References

Group A3 i 3/6/16

Introduction

During the 1980s, the demand for processing power exceeded the capabilities of the computer systems of the day. To satisfy the demand for increased computing power, systems were developed with multiple processors.
These systems typically shared the same system memory and input/output (I/O) infrastructure, an advance that required operating systems to change. UNIX was selected for these new systems because it was originally designed as a portable, general-purpose, multitasking operating system and was continually being adapted to new and more powerful computer architectures. These multiprocessor computers were natural candidates for a UNIX-based operating system because the high-level management techniques UNIX used for files, I/O, memory, and processes were efficient models for manageable operating system functions. Since the operating system was originally developed to run on a single processor, there were a number of challenges in porting this familiar and efficient environment to powerful multiprocessor machines.

Early implementations of multiprocessor UNIX operating systems were asymmetric in nature. The kernel could run on only one processor at a time, while user processes could be scheduled on any of the available processors. The asymmetric design was a move in the right direction, but scalability declined rapidly as additional processors were added [4]. This brought about the need to redesign the UNIX operating system to better support multiprocessor systems.

In this paper we describe the traditional UNIX process model, discuss its limitations, and review how it has been redesigned to better support concurrency and parallelism in a multiprocessor environment. Because of the breadth of this topic, we do not discuss the new synchronization methods and scheduling algorithms needed to support multithreaded applications.

The UNIX process model

The UNIX application environment contains a fundamental abstraction: the process [7]. In traditional UNIX systems, the process executes a single sequence of instructions in an address space.
The address space of a process is simply the set of memory locations that the process may reference or access. The UNIX system is a multitasking environment, i.e., several processes are active in the system concurrently. To these processes, the system provides some features of a virtual machine. In a virtual machine architecture the operating system gives each process the illusion that it is the only process on the machine. The programmer writes an application as if only its code were running on the system. Under UNIX, each process has its own registers and memory, but must rely on the UNIX kernel for I/O and device control.

UNIX processes contend for the various resources of the system, such as the processor, memory, and peripheral devices. The UNIX kernel must act as a resource manager, distributing the system resources optimally. A process that cannot acquire a resource it needs must block (suspend execution) until that resource becomes available. Since the processor is one such resource, only one process can actually run at a time in a single-processor system. The rest of the processes are blocked, waiting for either the processor or other resources. The UNIX kernel provides an illusion of concurrency by allowing one process to have the processor for a brief period of time, then switching to another. In this way each process receives processor time and is allowed to make progress.

Limitations of the UNIX process model

The process model has two important limitations. First, many applications wish to perform several largely independent tasks that can run concurrently, but must share a common address space and other resources. These applications are parallel in nature and require a programming model that supports parallelism. On traditional UNIX systems, these types of programs must spawn multiple processes. Using multiple processes in an application has some disadvantages.
Creating additional processes adds substantial overhead, since creating a new process requires an expensive system call. Processes must use interprocess communication facilities such as message passing or shared memory to communicate because each process has its own address space.

Second, traditional processes cannot take advantage of multiprocessor architectures because a process can use only one processor at a time. An application must create a number of separate processes and dispatch them to the available processors. These processes must find ways of sharing memory and resources, and of synchronizing their tasks with each other.

Improving the UNIX process model

A process is defined by the resources it uses and the location at which it is executing. There are many instances where it would be useful for resources to be shared and accessed concurrently. This situation is similar to the case where a fork() system call is invoked with a new program counter, or thread of control, executing within the same address space. Many UNIX variants now provide mechanisms to support this through thread facilities.

Threads

A traditional UNIX process has a single thread of control. A thread of control, or simply a thread, is a sequence of instructions being executed in a program. Each thread has a program counter (PC) and a stack to keep track of local variables and return addresses. Threads share the process instructions and most of the process's data. If a thread changes any shared data, the change can be seen by all other threads in the process. In addition, threads share most of the operating system state of a process [5].

A multithreaded UNIX process is no longer a thread of control in itself; instead it is associated with one or more threads. Each thread executes independently. The advantages of multithreaded systems are most apparent when combined with multiprocessor architectures.
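The difference between a shared and a private address space can be sketched in a few lines. The Python example below is only an illustration and is not taken from any of the systems discussed in this paper: the names `counter`, `bump`, `run_in_thread`, and `run_in_child_process` are hypothetical, and `os.fork()` assumes a POSIX system. An update made by a second thread is visible to the caller, while the same update made by a forked child changes only the child's copy of the data.

```python
import os
import threading

# Data living in the address space of this process (hypothetical example state).
counter = {"value": 0}


def bump():
    """Increment the counter in whatever address space the caller runs in."""
    counter["value"] += 1


def run_in_thread():
    """Run bump() in a second thread of the same process."""
    worker = threading.Thread(target=bump)
    worker.start()
    worker.join()
    return counter["value"]  # the thread's update is visible here


def run_in_child_process():
    """Run bump() in a forked child, which works on its own copy of the data."""
    pid = os.fork()
    if pid == 0:
        bump()       # changes only the child's private copy
        os._exit(0)  # leave the child immediately, skipping cleanup handlers
    os.waitpid(pid, 0)
    return counter["value"]  # the parent's copy is unchanged by the child


if __name__ == "__main__":
    print("after thread:", run_in_thread())
    print("after child process:", run_in_child_process())
```

To get a result back from the child, the parent would need an interprocess communication mechanism such as a pipe or shared memory, which is the extra cost attributed to the multiprocess approach above.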
An application can achieve true parallelism by running each thread of a multithreaded process on a different processor.

Figure 1

Figure 1 [7] shows a set of single-threaded processes executing on a system with a single processor. It appears that the processes are running concurrently on the system because each process is executed for a brief period of time before the system switches to the next. In this example the first three processes are associated with a server application. The server program starts a new process for each active client. The server processes have nearly identical address spaces and share information with one another using interprocess communication mechanisms. The last two processes are running another server application.

Figure 2

Figure 2 [7] shows two servers running in a multithreaded system. Each server runs as a single process, with multiple threads sharing a single address space. Either the kernel or a user threads library, depending on the operating system, handles interthread context switching. Since all of the application threads share a common address space, they can use efficient, lightweight interthread communication and synchronization mechanisms, which significantly reduce the demand on the memory subsystem.

There are potential disadvantages with this approach. For instance, a single-threaded process does not have to protect its data from other processes. Multithreaded processes must be concerned with all data in their address space. If more than one thread can access the data, the threads must use some form of synchronization to avoid data corruption.

Figure 3

Figure 3 [7] shows two multithreaded processes running on a system with multiple processors. All threads of one process share the same address space, but each runs on a different processor. Therefore, the threads are all running concurrently.
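The need for synchronization when several threads share data can be illustrated with a minimal sketch. The function name `count_with_lock` and its parameters are hypothetical, and Python's `threading.Lock` stands in for whatever mutual exclusion primitive a given thread facility provides. Each worker performs a read-modify-write on a shared total, and the lock ensures that only one thread at a time executes that step.

```python
import threading


def count_with_lock(num_threads=4, increments=10_000):
    """Have several threads update one shared total under a mutex."""
    total = 0
    lock = threading.Lock()

    def worker():
        nonlocal total
        for _ in range(increments):
            # Only one thread at a time may execute the read-modify-write.
            with lock:
                total += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


if __name__ == "__main__":
    print(count_with_lock())
```

In standard CPython builds the global interpreter lock prevents threads from executing Python bytecode in parallel, so this sketch demonstrates mutual exclusion rather than a multiprocessor speedup.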
Running threads on several processors at once improves performance considerably because many threads can run at the same time, but it also complicates synchronization because only one thread should access shared data at a time.

Multithreaded systems

The parallelism of a multiprocessor application is the actual degree of parallel execution achieved and is limited by the number of physical processors available to the application. Concurrency is the maximum parallelism the application can achieve with an unlimited number of processors. It depends on how the application is written, how many threads of control can execute at the same time, and the availability of the proper resources for processing.

A system provides system concurrency when the kernel recognizes multiple threads of control within a process, schedules them independently, and multiplexes them onto the available processor(s). Both single-processor and multiprocessor applications can benefit from system concurrency because the kernel is able to schedule another thread if one blocks on an event or resource.

User-level thread libraries are used by applications to provide user concurrency. The kernel does not recognize these user threads, or coroutines, so they must be scheduled and managed by the applications themselves. True concurrency or parallelism is not achieved since these coroutines cannot actually run in parallel. However, an application can use non-blocking system calls to keep several interactions in progress simultaneously. User threads capture the state of these simultaneous interactions in per-thread local variables on the thread's stack instead of using a global state table, which simplifies programming.

Each concurrency model offers limited value by itself. Threads are used both as organizational tools and to exploit multiple processors. A kernel thread facility allows parallel execution on multiple processors, but it is not suitable for structuring user applications.
On the other hand, a purely user-level facility is only useful for structuring applications and does not allow parallel execution of code.

Many systems combine system and user concurrency to implement a dual concurrency model. The kernel recognizes multiple threads in a process, and libraries add user threads that are not seen by the kernel. User threads are desirable in systems with multithreaded kernels because they allow synchronization between concurrent routines in a program without the overhead of making system calls. Splitting the thread support functionality between the kernel and the threads library also reduces the size and responsibilities of the kernel.

Kernel threads

A kernel thread does not have to be associated with a user process. The kernel creates and destroys it internally as needed, and it is responsible for executing a specific function. It has its own kernel stack and shares the kernel text and global data. It can be independently scheduled and uses the standard synchronization mechanisms of the kernel (e.g., sleep() and wakeup()) [7].

Kernel threads are mostly used for performing operations like asynchronous I/O. Instead of providing special mechanisms for such operations, the kernel can create a new thread to handle each request. The thread handles the request synchronously, but the operation appears to be asynchronous to the rest of the kernel. Kernel threads are also used to handle interrupts.

Kernel threads are inexpensive to create and use since they use limited resources. For instance, they only use the kernel stack and an area to save the register context when they are not running. Context switching between kernel threads is quick since no memory mappings need to be flushed [7].

Lightweight processes

A lightweight process (LWP) is a kernel-supported user thread. A system must support kernel threads before it can support LWPs.
As seen in Figure 4 [7], every process may have one or more LWPs, each supported by a separate kernel thread. The LWPs are independently scheduled and share the process's address space and other resources. They can make system calls and block for I/O or resources. True parallelism exists on a multiprocessor system since each LWP can be dispatched to run on a different processor. There are major advantages to using LWPs even on a single-processor system, since resource and I/O waits block individual LWPs and not the entire process.

Figure 4

Besides the kernel stack and register context, an LWP also needs to maintain some user state. This mostly includes the user register context, which must be saved when the LWP is preempted. Each LWP is associated with a kernel thread, but some kernel threads have no LWP and may be dedicated to system tasks.

These multithreaded processes are useful when each thread is relatively independent and does not frequently interact with other threads. User code in these processes is fully preemptible, and LWPs share a common address space. Any data that can be accessed at the same time by multiple LWPs must be accessed in a synchronized manner. The kernel provides facilities for locking shared variables, and LWPs that try to access locked data are blocked.

User threads

The thread abstraction can be provided entirely at the user level without involvement from the kernel. Library packages like POSIX pthreads and Mach c-threads are used to accomplish this [7]. These libraries provide all the functions for creating, synchronizing, scheduling, and managing threads and do not require any special assistance from the kernel, as illustrated in Figure 5 [7]. As a result, the thread interactions are very fast.

Figure 5

In Figure 6 [7], the library acts as a miniature kernel for the threads it controls by combining user threads and lightweight processes.
This combination creates a very powerful programming environment: the kernel recognizes, schedules, and manages the LWPs, while a user-level library multiplexes user threads on top of LWPs and provides facilities for inter-thread scheduling, context switching, and synchronization without involving the kernel [7].

Figure 6

Only the kernel has the ability to modify the memory management registers, so it retains the responsibility for process switching. User threads are not really schedulable entities, and the kernel has no knowledge of them. The kernel is responsible for scheduling the underlying process or LWP, which will use library functions to schedule its threads. The threads are preempted each time the process or LWP is preempted. Similarly, if a user thread makes a blocking system call, it blocks the underlying LWP. If the process has only one LWP, all its threads are blocked.

The library also provides protection for shared data structures using synchronization objects. These synchronization objects usually contain a type of lock variable, such as a semaphore, and a queue of threads blocked on it. Threads must acquire the lock before accessing the data structure. If the data is already locked, the library blocks the thread by adding it to the blocked-thread queue of the lock and transferring control to another thread.

Performance is the biggest advantage of user threads. They implement their functionality at user level without using system calls, which avoids the overhead of trap processing and of moving parameters and data across protection boundaries. They are also very lightweight and consume no kernel resources except when bound to an LWP [7].

SunOS 5.0: A case study

A prime example of a multithreaded UNIX operating system is SunOS 5.0, the operating system component of the Solaris 2.0 operating environment. Until 1992, SunOS supported only traditional UNIX processes.
Then, in 1992, it was redesigned as a modern operating system with support for symmetric multiprocessing.

SunOS 5.0 supports user threads through a library for their creation and scheduling, and the kernel knows nothing of these threads. SunOS 5.0 expects potentially thousands of user-level threads to be vying for processor time.

SunOS 5.0 defines an intermediate level of threads as well. Between user threads and kernel threads are lightweight processes. Each process contains at least one LWP. These LWPs are manipulated by the thread library. The user threads are multiplexed on the LWPs of the process, and only user threads currently connected to LWPs make progress. The rest are either blocked or waiting for an LWP on which they can run.

Standard kernel threads execute all operations within the kernel. There is a kernel thread for each LWP, and there are some kernel threads that run on the kernel's behalf and have no associated LWP (for instance, a thread to service disk requests). The SunOS 5.0 thread system is depicted in Figure 7. Kernel threads are the only objects scheduled within the system. Some kernel threads are multiplexed on the processor(s) in the system, whereas some are tied to a specific processor. For instance, the kernel thread associated with a device driver for a device connected to a specific processor will run only on that processor. By request, a thread can also be pinned to a processor. Only that thread runs on the processor, with the processor allocated to only that thread (Figure 7).

Figure 7

Consider how the system operates. Any one process may have many user threads. These user threads may be scheduled and switched among kernel-supported lightweight processes without the intervention of the kernel. No kernel context switch is needed for one user thread to block and another to start running, so user threads are extremely efficient. Lightweight processes support these user threads.
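Switching between user threads without kernel involvement can be sketched with a small cooperative scheduler. This is an illustrative assumption, not the SunOS threads library: Python generators stand in for user threads, `yield` marks the point where a thread gives up control, and the names `user_thread` and `run_round_robin` are hypothetical. Every switch happens inside ordinary user code, with no system call involved.

```python
from collections import deque


def user_thread(name, steps, log):
    """A hypothetical user thread: does `steps` units of work, yielding after each."""
    for i in range(steps):
        log.append((name, i))
        yield  # give control back to the library scheduler


def run_round_robin(threads):
    """Switch between user threads entirely in user code."""
    ready = deque(threads)
    while ready:
        current = ready.popleft()
        try:
            next(current)      # resume the thread until its next yield
        except StopIteration:
            continue           # thread finished; drop it from the queue
        ready.append(current)  # still runnable; requeue at the back


if __name__ == "__main__":
    log = []
    run_round_robin([user_thread("A", 2, log), user_thread("B", 3, log)])
    print(log)
```

Because the whole scheduler runs on a single underlying kernel-scheduled entity, a blocking call made by any one of these user threads would stall all of them, which is why the design described here multiplexes user threads over several LWPs.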
Each LWP is connected to exactly one kernel thread, whereas each user thread is independent of the kernel. There may be many LWPs in a process, but they are needed only when threads need to communicate with the kernel. For instance, one LWP is needed for every thread that may block concurrently in system calls. Consider five different file read requests that could be occurring simultaneously. Five LWPs would then be needed, because they could all be waiting for I/O completion in the kernel. If a process had only four LWPs, the fifth request would have to wait for one of the LWPs to return from the kernel. Adding a sixth LWP would gain nothing if there were only enough work for five.

The kernel threads are scheduled by the kernel's scheduler and execute on the processor(s) in the system. If a kernel thread blocks (usually waiting for an I/O operation to complete), the processor is free to run another kernel thread. If the thread that blocked was running on behalf of an LWP, the LWP blocks as well. Any user-level thread currently attached to the LWP also blocks. If the process containing that thread has only one LWP, the whole process blocks until the I/O completes.

With SunOS 5.0, a process no longer must block while waiting for I/O to complete. The process may have multiple LWPs; if one blocks, the others can continue to execute within the process.

Summary

Redesigning UNIX around threads has made it a much more efficient operating system. Applications that need to perform several largely independent tasks concurrently, but must share a common address space and other resources, can now take advantage of thread facilities. It is no longer necessary for these applications to spawn multiple processes, which eliminates the overhead of expensive system calls and makes more efficient use of memory and resources. By having multiple threads of control, a process is no longer limited to running on a single processor.
It can now take advantage of the parallelism that a multiprocessor architecture provides.

References

1. Maurice J. Bach, The Design of the UNIX Operating System, Prentice Hall, Englewood Cliffs, New Jersey, 1986.
2. J. R. Eykholt, S. R. Kleiman, S. Barton, R. Faulkner, A. Shivalingiah, M. Smith, D. Stein, J. Voll, M. Weeks, D. Williams, Beyond Multiprocessing… Multithreading the SunOS Kernel, USENIX Summer, 1992, San Antonio, Texas.
3. M. D. Janssens, J. K. Annot, and A. J. Van De Goor, Adapting UNIX for a Multiprocessor Environment, Communications of the ACM, September 1986, Vol. 29, no. 9, pp. 895-901.
4. Jim Mauro, Solaris Internals: Core Kernel Components, Sun Microsystems Press, 2001.
5. M. L. Powell, S. R. Kleiman, S. Barton, D. Shah, D. Stein, M. Weeks, SunOS Multithread Architecture, USENIX Winter, 1991, Dallas, Texas.
6. Channing H. Russell and Pamela J. Waterman, Variations on UNIX for Parallel-processing Computers, Communications of the ACM, December 1987, Vol. 30, no. 12, pp. 1048-1055.
7. Uresh Vahalia, UNIX Internals: The New Frontiers, Prentice Hall, Upper Saddle River, New Jersey, 1996.