- Howard Besser's Site

advertisement

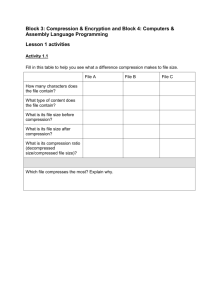

Best Practices 1 3/6/16 Best Practices for Image Capture1 (Prepared for California Digital Library, 7/99) This document outlines a set of "best practices" for libraries, archives, and museums who wish to create digital representations of parts of their collections. The recommendations here focus on the initial stages of capturing images and metadata, and do not cover other important issues such as systems architecture, retrieval, interoperability, and longevity. Our recommendations are directed towards institutions that want a large collection of their digital surrogates to persist for some prolonged period of time. Institutions with very small collections or those who anticipate relatively short life-spans for their digital surrogates may find some of the recommendations too burdensome. These recommendations really focus on reformatting existing works (such as handwritten manuscripts, typescript works on paper, bound volumes, slides, or photographs) into bitmapped digital formats. Because collections differ widely in their types of material, audience, and institutional purpose, specific practices may vary from institution to institution as well as for different collections within a single institution. Therefore, the sets of recommendations we make here attempt to be broad enough to apply to most cases, and try to synthesize the differing recommendations previously made for specific target collections/audiences (for references to these previous documents, see Bibliography). Because image capture capabilities are changing so rapidly, we chose to divide the "best practices" discussion into two parts: general recommendations that should apply to many different types of objects over a prolonged period of time, and specific minimum recommendations that take into consideration technical capabilities and limitations faced by a hypothetical large academic library in 1999. The first part will cover the practices that we think are fairly universal, and will be usable for many years to come. This includes the notion of masters and derivatives, and some discussion about image quality and file formats. The Summary of General Recommendations, found near the end of this document, provides a list of these suggested best practices. Since the issues surrounding image quality and file formats are complex, vary from collection to collection, and are in flux due to rapid technological developments and emerging standards, we have also summarized at the end of this document some more 1 This document was originally drafted by Howard Besser and edited and enhanced by the UC Berkeley Image Capture and Presentation Task Force (1998). The first published edition of this document appeared within the Digital Library Federation's Making of America II White Paper (http://sunsite.berkeley.edu/moa2). That version was then enhanced by a committee composed of UC Berkeley's Howard Besser, Dan Johnston, Jan Eklund, and Roy Tennant, then revised to its present form by the California Digital Library's Architecture Committee. Best Practices 2 3/6/16 specific recommendations that provide a list of minimally acceptable levels rather than a precise set of guidelines (see: Specific Minimum Recommendations for a sample Project). Digital Masters Digital master files are created as the direct result of image capture. The digital master should represent as accurately as possible the visual information in the original object.2 The primary functions of digital master files are to serve as a long-term master image and as a source for derivative files. Depending on the collection, a digital master file may serve as a surrogate for the original, may completely replace originals or be used as security against possible loss of originals due to disaster, theft and/or deterioration. Derivative files are created from digital master images for editing or enhancement, conversion of the master to different formats, and presentation and transmission over networks. Typically, one would capture the master file at a very high level of image quality, then would use image processing techniques (such as compression and resolution reduction) to create the derivative images (including thumbnails) which would be delivered to users. Long term preservation of digital master files requires a strategy of identification, storage, and migration to new media, as well as policies about image use and access to them. The specifications for derivative files used for image presentation may change over time; digital masters can serve an archival purpose, and can be processed by different presentation methods to create necessary derivative files without the expense of digitizing the original object again. Because the process of image capture is so labor intensive, the goal should be to create a master that has a useful life of at least fifty years. Therefore, collection managers should anticipate a wide variety of future uses, and capture at a quality high enough to satisfy these uses. In general, decisions about image capture should err towards the highest quality. Some collections will need to do image-processing on files for purposes such as removing blemishes on an image, restoring faded colors from film emulsion, or annotating an image. For these purposes we strongly recommend that a master be saved before any image processing is done, and that the “beautified” image be used as a submaster to generate further derivatives. In the future, as we learn more about the side effects of image processing, and as new functions for color restoration are developed, the original master would still be available. 2 Note: this is similarity in terms of technical fidelity using objective technological measurements; this is not accuracy as determined by comparing the object scanned to an image on a display device. This type of accuracy is obtained by the manipulation of settings before scanning rather than by image processing after scanning. See further discussion of color pallette and monitors in the “Image Quality” section later in this document. Best Practices 3 3/6/16 Capturing the Image Appropriate scanning procedures are dictated by the nature of the material and the product one wishes to create. There is no single set of image quality parameters that should be applied to all documents that will be scanned. Decisions as to image quality typically take into consideration the research needs of users (and potential users), the types of uses that might be made of that material, as well as the artifactual nature of the material itself. The best situation is one where the source materials and project goals dictate the image quality settings and the hardware and software one employs. Excellent sources of information are available, including the experience of past and current library and archival projects (see Bibliography section entitled “Scanning and Image Capture”). The pure mechanics of scanning are discussed in Besser (Procedures and Practices for Scanning), Besser and Trant (Introduction to Imaging) and Kenney’s Cornell manual (Digital Imaging for Libraries and Archives). It is recommended that imaging projects consult these sources to determine appropriate options for image capture. Decisions of quality appropriate for any particular project should be based on best anticipation of use of the digital resource. Image Quality Image quality for digital capture from originals is a measure of the completeness and the accuracy of the capture of the visual information in the original. There is some subjectivity involved in determining completeness and accuracy. Sometimes the subjectivity relates to what is actually being captured (with a manuscript, are you only trying to capture the writing, or is the watermark and paper grain important as well?). At other times the subjectivity relates to how the informational content of what is captured will be used. ( For example, should the digital representation of faded or stained handwriting show legibility or reflect the illegibility of the source material? Should pink slides be “restored” to their proper color? And if the digital image is made to look “better” than the original, what conflicts does that cause when a user comes in to see the original and it looks “worse” than the onscreen version? See Sidebar II for more complete discussion of these problems.). Image quality should be judged in terms of the goals of the project, and ultimately depends on an understanding of who are the users (and potential users), and what kind of uses will they make of this material. In past projects, some potential use has been inhibited because not enough quality (in terms of resolution and/or bit-depth) was captured during the initial scanning. Image quality depends on the project's planning choices and implementation. Project designers need to consider what standard practices they will follow for input resolution and bit depth, layout and cropping, image capture metric (including color management), and the particular features of the capture device and its software. Benchmarking quality (see Kenney’s Cornell Manual) for any given type of source material can help one select appropriate image quality parameters that capture just the amount of information needed from the source material for eventual use and display. By maximizing the image quality Best Practices 4 3/6/16 of the digital master files, managers can ensure the on-going value of their efforts, and ease the process of derivative file production. Quality is necessarily limited by the size of the digital image file, which places an upper limit on the amount of information that is saved. The size of a digital image file depends on the size of the original and the resolution of capture (number of pixels per inch in both height and width that are sampled from the original to create the digital image), the number of channels (typically 3: Red, Green, and Blue: "RGB"), and the bit depth (the number of data bits used to store the image data for one pixel). Measuring the accuracy of visual information in digital form implies the existence of a capture metric (i.e., the rules that give meaning to the numerical data in the digital image file). For example, the visual meaning of the pixel data Red=246, Green=238, Blue=80 will be a shade of yellow, which can be defined in terms of visual measurements. Most capture devices capture in RGB using software based on the video standards defined in international agreements. A thorough technical introduction to these topics can be found in Poynton's Color FAQ: <http://www.inforamp.net/~poynton/ColorFAQ.html>. We strongly urge that imaging projects adopt standard target values for color metrics as Poynton discusses, so that the project image files are captured uniformly. A reasonably well-calibrated grayscale target should be used for measuring and adjusting the capture metric of a scanner or digital camera.3 For capturing reflective copy, we recommend that a standard target consisting of grayscale, centimeter scale (useful for users to make sure that the image is captured or displayed at the right size), and standard color patches be included along one edge of every image captured, to provide an internal reference within the image for linear scale and capture metric information. Kodak makes a set consisting of grayscale (with approximate densities), color patches, and linear scale which is available in two sizes: 8 inches long (Q-13, CAT 152 7654) and 14 inches long (Q-14, CAT 152 7662) Bit depth is an indication of an image's tonal qualities. Bit depth is the number of bits of color data which are stored for each pixel; the greater the bit depth, the greater the number of gray scale or color tones that can be represented and the larger the file size. The most common bit depths are: 3 Bitonal or binary, 1 bit per pixel; a pixel is either black or white 8 bit gray scale,; 8 bits per pixel; a pixel can be one of 256 shades of gray 8 bit color, 8 bits per pixel ("indexed color"); a pixel is one of 256 colors Targets for source material that themselves are intermediates (eg. slides, microfilm, photocopies) may be more confusing than helpful. Targets are not reasonable for slides because of the small size. Targets for other intermediates can be misleading, as future users employing them to adjust their viewing environment to the image may be confused as to whether they are adjusting to the proper settings for viewing the intermediate or to viewing the original. Best Practices 5 3/6/16 24 bit color (RGB), 24 bits per pixel; each 8-bit color channel can have 256 levels, for a total of 16 million different color combinations While it is desirable to be able to capture images at bit depths greater than 24 (which only allows 256 levels for each color channel), standard formats for storing and exchanging higher bit-depth files have not yet evolved, so that we expect that (at least for the next few years) the majority of digital master files will be 24-bit. Project planners considering bitonal capture should run some samples from their original materials to verify that the information captured is satisfactory; frequently grayscale capture is desirable even for bitonal originals. 8-bit color is seldom suitable for digital masters. Useful image quality guidelines for different types of source materials are listed in Puglia & Rosinkski’s NARA Guidelines and in Kenney’s Cornell Manual (see bibliography). Formats Digital masters should capture information using color rather than grayscale approaches where there is any color information in the original documents. Digital masters should never use lossy compression schemes and should be stored in internationally recognized formats. TIFF is a widely used format, but there are many variations of the TIFF format, and consistency in use of the files by a variety of applications (viewers, printers etc.) is a necessary consideration. In the future, we hope that international standardization efforts (such as ISO attempts to define TIFF-IT and SPIFF) will lead vendors to support standards-compliant forms of image storage formats. Proprietary file formats (such as Kodak’s Photo CD or the LZW compression scheme) should be avoided for any long-term project. Most projects currently use uncompressed TIFF 6 images. While it is our recommendation that no file compression be used at all for digital master files, we recognize that there may be legitimate reasons for considering it. Limited storage resources may force the issue by requiring the reduced file sizes that file compression affords. Those who choose to go this route should be careful to take into consideration digital longevity issues. As a general rule, lossless compression schemes should be preferred over lossy compression schemes. Lossless compression makes files smaller, and when they are decompressed they are exactly the same as before they were compressed. Lossy compression actually combines and throws away data (usually data that cannot be readily detected by the human eye), so decompressed lossy images are different than the original image, even though those differences may be difficult for our eyes to see. Typically, lossy compression yields far greater compression ratios than lossless. But unlike lossy compression, lossless compression will not eliminate data we may later find useful. Lossy compression is unwise, as we do not yet know how today’s lossy compression schemes (optimized for human eyes viewing a CRT screen) may affect future uses of digital images (such as computer-based analysis systems or display on Best Practices 6 3/6/16 future display devices). But even lossless compression adds a level of complexity to decoding the file many years hence. And many vendor products that claim to be lossless (primarily those that claim “lossless JPEG”) are actually lossy. Image Metadata Metadata or data describing digital images must be associated with each image created, and most of this should be noted at the point of image capture. Image metadata is needed to record information about the scanning process itself, about the storage files that are created, and about the various pieces that might compose a single object. The number of metadata fields may at first seem daunting. However, high proportions of these fields are likely to be the same for all the images scanned during a particular scanning session. For example, metadata about the scanning device, light source, date, etc. is likely to be the same for an entire session. And some metadata, about the different parts of a single object (such as the scan of each page of a book), will be the same for that entire object. This kind of repeating metadata will not require keyboarding each individual metadata field for each digital image; instead, these can be handled either through inheritance or by batch-loading of various metadata fields. Administrative metadata includes a set of fields noting the creation of a digital master image, identifying the digital image and what is needed to view or use it, linking its parts or instantiations to one another, and ownership and reproduction information. Structural metadata includes fields that help one reassemble the parts of an object and navigate through it. Descriptive metadata provides information about the original scanned object itself, to support discovery and retrieval of the material from within a larger archive. Derivative Images Since the purpose of the digital master file is to capture as much information as is practical, smaller derivative versions will almost always be needed for delivery to the end user via computer networks. In addition to speeding up the transfer process, another purpose of derivatives may be to digitally "enhance" the image in one form or another (see discussion below of artifact vs. content) to achieve a particular goal. Such enhancements should be performed on a submaster rather than on the digital master file (which should reflect only what the particular digitization process has captured). Derivative versions are typically not files that will be preserved, as the digital master file is for that purpose. Derivative images for web-based delivery might be pre-computed in batch mode from masters early on in a project, or could be generated on demand from web-resident Best Practices 7 3/6/16 submasters on-the-fly as part of a progressive decompression function or through an application such as MrSID (Multi-resolution Seamless Image Database). Sizes Typical derivative versions include a small preview or "thumbnail" version (usually no more than 200 pixels for the longest dimension) and a larger version that mostly fills the screen of a computer monitor (640 pixels by 480 pixels fills a monitor set at a standard PC VGA resolution). Depending on the need for users to detect detail in an image, a higher resolution version may be required as well. The full set and sizes of derivative images required will depend upon a variety of factors, including the nature of the material, the likely uses of that material, and delivery system requirements (such as user interface). Derivative files should be created using software to reduce the resolution of the master image, not by adjusting the physical dimensions (width and height). After reducing the resolution, it may be necessary to sharpen the derivative image to produce an acceptable viewing image (e.g., by using "unsharp mask" in Adobe Photoshop). It is perfectly acceptable to use image processing on derivative images, but this should never be done to masters. Derivative images will frequently be compressed using lossy compression. Compression algorithms are usually optimized for a particular type of image (e.g. JPEG achieves high compression ratios for pictorial images, but cannot compress images of text very much without introducing compression artifacts), and one should be careful not to use the wrong type of compression scheme for a particular image. Compression algorithms such as JPEG involve a spectrum of options (ranging from high compression ratios that involve visible loss to low compression ratios that involve little visible loss). Each software implementation of these options label them differently (on scales of 1-3, scales of 1-10, options high/medium/low, etc.), and there is currently no objective and interoperable way to declare which of a range of options one has chosen. Artifactual vs. Enhanced Many historical images are faded, yellowed, or otherwise decayed or distorted. Image enhancement techniques can in some cases result in a much improved image for viewing the content of the image rather than the decayed condition of the artifactual print or transparency. In situations where such an enhanced viewing version is desired, it should in most cases be offered in addition to a version that more closely depicts the condition of the artifact. By having both images available, users will understand the condition of the original while having a more useable version for online viewing. Production of artifactual derivatives can often be automated by using software that can perform a series of standard operations. Production of enhanced versions, however, will most likely not be able to be automated, due to the inability of any one standard transformation procedure to apply equally to all images in a particular project. If Best Practices 8 3/6/16 automated procedures for image enhancement are not effective, the costs of creating these images individually will need to be considered in the overall project cost. Color Management The objective of color management is to control the capture and reproduction of color in such a way that an original print can be scanned, displayed on a computer monitor, and printed, with the least possible change of appearance from the original to the monitor to the printed version. This objective is made difficult by the limits of color reproduction: input devices such as scanners cannot "see" all the colors of human vision, and output devices such as computer monitors and printers have even more limited ranges of colors they can reproduce. Further, there is substantial variability in the color reproduction of different capture, display and output devices, even among identical models from the same manufacturer. Most commercial color management systems are based on the ICC (International Color Consortium) data interchange standard, and are often integrated with image processing software used in the publishing industry. They work by systematically measuring the color properties of digital input devices and of digital output devices, and then applying compensating corrections to the digital file to optimize the output appearance. Although color management systems are widely used in the publishing industry, there is no consensus yet on standards for how (or whether) color management techniques should be applied to digital master files. Though projects may experiment with color management systems for derivative files, until a clear standard emerges it is not recommended that digital master files be routinely processed by color management software.4 Strategies for Digital Preservation How to keep digital files alive and accessible over time is a key issue facing our field, and as yet little consensus has emerged (Waters et. al.). The two general approaches are Emulation and Migration. Emulation assumes that we will keep files in their current encoding format, and that software will eventually be written for future computers and operating systems that will emulate current viewing and manipulation environments. Migration requires that files be periodically copied from one encoding format to a newer format (such as from WordStar to Microsoft Word 3 to Word 6 to Word 8, ...). Both approaches assume that files will be periodically transferred from one physical medium to another (to avoid deterioration of the tape, disk, or other storage media). Appropriate metadata is critical to both approaches. Metadata is necessary to: know what file formats the file requires, and to make sure that all related files are emulated or migrated. Metadata will also be crucial for future environments which may include features such as automatic color control, and measurement tools to compare image sizes. 4 Some image processing tools (such as Photoshop 5) default to implementing color management. Users need to beware, and may need to turn off such functions. Best Practices 9 3/6/16 Summary of General Recommendations Plan image capture projects with a goal of long-term access to the digital materials created Think about users (and potential users), uses, and type of material/collection Scan at the highest quality you can possibly justify based on potential users/uses/material. Err on the side of quality. Do not let today’s delivery limitations influence your scanning file sizes; understand the difference between digital masters and derivative files used for delivery Many documents which appear to be bitonal actually are better represented with grayscale scans; some documents that appear to be grayscale are better represented in color Include grayscale target, standard color patches, and ruler in the scan Use objective measurements to determine scanner settings (do NOT attempt to make the image good on your particular monitor or use image processing to color correct) Don’t use compression for digital masters Store in a common (standardized) file format Capture as much metadata as is reasonably possible (including metadata about the scanning process itself) Specific Minimum Recommendations for a hypothetical large academic library in 1999 [[[insert table here]]] Some originals which may appear to be candidates for grayscale scanning may in fact be better represented by color capture (usually when the artifactual nature of a historical item needs to be retained); in those cases, use the color master recommendations. 2 Suggestions that we need to make sure have been integrated: Best Practices 10 3/6/16 Working through the Imaging Decisions: The Honeyman Project (SideBar I) In September of 1998 the Bancroft Library and the Library Photo Service completed a one-year project with an important digital imaging component: the Robert B. Honeyman, jr. Collection Digital Archive Project. The resulting work is now available as part of the California Heritage website at http://sunsite.berkeley.edu/CalHeritage With hindsight, we can look back on the image capture decisions, and consider how they influenced the project results. Project goals: The main goals of the project were to provide public access to images of each item in the collection for purposes of study and teaching, and to provide improved intellectual control of the collection by compiling an on-line catalog with links to the images. Project scope: The Honeyman collection in the Bancroft Library includes about 2300 items related to California and Western US history, including oil paintings, lithographs, sketches, maps, lettersheets, items in scrapbooks, and more. Project Image requirements The Honeyman project calls for web-viewing files in keeping with the design of the California Heritage website, which offers each image at three resolutions (derived from a master): a small thumbnail version, for browsing; a larger "medium resolution" version, sized to approximately fit on a typical computer screen; and a "high resolution" version for viewing image details, at twice the resolution of the "medium" version. Other considerations (discussed below) led to the decision to capture the digital masters at a higher level of resolution than was required to meet this minimal requirement. Additional Imaging goals Given that the added costs of improved image capture quality (over and above the minimum necessary for the project) are often small compared with the costs of repeating the project for more quality sometime in the future, some additional likely uses for the digital captures from the project were considered. In the future it should be possible to offer higher resolution versions of the images from the master for more detailed study via the web. Also, these images can provide a permanent record of each item in the collection and its condition at the time of capture. Finally, because images from the Honeyman collection are frequently published, the archived digital images may be supplied to publishers instead of photographs made conventionally. Best Practices 11 3/6/16 Imaging Equipment Available In addition to traditional (film) cameras, the Library Photographic Service has a digital camera: a PhaseOne Powerphase scanning back which fits on a Hasselblad camera. The Powerphase is used on a copy stand and controlled from a PC (personal computer, either Powermac or Wintel); it is capable of capturing an image measuring 7000 pixels square. Also, an Epson 836 flatbed scanner became available during the project; it can scan originals as large as 12x17 inches at 800 ppi (pixels per inch). Capture Specifications for Digital Masters File Format Digital masters are captured in 24 bit RGB color and stored in uncompressed TIFF format, as managed by the PhaseOne software. In the case of the Epson scanner, the scanner software runs as a plug-in to Adobe Photoshop, so the file is created by Photoshop 5. This file format is widely readable. Capture Resolution and Master File Size Because of the wide range of sizes and types of originals represented in the Honeyman collection, no single value for capture resolution could be set. Instead, a number of discrete resolution values were used, and originals were sorted prior to capture into groups suitable for each resolution, based on the capacity of the Powerphase camera. (The camera's capture resolution is adjusted by moving the camera on the copystand nearer or farther from the subject; the nearer the camera, the higher the capture resolution and the smaller the area of coverage). The highest resolution used was 600 ppi; at this resolution the camera will capture an area about 11.5 inches square, a comfortable fit for an 8-1/2 x 11 inch original. Several arguments were used to establish 600 ppi as the preferred resolution level for capture: 600 ppi is sufficient to capture extremely small text legibly; it is sufficient for high-quality publication at double life-size; and the resulting TIFF file sizes (up to 143 MB) are small enough to be processed and stored on suitablyequipped PC's. This is the sorting table: Resolution Coverage (ppi) (inches) 600 11.5 450 15.5 300 23 200 35 150 46 (for example, an 11x17 inch original would be copied at 300 ppi because it fits in a 23x23 inch capture area, but can't be completely covered in the 15.5x15.5 inch area covered at 450dpi) Best Practices 12 3/6/16 As a result of applying the sorting table, most of the capture files are in the range of 5000 to 6000 pixels in the long dimension, and their file sizes tend to be between 60 and 100 MB. The file processing workflow was developed and tested to handle file sizes up to about 140 MB. Included Targets A one-piece target is imaged at the edge of each capture. It combines the grayscale target and the color patches from a Kodak Q-13 Color Separation Guide and Grayscale with a centimeter scale, all in a very compact layout created using a hobby knife and twosided adhesive tape. The information from the target is intended to provide information about the tonality and scale of the image to scholars and technicians. The crucial "A," "M," and "B" steps of the grayscale are marked with small dots to make them easy to identify for making tonal measurements (see ‘Tonal Metric’ section below) during capture set-up and file processing. Several different-sized versions of the combined target are suited to the range of sizes of the originals. Tonal Metric The RGB data in the image files is captured in the native colorspace of the capture device (camera or scanner); that is, no color management step such as applying a Colorsync profile is used on the digital masters prior to saving. Before capture occurs, the camera (or scanner) operator uses the controls in the scanning software to adjust the color balance, brightness, and contrast of the scan so that the grayscale target in the image has the expected RGB values. These values are as follows: for the white "A" patch, R, G, and B values all at or near 239; for the middle-gray "M" patch, RGB = 98; for the near-black "B" patch, RGB = 31. These expected RGB values are appropriate for a 24 bit RGB image with gamma 1.8. Cropping and Background Originals are depicted completely, including blank margins, against a light gray background paper (Savage "Slate Gray"), so that the digital image documents the physical artifact, as well as reproducing the imagery that the artifact portrays. A narrow gap between the grayscale target and the original allows for cropping the grayscale out of the composition if desired for some new purpose. Adapting to Different Formats of Originals Some aspects of image capture were determined or suggested by the formats of the originals: Subcollections suitable for flatbed scanning: The lettersheets, clipper ship cards, and sheetmusic covers are all small enough to fit on the 12x17 inch platen of the Epson scanner, so that these groups were routed to the flatbed. By operating both the scanner and the camera in tandem, the production rate of image capture was increased. Best Practices 13 3/6/16 Miscellaneous flat art: this group, mostly stored in folders, represents the bulk of the Honeyman Collection, including watercolors, lithographs, maps, etc. Many items are mounted on larger backing sheets, and many are in mounts with window mats, making flatbed scanning difficult, even if size and fragility are not an issue. These items were sorted by capture resolution and routed to the Powerphase. Bound originals: scrap books and sketch books were captured with the digital camera. In cases where more than one item per page is cataloged, separate digital captures were made for each item; otherwise, the entire page is recorded. Framed works: the 136 framed items in the Collection were captured using a film intermediate. They were photographed at the Bancroft Library using 4x5 Ektachrome film, and the film was scanned on the Epson scanner. This was done to minimize the handling of these awkward and vulnerable objects in two ways: the originals would not have to be shuttled between the Bancroft Library and the Photographic Service, at opposite ends and levels of the Library complex, for the project; and the 4x5 film intermediates can be made available to publishers as need arises without further handling of the originals (no system is in place as yet to supply digital files to publishers). Storing the Digital Masters The digital capture files were recorded on external hard drives at the capture stations; the hard drives were than moved to other PC's equipped with CD-Recordable drives, where the digital master files were transferred to CD-R disks. Specifications for the Viewing Files The viewing files for the Honeyman web site are designed for convenient transmission over the Internet and satisfactory viewing using typical PC hardware and web browser software: A thumbnail GIF, maximum dimension 220 pixels A medium format JPEG-compressed (JFIF) version, maximum dimension 750 pixels, transmitted filesize around 50 KB. A higher-resolution JPEG version, maximum dimension 1500 pixels, transmitted filesize roughly 200 KB. Gamma adjustment: the viewing files are prepared for a viewing environment of gamma 2.2 Making the Viewing Files The viewing files were made from the CD-R files in batches, on yet another PC running Adobe Photoshop 5 or Debabelizer 3. The master files are opened, gammaadjusted, downsampled to size, sharpened with unsharp mask, and saved in GIF and JPEG formats. The derivatives from digital camera files are also color-managed with a Colorsynch profile created using Agfa Fototune Scan. Best Practices 14 3/6/16 Thoughts on Digital Capture from Film-based Formats (SideBar II) Digital masters should record the visual appearance of the original artifact in a welldefined way, so that later users can knowledgeably interpret and manipulate the data, and so that capture technicians can follow consistent procedures for capture and quality control. For most kinds of documents and flat artwork, this can be accomplished by defining the desired digital values for standard targets (primarily a grayscale) included in the imaging. However, originals in other formats such as slides and negatives present different challenges. For direct digital capture of negative collections, the visual appearance of the negative would ordinarily be of little interest; it is the visual appearance of the positive image created from the negative that is usually central. Methods and materials for making negatives have changed repeatedly over the years, and importantly, so have the materials and practices used in making prints from them. Planners may choose to express their capture plans in terms of the properties of the negative (e.g. transmission density), or try to describe a positive master format to be captured directly from the negative. Either course has pitfalls. While the first may seem like the safer approach, fully describing a negative will probably require more data than 8 bits per channel can carry, because negatives routinely record a much wider range of tones than media such as prints. It would be advisable for the planners to fully consider each step of the image transformation, from the densities in the negative, to raw scanned data, to digital master, to derivative products, making sure that enough of the right information is available in the master to satisfy the needs of derivative production. Also, as an aside, it's useful to remember that even black and white negatives often contain color information such as stained regions; scanning such a negative in color often reveals that one color channel emphasizes the effect of the stain, while another color channel may hide it. Planners contemplating a project to scan color slides, some of which are faded, may find they need to make choices about whether to try to correct for the fading at the time of scanning, or to store a "faded", realistic digital master and then possibly produce a "restored" derivative to satisfy a need for an unfaded product. The second method would be favored because it provides for both a realistic and a "restored" version, and offers the possibility that different, better "restored" versions can be created in the future, regardless of any ongoing changes in the condition of the slide. However, in some cases the scanner and its software may be better able to correct the faded color channels at the time of scanning by tailoring the information-gathering to the levels of each dye remaining. The purposes and priorities of the project can help make the necessary choices: if a purpose of the digital project is to give lecturers a tool for selecting lecture slides for projection, or to record the condition of the slides, then the project will require a product that faithfully represents the faded condition of the slide. Experimental trials can reveal whether the "restoration" is better if performed at the time of scanning for a particular scanner and Best Practices 15 3/6/16 level of fading; in some cases multiple scannings and multiple masters may be the solution. Another question comes up when a collection of slides that depict documents or works of art is to be scanned: should the digital master strive to represent the slide, or the original the slide depicts? On one hand, if the slide itself is considered an original work, the digital master should probably represent the slide as accurately as possible. On the other hand, many digital image capture projects involve a film intermediate: the document to be captured is first photographed on film, then the film is scanned to create the master file. In this case, the film may be seen as a vehicle for recording the tonality of the original document, and the scanning of the film may be adjusted so as to correct for the color and tonal errors introduced in the filming. The inclusion of a gray card or grayscale, photographed together with the work of art, can make objective corrections possible. Digital capture of faded documents presents many of the same challenges as scanning faded slides: is the objective to show the appearance of the document in its present condition, to make it maximally legible, or perhaps to depict it as we imagine it appeared as new? Ordinarily the digital master would be made to depict the document as it exists, and the master would then be processed to create the legible or "reconstructed" derivatives. However, in some situations faded information may be better captured using extreme, non-realistic means such as narrow-band light filters, or invisible wavelengths such as infrared. In these cases, multiple captures and multiple digital masters may be appropriate. Microfilm is a photographic medium designed not for natural, realistic tonal capture but for optimal legibility. A project to capture digital images from microfilm taken of manuscript originals might naturally choose to emphasize legibility over tonal accuracy in its masters and derivatives since the microfilm intermediate is already inclined that way; this would be an important consideration in choosing whether microfilm is an appropriate source for scanning for a particular purpose. Best Practices 16 3/6/16 Bibliography [[need some standardization of citation formats]] Metadata [Making of America II White Paper], Part III, Structural and Administrative Metadata http://sunsite.berkeley.edu/MOA2 [Cornell University Library] METADATA WORKING GROUP REPORT to Senior [Library] Management, JULY 1996 http://www.library.cornell.edu/DLWG/MWGReporA.htmand the related work “Distillation of [Cornell UL] Working Group Recommendations” November, 1996 http://www.library.cornell.edu/DLWG/DLMtg.html “Information Warehousing: A Strategic Approach to Data Warehouse Development” by Alan Perkins, Managing Principal of Visible Systems Corporation (White Paper Series) http://www.esti.com/iw.htm SGML as Metadata: Theory and Practice in the Digital Library. Session organized by Richard Gartner (Bodleian Library, Oxford) http://users.ox.ac.uk/~drh97/Papers/Gartner.html “A Framework for Extensible Metadata Registries” by Matthew Morgenstern of Xerox, a visiting fellow of the Design Research Institute at Cornell http://dri.cornell.edu/Public/morgenstern/registry.htm Using the Library of Congress Repository model, developed and used in the National Digital Library Program: The Structural Metadata Dictionary for LC Repository Digital Objects http://lcweb.loc.gov:8081/ndlint/repository/structmeta.html which then leads to further documentation of their Data Attributes http://lcweb.loc.gov:8081/ndlint/repository/attribs.html with a list of the attributes http://lcweb.loc.gov:8081/ndlint/repository/attlist.html and their definitions http://lcweb.loc.gov:8081/ndlint/repository/attdefs.html The same site then gives examples of using this model for a photo collection http://lcweb.loc.gov:8081/ndlint/repository/photosamp.html a collection of scanned page images http://lcweb.loc.gov:8081/ndlint/repository/timag-samp.html and a collection of scanned page images and SGML encoded, machine-readable text http://lcweb.loc.gov:8081/ndlint/repository/sgml-samp.html Best Practices 17 3/6/16 Organization of Information for Digital Objects The article “An Architecture for Information in Digital Libraries” by William Arms, Christophe Blanchi and Edward Overly of the Corporation for National Research Initiatives and published in D-Lib Magazine, February 1997 issue.http://www.dlib.org/dlib/february97/cnri/02arms1.html Howard Besser. Digital Longevity (website) http://sunsite.Berkeley.edu/Longevity Repository Access Protocol – Design Draft – Version 0.0 by Christophe Blanchi of CNRI is found at http://titan.cnri.reston.va.us:8080/pilot/locdesign.html and begins “ This document describes the repository prototype for the Library of Congress. This design is based on version 1.2 of the Repository Access Protocol (RAP) and the Structural Metadata Version 1.1 from the Library of Congress.” “The Warwick Framework: A Container Architecture for Aggregating Sets of Metadata” by Carl Lagoze, Digital Library Research Group, Computer Science Department, Cornell University; Clifford A. Lynch, Office of the President, University of California, and Ron Daniel Jr., Advanced Computing Lab, Los Alamos National Laboratory (July, 1996) http://cstr.cs.cornell.edu/Dienst/Repository/2.0/Body/ncstrl.cornell/TR96-1593/html Waters, Don, et. al. Preserving Digital Information: Report of the Task Force on the Archiving of Digital Information, Washington DC: Commission on Preservation and Access and Research Libraries Group, 1996 http://www.rlg.org/ArchTF/ Scanning And Image Capture Adobe Systems Incorporated, TIFF Revision 6: ftp://ftp.adobe.com/pub/adobe/devrelations/devtechnotes/pdffiles/tiff6.pdf This textbook-length standard defines the detailed structure of TIFF (Tagged Interchange File Format) graphics files for all standard TIFF formats (except TIFF/IT, see next entry). If you want to decipher the header information in a TIFF file, this provides the technical documentation. It is also interesting to see the variety of options (many rarely implemented) available to programmers within the standard, including features like JPEG compression, and metadata tags such as Copyright notice. Howard Besser and Jennifer Trant. Introduction to Imaging. Getty Art History Information Project. http://www.gii.getty.edu/intro_imaging/ Best Practices 18 3/6/16 Still the best overview of electronic imaging available for the beginner and should be considered recommended reading for any level. Starts with a basic description of what a digital image is, and continues with a discussion of the basic elements that need to be considered before, during, and after the capture process. Includes a detailed discussion of the image capture process, compression schemes, uses, as well as access to and documentation of the final product. Covers selection of scanning equipment, image-database software, network delivery, and security issues. Includes a top-notch glossary and links to many useful resources on the WWW. Highly recommended. -JE Howard Besser. Procedures & Practices for Scanning, Procedures and Processes for Scanning. Canadian Heritage Information Network (CHIN), http://sunsite.Berkeley.edu/Imaging/Databases/Scanning Posed as a series of questions to ask about the task at hand, this document outlines, in a very straightforward way, all the basic decisions that must be made before, during, and after the scanning process. Includes both abbreviated, and longer explanations of each point, making it useful to a wide audience with varying levels of technical sophistication. Electronic Text Center at Alderman Library, University of Virginia. "Image Scanning: A Basic Helpsheet, http://etext.lib.virginia.edu/helpsheets/scanimage.html A very straightforward, basic, how-to document that outlines the image scanning process at the Electronic Text Center at Alderman Library, University of Virginia. Includes a good, concise discussion of image types, resolution, and image file formats, as well as a brief discussion about "Archival Imaging" and associated metadata. Also includes more specific recommendations for using Adobe Photoshop and DeskScan software with an HP Scanjet flatbed scanner. Electronic Text Center at Alderman Library, University of Virginia. "Text Scanning: A Basic Helpsheet” http://etext.lib.virginia.edu/helpsheets/scantext.html A very concise description of the optical character recognition process that converts scanned images into text at the Electronic Text Center at Alderman Library, University of Virginia. Outlines the process in a step-by-step fashion, assuming the use of the Etext Center equipment, which consists of a pentium PC, an HP Scanjet flatbed scanner, and OmniPage Pro version 8 OCR software. Michael Ester. Digital Image Collections: Issues and Practice. Washington, D.C. , Commission on Preservation and Access (December, 1996). This pithy report is riddled with useful insights about how and why digital image collections are created. Ester is especially effective at Best Practices 19 3/6/16 pointing out the hidden complexities of image capture and project planning, without ever getting too technical for a general audience. Carl Fleischhauer. Digital Historical Collections: Types, Elements, and Construction. National Digital Library Program, Library of Congress, http://lcweb2.loc.gov/ammem/elements.html. One of three articles that cover the Library of Congress digital conversion activity as of August 1996. Discussion covers the types of collections converted, the access aids established for online browsers, types of digital reproductions made available (images, searchable text, sound files, etc.), and supplementary programs known as "Special Presentations" on the Library website. Describes the developmental approach to assigning names to digital elements, and how those elements are identified as items and aggregates. Brief descruption of how digital reproductions are being used in preservation efforts. Carl Fleischhauer. Digital Formats for Content Reproductions. National Digital Library Program, Library of Congress. http://lcweb2.loc.gov/ammem/formats.html A clear explanation of capturing digital representations of different types of materials. One of three articles that cover the Library of Congress digital conversion activity as of August 1996. Discussion documents Library's selection of digital formats for pictorial materials, textual materials, maps, sound recordings, moving-image materials, and computer file headers. Includes specific target values for tonal depth, file format, compression, and spatial resolution for the image and text categories. Good discussion of various mitigation measures that might be employed to reduce or eliminate moire patterns that result from the scanning of printed halftone illustrations and MrSID approach to map files. Sound recording and moving-image files are documented with the caveat that these will likely change in the near future as the technology evolves. Image Quality Working Group of ArchivesCom, a joint Libraries/AcIS Committee. Technical Recommendation for Digital Imaging Projects, http://www.columbia.edu/acis/dl/imagespec.html Contains a good Quick Guide, in table format, that recommends a specific conversion method, capture resolution, archive file format, screen format, and presentation format for 6 media types: 1) black and white text documents, 2) illustrations, maps & manuscripts, 3) 3-D objects, 4) 35mm slides & negatives, 5) medium to large format photos, negatives and transparencies or color microfiche, and 6) black and white microfilm. Clear, brief explanations for when to use film intermediaries, and more specific recommendations for bit-depth, capture resolution, and file formats for archival storage and presentation. Includes estimated file sizes for different file formats to facilitate the calculation of physical storage requirements. Best Practices 20 3/6/16 International Color Consortium: http://color.org/ The ICC is a consortium of major companies involved with digital imaging, formed to create industry-wide standards for digital color management. Systems such as Apple's Colorsync, Agfa's Fototune, and Microsoft's Integrated Color Management (II) which follow the standards are said to be "ICC compliant" and may be able to exchange information. The ICC web site offers a downloadable version of the standards along with other technical papers. International Organization for Standardization, Technical Committee 130 (n.d.). ISO/FDIS 12639: Graphic technology – Prepress digital data exchange – Tag image file format for image technology (TIFF/IT). Geneva: International Organization for Standardization (ISO). This standard defines an extension of the TIFF 6.0 standard specifically intended to apply to graphics files used by prepress applications in the printing industry. In addition to standardizing the formats used for electronic dissemination of page layouts, etc., it calls for backwardcompatibility with common TIFF 6-compatible applications such as Adobe Photoshop, to minimise proliferation of TIFF formats that can’t be opened by many applications. Anne R. Kenney. Digital Imaging for Libraries and Archives. Cornell University Library, June 1996. A very thorough and useful resource for digital imaging projects. The section on hardware is a bit dated, but most of the publication is still very useful. The explanations of digital imaging technology are useful, as is the advice for anyone tackling a digitization project for the first time. Text is VERY dense but is a good in-depth technical overview of the issues involved in the scanning process. The formulas are useful (if complex) and are better when it comes to text scanning than image scanning. Emphasis on benchmarking. Not for the faint of heart. Picture Elements, Inc. Guidelines for Electronic Preservation of Visual Materials (revision 1.1, 2 March 1995). Report submitted to the Library of Congress, Preservation Directorate. Poynton's Color FAQ: http://www.inforamp.net/~poynton/ColorFAQ.html This is one of several papers at this site by Charles Poynton introducing the technical issues of color and tonal reproduction in the digital realm for general audiences. Some of the explanations are accessible to everyone, such as how to adjust the brightness and contrast on a PC monitor; others include some math, such as how to transform from one color space to another. Many useful references are cited. Included are discussion of human visual response, primary colors, and the televisionbased standards underlying digital imaging. Best Practices 21 3/6/16 Steven Puglia and Barry Roginski. NARA Guidelines for Digitizing Archival Materials for Electronic Access, College Park: National Archives and Records Administration, January 1998. http://www.nara.gov/nara/vision/eap/digguide.pdf An exhaustive and specific set of guidelines for digitizing textual, photographic, maps/plans, and graphic materials. Includes specific guidelines about resolution and images size for master files, access files, and thumbnail files. Also includes specific guidelines for scanner/monitor calibration, and file header information tags. Handy quick reference chart at http://www.nara.gov/nara/vision/eap/digmatrx.pdf. Reilly, James M and Franziska S. Frey, "Recommendations for the Evaluation of Digital Images Produced from Photographic, Microphotographic, and Various Paper Formats" Report to the Library of Congress, National Digital Library Project by Image Permanence Institute. May, 1996 http://lcweb2.loc.gov/ammem/ipirpt.html This report is intended to guide the Library of Congress in setting up systematic ways of specifying and judging digital capture quality for LC's digital projects. It includes some interesting discussion about digital resolution, but discussion of tonality and color is brief. Best Practices 3/6/16 Specific Minimum Recommendations for a hypothetical large academic library in 1999 nal material /W text ocument 22 resolution in longest dimension 6000 pixels 3000 pixels6 digital master color depth 8 bit grayscale file format TIFF with lossless compression TIFF with lossless compression strations, Maps, uscripts, etc. 6000 pixels 3000 pixels 24 bit color mensional ects to be esented in dimensions m film, color gative or positive 6000 pixels 3000 pixels 24 bit color TIFF with lossless compression 6000 pixels 3000 pixels 24 bit color TIFF or PhotoCD with lossless compression m film, B/W gative or positive 6000 pixels 3000 pixels 8 bit grayscale TIFF or PhotoCD with lossless compression um to large mat color otograph, egative, parency or icrofiche ofilm, B/W 6000 pixels 3000 pixels 24 bit color TIFF or PhotoCD with lossless compression 6000 pixels 3000 pixels 8 bit grayscale TIFF with lossless compression 5 resolution in longest dimension5 1200 pixels 600 pixels screen display color depth file format resolution in longest dimension 3000 pixels or 6000 pixels print display color depth 8 bit grayscale GIF or JFIF 300 pixels 800 pixels 1500 pixels 3000 pixels 300 pixels 800 pixels 1500 pixels 3000 pixels 300 pixels 800 pixels 1500 pixels 3000 pixels 300 pixels 800 pixels 1500 pixels 3000 pixels 300 pixels 800 pixels 1500 pixels 3000 pixels 24 bit color JFIF, quality level 50 3000 pixels 24 bit color 24 bit color JFIF, quality level 50 3000 pixels 24 bit color JFIF, qua level 50-1 24 bit color JFIF, quality level 50 3000 pixels 24 bit color JFIF, qua level 50-1 8 bit grayscale JFIF, quality level 50 3000 pixels 8 bit grayscale JFIF, qua level 50-1 24 bit color JFIF, quality level 50 6000 pixels 24 bit color JFIF, qua level 50-1 300 pixels 800 pixels 1500 pixels 3000 pixels 8 bit grayscale JFIF, quality level 50 6000 pixels 3000 pixels 8 bit grayscale PDF or T with LZ compress The resource designer has considerable freedom to deviate from these recommendations to accommodate the presentation requirements of the individual collection. 6 The lower resolution represents a minimum value. Material scanned at less than the minimum value is not considered to be archival quality. Every effort should be made to scan at the recommended, rather than the minimum, value. 8 bit grayscale file form PDF or T with LZ compress JFIF, qua level 50-1