CHAPTER II THEORETICAL FRAMEWORK

2.1 Definition of Test


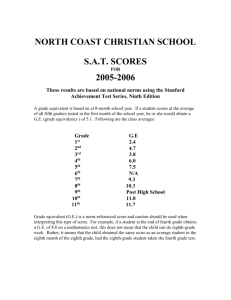

Testing is an unavoidable part of the teaching and learning process. After a certain period of learning, a test has to be conducted. According to Brown (2002), a test is a method of measuring a person's ability, knowledge, or performance in a given domain. A test is one way to measure what someone has gained during the learning process: it shows the learner's progress, ability, and knowledge of the given material from the time they began to learn until the test is conducted.

A psychological or educational test is a procedure designed to elicit certain behavior from which one can make inferences about certain characteristics of an individual (Carroll, 1986, p.46). Building on Carroll's definition, Bachman (2004, p.20) described a test as a measurement instrument designed to elicit a specific sample of an individual's behavior. As one type of measurement, a test usually quantifies the characteristics of individuals according to explicit procedures.

In language testing, a test is used as a measurement tool to find out someone's abilities in language learning. For test makers, a test can also serve as an evaluation of the teacher's ability in teaching, indicating whether they should change their teaching system or continue with their method.

Learning and testing are therefore inseparable. Nevertheless, a test can be a frightening thing for students who do not understand its aims and purposes.

2.2 Functions of Tests

There is no science without measurement, and testing, including language testing, is one form of measurement. A useful test, one that can measure someone's ability, must provide reliable and valid measurement for a variety of purposes.
Henning (1987) listed the functions of tests as follows:

2.2.1 Diagnosis and Feedback

Language tests and educational tests are generally intended to find out the strengths and weaknesses in the learners' ability during the learning process. This kind of test is known as a diagnostic test. The value of a diagnostic test is that it provides critical information to the student, teacher, and administrator that should make the learning process more efficient (Henning, 1987, p.2). Knowing the learners' strengths and weaknesses, the teacher can then use this information to improve the learners' ability.

2.2.2 Screening and Selection

A test can help in deciding whether someone is allowed to participate in an institution or not. The selection decision is made by determining who will benefit most from instruction, reach mastery of the language, and become a useful practitioner. In the area of language testing, a common screening instrument is the aptitude test, which is used to predict the success or failure of prospective students in a language-learning program (Carroll, 1965).

2.2.3 Program Evaluation

Another important use of tests, especially achievement tests, is to find out the effectiveness of an institution's programs. If the results of these tests are used to modify the learning program to better meet the needs of the students, the process is termed formative evaluation. By contrast, as cited in Henning (1987, p.3), a final exam is administered as part of the process called summative evaluation (Scriven, 1967).

2.2.4 Placement

Here tests are used to identify the level of the student and place the student at an appropriate level of instruction based on their capability. This kind of test is commonly used by language courses: the institute administers the test to measure the test-takers' ability and places them in the right class according to their level.
2.2.5 Providing Research Criteria

Test scores are used to provide information that serves as a standard value for research in other contexts. The test results feed into one study, and that study in turn becomes essential information for other research.

2.3 Types of Tests

As the purposes of tests have developed, so have the types of tests. According to Hughes (1989), different purposes of testing will usually require different kinds of tests.

2.3.1 Criterion-referenced Tests

Tests designed to provide direct information about what someone can actually do in the language are criterion-referenced tests. They are intended to classify people according to whether or not they are able to perform a set of tasks. Students are not compared with the achievement of other students; only their own achievement is measured. The score therefore reflects the degree to which they have learned the language. The tasks are set, and the performances are evaluated to determine whether the students pass or fail.

This kind of measurement has both strengths and weaknesses. According to Henning (1987, p.7), on the positive side, the process of developing a criterion-referenced test helps in clarifying objectives; the tests are useful when the objectives are under constant revision; and they are useful with small and unique groups for which norms are not available. On the negative side, Henning (1987) explained that the objectives measured are often too limited and restrictive, and that the objectives must be specified operationally. Another possible weakness is that scores are not referenced to a norm.

An example of a criterion-referenced test is the teacher-made test, which is usually conducted in the classroom. Harris (1969, p.1) noted that the concept of the teacher-made test is rather informal.
Classroom tests are generally prepared, administered, and scored by one teacher. In this situation, test objectives can be based directly on course objectives. The instructor, the test writer, and the evaluator are the same person. The students know well what is expected of them and what standard is required in the tests.

2.3.2 Norm-referenced Tests

The opposite of the criterion-referenced test is the norm-referenced test. By definition, a norm-referenced test must first be prepared and administered to a large sample of people from the target population. Norm-referenced tests relate one test-taker's performance to the performance of others. The acceptable standard is known only after the tests have been developed and administered; it is determined from the mean, or average, score of all students from the same population (Henning, 1987).

Like criterion-referenced tests, norm-referenced tests have their own strengths and weaknesses. Harris (1969) explained that, as a strength, comparisons between the achievements of different populations can easily be made. Also, because estimates of reliability and validity are provided, one can judge how far the results, and an institution relying on them, can be trusted. The standard produced by norm-referenced tests is fairer and less arbitrary than that of criterion-referenced tests, because the acceptable standards of achievement are determined with reference to the achievement of other students. Because they report a range of performance, norm-referenced tests also provide more information about test-takers' abilities than a simple pass-fail result.

Anything that has strengths also has weaknesses. One weakness of norm-referenced tests is that they are usually valid only with the norm group. The norm group is typically a large group of individuals who are similar to the individuals for whom the test is designed.
Norms change as the characteristics of the population change, and therefore such tests must be updated periodically. Norm-referenced tests are also developed independently of any particular course of instruction, which is why it is difficult to match their results with course objectives.

Norm-referenced tests are also known as standardized tests. Gronlund (1985) said that standardized tests are based on a fixed or standard content, which does not vary from one form of the test to another. This content may be based on a theory of language proficiency, as with the Test of English as a Foreign Language, or it may be based on a specification of language users' expected needs, as in the English Language Testing Service test. Standardized tests have standard procedures for administering and scoring, which remain the same from one administration of the test to the next. Moreover, standardized tests have been thoroughly tried out, and through a process of empirical research and development their characteristics are well known. As a result, the type of measurement scale they provide is known, their reliability and validity can be carefully investigated and demonstrated for the intended uses of the test, and score distribution norms have been established with the norm groups. If there are alternate forms of the test, these are equated statistically to ensure that reported scores on each form indicate the same level of ability.

2.4 Characteristics of a Good Test

A test is said to be good when it has three qualities: validity, reliability, and practicality. This means the test must be appropriate in terms of its objectives, dependable in the evidence it provides, and applicable to the particular situation. Just as a cake will not be delicious if one ingredient is left out, a test cannot be called good if one of the three qualities is missing.
In preparing a good test, the teacher must understand these three concepts of test quality and how to apply them (Hughes, 1989).

2.4.1 Validity

The validity of a test concerns whether it measures what the test maker wants to measure. In the context of language programs, Brown (1996) stated that test validity is defined as the degree to which a test measures what it claims, or purports, to be measuring. Gronlund (1981, p.65) said that validity relates to the results of the test: it is the interpretation of the test results that is valid or not, not the test itself. The results indicate the degree to which the purpose of the test has been reached. For example, if the purpose is to measure the ability to write a paragraph, the test should be designed to measure the ability to write a paragraph.

2.4.2 Reliability

The reliability of a test concerns whether the test result is consistent or not. Reliability refers to the consistency of measurement (Gronlund, 1981, p.94). A reliable test result reflects the students' understanding of the material that was taught and tested. The test is said to be consistent, or reliable, if a student's scores are more or less the same across administrations. If the scores differ significantly, the test is unreliable. Differences in score may arise from any factor that influences the test-taker's performance: noise, the test-taker's feelings, the circumstances at the time, or anything else that disturbs the test-taker's concentration.

2.4.3 Practicality

The practicality of a test concerns its usability, that is, the availability of place and time. Another important consideration is the administration process: the less time needed to administer the test, the more practical it is.

2.5 Theory of Reliability

The reliability of a test concerns whether the test result is consistent or not.
This characteristic of reliability is termed consistency (Henning, 1987, p.73). Consistency is reflected in obtaining similar scores when the test, as a measurement tool, is administered repeatedly on different occasions to the same students with the same ability. As Hughes (1989, p.29) put it, the more similar the scores would have been, the more reliable the test is said to be. The test is said to be consistent, or reliable, if a student's scores are more or less the same; if the scores differ significantly, the test is unreliable. A reliable test result reflects the students' understanding of the material that was taught and tested.

Furthermore, in Testing for Language Teachers, Hughes (1989) stated that the ideal reliability coefficient is 1.0: a test with a reliability coefficient of 1.0 is one which would give precisely the same results for a particular set of candidates regardless of when it happened to be administered. Reliability coefficients make it possible to compare the reliability of different tests. Hughes (1989) added that a test with a reliability coefficient of zero would give sets of results quite unconnected with each other, in the sense that the score someone actually got would be no help at all in attempting to predict their score on the next administration.

Moreover, authors differ on how high a reliability coefficient should be expected for different types of language tests. As cited in Hughes (1989, p.32), Lado (1961) made suggestions that reflect the differing difficulty of achieving reliability in testing different abilities.

The reliability coefficient of a test can be estimated by various methods. According to Hughes (1989), three methods are commonly used to estimate it.
The reliability coefficient can then be used, through the standard error of measurement, to establish the criteria a test must meet to produce a consistent score.

2.5.1 Retesting Method (Test-retest Reliability)

This method requires two sets of scores for comparison, so that the correlation between them can be calculated. To obtain them, the same group of subjects takes the same test twice (Hughes, 1989). The test-retest method provides an estimate of the stability of the test scores over time. In line with Hughes, Henning (1987) said that the same test is re-administered to the same people following an interval of no more than two weeks. The purpose of limiting the interval is to prevent changes in the test-takers' true scores, which would affect the reliability coefficient obtained by this method.

If the second administration follows the first too soon, the reliability coefficient will be spuriously high, because the test-takers are likely to remember the test and answer the second time as they did the first. In that case the coefficient reflects their memory rather than a fresh measurement of their abilities. Conversely, if there is a long gap between administrations, further learning or forgetting will take place and change the true abilities measured by the first test, and the coefficient will be lower than it ought to be.

2.5.2 Alternate-forms Method (Parallel-form Reliability)

In this method, two forms of a test are administered to the same group of students, and the scores are correlated to check their consistency. The two forms must be equivalent. To demonstrate equivalence, the forms must show equivalent difficulty, equivalent variance in scores, and equivalent covariance, that is, equivalent correlation coefficients (Henning, 1987, p.82). The reliability coefficient obtained by this method is expected to be the same as the one obtained by the test-retest method.
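Both the test-retest and the alternate-forms estimates come down to correlating two sets of scores obtained from the same students. The following is a minimal Python sketch of that calculation. The function name `pearson` and the scores are illustrative assumptions, not data or code from the sources cited in this chapter.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of equal length (at least 2 students)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares of the deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores of five students on two administrations of the same test
# (test-retest) or on two equivalent forms (alternate forms).
first_scores = [52, 61, 70, 45, 80]
second_scores = [55, 60, 72, 47, 78]

reliability = pearson(first_scores, second_scores)
print(round(reliability, 3))
```

A coefficient close to 1.0 would indicate that the students kept nearly the same rank order on both occasions; it says nothing by itself about whether the interval between administrations was appropriate.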
However, the recall effect that troubles the test-retest method is controlled in the alternate-forms method, because the test-takers do not sit the same test twice and so cannot simply recall the items from the previous administration. Nevertheless, this method is difficult to carry out: it is almost impossible to fulfill the criteria for equivalent tests.

2.5.3 Split-half Method (Split-half Reliability)

In Fundamental Considerations in Language Testing, Bachman (1990, p.172) wrote that in the split-half method the reliability coefficient is calculated by dividing the test into two halves and then determining the extent to which scores on the two halves are consistent with each other. This method needs only one administration of the test. It requires that the two halves measure the same ability and that the items in the two halves have equal means and variances. The two halves should also be independent of each other, which means that an individual's performance on one half does not affect performance on the other half.

The split-half method commonly uses the odd-even approach, which groups all the odd-numbered items together into one half and all the even-numbered items into the other half. This approach suits tests that meet the requirements described in the previous paragraph. An example of a test with independent items that measure the same ability is a multiple-choice test of grammar or vocabulary.
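The odd-even procedure can be sketched in Python as follows. This is an illustration under stated assumptions: the item responses are invented, the function name `split_half_reliability` is hypothetical, the half-test correlation uses the standard raw-score form of the Pearson formula, and the result is stepped up to full-test length with the standard Spearman-Brown formula.

```python
from math import sqrt


def split_half_reliability(item_scores):
    """Return (half-test correlation, Spearman-Brown full-test estimate).

    item_scores holds one list per student, with one score per item
    (for example 1 for a correct answer and 0 for a wrong one).
    """
    # Odd-numbered items (1st, 3rd, ...) sit at indices 0, 2, 4, ...
    x = [sum(student[0::2]) for student in item_scores]
    # Even-numbered items (2nd, 4th, ...) sit at indices 1, 3, 5, ...
    y = [sum(student[1::2]) for student in item_scores]

    n = len(item_scores)
    sum_x, sum_y = sum(x), sum(y)
    sum_x2 = sum(v * v for v in x)
    sum_y2 = sum(v * v for v in y)
    sum_xy = sum(a * b for a, b in zip(x, y))

    # Raw-score form of the Pearson correlation between the two halves.
    numerator = n * sum_xy - sum_x * sum_y
    denominator = sqrt((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2))
    if denominator == 0:
        raise ValueError("half scores must vary for a correlation to be defined")
    r_half = numerator / denominator
    if r_half <= -1:
        raise ValueError("Spearman-Brown is undefined for a correlation of -1")

    # Spearman-Brown: estimate the reliability of the whole test from one half.
    r_full = 2 * r_half / (1 + r_half)
    return r_half, r_full


# Hypothetical responses of five students to a six-item multiple-choice test.
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

r_half, r_full = split_half_reliability(responses)
print(round(r_half, 3), round(r_full, 3))
```

With a positive half-test correlation the stepped-up estimate is always at least as large, because a full-length test samples twice as many items as either half.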
According to Lado (1961), the split-half coefficient of reliability can be estimated with the following formula:

$$r_{xy}^{2} = \frac{\left(N\sum XY - \sum X \sum Y\right)^{2}}{\left[N\sum X^{2} - \left(\sum X\right)^{2}\right]\left[N\sum Y^{2} - \left(\sum Y\right)^{2}\right]}$$

where:
$N$ = the number of students in the sample
$X$ = odd-item scores
$Y$ = even-item scores
$\sum X$ = the sum of X scores
$\sum Y$ = the sum of Y scores
$\sum X^{2}$ = the sum of the squares of X scores
$\sum Y^{2}$ = the sum of the squares of Y scores
$\sum XY$ = the sum of the products of X and Y scores for each student
$r_{xy}^{2}$ = the square of the correlation of the scores on the two halves of the test

This formula gives the squared correlation between the two sets of half-test scores. The correlation is classified according to Walpole (1992) in Pengantar Statistika:

• -0.1 to -0.5 = low negative correlation
• -0.6 to -1.0 = high negative correlation
• 0.0 = no correlation
• 0.1 to 0.5 = low positive correlation
• 0.6 to 1.0 = high positive correlation

The next step is to take the square root of $r_{xy}^{2}$ to obtain $r_{xy}$:

$$r_{xy} = \sqrt{r_{xy}^{2}}$$

where:
$r_{xy}$ = the obtained reliability of half the test
$r_{xy}^{2}$ = the square of the correlation of the scores on the two halves of the test

Then the Spearman-Brown formula is used to estimate the reliability of the entire test:

$$r_{11} = \frac{2\,r_{\frac{1}{2}\frac{1}{2}}}{1 + r_{\frac{1}{2}\frac{1}{2}}}$$

where:
$r_{11}$ = the obtained reliability coefficient of the entire test
$r_{\frac{1}{2}\frac{1}{2}}$ = the obtained reliability of half the test (the value $r_{xy}$ above)

For interpreting the result, Gronlund (1981) implied the following degrees:

• 0.900 to 1.000 = very high correlation
• 0.700 to 0.899 = high correlation
• 0.500 to 0.699 = moderate correlation
• 0.300 to 0.499 = low correlation
• 0.000 to 0.299 = little if any correlation

2.6 TOEFL as a Good Language Testing Product

Generally, the TOEFL test is intended to evaluate only certain aspects of the English language proficiency of persons whose native language is not English (Duran, Canale, Penfield, Stansfield, Liskin-Gasparro, 1985, p.1). The test is used by colleges and universities in the United States of America and Canada to review and qualify the language ability of incoming foreign students whose native language is not English.
2.6.1 Aspects of the Standardized TOEFL

Since 1976, the TOEFL has consisted of three sections, with TOEFL scores ranging from 227 to 627. The three sections are Listening Comprehension, Structure and Written Expression, and Reading Comprehension and Vocabulary (Duran, Canale, Penfield, Stansfield, Liskin-Gasparro, 1985).

Listening comprehension section. This section is designed to measure the test-taker's ability to understand spoken English in an American dialect. It contains three item types: statements, dialogues, and mini-talks or extended conversations.

Structure and written expression section. The structure part is intended to find out the test-taker's control of syntactic features appropriate to various clause and phrase types, negative constructions, comparative forms, word ordering in sentences, and the statement of parallel relationships at the phrase and clause level. Each structure item consists of an incomplete sentence together with the appropriate word and several distractors with which to complete it. The appropriate word must be grammatically correct given the structure and content of the sentence. The written expression part consists of sentences with four underlined words, and the test-takers have to choose which of the underlined words is inappropriate in the context of the sentence. It is designed to find out the learner's command of the syntactic and semantic characteristics of function and content words, appropriate usage of function words, appropriate word ordering, appropriate diction and idiomatic usage, appropriate and complete clause or phrase structure, and maintenance of parallel forms.

Reading comprehension and vocabulary section. This section is designed to measure the test-taker's ability to understand reading material and the meaning and use of words.

2.6.2 Bina Nusantara Curriculum of TOEFL

To create uniform teaching and learning, the SAP/MP are needed as guidance.
They are also needed to standardize the material that will be given to all students at Bina Nusantara University.

2.6.2.1 Module Plan

The syllabus for Bahasa Inggris 3, odd semester 2008/2009:

• Introduction to the course
• Sentences with reduced clauses
• Sentences with reduced clauses (continued)
• Reading (Market Leader)
• Sentences with inverted subjects and verbs
• Sentences with inverted subjects and verbs (continued)
• Reading exercises - TOEFL
• Reading exercises - TOEFL (continued)
• Problems with usage
• Writing paragraph and essay
• Reading exercises - TOEFL
• Reading exercises - TOEFL
• Reading exercises - Ethics (Market Leader)

2.6.2.2 Relationship of Curriculum and Classroom Setting

Harsono (1997, p.21) explained that there is a close correlation between the curriculum and its components (objective, materials, methodology, evaluation, lesson plan, and classroom situation), as shown in the diagram below (cited from Fanny Wibowo, 13-14).

[Figure 1 The Relation of Curriculum and Classroom Setting (diagram labels: Curriculum, Lesson Plan, Classroom Situation)]

The curriculum occupies the highest position, and the classroom situation the lowest. This means that the curriculum is the most important and essential element of the teaching-learning program. The syllabus of the curriculum is applied in planning the teaching-learning program. Following the syllabus, the teacher delivers the material so that the students can achieve the objectives. The way the teaching-learning program is conducted is also important in helping the students achieve the objectives. Evaluation is then administered to measure how far the students have achieved the objectives during the teaching-learning process. These four components of the syllabus (objectives, materials, methodology, and evaluation) are expected to guide the teacher in planning the teaching-learning program. The best place to organize them is in the school, in the lesson plan.
With the plan, the teacher can manage the classroom properly, under the guidance of the curriculum.

2.7 Criteria of a Test to Produce a Constant Score

Because a standardized test produces constant scores, it is trusted and accepted as a standard. The question then arises about non-standardized tests such as the teacher-made test. Can a teacher-made test be trusted, or, going further, can a teacher-made test become a standardized test? The reliability criteria met by standardized tests are important requirements for turning a teacher-made test into a standardized one. According to Hughes (1989, p.36), the ways to make a test more reliable and to produce constant scores are:

2.7.1 Do not allow the test-takers too much freedom

In some kinds of language test, the test-takers are offered a choice of questions and allowed to answer in whatever way they want. Writing tests are an example: they generally provide several topics for the students to choose from and then allow them to develop the topic in their own way. Hughes (1989) proposed that the more freedom that is given, the greater is likely to be the difference between the performance actually elicited and the performance that would have been elicited had the test been taken a day later. Too much freedom harms the reliability of the test, which is why multiple-choice items are more reliable than essay items. It is nevertheless possible to make a reliable test with essay items: restricting the topic as far as possible makes the essay test more reliable (Hughes, 1989).

2.7.2 Provide clear and explicit instructions

Hughes (1989) said that unclear instructions lead the test-takers to misinterpret what they are asked to do. The test-takers will then do the test according to their own understanding of the instructions.
That will make the test inaccurate and unreliable. The test-takers cannot respond to the items as intended, so their true ability cannot be measured accurately.

2.7.3 Use items that permit objective scoring

The first objective item type that comes to mind is the multiple-choice item. However, good multiple-choice items are notoriously difficult to write and always require extensive pre-testing (Hughes, 1989). As an alternative to multiple-choice items, open-ended items can be used, provided that each has a unique, possibly one-word, correct response which the test-takers produce themselves.

2.7.4 Identify the test-takers by number, not name

The results of the tests are expected to reflect the test-takers' abilities, so scoring has to be as fair and as objective as possible. Scoring that is not objective will affect the accuracy and reliability of the tests. Hughes (1989) explained that studies have shown that even where the candidates are unknown to the scorers, the name on a script will make a significant difference to the scores given. For example, the gender and nationality suggested by a name will influence the scorer. Identifying the students by number reduces this effect.