Yining Wang, Yu-Xiang Wang and Aarti Singh

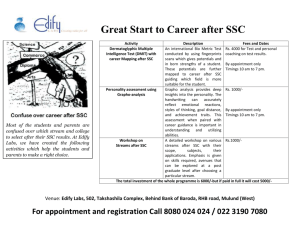


DIFFERENTIALLY PRIVATE SUBSPACE CLUSTERING


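DIFFERENTIAL PRIVACY

Definition. A randomized algorithm $\mathcal{A}$ is $(\varepsilon, \delta)$-differentially private if for all $\mathcal{X}, \mathcal{Y}$ satisfying $d(\mathcal{X}, \mathcal{Y}) = 1$ and all measurable sets $S$, we have

$$\Pr[\mathcal{A}(\mathcal{X}) \in S] \le e^{\varepsilon} \Pr[\mathcal{A}(\mathcal{Y}) \in S] + \delta.$$

• Well-separation conditions for k-means subspace clustering

Definition. A dataset $\mathcal{X}$ is $(\phi, \eta, \psi)$-well separated if

$$\Delta_k^2(\mathcal{X}) \le \min\{\phi^2 \Delta_{k-1}^2(\mathcal{X}),\ \Delta_{k,-}^2(\mathcal{X}) - \psi,\ \Delta_{k,+}^2(\mathcal{X}) + \eta\}.$$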

Objective. Develop differentially private subspace clustering algorithms and characterize the tradeoff between statistical efficiency and guaranteed privacy.

• Important as subspace clustering is an increasingly popular tool for analyzing sensitive medical and social network data.

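• k-means objective: $\min_{\mathcal{C}} \mathrm{cost}(\mathcal{C}; \mathcal{X})$, where

$$\mathrm{cost}(\mathcal{C}; \mathcal{X}) = \frac{1}{n} \sum_{i=1}^{n} \min_{j=1,\dots,k} d^2(x_i, S_j).$$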
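A minimal NumPy sketch of this cost, assuming each $S_j$ is a linear subspace represented by a matrix `U` with orthonormal columns. This representation, the function names and the toy data are illustrative choices, not taken from the poster.

```python
import numpy as np

def subspace_distance_sq(X, U):
    """Squared Euclidean distance from each row of X to span(U).

    Assumes U is a (d, q) matrix with orthonormal columns.
    """
    residual = X - (X @ U) @ U.T  # remove the component lying in the subspace
    return np.sum(residual ** 2, axis=1)

def kmeans_subspace_cost(X, bases):
    """cost(C; X) = (1/n) * sum_i min_j d^2(x_i, S_j)."""
    dists = np.stack([subspace_distance_sq(X, U) for U in bases], axis=1)  # (n, k)
    return float(dists.min(axis=1).mean())

# Toy example in R^3 with k = 2 one-dimensional subspaces (q = 1).
bases = [np.array([[1.0], [0.0], [0.0]]),   # S_1: the x-axis
         np.array([[0.0], [1.0], [0.0]])]   # S_2: the y-axis
X = np.array([[2.0, 0.0, 0.0],   # lies on S_1
              [0.0, 3.0, 0.0],   # lies on S_2
              [1.0, 1.0, 0.0]])  # squared distance 1 to both subspaces
cost = kmeans_subspace_cost(X, bases)  # (0 + 0 + 1) / 3
```

Only the third point contributes to the cost here, because the first two lie exactly on one of the candidate subspaces.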

DP tools. Sample-and-aggregate [1], exponential mechanism [2], SuLQ [3], etc.

• Main result (Theorem 3.4): If $f(\cdot)$ is a constant approximation algorithm of k-means subspace clustering, then sample-and-aggregate is $(\varepsilon, \delta)$-differentially private and the magnitude of per-coordinate Gaussian noise is $O\!\left(\frac{\phi^2 \sqrt{k}}{\varepsilon(\psi - \eta)}\right)$.

• Additional theoretical results for the stochastic setting available in the paper.

EXPERIMENTS

[Figure: K-means cost and Wasserstein distance plotted against $\log_{10}\varepsilon$ for s.a., SSC; s.a., TSC; s.a., LRR; exp., SSC; exp., LRR; SuLQ-10; SuLQ-50.]

CARNEGIE MELLON UNIVERSITY

SUBSPACE CLUSTERING

Find k low-dimensional linear subspaces to approximate a set of unlabeled data points.

THEORETICAL RESULTS

In the well-separation condition, $\Delta_k$ uses $k$ $q$-dimensional subspaces, $\Delta_{k-1}$ uses $(k-1)$ $q$-dimensional subspaces, $\Delta_{k,-}$ uses $(k-1)$ $q$-dimensional subspaces and one $(q-1)$-dimensional subspace, and $\Delta_{k,+}$ uses $(k-1)$ $q$-dimensional subspaces and one $(q+1)$-dimensional subspace.

METHODS

1. Sample-and-aggregate based private subspace clustering

• $f(\cdot)$ can be any approximation algorithm for subspace clustering.


• Requires stability of $f$ (i.e., $f(\mathcal{X}_S) \approx f(\mathcal{X})$) to work.
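The role of stability can be illustrated with a deliberately simplified variant of sample-and-aggregate. The sketch below is not the aggregator of [1] or of the paper: it assumes disjoint blocks, a vector-valued `f`, an L2 clip of radius `clip_norm` on each block output, neighboring datasets that differ by replacing one point, and the classical Gaussian-mechanism calibration (valid for 0 < ε < 1). All names and parameters are hypothetical.

```python
import numpy as np

def sample_and_aggregate(X, f, num_blocks, clip_norm, eps, delta, rng):
    """Clipped mean of per-block outputs of f, plus Gaussian noise.

    Simplified illustration only; assumes num_blocks <= len(X) and 0 < eps < 1.
    """
    perm = rng.permutation(len(X))            # data-independent random partition
    blocks = np.array_split(perm, num_blocks)
    outputs = []
    for idx in blocks:
        out = np.asarray(f(X[idx]), dtype=float).ravel()
        norm = np.linalg.norm(out)
        if norm > clip_norm:                  # project onto the L2 ball of radius clip_norm
            out = out * (clip_norm / norm)
        outputs.append(out)
    mean = np.mean(outputs, axis=0)
    # Replacing one data point changes one block output, so the clipped
    # mean moves by at most 2 * clip_norm / num_blocks in L2 norm.
    sensitivity = 2.0 * clip_norm / num_blocks
    sigma = sensitivity * np.sqrt(2.0 * np.log(1.25 / delta)) / eps
    return mean + rng.normal(0.0, sigma, size=mean.shape), sigma

# Usage with a toy f (the block mean) standing in for a clustering routine.
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
private_output, sigma = sample_and_aggregate(
    X, lambda B: B.mean(axis=0), num_blocks=50, clip_norm=1.0,
    eps=0.5, delta=1e-5, rng=rng)
```

When `f` is stable, the block outputs concentrate around `f(X)`, so the average is informative and the added noise shrinks as the number of blocks grows. When `f` is unstable, the block outputs scatter and the aggregate carries little signal regardless of the noise level.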

2. Exponential mechanism based private subspace clustering

$$p(\{S_\ell\}_{\ell=1}^{k}, \{z_i\}_{i=1}^{n}; \mathcal{X}) \propto \exp\left(-\frac{\varepsilon}{2} \cdot \sum_{i=1}^{n} d^2(x_i, S_{z_i})\right).$$
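For fixed subspaces, the density above factorizes over data points, so each label can be drawn independently with probability proportional to $\exp(-\varepsilon/2 \cdot d^2(x_i, S_\ell))$. A minimal sketch of that label step follows, assuming each subspace is given by a matrix with orthonormal columns. The function name and toy data are hypothetical, and the step by itself is only an illustration of the conditional, not a complete private algorithm.

```python
import numpy as np

def sample_labels(X, bases, eps, rng):
    """Draw z_i with P(z_i = l) proportional to exp(-eps/2 * d^2(x_i, S_l))."""
    # (n, k) matrix of squared distances from each point to each subspace
    d2 = np.stack(
        [np.sum((X - (X @ U) @ U.T) ** 2, axis=1) for U in bases], axis=1
    )
    logits = -0.5 * eps * d2
    logits -= logits.max(axis=1, keepdims=True)   # stabilize the exponentials
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)     # normalize each row
    z = np.array([rng.choice(len(bases), p=p) for p in probs])
    return z, probs

rng = np.random.default_rng(0)
bases = [np.array([[1.0], [0.0]]),   # S_1: the x-axis in R^2
         np.array([[0.0], [1.0]])]   # S_2: the y-axis in R^2
X = np.array([[5.0, 0.0], [0.0, 5.0]])
z, probs = sample_labels(X, bases, eps=10.0, rng=rng)
```

A large ε concentrates each label on the nearest subspace, while ε = 0 makes every label uniform and therefore independent of the data.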

• $(\varepsilon, 0)$-differentially private, if sampled exactly from the proposed distribution.

• A Gibbs sampling implementation (more details in the paper):

$$p(z_i = \ell \mid x_i, S) \propto \exp\left(-\varepsilon/2 \cdot d^2(x_i, S_\ell)\right);$$

$$p(U_\ell \mid \mathcal{X}, z) \propto \exp\left(\varepsilon/2 \cdot \mathrm{tr}(U_\ell^\top X_\ell U_\ell)\right).$$

• Can also be viewed as the Gibbs sampler for an MPPCA model with prior $\mathcal{N}_d(0, I_d/\varepsilon)$.

Experiment panels, from left to right: synthetic dataset, n = 5000, d = 5, k = 3, q = 3, σ = 0.01; n = 1000, d = 10, k = 3, q = 3, σ = 0.1; extended Yale Face Dataset B (a subset), n = 320, d = 50, k = 5, q = 9, σ = 0.01.

REFERENCES

1. K. Nissim, S. Raskhodnikova and A. Smith. Smooth sensitivity and sampling in private data analysis. In STOC, 2007.

2. F. McSherry and K. Talwar. Mechanism design via differential privacy. In FOCS, 2007.

3. A. Blum, C. Dwork, F. McSherry and K. Nissim. Practical privacy: the SuLQ framework. In PODS, 2005.