PNY Solutions for GPU Accelerated HPC

advertisement

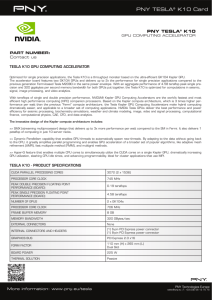

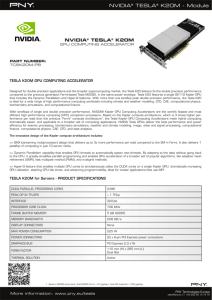

PNY Solutions for GPU Accelerated HPC ABOUT PNY Founded in 1985, PNY Technologies has over 25 years of excellence in the manufacturing of a full spectrum of highquality products for everything in and around the computer. PNY Technologies has a long history of providing designers, engineers and developpers with cutting-edge NVIDIA® Quadro™ and Tesla™ Solutions. PNY understands the needs of its professional clients offering professional technical support and a constant commitment to customer satisfaction. Based on the revolutionary architecture of massively parallel computing NVIDIA® CUDA™, the NVIDIA® Tesla™ solutions by PNY are designed for high performance computing (HPC) and offer a wide range of development tools. In 2012 PNY Technologies enhances its presence and offer in the HPC market becoming the European distributor of TYAN® servers based on NVIDIA® Tesla ™processors. www.pny.eu ABOUT TYAN TYAN designs, manufactures and markets advanced x86 and x86-64 server/workstation board technology, platforms, and server solution products. TYAN enables its customers to be technology leaders by providing scalable, highly-integrated, and reliable products for a wide range of applications such as server appliances and solutions for high-performance computing, GPU Computing and parallel computing. www.tyan.com unit) to do general purpose scientific and engineering comp HYBRID COMPUTING NVIDIA® TESLA® GPUs ARE REVOLUTIONIZING WHAT IS GPU COMPUTING HISTORY OF GPU COMPUTING COMPUTING GPU computing is the use of a GPU (graphics processing Graphics chips started as fixed function graphics pipelines. Over the years, these graphics chips became increasingly programmab The high performance computing (HPC) industry’s need for computation is increasing, as large and complex computational problems become commonplace across many industry segments. Traditional CPU technology, however, is no longer capable of scaling in performance sufficiently to address this demand. The parallel processing capability of the GPU allows it to divide complex computing tasks into thousands of smaller tasks that can be run concurrently. This ability is enabling computational scientists and researchers to address some of the world’s most challenging computational problems up to several orders of magnitude faster. 100x Performance Advantage by 2021 Conventional CPU computing architecture can no longer support the growing HPC needs. Source: Hennesse]y &Patteson, CAAQA, 4th Edition. 10000 20% year Performance vs VAX 1000 52% year 100 10 25% year 1 1978 1980 19821984198619881990 1992 19941996 19982000200220042006 CPU GPU Growth per year 3 2016 WHY GPU COMPUTING? With the ever-increasing demand for more computing performance, the HPC industry is moving toward a hybrid computing model, where GPUs and CPUs work together to perform general purpose computing tasks. As parallel processors, GPUs excel at tackling large amounts of similar data because the problem can be split into hundreds or thousands of pieces and calculated simultaneously. WHAT IS GPU COMPUTING HISTORY OF GPU COMPUTING GPU computing is the use of a GPU (graphics processing unit) to do general purpose scientific and engineering comp Graphics chips started as fixed function graphics pipelines. Over the years, these graphics chips became increasingly programmab HYBRID COMPUTING As sequential processors, CPUs are not designed for this type of computation, but they are adept at more serial based tasks such as running operating systems and organizing data. NVIDIA believes in applying the most relevant processor to the specific task in hand. CORE COMPARISON BETWEEN A CPU AND A GPU The new computing model includes both a multi-core CPU and a GPU with hundreds of cores. Multiple Cores Hundreds of Cores CPU GPU GPU SUPERCOMPUTING — GREEN HPC GPUs significantly increase overall system efficiency as measured by performance per watt. “Top500” supercomputers based on heterogeneous architectures are, on average, almost three times more powerefficient than non-heterogeneous systems. This is also reflected on Green500 list — the icon of Eco-friendly supercomputing. “ The rise of GPU supercomputers on the Green500 signifies that heterogeneous systems, built with both GPUs and CPUs, deliver the highest performance and unprecedented energy efficiency,” said Wu-chun Feng, founder of the Green500 and associate professor of Computer Science at Virginia Tech. 4 PROGRAMMING ENVIRONMENT FOR GPU COMPUTING NVIDIA’S CUDA CUDA architecture has the industry’s most robust language and API support for GPU computing developers, including C, C++, OpenCL, DirectCompute, and Fortran. NVIDIA Parallel Nsight, a fully integrated development environment for Microsoft Visual Studio is also available. Used by more than six million developers worldwide, Visual Studio is one of the world’s most popular development environments for Windows-based applications and services. Adding functionality specifically for GPU computing developers, Parallel Nsight makes the power of the GPU more accessible than ever before. The latest version - CUDA 4.0 has seen a host of new exciting features to make parallel computing easier. Among them the ability to relieve bus traffic by enabling GPU to GPU direct communications. RESEARCH & EDUCATION INTEGRATED DEVELOPMENT ENVIRONMENT LANGUAGES & APIS LIBRARIES CUDA ALL MAJOR PLATFORMS MATHEMATICAL PACKAGES CONSULTANTS, TRAINING, & CERTIFICATION TOOLS & PARTNERS Order personalized CUDA education course at: www.parallel-computing.pro ACCELERATE YOUR CODE EASILY WITH OPENACC DIRECTIVES GET 2X SPEED-UP IN 4 WEEKS OR LESS Accelerate your code with directives and tap into the hundreds of computing cores in GPUs. With directives, you simply insert compiler hints into your code and the compiler will automatically map compute-intensive portions of your code to the GPU. By starting with a free, 30-day trial of PGI directives today, you are working on the technology that is the foundation of the OpenACC directives standard. OpenACC is: • Easy: simply insert hints in your codebase • Open: run the single codebase on either the CPU or GPU • Powerful: tap into the power of GPUs within hours OpenACC DIRECTIVES The OpenACC Application Program Interface describes a collection of compiler directives to specify loops and regions of code in standard C, C++ and Fortran to be offloaded from a host CPU to an attached accelerator, providing portability across operating systems, host CPUs and accelerators. The directives and programming model defined in this document allow programmers to create high-level host+accelerator programs without the need to explicitly initialize the accelerator, manage data or program transfers between the host and accelerator, or initiate accelerator startup and shutdown. www.nvidia.eu/openacc WATCH VIDEO*: PGI ACCELERATOR, TECHNICAL PRESENTATION AT SC11 * Use your phone, smartphone or tablet PC with QR reader software to read the QR code. 4 A WIDE RANGE OF GPU ACCELERATED APPLICATIONS ISV/APPLICATION SUPPORTED FEATURES EXPECTED SPEED UP* ANSYS / ANSYS Mechanical Direct & iterative solver 2x SIMULIA / Abaqus/Standard Direct solver 1.5-2.5x IMPETUS / Afea SPH, blast simulations 10x SPH, 2x MSC Nastran Nastran: Direct solver 1.4 - 2x FluiDyna LBultra Lattice-Boltzmann 20x Vratis SpeedIT- OpenFOAM Solver Linear Equation solvers 3x Solver FluiDyna Culises – OpenFOAM Solver Linear equation Solvers 3x Agilent Technologies EMPro FDTD solver 6x CST Microwave Studio (MWS) Transient solver 9x-20x SPEAG SEMCAD-X FDTD solver 100x HIGH PERFORMANCE COMPUTING ENGINEERING: COMPUTATIONAL FLUID DYNAMICS, STRUCTURAL MECHANICS, EDA BUSINESS APPLICATIONS: COMPUTATIONAL FINANCE, DATA MINING, NUMERICAL ANALYSIS Murex MACS analytics library 60x-400x MathWorks MATLAB Support for over 200 common MATLAB functions 2 - 20x Numerical Algorithms Group Random number generators, Brownian bridges, and PDE solvers 50x SciComp, Inc Monte Carlo and PDE pricing models 30x-50x(MC), 10x-35x(PDE) Wolfram Mathematica Development environment for CUDA 2 - 20x AccelerEyes Jacket for MATLAB Support for several hundred common MATLAB functions 2 - 20x Jedox Palo Extend Excel with OLAP for planning & analysis 20-40x ParStream Database and data analysis Acceleration using GPUs 10x GeoStar RTM Seismic Imaging 4x-20x GeoMage Multifocusing Seismic Imaging 4x-20x PanoramaTech: Marvel Seismic Modeling, Imaging, Inversion 4x-20x Paradigm Echos, SKUA, VoxelGeo Seismic Imaging, Interpretation, Reservoir Modeling 5x-40x Seismic City RTM Seismic Imaging 4x-20x Tsunami A2011 Seismic Imaging 4x-20x OIL AND GAS LIFE SCIENCES: BIO-INFORMATICS, MOLECULAR DYNAMICS, QUANTUM CHEMISTRY, MEDICAL IMAGAMBER PMEMD: Explicit and Implicit Solvent 8x GROMACS Implicit (5x), Explicit(2x) Solvent 2x-5x HOOMD-Blue Written for GPUs (32 CPU cores vs 2 10xx GPUs) 2x LAMMPS Lennard-Jones, Gay-Berne 3.5-15x NAMD Non-Bond Force calculation 2x-7x VMD High quality rendering, large structures (100 million atoms) 125x TeraChem “Full GPU-based solution” 44-650x VASP Hybrid Hartree-Fock DFT functionals including exact exchange 2 GPUs comparable to up to 8 (8-core) CPUs GPU HMMER hmmsearch tool 60x-100x Digisens Computing/reconstruction algorithms, pre and post filtering 100x * Expected Speed Up vs a quad-core x64 CPU based system. Speed-ups as per NVIDIA in house testing or application provider documentation. ** Nastran: 5x with single GPU over single core and 1.5 to 2x with 2 GPUs over 8 core. Marc: 5x with single GPU over single core runs and 2x with 2 GPUs (with DMP=2). (2 quad-core Nehalem CPUs). 5 GPU ACCELERATION IN LIFE SCIENCES TESLA® BIO WORKBENCH The NVIDIA Tesla Bio Workbench enables biophysicists and computational chemists to push the boundaries of life sciences research. It turns a standard PC into a “computational laboratory” capable of running complex bioscience codes, in fields such as drug discovery and DNA sequencing, more than 10-20 times faster through the use of NVIDIA Tesla GPUs. Relative Performance Scale (normalized ns/day) 1,5 50% Faster with GPUs Complex molecular simulations that had been only possible using supercomputing resources can now be run on an individual workstation, optimizing the scientific workflow and accelerating the pace of research. These simulations can also be scaled up to GPU-based clusters of servers to simulate large molecules and systems that would have otherwise required a supercomputer. Applications that are accelerated on GPUs include: • 1,0 0,5 192 Quad-Core CPUs It consists of bioscience applications; a community site for downloading, discussing, and viewing the results of these applications; and GPU-based platforms. 2 Quad-Core CPUs + 4 GPUs JAC NVE Benchmark (left) 192 Quad-Core CPUs simulation run on Kraken Supercomputer (right) Simulation 2 Intel Xeon Quad-Core CPUs and 4 Tesla M2090 GPUs Molecular Dynamics & Quantum Chemistry AMBER, GROMACS, HOOMD, LAMMPS, NAMD, TeraChem (Quantum Chemistry), VMD • Bio Informatics CUDA-BLASTP, CUDA-EC, CUDA MEME, CUDASW++ (Smith Waterman), GPU-HMMER, MUMmerGPU For more information, visit: www.nvidia.co.uk/bio_workbench 6 AMBER AMBER: NODE PROCESSING COMPARISON 4 Tesla M2090 GPUs + 2 CPUs 192 Quad-Core CPUs 69 ns/day 46 ns/day HIGH PERFORMANCE COMPUTING Researchers today are solving the world’s most challenging and important problems. From cancer research to drugs for AIDS, computational research is bottlenecked by simulation cycles per day. More simulations mean faster time to discovery. To tackle these difficult challenges, researchers frequently rely on national supercomputers for computer simulations of their models. GPUs offer every researcher supercomputer-like performance in their own office. Benchmarks have shown four Tesla M2090 GPUs significantly outperforming the existing world record on CPU-only supercomputers. RECOMMENDED HARDWARE CONFIGURATION Workstation Server • • • • • • • 8x Tesla M2090 • Dual-socket Quad-core CPU • 128 GB System Memory 4xTesla C2075 Dual-socket Quad-core CPU 24 GB System Memory Server Up to 8x Tesla M2090s in cluster Dual-socket Quad-core CPU per node 128 GB System Memory 180 3T+C2075 3T 160 6T+2xC2075 140 114.9 6T 12T+4xC2075 120 0 74.4 85.7 cubic dodec 26.9 21.9 34.4 36.7 12.1 15.6 8.7 cubic + pcoupl 47.5 49.7 70.4 44.8 28.5 28.4 29.3 16.0 20 8.9 40 45.6 60 27.7 80 83.9 12T 100 72.4 ns/day GROMACS is a molecular dynamics package designed primarily for simulation of biochemical molecules like proteins, lipids, and nucleic acids that have a lot complicated bonded interactions. The CUDA port of GROMACS enabling GPU acceleration supports ParticleMesh-Ewald (PME), arbitrary forms of non-bonded interactions, and implicit solvent Generalized Born methods. 165.8 GROMACS dodec + vsites Figure 4: Absolute performance of GROMACS running CUDA- and SSE-accelerated non-bonded kernels with PME on 3-12 CPU cores and 1-4 GPUs. Simulations with cubic and truncated dodecahedron cells, pressure coupling, as well as virtual interaction sites enabling 5 fs are shown. 7 MSC NASTRAN, MARC 5X PERFORMANCE BOOST WITH SINGLE GPU OVER SINGLE CORE, >1.5X WITH 2 GPUs OVER 8 CORE SOL101, 3.4M DOF 7 1 Core 1 GPU + 1 Core 4 Core (SMP) 8 Core (DMP=2) 2 GPU + 2 Core (DMP=2) 6 1.8x 5 1.6x 4 5.6x 4.6x 3 2 1 0 3.4M DOF; fs0; Total 3.4M DOF; fs0; Solver Speed Up * fs0= NVIDIA PSG cluster node 2.2 TB SATA 5-way striped RAID • Nastran direct equation solver is GPU accelerated – Real, symmetric sparse direct factorization – Handles very large fronts with minimal use of pinned host memory – Impacts SOL101, SOL400 that are dominated by MSCLDL factorization times – More of Nastran (SOL108, SOL111) will be moved to GPU in stages Linux, 96GB memory, Tesla C2050, Nehalem 2.27ghz • Support of multi-GPU and for both Linux and Windows – With DMP> 1, multiple fronts are factorized concurrently on multiple GPUs; 1 GPU per matrix domain – NVIDIA GPUs include Tesla 20-series and Quadro 6000 – CUDA 4.0 and above SIMULIA Abaqus / STANDARD REDUCE ENGINEERING SIMULATION TIMES IN HALF With GPUs, engineers can run Abaqus simulations twice as fast. A leading car manufacturer, NVIDIA customer, reduced the simulation time of an engine model from 90 minutes to 44 minutes with GPUs. Faster simulations enable designers to simulate more scenarios to achieve, for example, a more fuel efficient engine. Abaqus 6.12 Multi GPU Execution 24 core 2 host, 48 GB memory per host 1,9 1,8 Speed up vs. CPU only As products get more complex, the task of innovating with more confidence has been ever increasingly difficult for product engineers. Engineers rely on Abaqus to understand behavior of complex assembly or of new materials. 1,7 2 GPUs/Host 1,6 1,5 1,4 1,3 1,2 1,1 1 8 1 GPU/Host 1,5 1,5 3,0 3,4 Number of Equations (millions) 3,8 GPU ACCELERATED ENGINEERING ANSYS: SUPERCOMPUTING FROM YOUR WORKSTATION WITH NVIDIA TESLA GPU HIGH PERFORMANCE COMPUTING “ A new feature in ANSYS Mechanical leverages graphics processing units to significantly lower solution times for large analysis problem sizes.” By Jeff Beisheim, Senior Software Developer, ANSYS, Inc. With ANSYS® Mechanical™ 13.0 and NVIDIA® Professional GPUs, you can: • Improve product quality with 2x more design simulations • Accelerate time-to-market by reducing engineering cycles • Develop high fidelity models with practical solution times How much more could you accomplish if simulation times could be reduced from one day to just a few hours? As an engineer, you depend on ANSYS Mechanical to design high quality products efficiently. To get the most out of ANSYS Mechanical 13.0, simply upgrade your Quadro GPU or add a Tesla GPU to your workstation, or configure a server with Tesla GPUs, and instantly unlock the highest levels of ANSYS simulation performance. FUTURE DIRECTIONS As GPU computing trends evolve, ANSYS will continue to enhance its offerings as necessary for a variety of simulation products. Certainly, performance improvements will continue as GPUs become computationally more powerful and extend their functionality to other areas of ANSYS software. 20X FASTER SIMULATIONS WITH GPUs DESIGN SUPERIOR PRODUCTS WITH CST MICROWAVE STUDIO RECOMMENDED TESLA CONFIGURATIONS Workstation Server •4x Tesla C2075 •Dual-socket Quad-core CPU •48 GB System Memory •4x Tesla M2090 •Dual-socket Quad-core CPU •48 GB System Memory 23x Faster with Tesla GPUs 25 Relative Performance vs CPU What can product engineers achieve if a single simulation run-time reduced from 48 hours to 3 hours? CST Microwave Studio is one of the most widely used electromagnetic simulation software and some of the largest customers in the world today are leveraging GPUs to introduce their products to market faster and with more confidence in the fidelity of the product design. 20 15 9X Faster with Tesla GPU 10 5 0 2x CPU 2x CPU + 1x C2075 2x CPU + 4x C2075 Benchmark: BGA models, 2M to 128M mesh models. CST MWS, transient solver. CPU: 2x Intel Xeon X5620. Single GPU run only on 50M mesh model. 9 NAMD NAMD 2.8 BENCHMARK Scientists and researchers equipped with powerful GPU accelerators have reached new discoveries which were impossible to find before. See how other computational researchers are experiencing supercomputer-like performance in a small cluster, and take your research to new heights. Ns/Day Gigaflops/sec (double precision) The Team at University of Illinois at Urbana-Champaign (UIUC) has been enabling CUDA-acceleration on NAMD since 2007, and the results are simply stunning. NAMD users are experiencing tremendous speed-ups in their research using Tesla GPU. Benchmark (see below) shows that 4 GPU server nodes out-perform 16 CPU server nodes. It also shows GPUs scale-out better than CPUs with more nodes. 3.5 3 GPU+CPU 2.5 2 1.5 1 CPU only 0.5 0 2 4 8 12 16 # of Compute Nodes NAMD 2.8 B1, STMV Benchmark A Node is Dual-Socket, Quad-core x5650 with 2 Tesla M2070 GPUs NAMD courtesy of Theoretical and Computational Bio-physics Group, UIUC LAMMPS LAMMPS is a classical molecular dynamics package written to run well on parallel machines and is maintained and distributed by Sandia National Laboratories in the USA. It is a free, open-source code. LAMMPS has potentials for soft materials (biomolecules, polymers) and solid-state materials (metals, semiconductors) and coarse-grained or mesoscopic systems. The CUDA version of LAMMPS is accelerated by moving the force calculations to the GPU. 250x RECOMMENDED HARDWARE CONFIGURATION 200x Workstation Speedup vs Dual Socket CPUs 300x • 4xTesla C2075 • Dual-socket Quad-core CPU • 24 GB System Memory 150x 100x 50x x Server 3 6 12 24 # of GPUs 48 Benchmark system details: Each node has 2 CPUs and 3 GPUs. CPU is Intel Six-Core Xeon Speedup measured with Tesla M2070 GPU vs without GPU Source: http://users.nccs.gov/~wb8/gpu/kid_single.htm 10 96 • 2x-4x Tesla M2090 per node • Dual-socket Quad-core CPU per node • 128 GB System Memory per node The latest release of Parallel Computing Toolbox and MATLAB Distributed Computing Server takes advantage of the CUDA parallel computing architecture to provide users the ability to • Manipulate data on NVIDIA GPUs • Perform GPU accelerated MATLAB operations • Integrate users‘ own CUDA kernels into MATLAB applications • Compute across multiple NVIDIA GPUs by running multiple MATLAB workers with Parallel Computing Toolbox on the desktop and MATLAB Distributed Computing Server on a compute cluster NVIDIA and MathWorks have collaborated to deliver the power of GPU computing for MATLAB users. Available through the latest release of MATLAB 2010b, NVIDIA GPU acceleration enables faster results for users of the Parallel Computing Toolbox and MATLAB Distributed Computing Server. MATLAB supports NVIDIA® CUDAtm – enabled GPUs with compute capability version 1.3 or higher, such as Tesla™ 10-series and 20-series GPUs. MATLAB CUDA support provides the base for GPUaccelerated MATLAB operations and lets you integrate your existing CUDA kernels into MATLAB applications. For more information, visit: www.nvidia.co.uk/matlab SPEED-UP OF COMPUTATIONS TESLA BENEFITS RELATIVE PERFORMANCE OF GPU COMPARED TO 5 • • • • Double Precision Single Precision 4 Speed-up Highest Computational Performance High-speed double precision operations Large dedicated memory High-speed bi-directional PCIe communication NVIDIA GPUDirect™ with InfiniBand Most Reliable 3 • ECC memory • Rigorous stress testing 2 Best Supported 1 0 0 500 1000 1500 2000 2500 3000 3500 4000 4500 Matrix Size • • • • • • Professional support network OEM system integration Long-term product lifecycle 3 year warranty Cluster & system management tools (server products) Windows remote desktop support RECOMMENDED TESLA AND QUADRO CONFIGURATIONS High-End Workstation Mid-Range Workstation Entry Workstation • Two Tesla C2075 • Quadro 4000 • Two quad-core CPUs • 12 GB system memory • Tesla C2075 • Quadro 6000 • Quad-core CPU • 8 GB system memory • Quadro 4000 GPU • Single quad-core CPU • 4 GB system memory 11 HIGH PERFORMANCE COMPUTING MATLAB ACCELERATION ON TESLA® GPUs LBULTRA PLUG-IN FOR RTT DELTAGEN LBULTRA DEVELOPED BY FLUIDYNA FAST FLOW SIMULATIONS DIRECTLY WITHIN RTT DELTAGEN IMPROVED OPTIMUM PERFORMANCE THROUGH EARLY INVOLVEMENT OF AERODYNAMICS INTO DESIGN The flow simulation software LBultra works particularly fast on graphics processing units (GPUs). As a plug-in prototype, it is tightly integrated into the high-end 3D visualization software RTT DeltaGen by RTT. As a consequence, flow simulation computing can be proceeded directly within RTT DeltaGen. First of all, a certain scenario is selected, such as analyzing a spoiler or an outside mirror. Next, various simulation parameters and boundary conditions such as flow rates or resolution levels are set, which also influence the calculation time and result‘s accuracy. Due to this coupling system, the designer can do a direct flow simulation of their latest design draft – enabling the designer to do a parallel check of aerodynamic features of the vehicle’s design draft. After determining the overall simulation, the geometry of the design is handed over to LBultra and the simulation is started. While the simulation is running, data is being visualized in realtime in RTT DeltaGen. Relative Performance vs CPU In addition, there is an opportunity of exporting simulation results. These results may then, for instance, be further analyzed in more detail by experts using highly-capable fluid mechanics specialist programs and tools. 75x Faster with 3 Tesla GPUs 3x Tesla C2075 12 20X Faster with 1 Tesla GPU Tesla C2075 Inrel Quad-Core Xeon HIGH PERFORMANCE COMPUTING GPU COMPUTING IN FINANCE – CASE STUDIES BLOOMBERG: GPUs INCREASE ACCURACY AND REDUCE PROCESSING TIME FOR BOND PRICING Bloomberg implemented an NVIDIA Tesla GPU computing solution in their datacenter. By porting their application to run on the NVIDIA CUDA parallel processing architecture Bloomberg received dramatic improvements across the board. As Bloomberg customers make crucial buying and selling decisions, they now have access to the best and most current pricing information, giving them a serious competitive trading advantage in a market where timing is everything. 48 GPUs 42x Lower Space 2000 CPUs $144K 28x Lower Cost $4 Million 38x Lower Power Cost $1.2 Million / year $31K / year NVIDIA TESLA GPUs USED BY J.P. MORGAN TO RUN RISK CALCULATIONS IN MINUTES, NOT HOURS THE CHALLENGE Risk management is a huge and increasingly costly focus for the financial services industry. A cornerstone of J.P. Morgan’s cost-reduction plan to cut the cost of risk calculation involves accelerating its risk library. It is imperative to reduce the total cost of ownership of J.P. Morgan’s risk-management platform and create a leap forward in the speed with which client requests can be serviced. THE SOLUTION J.P. Morgan’s Equity Derivatives Group added NVIDIA® Tesla M2070 GPUs to its datacenters. More than half the equity derivative-focused risk computations run by the bank have been moved to running on hybrid GPU/CPU-based systems, NVIDIA Tesla GPUs were deployed in multiple data centers across the bank’s global offices. J.P. Morgan was able to seamlessly share the GPUs between tens of global applications. THE IMPACT Utilizing GPUs has accelerated application performance by 40X and delivered over 80 percent savings, enabling greener data centers that deliver higher performance for the same power. For J.P. Morgan, this is game-changing technology, enabling the bank to calculate risk across a range of products in a matter of minutes rather than overnight. Tesla GPUs give J.P. Morgan a significant market advantage. 13 Your First Choice in GPU Solutions NVIDIA Tesla M2090 / M2075 Validated Platforms Model Number GPGPU Performance Tesla M2090 Tesla M2075 Highest Performance Mid-Range Performance Seismic processing, CFD, CAE, Financial computing, Computational chemistry and Physics, Data analytics, Satellite imaging, Weather modeling GPU Computing Applications Peak double precision floating point performance 665 Gigaflops 515 Gigaflops Peak single precision floating point performance 1331 Gigaflops 1030 Gigaflops Memory bandwidth (ECC off) 177 GBytes/sec 150 GBytes/sec 6 GigaBytes 6 GigaBytes 512 448 Memory size (GDDR5) CUDA cores FT48-B7055 GN70-B7056 B7056G70V8HR (BTO) B7055F48W8HR (BTO) x1 to x4 x 1 or x 2 GOLD GOLD Supported CPU (2) Intel® Xeon® E5-2600 Series (Sandy Bridge-EP) 16/ 8+8 Number of DIMM Slot Memory Type (max. capacity) R-DDR3 1600/ 1333/ 1066/ 800 w/ ECC (512GB) U-DDR3 1333/ 1066 w/ ECC (128GB) Storage Controller Intel® C602 PCH (SATA 6Gb/s & 3Gb/s) Networking PCI Expansion Slots Standard Model B7056G70V8HR (BTO) (3) GbE (shared IPMI NIC), or (2) 10GbE + (1) GbE (shared IPMI NIC) Storage Backplane 8-port SAS/ SATA Please contact us for all BTO Part Numbers Number of DIMM Slot Memory Type (max. capacity) Storage Controller Networking (2) PCI-E (Gen.3) x16 + (2) PCI-E (Gen.3) x8 Number of HDD Bay (8) hot-swap 3.5" Intel® C602 PCH Intel® QPI 8.0/ 7.2/ 6.4GT/s 8/ 4+4 R-DDR3 1600/ 1333/ 1066/ 800 w/ ECC (256GB) U-DDR3 1600/ 1333/ 1066 w/ ECC (64GB) Intel® C602 PCH (SATA 6Gb/s & 3Gb/s) 0, 1, 5, 10 (Intel® RSTe 3.0) RAID Support 0, 1, 5, 10 (Intel® RSTe 3.0) RAID Support (2) Intel® Xeon® E5-2600 Series (Sandy Bridge-EP) Chipset Interconnection Intel® QPI 8.0/ 7.2/ 6.4GT/s QuickPath Interconnect Supported CPU Chipset Intel® C602 PCH Chipset 4U (27.5" in depth) Enclosure Form Factor 2U (27.56" in depth) Enclosure Form Factor FT48-B7055 Model Number GN70-B7056 Model Number Power Supply (1+1) 770W RPSU PCI Expansion Slots Standard Model B7055F48W8HR (BTO) (3) GbE (shared IPMI NIC), or (2) 10GbE + (1) GbE (shared IPMI NIC) (4) PCI-E (Gen.3) x16 + (2) PCI-E (Gen.3) x8 + (1) PCI 32/33MHz Number of HDD Bay (8) hot-swap 3.5" Storage Backplane (2) 4-port SAS/ SATA 6Gb/s Please contact us for all BTO Part Numbers Power Supply (2+1) 1,540W RPSU FT72-B7015 FT77-B7015 B7015F72V2R(BTO) B7015F77V4R (BTO) x 1 to x 8 x1 to x 8 SILVER SILVER FT72-B7015 Model Number Supported CPU Number of DIMM Slot Memory Type (max. capacity) Storage Controller Networking PCI Expansion Slots Memory Type (max. capacity) Storage Controller (2) fixed 2.5" SATA-II Storage Backplane N/A N/A Multimedia Drive (4) GbE (8) PCI-E (Gen.2) x16; (2) PCI-E (Gen.2) x16( w/ x4 link) (1) PCI-E (Gen.2) x4; (1) PCI 32/33MHz B7015F72V2R(BTO) 0, 1, 10, 5 (Intel® Matrix RAID) RAID Support N/A Number of HDD Bay 18/ (9+9) R-DDR3 1333/ 1066/ 800 w/ ECC (144GB) U-DDR3 1333/ 1066 w/ ECC (48GB) Intel® ICH10R 6-port SATA-II Number of DIMM Slot 0, 1, 10, 5 (Intel® Matrix RAID) Standard Model Intel® QPI 6.4/ 5.86/ 4.8 GT/s Chipset Interconnection 18/ (9+9) R-DDR3 1333/ 1066/ 800 w/ ECC (144GB) U-DDR3 1333/ 1066 w/ ECC (48GB) Intel® ICH10R 6-port SATA-II Multimedia Drive Intel® (2) 5520 + ICH10R Chipset Intel® QPI 6.4/ 5.86/ 4.8 GT/s RAID Support (2) Intel® Xeon® 5500/5600 Series (Nehalem/ Westmere) Supported CPU Intel® (2) 5520 + ICH10R Chipset Interconnection 4U (28" in depth) Enclosure Form Factor (2) Intel® Xeon® 5500/5600 Series (Nehalem/ Westmere) Chipset FT77-B7015 Model Number 4U (28" in depth) Enclosure Form Factor (4) GbE (8) PCI-E (Gen.2) x16; (2) PCI-E (Gen.2) x16( w/ x4 link) (1) PCI-E (Gen.2) x4; (1) PCI 32/33MHz Networking PCI Expansion Slots Power Supply (2+1) 2,400W RPSU Please contact us for all BTO Part Numbers Standard Model Number of HDD Bay B7015F77V4R (BTO) (4) fixed 2.5" SATA-II Storage Backplane N/A Power Supply (2+1) 2,400W RPSU Please contact us for all BTO Part Numbers GN70-B8236-IL B8236G70W8HR-HE-IL (BTO) x 1 or x 2 GOLD Model Number GN70-B8236-HE-IL Enclosure Form Factor 2U (27.56" in depth) Supported CPU (2) AMD Opteron™ 6200 Series (Interlagos) AMD SR5690 + SR5650 + SP5100 Chipset HyperTransport™ Link 3.0 QuickPath Interconnect 16/ 8+8 Number of DIMM Slot Memory Type (max. capacity) Storage Controller RAID Support R-DDR3 1600/ 1333/ 1066/ 800 w/ ECC (256GB) U-DDR3 1333/1066 w/ ECC (128GB) LSI SAS2008 (SAS 6Gb/s) 0, 1, 1E, 10 (LSI RAID stack) (3) GbE (shared IPMI NIC) Networking PCI Expansion Slots Standard Model B8236G70W8HR-HE-IL (BTO) (2) PCI-E (Gen.2) x16 + (2) PCI-E (Gen.2) x8 Number of HDD Bay (8) hot-swap 3.5" Storage Backplane 8-port SAS/ SATA 6Gb/s Please contact us for all BTO Part Numbers Power Supply (1+1) 770W RPSU 15 PNY & TYAN IS COMMITTED TO SUPPORT TESLA® KEPLER GPU ACCELERATORS1 Tesla K10 GPU Computing Accelerator – Optimized for single precision applications, the Tesla K10 is a throughput monster based on the ultra-efficient GK104 Kepler GPU. The accelerator board features two GK104 GPUs and delivers up to 2x the performance for single precision applications compared to the previous generation Fermi-based Tesla M2090 in the same power envelope. With an aggregate performance of 4.58 teraflop peak single precision and 320 gigabytes per second memory bandwidth for both GPUs put together, the Tesla K10 is optimized for computations in seismic, signal, image processing, and video analytics. Tesla K20 GPU Computing Accelerator – Designed for double precision applications and the broader supercomputing market, the Tesla K20 delivers 3x the double precision performance compared to the previous generation Fermibased Tesla M2090, in the same power envelope. Tesla K20 features a single GK110 Kepler GPU that includes the Dynamic Parallelism and Hyper-Q features. With more than one teraflop peak double precision performance, the Tesla K20 is ideal for a wide range of high performance computing workloads including climate and weather modeling, CFD, CAE, computational physics, biochemistry simulations, and computational finance. TECHNICAL SPECIFICATIONS TESLA K102 TESLA K20 Peak double precision floating point performance (board) 0.19 teraflops To be announced Peak single precision floating point performance (board) 4.58 teraflops To be announced Number of GPUs 2 x GK104s 1 x GK110 CUDA cores 2 x 1536 To be announced Memory size per board (GDDR5) 8 GB To be announced Memory bandwidth for board (ECC off)3 320 GBytes/sec To be announced GPU Computing Applications Seismic, Image, Signal Processing, Video analytics CFD, CAE, Financial computing, Computational chemistry and Physics, Data analytics, Satellite imaging, Weather modeling Architecture Features SMX SMX, Dynamic Parallelism, Hyper-Q System Servers only Servers and Workstations. Available May 2012 Q4 2012 1 products and availability is subject to confirmation 2 Tesla K10 specifications are shown as aggregate of two GPUs. 3 With ECC on, 12.5% of the GPU memory is used for ECC bits. So, for example, 6 GB total memory yields 5.25 GB of user available memory with ECC on. 16 Offering pre-and post sales assistance, three year standard warranty, professional technical support, and an unwavering commitment to customer satisfaction, our partners and customers experience firsthand why PNY is considered a market leader in the professional industry. PNY Professional Solutions are offered in cooperation with qualified distributors, specialty retailers, computer retailers, and system integrators. To find a qualified PNY Technologies partner visit : www.pny.eu/wheretobuy.php Germany, Austria, Switzerland, Russia, Eastern Europe Email: vertrieb@pny.eu Hotline Presales: +49 (0)2405/40848-55 United Kingdom, Denmark, Sweden, Finland, Norway Email: quadrouk@pny.eu Hotline Presales: +49 (0)2405/40848-55 France, Belgium, Netherland, Luxembourg Email: sales@pny.eu Hotline Presales: +33 (0)5 56 13 75 75 WARRANTY PNY Technologies offers a 3 year manufacturer warranty on all Tesla based systems in accordance to PNY Technologies’ Terms of Guarantee. SUPPORT PNY offers individual support as well as comprehensive online support. Our support websites provide FAQs, the latest information and technical data sheets to ensure you receive the best performance from your PNY product. Support Contact Information Business hours: 9:00 a.m. - 5:00 p.m. Germany, Austria, Switzerland, Russia, Eastern Europe Email: tech-sup-ger@pny.de Hotline Support: +49 (0)2405/40848-40 United Kingdom, Denmark, Sweden, Finland, Norway Email: tech-sup@pny.eu Hotline Support: +49 (0)2405/40848-40 France, Belgium, Netherland, Luxembourg Email: tech-sup@pny.eu Hotline Support: +33 (0)55613-7532 PNY Technologies Quadro GmbH Schumanstraße 18a 52146 Würselen Germany Tel: +49 (0)2405/40848-0 Fax: +49 (0)2405/40848-99 kontakt@pny.eu PNY Technologies United Kingdom Basepoint Business & Innovation Centre 110 Butterfield Great Marlings Luton, Bedfordshire Lu2 8DL United Kingdom Tel : +44 (0)870 423 1103 Fax: +44 (0)870 423 1104 quadrouk@pny.eu CONTACT PNY Technologies Europe Zac du Phare 9 rue Joseph Cugnot - BP181 33708 Mérignac Cedex France Tel: +33 (0)5 56 13 75 75 Fax: +33 (0)5 56 13 75 76 sales@pny.eu 17 HIGH PERFORMANCE COMPUTING WHERE TO BUY www.pny.eu