Ranking Very Many Typed Entities on Wikipedia

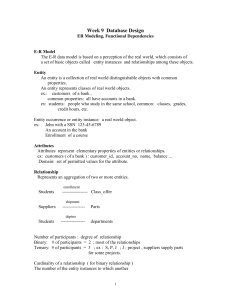

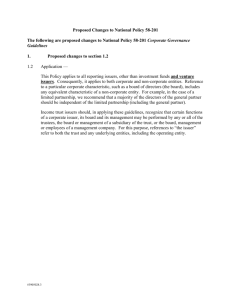

Hugo Zaragoza, Yahoo! Research Barcelona, Spain, hugoz@yahoo-inc.com
Henning Rode, University of Twente, The Netherlands, h.rode@cs.utwente.nl
Peter Mika, Yahoo! Research Barcelona, Spain, pmika@yahoo-inc.com
Jordi Atserias, Yahoo! Research Barcelona, Spain, jordi@yahoo-inc.com
Massimiliano Ciaramita, Yahoo! Research Barcelona, Spain, massi@yahoo-inc.com
Giuseppe Attardi∗, Università di Pisa, Italy, attardi@di.unipi.it

∗ On sabbatical at Yahoo! Research Barcelona.

ABSTRACT

We discuss the problem of ranking very many entities of different types. In particular, we deal with a heterogeneous set of types, some very generic and some very specific. We discuss two approaches to this problem: i) exploiting the entity containment graph and ii) using a Web search engine to compute entity relevance. We evaluate these approaches on the real task of ranking Wikipedia entities typed with a state-of-the-art named-entity tagger. Results show that both approaches can greatly increase the performance of methods based only on passage retrieval.

1. MOTIVATION

We are interested in the problem of ranking entities of different types as a response to an open (ad-hoc) query. In particular, we are interested in collections with many entities and many types. This is the typical case when we deal with collections that have been analyzed using NLP techniques such as named entity recognition or semantic tagging. Let us give an example of the task we are interested in. Imagine that a user types an informational query such as “Life of Pablo Picasso” or “Egyptian Pyramids” into a search engine. Besides relevant documents, we wish to rank relevant entities such as people, countries, dates, etc., so that they can be presented to the user for browsing. We believe this task is novel and interesting in its own right.

In some sense the task is similar to the expert finding task in TREC [2]. However, this task leads to very different models, for two reasons. First, we must deal with a heterogeneous set of entities; some of them are very general (like “school” or “mother”), whereas others are very specific (“Pablo Picasso”). Second, the entities are simply too many to build entity-specific models, as is done for experts in TREC [2]. Instead, we must develop models on the fly, at query time. In this sense the models developed are closer to those described in [1]. However, here we wish to rank the entities themselves, not sentences. In this paper we explore two types of algorithms for entity ranking: i) algorithms that use the entity containment graph to compute the importance of entities based on the top-ranked passages, and ii) algorithms that use correlations computed over Web search results.

2. PROBLEM SETTING

To study this task we followed these steps:
• we used a statistical entity recognition algorithm to identify many entities (and their corresponding types) in a copy of the English Wikipedia,
• we asked users to issue queries to our baseline entity ranking systems and to evaluate the results,
• we compared the performance of several algorithms on these queries.
In order to extract entities from Wikipedia, we first trained a statistical entity extractor on the BBN Pronoun Coreference and Entity Type Corpus, which includes annotation of named entity types (Person, Facility, Organization, GPE, Location, Nationality, Product, Event, Work of Art, Law, Language, and Contact-Info), nominal entity types (Person, Facility, Organization, GPE, Product, Plant, Animal, Substance, Disease and Game), and numeric types (Date, Time, Percent, Money, Quantity, Ordinal and Cardinal). We note that some types are dedicated to identifying common nouns that refer to or describe named entities; for example, father and artist could be tagged with the Person-Description type. We applied this entity extractor to an XMLised Wikipedia collection constructed by the 2006 INEX XML retrieval evaluation initiative [3] (625,405 Wikipedia entries). This identified 28 million occurrences of 5.5 million unique entities. A special retrieval index was then created containing both the text and the identified entities. The overall processing time was approximately one week on a single PC. This tagged collection has been made available [7]; more detailed information about its construction and content can be found at this reference.

Table 1: Example queries and entity judgments (see text for discussion).

| Query | Most Important Entities | Important Entities | Related Entities |
|---|---|---|---|
| “Yahoo! Search Engine” | Yahoo, Google, MSN, Inktomi, Yahoo.com. | Web, crawler, 2004, AltaVista, 2002, Amazon.com, Jeeves, TrustRank, WebCrawler, Search Engine Placement, more than 20 billion Web, eBay, Worl WIde Web, BT OpenWorld, between 1997 and 1999, Stanford University and Yahoo, AOL, Kelkoo, Konfabulator, AlltheWeb, Excite. | users, Firefox, Teoma, LookSmart, Widget, companies, company, Dogpile, user, Searchen Networks, MetaCrawler, Fitzmas, Hotbot, ... |
| “Budapest” | Budapest, Hungary, Hungarian, city, Greater Budapest, capital, Danube, Budapesti Közgazdaságtudományi és Államigazgatási Egyetem, M3 Line, Pest county. | University of Budapest, Austria, town, Budapest Metro, Soviet, 1956, Ferenc Joachim, Karl Marx University of Economic Sciences, Budapest University of Economic Sciences, Eötvös Loránd University of Budapest, Technical University of Budapest, 1895, February 13, Budapest Stock Exchange, Kispest, ... | Paris, Vienna, German, Prague, London, Munich, Collegium Budapest, government, Jewish, Nazi, 1950, Debrecen, 1977, M3, center, Tokyo, World War II, New York, Zagreb, Leipzig, population, residences, state, cementery, Serbian, Novi Sad, 1949, Szeged, Turin, Graz, 6-3, Medgyessy, ... |
| “Tutankhamun curse” | Tutankhamun, Carnarvon, mummies, Boy Pharaoh, The Curse, archaeologist, Howard Carter, 1922. | Pharaohs, King Tutankhamun. | Valley, KV62, Curse of Tutankhamun, Curse, King, Mummy’s Curse, ... |

The evaluation framework for the task was set up as follows. First, the user chose a query on a topic that the user knew well and that was covered in Wikipedia. Then the system ran this query against a standard passage retrieval algorithm, which retrieved the 500 most relevant passages, and collected all the entities that appeared in them. This is the candidate entity set, which needs to be ranked by the different algorithms. Finally, the entities were ranked using our baseline entity ranking algorithm (discussed later) and given to the user to evaluate. The possible judge assessments were: Most Important, Important, Related, Unrelated, or Don’t know. The user was asked to judge all entries if possible, and at least the first fifty. Besides the judgment labels, users were not given any specific training, nor were they given example queries or judgments. Ten judges were recruited and each judged from 3 to 10 queries, coming to a total of 50 judged queries.
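The candidate-set step of this protocol can be sketched as below, as a minimal illustration rather than the system actually used. It assumes that each retrieved passage carries an ID, a retrieval score and the (value, type) entities tagged in it; the names `Passage` and `candidate_entities` are hypothetical, and the passage retrieval engine itself is not modelled. The function simply keeps the highest-scored passages (500 in our setting) and collects every entity occurring in them.

```python
from typing import NamedTuple

# An entity is a (string value v, type t) pair, e.g. ("Einstein", "Person").
Entity = tuple[str, str]


class Passage(NamedTuple):
    """Hypothetical record for one retrieved passage (pID, s) plus its tagged entities."""
    pid: str
    score: float
    entities: list[Entity]


def candidate_entities(ranked: list[Passage], k: int = 500) -> tuple[list[Passage], set[Entity]]:
    """Keep the k highest-scored passages (P_q) and collect every entity in them (C_q)."""
    p_q = sorted(ranked, key=lambda p: p.score, reverse=True)[:k]
    c_q = {e for p in p_q for e in p.entities}
    return p_q, c_q


if __name__ == "__main__":
    passages = [
        Passage("p1", 2.0, [("Picasso", "Person"), ("Malaga", "GPE")]),
        Passage("p2", 1.5, [("Picasso", "Person")]),
        Passage("p3", 0.5, [("Paris", "GPE")]),
    ]
    p_q, c_q = candidate_entities(passages, k=2)
    print([p.pid for p in p_q])  # ['p1', 'p2']
    print(sorted(c_q))           # [('Malaga', 'GPE'), ('Picasso', 'Person')]
```

The entity set returned here is what the judged ranking methods then have to order; nothing about an entity's rank is decided at this stage.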
Some resulting queries and judgments are given in Table 1; with these examples we want to stress the difficulty and subjectivity of the evaluation task. Indeed, we realize that our evaluation is quite naïve and may suffer from a number of problems, which we wish to address in future evaluations. However, this initial evaluation allowed us to start studying some of the properties of this task, and to compare (however roughly) several ideas and approaches.

3. ENTITY RANKING METHODS

First, we introduce some notation. Let a retrieved passage be the tuple (pID, s), where pID is the unique ID of the passage and s is the retrieval score of the passage for the given query. Call $P_q$ the set formed by the $K$ highest-scored retrieved passages with respect to the query $q$ (in our case $K = 500$). Let an entity be the tuple (v, t), where v is its string value (e.g. “Einstein”) and t is its type (e.g. Person). Call $C$ the set of all entities in the collection and $C_q$ the set of all entities occurring in $P_q$.

The baseline model we consider uses a passage retrieval algorithm and scores an entity by the maximum score s among the passages of $P_q$ in which the entity appears. This is referred to as MaxScore in Table 2.

We report a number of evaluation measures. P@K, MAP and MRR denote precision at K, mean average precision and mean reciprocal rank, respectively; these measures were computed by binarising the judgments into relevant (Most Important and Important labels) and irrelevant (all other labels). DCG is the discounted cumulative gain; we used gains of 10, 3, 1 and 0 for the Most Important, Important, Related and Unrelated labels respectively, and the gain at rank $r$ was discounted by a factor of $1/\log(r+1)$. NDCG is the DCG normalized by the DCG of an ideal ordering of the judged entities.

3.1 Entity Containment Graph Methods

The first set of algorithms is based on the “entity containment” graph. This graph is constructed by connecting every passage in $P_q$ to every entity in $C_q$ that is contained in the passage.
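A minimal sketch of the MaxScore baseline and of degree-based ranking on the entity containment graph, assuming the passages of $P_q$ are given as (pID, s, entities) triples with entities as (value, type) tuples. The `degree_ief` function follows the inverse entity frequency ief(e) = log(N/n_e) defined later in this section; passing N and the per-entity counts n_e in as a number and a dictionary is an assumption about how those collection statistics would be stored. All function names are hypothetical, and the toy data in the usage example is invented for illustration.

```python
import math
from collections import defaultdict

# Hypothetical input: P_q as a list of (pid, score, entities) triples, where
# entities is a set of (value, type) tuples tagged in that passage.


def max_score(p_q):
    """MaxScore baseline: an entity's score is the highest retrieval score s
    of any passage in P_q that contains it."""
    best = {}
    for _pid, s, entities in p_q:
        for e in entities:
            best[e] = max(s, best.get(e, s))
    return best


def containment_graph(p_q):
    """Bipartite passage-entity graph, stored as entity -> set of passage IDs."""
    graph = defaultdict(set)
    for pid, _s, entities in p_q:
        for e in entities:
            graph[e].add(pid)
    return graph


def degree(graph):
    """Degree of an entity = number of passages in P_q that contain it."""
    return {e: len(pids) for e, pids in graph.items()}


def degree_ief(graph, n_total, n_containing):
    """Degree weighted by ief(e) = log(N / n_e); N and n_e are collection counts."""
    return {e: len(pids) * math.log(n_total / n_containing[e])
            for e, pids in graph.items()}


def rank(scores):
    """Entities by decreasing score; ties broken by the entity tuple itself."""
    return sorted(scores, key=lambda e: (-scores[e], e))


if __name__ == "__main__":
    p_q = [
        ("p1", 3.0, {("Picasso", "Person"), ("artist", "Person-Description")}),
        ("p2", 2.0, {("Picasso", "Person"), ("Malaga", "GPE"),
                     ("artist", "Person-Description")}),
        ("p3", 1.0, {("artist", "Person-Description")}),
    ]
    # Invented collection statistics: N = 1000 sentences, n_e per entity.
    n_e = {("Picasso", "Person"): 10, ("Malaga", "GPE"): 20,
           ("artist", "Person-Description"): 500}
    g = containment_graph(p_q)
    # [('artist', 'Person-Description'), ('Picasso', 'Person'), ('Malaga', 'GPE')]
    print(rank(degree(g)))
    # [('Picasso', 'Person'), ('Malaga', 'GPE'), ('artist', 'Person-Description')]
    print(rank(degree_ief(g, 1000, n_e)))
```

With these invented counts, the generic description “artist” tops the plain degree ranking because it occurs in every passage, but falls to last once ief is applied; this is the bias towards very general entities that the ief weighting is meant to counter.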
This forms a bipartite graph in which the degree of an entity equals its passage frequency in $P_q$. Figure 1 shows two views of the entity containment graph obtained for the query “Life of Pablo Picasso”.

Figure 1: Entity containment graphs for the query “Life of Pablo Picasso”: (a) small graph detail (3 relevant sentences only); (b) full entity containment graph.

Once this graph is constructed, we can use different graph centrality measures to rank the entities in it. The most basic one is the degree. This method (noted Degree in Table 2) alone yields a 47% relative increase in MAP and 22% in NDCG over the MaxScore baseline. This is a clear indication that the entity containment graph can be a useful representation of entity relevance. We experimented with higher-order centrality measures such as finite stochastic walks or PageRank, but their performance was similar to or worse than that of degree.

We observed that degree-dependent methods are biased by very general entities (such as descriptions, country names, etc.), which are not interesting but have high frequency. To improve on this, we experimented with two different methods. An ad-hoc method consists of removing the description types, which are the most generic and would seem a priori to be the least informative. However, doing this did not lead to improved results (models noted F- in Table 2). Furthermore, this solution would not be applicable in practice, since we may not always know which types of a corpus are the least informative. The alternative method we considered was to weight the degree of an entity by its inverse entity frequency:

$$\mathrm{ief}(e) := \log\frac{N}{n_e},$$

where $N$ is the total number of sentences and $n_e$ the number of sentences containing the entity $e$. This improved the results further, leading to a 76% relative increase over the baseline in MAP and 31% in NDCG. We also tried to improve results by weighting the entity degree computation with the sentence relevance scores. This approach (noted W- in Table 2) did not improve the results, despite our trying several forms of score normalization.

3.2 Web Search based Methods

For computing the relevance of entities to a given query, we do not need to be constrained to the text of Wikipedia: we can rely on the Web as a noisier, but much larger, corpus. Based on this observation, we have experimented with ranking entities by computing their correlation to the query phrase on the Web, using correlation measures well known in text mining [6]. This technique has been successfully applied in the past, for example to the problem of mining social networks from the Web [4, 5]. The difference here is that we are only interested in the correlations between the query and the set of related entities, whereas in co-occurrence analysis one typically computes the correlations between all possible pairs of entities to create a co-occurrence graph of entities. Query-to-entity correlation measures can easily be computed using a search engine, by observing the page counts returned for the entity, for the query, and for their conjunction.

Of the common measures we tested (Jaccard coefficient, Simpson coefficient and Google distance), the Jaccard coefficient clearly produced the best results (see Web Jaccard in Table 2). In practice, we obtained the best results from the search engine when quoting the query string but not the entity label. This can be explained by the fact that queries are typically correct expressions, while the entity tagger often makes mistakes in determining the boundaries of entities; enforcing these incorrect boundaries decreases performance. The improvement obtained over the baseline (32% relative in MAP and 6% in NDCG) is, however, not as good as that obtained from the entity containment graph.

One of the reasons may be that, for some queries, the quality of the results obtained from searching the Web is inferior to that obtained by retrieving Wikipedia passages. For such queries, results beyond a certain rank are not relevant and therefore bias the correlation measures. To alleviate this, we experimented with a novel measure based on the idea of discounting the importance of documents as their rank increases. Simple versions of this did not lead to an increase in performance. One of the main problems is that different queries and entities produce result sets of varying quality and size. This led us to try slightly more sophisticated methods. In order not to penalize result lists containing many relevant documents, instead of using the ranks directly we used a notion of average precision in which the relevant documents are those returned both for the query and for the entity. The method is illustrated in Figure 2. We compare the set of top $K$ documents returned for the query (treated as the relevant set) with the ranked list of results returned for a particular entity. Next, we determine which of the documents returned for the entity are in the relevant set and compute their average precision. Computing such an average precision has the advantage of almost eliminating the effect of $K$, whose appropriate value would otherwise depend on the query. Indeed, this method greatly improves the results over the Jaccard and baseline methods (see Web RankDiscounted in Table 2), achieving a performance on par with the degree-based methods that take ief into account. Nevertheless, we still require the entity extraction and passage retrieval steps to produce the set of candidate entities.

Table 2: Performance of the different models (best two in bold).

| Model | MAP | P@10 | P@30 | MRR | DCG | NDCG | Relative ΔNDCG |
|---|---|---|---|---|---|---|---|
| MaxScore | 0.34 | 0.37 | 0.28 | 0.64 | 67.91 | 0.64 | – |
| MaxScore(1 + ief) | 0.40 | 0.39 | 0.29 | 0.66 | 69.92 | 0.68 | 6% |
| Degree | 0.50 | 0.54 | 0.37 | 0.96 | 79.69 | 0.78 | 22% |
| F-Degree | 0.50 | 0.52 | 0.39 | 0.95 | 79.23 | 0.79 | 22% |
| Degree·ief | **0.60** | **0.63** | **0.451** | **0.98** | **83.89** | **0.84** | **31%** |
| F-Degree·ief | 0.57 | 0.60 | 0.44 | **0.98** | 82.59 | 0.82 | 28% |
| W-Degree | 0.48 | 0.51 | 0.38 | 0.92 | 79.11 | 0.76 | 21% |
| W-Degree·ief | 0.54 | **0.63** | 0.42 | 0.94 | 82.68 | 0.81 | 28% |
| Web RankDiscounted | **0.62** | **0.65** | **0.50** | 0.95 | **86.34** | **0.83** | **30%** |
| Web Jaccard | 0.45 | 0.50 | 0.340 | 0.75 | 78.27 | 0.71 | 10% |

4. DISCUSSION

We have taken the first steps towards studying the problem of ad-hoc entity ranking in the presence of a large set of heterogeneous entities. We have constructed a realistic testbed to carry out evaluation of entity ranking models, and we have provided some initial directions of research. With respect to entity containment graphs, our results show that it is important to take into account the notion of inverse entity frequency to discount general types. With respect to Web methods, we showed that taking into account the rank of the documents in the computation of correlations can yield significant improvements in performance.

Web methods are complementary to graph methods and could be combined in a number of ways. For example, correlation measures can be used to compute correlation graphs among the entities; these graphs could replace the entity containment graphs discussed above. Furthermore, ief could be combined with Web measures, or we could define an ief that depends on Web information. It may also be possible to select the candidate set of entities directly from the search results (or even just the snippets) obtained from a Web search engine. This would eliminate the need for offline pre-processing of collections. We plan to explore these issues in the future. Nevertheless, it is necessary to increase the quality of the evaluation in order to further quantify the benefits of the different methods.
To this end, we have released the corpus used in this study to the public, and we plan to design and carry out more thorough evaluations.

Figure 2: Computing the average precision of the results returned for the entity “Gertrude Stein” with respect to the set of pages relevant to the query “Life of Pablo Picasso”.

5. ACKNOWLEDGEMENTS

For entity extraction, we used the open source SuperSense Tagger (http://sourceforge.net/projects/supersensetag/). For indexing and retrieval, we used the IXE retrieval library (http://www.ideare.com/products.shtml), kindly made available to us by Tiscali.

6. REFERENCES

[1] S. Chakrabarti, K. Puniyani, and S. Das. Optimizing scoring functions and indexes for proximity search in type-annotated corpora. In WWW ’06, pages 717–726, New York, NY, USA, 2006. ACM Press.
[2] H. Chen, H. Shen, J. Xiong, S. Tan, and X. Cheng. Social network structure behind the mailing lists: ICT-IIIS at TREC 2006 expert finding track. In Text REtrieval Conference (TREC), 2006.
[3] L. Denoyer and P. Gallinari. The Wikipedia XML corpus. SIGIR Forum, 40(1):64–69, June 2006.
[4] Y. Matsuo, M. Hamasaki, H. Takeda, J. Mori, D. Bollegala, Y. Nakamura, T. Nishimura, K. Hasida, and M. Ishizuka. Spinning multiple social networks for Semantic Web. In Proceedings of the Twenty-First National Conference on Artificial Intelligence (AAAI 2006), 2006.
[5] P. Mika. Flink: Semantic Web technology for the extraction and analysis of social networks. Journal of Web Semantics, 3(2), 2005.
[6] G. Salton. Automatic Text Processing. Addison-Wesley, Reading, MA, 1989.
[7] H. Zaragoza, J. Atserias, M. Ciaramita, and G. Attardi. Semantically annotated snapshot of the English Wikipedia v.0 (SW0). http://www.yr-bcn.es/semanticWikipedia, 2007.