Problem set 8 solutions [50]


The University of Chicago, Department of Physics


Michael A. Fedderke

Standard disclaimer: while every effort is made to ensure the correctness of these solutions, if you find anything that you suspect is an error, please email me at mfedderke@uchicago.edu.

All problem references to Arfken and Weber, 7th edition.

I. 10.1.4 [10]

I assume that t ∈ (0, π), else the Green’s Function is trivially identically zero on the whole domain.

Consider the boundary value problem

$$-y'' - \frac{1}{4}y = \delta(x - t); \qquad y(0) = 0; \qquad y(\pi) = 0. \tag{1}$$

In the domains x ≶ t, the differential equation is homogeneous and second-order, so the solution space is spanned by two linearly independent solutions:

$$-y'' - \frac{1}{4}y = 0 \;\Rightarrow\; y(x) = A\cos(x/2) + B\sin(x/2), \tag{2}$$

so if we split the domain x ∈ [0, π] into sub-domains x ∈ [0, t) and x ∈ (t, π], we have the solution

$$G(x;t) = \begin{cases} A(t)\cos(x/2) + B(t)\sin(x/2) & 0 \le x < t \\ C(t)\cos(x/2) + D(t)\sin(x/2) & t < x \le \pi \end{cases}, \tag{3}$$

where A, B, C, D depend in general on t but not on x. Imposing the BCs y(0) = 0 ⇒ G(x = 0, t > 0) = 0 and y(π) = 0 ⇒ G(x = π, t < π) = 0 sets A = D = 0, while continuity at x = t demands that

$$B(t)\sin(t/2) = C(t)\cos(t/2) \;\Rightarrow\; C(t) = B(t)\tan(t/2). \tag{4}$$

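The value of B(t) can be found by integrating both sides of the ODE over the small domain $(t - \epsilon, t + \epsilon)$ for some small positive $\epsilon$ and then sending $\epsilon \to 0$:

$$\begin{align}
\int_{t-\epsilon}^{t+\epsilon} dx \left[-G'' - \frac{1}{4}G\right] &= \int_{t-\epsilon}^{t+\epsilon} dx\, \delta(x - t) \tag{5} \\
\Rightarrow\; -G'\big|_{t-\epsilon}^{t+\epsilon} - O(\epsilon) &= 1 \tag{6} \\
\Rightarrow\; G'_{-} - G'_{+} &= 1 \tag{7} \\
\Rightarrow\; \frac{1}{2}B(t)\cos(t/2) + \frac{1}{2}C(t)\sin(t/2) &= 1. \tag{8}
\end{align}$$

Substituting (4) into (8), we have

$$B(t) = 2\cos(t/2) \tag{9}$$

$$C(t) = 2\sin(t/2) \tag{10}$$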

Therefore, we have the Green’s Function

$$G(x;t) = \begin{cases} 2\cos(t/2)\sin(x/2) & 0 \le x \le t \\ 2\sin(t/2)\cos(x/2) & t < x \le \pi \end{cases}. \tag{11}$$

Mathematical Methods of Physics, Autumn 2015 (v1.0, 2 December 2015, 6:00pm)

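As a sanity check on (11), the following minimal sketch integrates the Green’s function against a unit source and compares with the solution of −y'' − y/4 = 1, y(0) = y(π) = 0, which can be verified by direct substitution to be y = 4cos(x/2) + 4sin(x/2) − 4. It also estimates the derivative jump in (7) by finite differences. The function names, the midpoint rule, and the step sizes are illustrative choices, not part of the original solution.

```python
import math

def green(x, t):
    """Green's function (11) for -y'' - y/4 = delta(x - t), y(0) = y(pi) = 0."""
    if x <= t:
        return 2.0 * math.cos(t / 2) * math.sin(x / 2)
    return 2.0 * math.sin(t / 2) * math.cos(x / 2)

def solve_with_green(x, source, n=20000):
    """Midpoint-rule estimate of y(x) = int_0^pi G(x; t) source(t) dt."""
    h = math.pi / n
    return h * sum(green(x, (j + 0.5) * h) * source((j + 0.5) * h) for j in range(n))

def exact_unit_source(x):
    """Closed-form solution of -y'' - y/4 = 1 with y(0) = y(pi) = 0."""
    return 4.0 * math.cos(x / 2) + 4.0 * math.sin(x / 2) - 4.0

def derivative_jump(t, eps=1e-6):
    """One-sided finite-difference estimate of G'(t-) - G'(t+); (7) says this is 1."""
    left = (green(t, t) - green(t - eps, t)) / eps
    right = (green(t + eps, t) - green(t, t)) / eps
    return left - right

if __name__ == "__main__":
    for x in (0.3, 1.0, 2.5):
        print(x, solve_with_green(x, lambda t: 1.0), exact_unit_source(x))
    print("jump:", derivative_jump(1.2))
```

The quadrature and the closed form should agree to within the discretisation error of the midpoint rule, and the jump estimate should approach 1 as `eps` shrinks.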

II. 10.1.7 [10]

I will initially assume that k ≠ 0. Let’s find the Green’s function by solving

$$\ddot\psi + k\dot\psi = \delta(t - t_0); \qquad \psi(0) = \dot\psi(0) = 0. \tag{12}$$

I will also assume that t0 > 0 otherwise the Green’s function is again trivially identically zero on t ≥ 0. (I am

implicitly assuming that t ≥ 0 is the only domain we are interested in since we have initial conditions imposed at

t = 0.)

We split the domain up into $t \in [0, t_0)$ and $t \in (t_0, \infty)$. In either sub-domain, the ODE is homogeneous: $\ddot\psi + k\dot\psi = 0$, and has a solution space spanned by two linearly independent solutions (they are only linearly independent for k ≠ 0): $\psi(t) = Ae^{-kt} + B$. So the solution is

$$G(t;t_0) = \begin{cases} A(t_0)e^{-k(t-t_0)} + B(t_0) & 0 \le t < t_0 \\ C(t_0)e^{-k(t-t_0)} + D(t_0) & t_0 < t < \infty \end{cases}, \tag{13}$$

where I have factored out $e^{kt_0}$ from $A(t_0)$ and $C(t_0)$ for reasons to become clear shortly. The ICs $\psi(0) = \dot\psi(0) = 0$ demand that A = B = 0, which can be thought of as a statement of causality: $G(t;t_0) = 0$ for $t < t_0$. We demand continuity at $t = t_0$, so A + B = C + D ⇒ C = −D, giving

$$G(t;t_0) = \begin{cases} 0 & 0 \le t < t_0 \\ D(t_0)\left[1 - e^{-k(t-t_0)}\right] & t_0 < t < \infty \end{cases}. \tag{14}$$

To find $D(t_0)$ we again perform the trick of integrating the ODE around $t_0$ in an $\epsilon$-domain, to find that

$$\dot\psi\big|_{-}^{+} + k\psi\big|_{-}^{+} = 1 \;\Rightarrow\; kD(t_0) = 1 \;\Rightarrow\; D = 1/k, \tag{15}$$

so

$$G(t;t_0) = \begin{cases} 0 & 0 \le t < t_0 \\ \dfrac{1}{k}\left[1 - e^{-k(t-t_0)}\right] & t_0 < t < \infty \end{cases} \qquad (k \neq 0). \tag{16}$$

I will not find the Green’s function for k = 0 because that case is much more easily handled by direct integration.

To solve

$$\ddot\psi + k\dot\psi = e^{-t} \qquad (t > 0) \tag{17}$$

subject to the same ICs, we simply convolve the source (evaluated at $t_0$!) with the Green’s function. (There is no need to include a general homogeneous solution; if you do, you must find that the coefficients of the two linearly independent solutions in your general solution vanish because the Green’s function we found above already satisfies the ICs by construction. If you do not find this, you have made a mistake. Only if we changed the ICs, but kept the same Green’s function, would you need the general homogeneous solution to compensate for using a Green’s function computed with different ICs.) Doing this, we obtain

$$\psi(t) = \int_0^\infty G(t;t_0)\, e^{-t_0}\, dt_0 = \int_0^t \frac{e^{-t_0}}{k}\left[1 - e^{-k(t-t_0)}\right] dt_0, \tag{18}$$

where I used that $G(t;t_0) = 0$ for $t_0 > t$. There is nothing non-trivial in evaluating this integral for k ≠ 0; but one needs to treat k = 1 as a separate case because the second term in the $[\cdots]$-bracket becomes $t_0$-independent for k = 1:

$$\psi(t) = \begin{cases} \dfrac{(k-1) - e^{-t}\left(k - e^{-(k-1)t}\right)}{k(k-1)} & k \neq 1,\ k \neq 0 \\[2ex] 1 - e^{-t} - te^{-t} & k = 1 \\[1ex] t - (1 - e^{-t}) & k = 0 \end{cases} \tag{19}$$

I have now also listed the k = 0 solution, which is obtained trivially by direct integration of the ODE: $\psi_{k=0}(t) = \int_0^t dt' \int_0^{t'} dt''\, e^{-t''}$, where I have imposed the ICs. Alternatively, either of the k = 0, 1 cases could be obtained from the limit of the general case (but you did need to actually do this, or somehow point out the anomalous cases and mention that they are removable discontinuities of the solution when viewed as a function of k).
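The three branches of (19) can be cross-checked numerically. The sketch below evaluates the convolution (18) with a midpoint rule and compares it with the closed forms, and also checks that the generic branch approaches the k = 1 and k = 0 branches as k tends to those values. Function names, sample points, and tolerances are illustrative assumptions.

```python
import math

def green(t, t0, k):
    """Causal Green's function (16); assumes k != 0."""
    if t <= t0:
        return 0.0
    return (1.0 - math.exp(-k * (t - t0))) / k

def psi_quadrature(t, k, n=20000):
    """Midpoint-rule estimate of the convolution (18) with source exp(-t0)."""
    h = t / n
    return h * sum(green(t, (j + 0.5) * h, k) * math.exp(-(j + 0.5) * h) for j in range(n))

def psi_closed_form(t, k):
    """Closed forms (19), including the special cases k = 1 and k = 0."""
    if k == 0:
        return t - (1.0 - math.exp(-t))
    if k == 1:
        return 1.0 - math.exp(-t) - t * math.exp(-t)
    return ((k - 1) - math.exp(-t) * (k - math.exp(-(k - 1) * t))) / (k * (k - 1))

if __name__ == "__main__":
    t = 2.0
    for k in (0.5, 1.0, 3.0):
        print(k, psi_quadrature(t, k), psi_closed_form(t, k))
    # The generic branch approaches the special branches continuously in k.
    print(psi_closed_form(t, 1.0 + 1e-6), psi_closed_form(t, 1))
    print(psi_closed_form(t, 1e-6), psi_closed_form(t, 0))
```

The last two lines illustrate the remark that k = 0 and k = 1 are removable discontinuities of the generic expression.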

III. 10.1.9 [10]

This question proceeds much like the previous two, so I’ll be more brief here. I’ll also assume that k ≠ 0, since k = 0 is more easily handled by direct integration anyway. We solve $G'' - k^2 G = \delta(x - x_0)$ subject to G = 0 as |x| → ∞. Away from the δ-fn the general solution is clearly $G = Ae^{k(x-x_0)} + Be^{-k(x-x_0)}$, so the solution appropriate for the BCs and which respects continuity at $x = x_0$ is (we can take k > 0 w.l.o.g.)

$$G(x;x_0) = A(x_0)\begin{cases} e^{k(x-x_0)} & x < x_0 \\ e^{-k(x-x_0)} & x > x_0 \end{cases}. \tag{20}$$

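Doing the same integrate-the-ODE-in-an-$\epsilon$-domain-around-$x_0$ trick, we obtain the condition $G'\big|_{-}^{+} = 1$, so −kA − kA = 1 ⇒ A = −1/2k, and we arrive at the solution

$$\begin{align}
G(x;x_0) &= -\frac{1}{2k}\begin{cases} e^{k(x-x_0)} & x < x_0 \\ e^{-k(x-x_0)} & x > x_0 \end{cases} \tag{21} \\
&= -\frac{1}{2k}e^{-k|x-x_0|}. \qquad (k > 0) \tag{22}
\end{align}$$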
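A quick finite-difference check of (22), as a sketch: away from the source the residual of G'' − k²G should vanish, and the derivative should jump by 1 across x = x0. The helper names and step sizes are illustrative assumptions.

```python
import math

def green(x, x0, k):
    """Green's function (22) for G'' - k^2 G = delta(x - x0); assumes k > 0."""
    return -math.exp(-k * abs(x - x0)) / (2.0 * k)

def residual_away_from_source(x, x0, k, h=1e-4):
    """Central-difference estimate of G'' - k^2 G at a point x != x0."""
    second = (green(x + h, x0, k) - 2.0 * green(x, x0, k) + green(x - h, x0, k)) / h**2
    return second - k**2 * green(x, x0, k)

def derivative_jump(x0, k, h=1e-6):
    """One-sided estimate of G'(x0+) - G'(x0-), which should approach 1."""
    right = (green(x0 + h, x0, k) - green(x0, x0, k)) / h
    left = (green(x0, x0, k) - green(x0 - h, x0, k)) / h
    return right - left

if __name__ == "__main__":
    print(residual_away_from_source(1.5, 0.5, 2.0))
    print(residual_away_from_source(-1.0, 0.5, 2.0))
    print(derivative_jump(0.5, 2.0))
```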

IV. 10.2.4 [10]

There are multiple methods to proceed here. I’ll give three methods.

Method 1: Fourier-transform the Ansatz, then differentiate

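Consider

$$G(\mathbf{r},\mathbf{r}') \equiv G(\boldsymbol{\xi}) \equiv -\frac{1}{4\pi}\frac{e^{ik|\mathbf{r}-\mathbf{r}'|}}{|\mathbf{r}-\mathbf{r}'|} = -\frac{1}{4\pi}\frac{e^{ik\xi}}{\xi}, \tag{23}$$

where $\boldsymbol{\xi} \equiv \mathbf{r} - \mathbf{r}'$ and $\xi \equiv |\boldsymbol{\xi}|$. Now Fourier transform from $\boldsymbol{\xi}$-space to $\mathbf{k}'$-space:

$$\begin{align}
-4\pi\tilde{G}(\mathbf{k}') &= \int d^3\xi\, \frac{e^{ik\xi}}{\xi}\, e^{i\mathbf{k}'\cdot\boldsymbol{\xi}} \tag{24} \\
&= 2\pi\int_{-1}^{1} d\chi \int_0^\infty \xi\, d\xi\, e^{ik\xi} e^{ik'\xi\chi} \qquad (\chi \equiv \cos\theta) \tag{25} \\
&= \frac{2\pi}{ik'}\int_0^\infty d\xi \left[e^{i(k+k')\xi} - e^{i(k-k')\xi}\right]. \tag{26}
\end{align}$$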

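This integral is not well-defined; the quickest way to make it well-defined is to insert a convergence factor $e^{-\epsilon\xi}$ ($\epsilon > 0$), evaluate the integral, then send $\epsilon \to 0^+$ [this is equivalent to rotating the integration contour slightly into the complex plane in an appropriate direction to ensure convergence]:

$$\begin{align}
-4\pi\tilde{G}(\mathbf{k}') &= \frac{2\pi}{ik'}\int_0^\infty d\xi \left[e^{i(k+k')\xi - \epsilon\xi} - e^{i(k-k')\xi - \epsilon\xi}\right] \tag{27} \\
&= \frac{2\pi}{ik'}\left[\frac{1}{i(k+k') - \epsilon}e^{i(k+k')\xi - \epsilon\xi} - \frac{1}{i(k-k') - \epsilon}e^{i(k-k')\xi - \epsilon\xi}\right]_{\xi=0}^{\xi=\infty} \tag{28} \\
&= \frac{2\pi i}{k'}\left[\frac{1}{i(k+k') - \epsilon} - \frac{1}{i(k-k') - \epsilon}\right] \tag{29} \\
&\xrightarrow{\epsilon \to 0^+} \frac{2\pi}{k'}\left[\frac{1}{k+k'} - \frac{1}{k-k'}\right] \tag{30} \\
&= \frac{2\pi}{k'}\,\frac{-2k'}{k^2 - (k')^2} \tag{31} \\
&= -4\pi\,\frac{1}{k^2 - (k')^2} \tag{32} \\
\Rightarrow\; \tilde{G}(\mathbf{k}') &= \frac{1}{k^2 - (k')^2}. \tag{33}
\end{align}$$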
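The role of the convergence factor can be illustrated numerically. The sketch below (helper names, cutoff, and grid size are assumptions made for illustration) evaluates the regulated integral (27) by a midpoint rule at a moderate ε, compares it with the closed form (29), and then shows (29) approaching the limit (32) as ε shrinks.

```python
import cmath
import math

def regulated_closed_form(k, kp, eps):
    """Right-hand side of (29): -4*pi*G~(k') with convergence factor exp(-eps*xi)."""
    bracket = 1.0 / (1j * (k + kp) - eps) - 1.0 / (1j * (k - kp) - eps)
    return (2.0 * math.pi * 1j / kp) * bracket

def regulated_quadrature(k, kp, eps, xi_max=80.0, n=80000):
    """Midpoint-rule estimate of (27), truncated at xi_max (tail is ~exp(-eps*xi_max))."""
    h = xi_max / n
    total = 0j
    for j in range(n):
        xi = (j + 0.5) * h
        total += cmath.exp((1j * (k + kp) - eps) * xi) - cmath.exp((1j * (k - kp) - eps) * xi)
    return (2.0 * math.pi / (1j * kp)) * h * total

def limiting_value(k, kp):
    """The eps -> 0+ limit (32): -4*pi / (k^2 - k'^2)."""
    return -4.0 * math.pi / (k**2 - kp**2)

if __name__ == "__main__":
    k, kp = 1.0, 2.5
    print(regulated_quadrature(k, kp, 0.5), regulated_closed_form(k, kp, 0.5))
    for eps in (1e-2, 1e-4, 1e-6):
        print(eps, abs(regulated_closed_form(k, kp, eps) - limiting_value(k, kp)))
```

The printed differences should shrink roughly linearly in ε, consistent with the limit taken between (29) and (30).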

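This allows us to write an alternative integral-form for $G(\boldsymbol{\xi})$:

$$G(\mathbf{r},\mathbf{r}') = G(\boldsymbol{\xi}) = \int\frac{d^3k'}{(2\pi)^3}\frac{e^{-i\mathbf{k}'\cdot\boldsymbol{\xi}}}{k^2 - (k')^2} = \int\frac{d^3k'}{(2\pi)^3}\frac{e^{-i\mathbf{k}'\cdot(\mathbf{r}-\mathbf{r}')}}{k^2 - (k')^2}. \tag{34}$$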

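Therefore,

$$\begin{align}
(\nabla^2 + k^2)G(\mathbf{r},\mathbf{r}') &= (\nabla^2 + k^2)\int\frac{d^3k'}{(2\pi)^3}\frac{e^{-i\mathbf{k}'\cdot(\mathbf{r}-\mathbf{r}')}}{k^2 - (k')^2} \tag{35} \\
&= \int\frac{d^3k'}{(2\pi)^3}\frac{(\nabla^2 + k^2)e^{-i\mathbf{k}'\cdot(\mathbf{r}-\mathbf{r}')}}{k^2 - (k')^2} \tag{36} \\
&= \int\frac{d^3k'}{(2\pi)^3}\frac{(-(k')^2 + k^2)e^{-i\mathbf{k}'\cdot(\mathbf{r}-\mathbf{r}')}}{k^2 - (k')^2} \tag{37} \\
&= \int\frac{d^3k'}{(2\pi)^3}e^{-i\mathbf{k}'\cdot(\mathbf{r}-\mathbf{r}')} \tag{38} \\
&= \delta^{(3)}(\mathbf{r}-\mathbf{r}'). \tag{39}
\end{align}$$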

Therefore, G satisfies the BCs by vanishing as the separation between $\mathbf{r}$ and $\mathbf{r}'$ goes to infinity, and also satisfies the Helmholtz equation with a $\delta^{(3)}$ source. G is therefore the Green’s function for the Helmholtz equation.

Method 2: Direct solution in Fourier space, then invert the transform

Alternatively, we can directly solve for the Fourier-space Green’s function and then invert the transform to get the desired result. This is non-trivial due to a subtlety; see below. To start with, I will note that if I define $\boldsymbol{\xi} \equiv \mathbf{r} - \mathbf{r}'$ and keep $\mathbf{r}'$ fixed then $\nabla_{\mathbf{r}} = \nabla_{\boldsymbol{\xi}}$ and $d^3\xi = d^3r$, so I can write the Helmholtz equation with its δ-fn source in position-space as

$$(\nabla_{\boldsymbol{\xi}}^2 + k^2)G(\boldsymbol{\xi}) = \delta^{(3)}(\boldsymbol{\xi}). \tag{40}$$

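We now Fourier transform: $G(\boldsymbol{\xi}) \equiv \int\frac{d^3k'}{(2\pi)^3}e^{-i\mathbf{k}'\cdot\boldsymbol{\xi}}\,\tilde{G}(\mathbf{k}')$ and use that the delta function has a unit Fourier transform (in my convention for the factors of 2π) to arrive at

$$(-(k')^2 + k^2)\tilde{G}(\mathbf{k}') = 1 \;\Rightarrow\; \tilde{G}(\mathbf{k}') = \frac{1}{k^2 - (k')^2}. \tag{41}$$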

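We now invert the Fourier transform:

$$\begin{align}
G(\boldsymbol{\xi}) &\equiv \int\frac{d^3k'}{(2\pi)^3}e^{-i\mathbf{k}'\cdot\boldsymbol{\xi}}\,\tilde{G}(\mathbf{k}') \tag{42} \\
&= \int\frac{d^3k'}{(2\pi)^3}\frac{e^{-i\mathbf{k}'\cdot\boldsymbol{\xi}}}{k^2 - (k')^2} \tag{43} \\
&= \frac{1}{4\pi^2}\int_0^\infty (k')^2\,dk'\int_{-1}^{1}d\chi\,\frac{e^{-ik'\xi\chi}}{k^2 - (k')^2} \qquad (\chi = \cos\theta) \tag{44} \\
&= \frac{1}{4\pi^2}\int_{-1}^{1}d\chi\int_0^\infty (k')^2\,dk'\,\frac{e^{-ik'\xi\chi}}{k^2 - (k')^2} \tag{45} \\
&= \frac{1}{4\pi^2}\frac{i}{\xi}\int_0^\infty k'\,dk'\,\frac{e^{ik'\xi} - e^{-ik'\xi}}{(k')^2 - k^2} \tag{46} \\
&= \frac{1}{4\pi^2}\frac{i}{\xi}\lim_{K\to\infty}\int_0^K k'\,dk'\,\frac{e^{ik'\xi} - e^{-ik'\xi}}{(k')^2 - k^2} \tag{47} \\
&= \frac{1}{8\pi^2}\frac{i}{\xi}\lim_{K\to\infty}\int_{-K}^{K} k'\,dk'\,\frac{e^{ik'\xi} - e^{-ik'\xi}}{(k')^2 - k^2} \tag{48} \\
&= \frac{1}{8\pi^2}\frac{i}{\xi}\lim_{K\to\infty}\left[\int_{-K}^{K} k'\,dk'\,\frac{e^{ik'\xi}}{(k')^2 - k^2} - \int_{-K}^{K} k'\,dk'\,\frac{e^{-ik'\xi}}{(k')^2 - k^2}\right]. \tag{49}
\end{align}$$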

Now, the subtlety: neither of these integrals is well-defined since there are two poles lying on the real axis, at $k' = \pm k$. In order to give them well-defined values, we need to specify an (arbitrary) procedure for how we are handling the poles. One choice would be to take the principal value, but we’ll do something different here instead: suppose we send $k^2 \to k^2 + i\epsilon$ in the denominator, where $\epsilon$ is a small positive quantity, which we will send to zero at the end of the derivation. This will clearly move the poles off the axis, allowing us to perform the integral without running through a pole. Writing $k' = k'_R + ik'_I$ and setting $(k')^2 - k^2 - i\epsilon = 0$, we find that $(k'_R)^2 - (k'_I)^2 = k^2$ and $2k'_R k'_I = \epsilon$. So the pole initially at $k' = k$ moves slightly up into the upper half-plane and the pole initially at $k' = -k$ moves down slightly into the lower half-plane; see Fig. 1. To summarise, we have decided that we will assign the Green’s function the following value:

$$G(\boldsymbol{\xi}) = \frac{1}{8\pi^2}\frac{i}{\xi}\lim_{\substack{K\to\infty \\ \epsilon\to 0^+}}\left[\int_{-K}^{K} k'\,dk'\,\frac{e^{ik'\xi}}{(k')^2 - k^2 - i\epsilon} - \int_{-K}^{K} k'\,dk'\,\frac{e^{-ik'\xi}}{(k')^2 - k^2 - i\epsilon}\right]. \tag{50}$$

We use a contour integral to evaluate this. Since we have now done this a thousand times, I’ll be very brief: we utilise a contour from −K to K along the real axis, closing with a half-circle in either the upper (for the integral with $e^{ik'\xi}$) or lower half-plane (for the integral with $e^{-ik'\xi}$), with the choice here made to ensure convergence; see Fig. 1. We invoke Jordan’s Lemma to kill the piece of the integral arising from the half-circle in the limit K → ∞, and utilise the residue theorem to pick up 2πi times the residue of the enclosed pole for the closed contour integral $\oint$. When the dust settles we have, in the limit K → ∞ and $\epsilon \to 0^+$,

$$\lim_{\substack{K\to\infty \\ \epsilon\to 0^+}}\left[\int_{-K}^{K} k'\,dk'\,\frac{e^{ik'\xi}}{(k')^2 - k^2 - i\epsilon}\right] = \lim_{\epsilon\to 0^+}\Big[+2\pi i\,\mathrm{Res}\big[k' = +k + iO(\epsilon)\big]\Big] = 2\pi i\times\frac{e^{ik\xi}}{2} = i\pi e^{ik\xi} \tag{51}$$

$$\lim_{\substack{K\to\infty \\ \epsilon\to 0^+}}\left[\int_{-K}^{K} k'\,dk'\,\frac{e^{-ik'\xi}}{(k')^2 - k^2 - i\epsilon}\right] = \lim_{\epsilon\to 0^+}\Big[-2\pi i\,\mathrm{Res}\big[k' = -k - iO(\epsilon)\big]\Big] = -2\pi i\times\frac{e^{-i(-k)\xi}}{2} = -i\pi e^{ik\xi}. \tag{52}$$

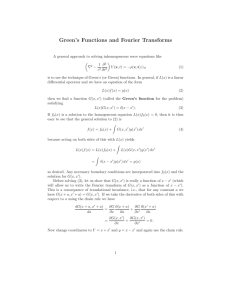

FIG. 1 The original pole positions (blue crosses) move (to the green crosses) under the $k^2 \to k^2 + i\epsilon$ procedure for passing the poles (movement exaggerated for clarity; it’s supposed to be an infinitesimal displacement).

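Therefore,

$$G(\boldsymbol{\xi}) = \frac{1}{8\pi^2}\frac{i}{\xi}\left[i\pi e^{ik\xi} - \left(-i\pi e^{ik\xi}\right)\right] = -\frac{1}{4\pi}\frac{e^{ik\xi}}{\xi}, \tag{53}$$

or in the original notation,

$$G(\mathbf{r},\mathbf{r}') = -\frac{1}{4\pi}\frac{e^{ik|\mathbf{r}-\mathbf{r}'|}}{|\mathbf{r}-\mathbf{r}'|}. \tag{54}$$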

Note that if we had taken a different pole-passing procedure, we would have obtained a different Green’s function. For example, if we had taken $\epsilon < 0$ instead, we would pick up the opposite poles with each contour, and so the sign in the exponent would change, and if we had displaced both poles up, or both poles down, we’d get still more different Green’s functions. The salient point is that these all satisfy different boundary conditions: the choice of pole-passing procedure dictates which boundary condition the Green’s function satisfies. In a more mechanical sense, it seems weird at first glance that this integral could have multiple possible results, but this is really just because the integral was actually not defined in the first place, and we are choosing to assign it a value by some consistent procedure; it’s just that there are multiple ways to do this and the value it is assigned differs depending on the procedure used. This technique sees very wide use in quantum field theory.
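The effect of the sign of ε can be made concrete with a small sketch. It locates the displaced poles as the roots of (k')² − k² − iε = 0 and then evaluates the finite-ε Green’s function obtained by repeating the residue computation of (51) to (53) with whichever root lies in the upper half-plane. That closed form, −exp(i p ξ)/(4πξ) with p the upper-half-plane root, is a short extension of the argument above rather than something stated in the solution; all names are hypothetical.

```python
import cmath
import math

def displaced_poles(k, eps):
    """Roots of (k')^2 - k^2 - i*eps = 0, i.e. k' = +/- sqrt(k^2 + i*eps)."""
    root = cmath.sqrt(k * k + 1j * eps)
    return root, -root

def green_from_prescription(xi, k, eps):
    """Finite-eps Green's function from the residue theorem (assumed extension).

    Both contours in (51)-(52) contribute exp(i*p*xi)/2 residues, where p is the
    root lying in the upper half-plane, giving -exp(i*p*xi) / (4*pi*xi)."""
    root, minus_root = displaced_poles(k, eps)
    p = root if root.imag > 0 else minus_root
    return -cmath.exp(1j * p * xi) / (4.0 * math.pi * xi)

if __name__ == "__main__":
    k, xi = 2.0, 1.5
    print(displaced_poles(k, 1e-3))    # pole near +k moves up, pole near -k moves down
    print(displaced_poles(k, -1e-3))   # opposite displacement for eps < 0
    outgoing = -cmath.exp(1j * k * xi) / (4.0 * math.pi * xi)
    incoming = -cmath.exp(-1j * k * xi) / (4.0 * math.pi * xi)
    print(abs(green_from_prescription(xi, k, 1e-9) - outgoing))
    print(abs(green_from_prescription(xi, k, -1e-9) - incoming))
```

With ε > 0 the result tends to the exp(+ikξ) form (53), while ε < 0 flips the sign in the exponent, as described in the paragraph above.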

Method 3: position-space differentiation

I will use some vector-calculus identities, which are conveniently tabulated on, e.g., the front cover of the

excellent textbook by Jackson, Classical Electrodynamics. I will employ five standard results:


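• $\nabla(fg) = (\nabla f)g + f(\nabla g)$.

• $\nabla\cdot(f\mathbf{v}) = f\,\nabla\cdot\mathbf{v} + (\mathbf{v}\cdot\nabla)f$.

• $\nabla\cdot\left[\dfrac{\hat{\mathbf{r}}}{r^2}\right] = \nabla\cdot\left[\dfrac{\mathbf{r}}{r^3}\right] = 4\pi\delta^{(3)}(\mathbf{r})$.

• $\nabla(r) = \hat{\mathbf{r}} = \mathbf{r}/r$.

• $\nabla\cdot\mathbf{r} = 3$.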

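It follows from a simple application of these results that $\nabla\cdot(\hat{\mathbf{r}}/r) = \nabla\cdot(\mathbf{r}/r^2) = (1/r^2)(\nabla\cdot\mathbf{r}) + (\mathbf{r}\cdot\nabla)(1/r^2) = 3/r^2 + r\partial_r(1/r^2) = (3-2)/r^2 = 1/r^2$, and $\nabla\cdot\hat{\mathbf{r}} = \nabla\cdot(\mathbf{r}/r) = (1/r)(\nabla\cdot\mathbf{r}) + (\mathbf{r}\cdot\nabla)(1/r) = 3/r + r\partial_r(1/r) = (3-1)/r = 2/r$.

With those preliminaries out of the way, consider

$$G(\mathbf{r}_1,\mathbf{r}_2) \equiv -\frac{1}{4\pi}\frac{1}{|\mathbf{r}_1-\mathbf{r}_2|}e^{ik|\mathbf{r}_1-\mathbf{r}_2|}. \tag{55}$$

I will define $\mathbf{r} \equiv \mathbf{r}_1 - \mathbf{r}_2$ and treat $\mathbf{r}_2$ as a fixed vector so that $\nabla_{\mathbf{r}_1} = \nabla_{\mathbf{r}}$. Additionally, I will set $r \equiv |\mathbf{r}| = |\mathbf{r}_1 - \mathbf{r}_2|$, so that

$$G(\mathbf{r}_1,\mathbf{r}_2) \equiv -\frac{1}{4\pi}\frac{1}{r}e^{ikr}. \tag{56}$$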

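Then the gradient of G is given by

$$\begin{align}
-4\pi\nabla G = \nabla\left[\frac{1}{r}e^{ikr}\right] &= e^{ikr}\nabla(1/r) + (1/r)\nabla e^{ikr} \tag{57} \\
&= e^{ikr}(-1/r^2)\nabla(r) + (1/r)(ike^{ikr})\nabla(r) \tag{58} \\
&= \hat{\mathbf{r}}(ik/r)e^{ikr} - (\hat{\mathbf{r}}/r^2)e^{ikr}. \tag{59}
\end{align}$$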

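So that the Laplacian of G is given by

$$\begin{align}
-4\pi\nabla^2 G &= \nabla\cdot[-4\pi\nabla G] = \nabla\cdot\left[\hat{\mathbf{r}}(ik/r)e^{ikr} - (\hat{\mathbf{r}}/r^2)e^{ikr}\right] \tag{60} \\
&= ike^{ikr}\left(\nabla\cdot(\hat{\mathbf{r}}/r)\right) + (ik/r)(\hat{\mathbf{r}}\cdot\nabla)e^{ikr} - e^{ikr}\,\nabla\cdot(\hat{\mathbf{r}}/r^2) - (\hat{\mathbf{r}}/r^2)\cdot\nabla e^{ikr} \tag{61} \\
&= ike^{ikr}(1/r^2) + (ik/r)\partial_r(e^{ikr}) - 4\pi e^{ikr}\delta^{(3)}(\mathbf{r}) - (1/r^2)\partial_r e^{ikr} \tag{62} \\
&= (ik/r^2)e^{ikr} - k^2(e^{ikr}/r) - 4\pi e^{ikr}\delta^{(3)}(\mathbf{r}) - (ik/r^2)e^{ikr} \tag{63} \\
&= -k^2(e^{ikr}/r) - 4\pi e^{ikr}\delta^{(3)}(\mathbf{r}) \tag{64} \\
&= 4\pi k^2 G - 4\pi e^{ikr}\delta^{(3)}(\mathbf{r}) \tag{65} \\
\Rightarrow\; (\nabla^2 + k^2)G &= e^{ik|\mathbf{r}|}\delta^{(3)}(\mathbf{r}). \tag{66}
\end{align}$$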

This is almost in the correct form: the RHS only has support for $\mathbf{r} = 0$, so the $e^{ik|\mathbf{r}|}$ can be replaced by $e^{ik\times 0} = 1$, yielding

$$(\nabla^2 + k^2)G = \delta^{(3)}(\mathbf{r}). \tag{67}$$

Therefore, G satisfies the BCs by vanishing as the separation between r1 and r2 goes to infinity, and also satisfies

the Helmholtz equation with a δ (3) source. G is therefore the Green’s function for the Helmholtz equation.
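The position-space result can be spot-checked numerically, as a sketch under two standard assumptions that are not spelled out above: for a spherically symmetric function the Laplacian away from the origin is (1/r) d²(rG)/dr², and by the divergence theorem the weight of the δ-function is the flux of ∇G through a small sphere (the k² volume term vanishes as the radius shrinks). Function names and step sizes are illustrative.

```python
import cmath
import math

def green(r, k):
    """G = -exp(i k r) / (4 pi r) as a function of the separation r > 0, as in (56)."""
    return -cmath.exp(1j * k * r) / (4.0 * math.pi * r)

def helmholtz_residual(r, k, h=1e-4):
    """Finite-difference estimate of (laplacian + k^2) G at r > 0.

    Uses the radial form laplacian G = (1/r) d^2(r G)/dr^2."""
    def u(s):
        return s * green(s, k)
    second = (u(r + h) - 2.0 * u(r) + u(r - h)) / h**2
    return second / r + k**2 * green(r, k)

def flux_through_sphere(a, k):
    """Estimate 4 pi a^2 dG/dr at r = a; should tend to 1 as a -> 0."""
    h = 1e-4 * a
    d_green = (green(a + h, k) - green(a - h, k)) / (2.0 * h)
    return 4.0 * math.pi * a**2 * d_green

if __name__ == "__main__":
    print(abs(helmholtz_residual(1.3, 2.0)))   # ~0 away from the origin
    for a in (1e-1, 1e-2, 1e-3):
        print(a, flux_through_sphere(a, 2.0))  # approaches 1: unit delta source
```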


V. 20.3.6 [10]

Consider

$$-\varphi'' + K^2\varphi = q\,\delta(x) \qquad (q \equiv Q/D;\ D \neq 0;\ \{Q, D, K\} \in \mathbb{R}). \tag{68}$$

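I will use that $\mathcal{F}[\delta(x)] = 1$ and $\mathcal{F}[f''] = -k^2\mathcal{F}[f]$, where I define $\mathcal{F}[f] \equiv f(k) \equiv \int_{-\infty}^{\infty} f(x)e^{+ikx}\,dx$ so that the inverse transform is $\mathcal{F}^{-1}[f] = f(x) = \int_{-\infty}^{\infty}\frac{dk}{2\pi}f(k)e^{-ikx}$. I now perform a Fourier Transform $\mathcal{F}$ on both sides of the ODE:

$$(k^2 + K^2)\varphi(k) = q \;\Rightarrow\; \varphi(k) = \frac{q}{k^2 + K^2} = \frac{q}{(k - i|K|)(k + i|K|)}. \tag{69}$$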

Now invert (I also accepted your solution if you used (20.17) from the textbook here to go straight to the answer):

$$\varphi(x) = \frac{q}{2\pi}\int_{-\infty}^{\infty}\frac{e^{-ikx}\,dk}{(k - i|K|)(k + i|K|)} = \frac{q}{2\pi}\,\mathrm{PV}\!\int_{-\infty}^{\infty}\frac{e^{-ikx}\,dk}{(k - i|K|)(k + i|K|)}, \tag{70}$$

where I inserted a PV prescription in the last step because the integral does exist and so will be equal to its PV, and I need the PV prescription to set up a contour integral. Now analytically continue the integrand to the whole complex plane, and consider the contours $C^\pm$ which run along the real axis from −R to +R then close back to −R on the real axis via a half-circle contour $C_R^\pm$ in the upper (+) or lower (−) half-plane. $C^+$ is CCW; $C^-$ is CW. The piece of the contour on the real axis yields the PV integral we seek in the R → ∞ limit, while Jordan’s Lemma tells us that $\int_{C_R^\pm}$ vanishes as R → ∞ if we follow $C^+$ for x < 0 and $C^-$ for x > 0. Meanwhile, $C^\pm$ encloses a pole at $k = \pm i|K|$. Using the residue theorem for $\oint_{C^\pm} = \left(\mathrm{PV}\!\int\right) + \int_{C_R^\pm}$, and putting this all together, we have ($f(z) \equiv e^{-izx}/(z^2 + K^2)$)

$$\begin{align}
\varphi(x) &= \frac{q}{2\pi}\,2\pi i\begin{cases} +\mathrm{Res}(f(z);\ z = +i|K|) & x < 0 \\ -\mathrm{Res}(f(z);\ z = -i|K|) & x > 0 \end{cases} \tag{71} \\
&= iq\begin{cases} +\dfrac{1}{2i|K|}e^{|K|x} & x < 0 \\[1.5ex] -\dfrac{1}{-2i|K|}e^{-|K|x} & x > 0 \end{cases} \tag{72} \\
&= \frac{q}{2|K|}\begin{cases} e^{|K|x} & x < 0 \\ e^{-|K|x} & x > 0 \end{cases} \tag{73} \\
&= \frac{Q}{2D|K|}e^{-|Kx|}. \tag{74}
\end{align}$$

This agrees with the result we had in 10.1.9, if we set q = −1, take K > 0 and replace K → k, and shift x → x−x0 .

Note: The question explicitly stated that you should perform a Fourier transform, solve in Fourier space and

then invert. If you did any other method, you got a maximum of 7/10.
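For readers who want to confirm (74) without the contour argument, here is a minimal numerical sketch. It uses the fact that the sine part of $e^{-ikx}$ is odd in k and drops out of (70), leaving (q/π) times the integral of cos(kx)/(k² + K²) over k ≥ 0, which is then truncated and evaluated with a midpoint rule. The cutoff `k_max`, the grid size, and the function names are assumptions chosen for illustration; the truncation error is only small for x ≠ 0.

```python
import math

def phi_closed_form(x, q, K):
    """Result (74) written in terms of q = Q/D."""
    return q * math.exp(-abs(K * x)) / (2.0 * abs(K))

def phi_quadrature(x, q, K, k_max=400.0, n=400000):
    """Midpoint-rule estimate of (q/pi) * int_0^k_max cos(k x) / (k^2 + K^2) dk."""
    h = k_max / n
    total = 0.0
    for j in range(n):
        k = (j + 0.5) * h
        total += math.cos(k * x) / (k * k + K * K)
    return q * h * total / math.pi

if __name__ == "__main__":
    q, K = 1.5, 2.0
    for x in (1.0, -0.7):
        print(x, phi_quadrature(x, q, K), phi_closed_form(x, q, K))
    # Setting q = -1 and K -> k reproduces the 10.1.9 result -exp(-k|x|)/(2k).
    print(phi_closed_form(0.8, -1.0, 2.0), -math.exp(-2.0 * 0.8) / (2.0 * 2.0))
```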
