CHEMISTRY PROGRAM: Assessment of student learning activities.

CHEM I, II, III, IV

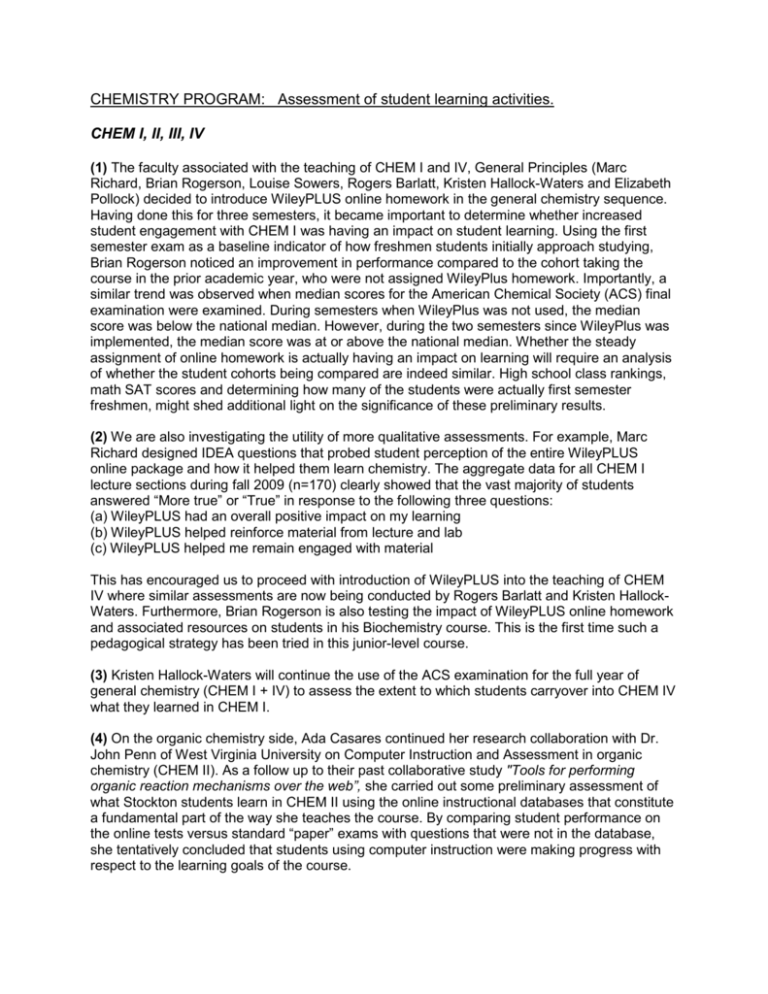

(1) The faculty associated with the teaching of CHEM I and IV, General Principles (Marc

Richard, Brian Rogerson, Louise Sowers, Rogers Barlatt, Kristen Hallock-Waters and Elizabeth

Pollock) decided to introduce WileyPLUS online homework in the general chemistry sequence.

After three semesters of use, it became important to determine whether increased

student engagement with CHEM I was having an impact on student learning. Using the first

semester exam as a baseline indicator of how freshman students initially approach studying,

Brian Rogerson noticed an improvement in performance compared to the cohort taking the

course in the prior academic year, who were not assigned WileyPLUS homework. Importantly, a

similar trend was observed when median scores for the American Chemical Society (ACS) final

examination were compared. During semesters when WileyPLUS was not used, the median

score was below the national median. However, during the two semesters since WileyPLUS was

implemented, the median score was at or above the national median. Whether the steady

assignment of online homework is actually having an impact on learning will require an analysis

of whether the student cohorts being compared are indeed similar. High school class rankings,

math SAT scores, and the number of students who were actually first-semester

freshmen might shed additional light on the significance of these preliminary results.

(2) We are also investigating the utility of more qualitative assessments. For example, Marc

Richard designed IDEA questions that probed student perception of the entire WileyPLUS

online package and how it helped them learn chemistry. The aggregate data for all CHEM I

lecture sections during fall 2009 (n=170) clearly showed that the vast majority of students

answered “More true” or “True” in response to the following three questions:

(a) WileyPLUS had an overall positive impact on my learning

(b) WileyPLUS helped reinforce material from lecture and lab

(c) WileyPLUS helped me remain engaged with material

This has encouraged us to proceed with introduction of WileyPLUS into the teaching of CHEM

IV where similar assessments are now being conducted by Rogers Barlatt and Kristen Hallock-Waters. Furthermore, Brian Rogerson is also testing the impact of WileyPLUS online homework

and associated resources on students in his Biochemistry course. This is the first time such a

pedagogical strategy has been tried in this junior-level course.

(3) Kristen Hallock-Waters will continue the use of the ACS examination for the full year of

general chemistry (CHEM I + IV) to assess the extent to which students carry over into CHEM IV

what they learned in CHEM I.

(4) On the organic chemistry side, Ada Casares continued her research collaboration with Dr.

John Penn of West Virginia University on Computer Instruction and Assessment in organic

chemistry (CHEM II). As a follow-up to their past collaborative study “Tools for performing

organic reaction mechanisms over the web”, she carried out some preliminary assessment of

what Stockton students learn in CHEM II using the online instructional databases that constitute

a fundamental part of the way she teaches the course. By comparing student performance on

the online tests versus standard “paper” exams with questions that were not in the database,

she tentatively concluded that students using computer instruction were making progress with

respect to the learning goals of the course.

(5) In addition, Jon Griffiths shared with the CHEM program the results of a 15-year study using

the ACS examination in organic chemistry as an assessment tool to gauge learning after

students completed the CHEM II + III sequence. The test was administered as a final exam for

the CHEM III course. As with CHEM I, he found that the median performance was below the

national median. Interestingly, when he compared the median score of the period spanning the

first 9 years versus the median score of the most recent 6 years, he found that the latter score

was significantly lower, meaning that recent cohorts were performing less well than earlier ones.

One interpretation was that this may be the result of an increased number of students taking

CHEM III due to recent changes in chemistry requirements made by some NAMS programs.

Whether this resulted in a decrease in the average level of academic preparedness of CHEM III

student cohorts remains to be determined. A more detailed analysis of class demographics is

required to settle this question.

(6) Based on our assessment workgroup discussions, Shanthi Rajaraman has included

questions in her CHEM II and CHEM III exams to test the following:

1. Structure/property correlation (qualitative goal)

2. Plotting and interpreting graphed information (quantitative goal)

To assess their understanding of graphs, she has been incorporating questions involving

graphs/figures from which they are required to interpret information and arrive at answers.

Shanthi has been able to see where the students are getting the concept and where they are

losing it, based on layering of questions on certain topics. These questions are a regular feature

in her tests; she is able to accurately gauge where the students have not grasped the concept

and also judge the level of challenge a student can take on, based on these layered questions

leading from lower level (something that involves slightly more than direct reproduction of

presented information) to higher level (synthesis questions).

(7) An organic chemistry workgroup has been recently formed that is beginning to review and

revise CHEM II laboratories in order to introduce the assessment of specific learning outcomes

for each laboratory exercise. The workgroup is made up not only of full-time faculty but also of

all part-time and adjunct faculty.

Experiential Chemistry (A GNM course)

This course was taught again after a brief absence from the schedule. Marc Richard is taking

over the reins of this course from Jon Griffiths who designed and taught it for many years. Jon is

retiring. This GNM course is unique since it is the only general studies course taught entirely in

the laboratory. A major revision of the course text, originally written by Jon Griffiths, has been

completed. In addition, Marc Richard has begun a two-year assessment of the course in

collaboration with the Center for Workshops in the Chemical Sciences. An outside evaluator

administered a survey and conducted face-to-face interviews with students. This past summer

Marc participated in a week-long workshop conducted by the Center, and the evaluator will be

returning to do a second assessment.

Philosophy and Religion Program

Assessment Document

Working Copy

September 2010

Prepared by:

Prof. Lucio Angelo Privitello, Ph.D.

Associate Professor of Philosophy and Religion, and

Coordinator of the Philosophy and Religion Program

I. Opening Statement:

The study of philosophy entails cultivation in the history of the field along with

the use and proficiency in interpretive abilities founded upon critical thinking and

analytic techniques. In terms of the Program’s most current brochure - the study of

philosophy is undertaken to help develop student capacity for systematic reflection on the

human condition through philosophical analysis of values, with an application, through

proven models, of what they have learned to their lives led as citizens and professionals.

While information is part of this process - historical, theoretical, laws of thought,

argument analysis, and interpretative techniques - the most important lessons in the study

of philosophy entail openness to the wonder of existence, to being in the world, and to the

importance of developing interpretative, analytic, and normative skills so that critical

reflection becomes an intellectual imperative carried out as a joy. For most of the

aforementioned key terms and areas of specialty there are measurable performances, and

yet, as part of the philosophical forma mentis, the exclamation “I know that I do not

know”, when uttered in sincerity is the very materiality of the measure that overwhelms

and at the same time grounds all practical and theoretical problems of philosophy as well

as learning outcome rubrics.

As an undergraduate program in philosophy our goal is to prepare students for the

further professional study of philosophy, along with its practice as a pedagogical art,

while maintaining a vision that a philosophical education serves as a thread within the

labyrinth of cultural emergence. If once philosophy was the Queen of the Sciences, today,

and with our help, it may at least be the Prince of the Humanities. This call to a noble love

of culture serves our students for a preparation no matter what goals they have in mind,

and yet, hones and tests the skills of those who desire to pursue a graduate degree in

philosophy or religion.

II. Program Survey:

Faculty:

Full time: 5

Adjunct: 2

Majors: 19 (as of Sept. 2010)

Minors: 26 (as of Sept. 2010)

General Studies students: (the average number who take

courses in Philosophy and Religion is yet to be compiled)

III. Goals:

Philosophy majors interested in continuing with their studies in the field should

graduate with a certain level of mastery in the historical study of the field, and guided by

faculty members, begin to realize their particular interests (and deepen the use of

philosophical methods and analysis) so that selections of Graduate School study may be

realistically approached, and successfully begun. Because of the size of our Program we

are able to act as mentors in what can be called “apprenticeships in creativity” for the

good of the ideals of our students. For philosophy minors (requiring five courses) the

emphasis should be on the interdisciplinary application of philosophical methods, history,

and interpretative skills to their chosen discipline and area of interest. For majors and

minors as well as general studies students, a community of learning is displayed in

classes as the respect for dialectical debate, argument analysis, and the love of learning

and sharing ways of knowing. For students who take philosophy courses as part of their

GEN program, or as an elective, a goal is to provide an understanding of the nature of

philosophy as practice and theory, and deepen their awareness and interdisciplinary

aspects of critical reading and writing.

IV. Expected Outcomes:

For majors and minors in philosophy:

1. Theory of the philosophical and religious field(s):

a. the history and contemporary state of the field and issues

b. the understanding of the various fields and sub-fields within the disciplines

c. the ability to grasp principles, arguments, and methodologies

2. Practice in philosophical reflection and interpretation:

a. recognizing and analyzing arguments

b. clarity and confidence in oral and written expositions

c. ability to integrate and relate various approaches

d. ease in the research and critical selection of materials

3. Appreciation of Philosophy and Religion (for General Study students, and

majors and minors):

a. the ability to wonder, doubt, and reflect with joy

b. the widening of their cultural background and values

c. the improvement of communication and research skills

d. the development of a community of learners

e. the confidence to make contributions to their social arena

V. Dissemination:

Our Program brochure contains a basic outline of expected learning outcomes for

prospective students. As part of this first introduction, each Program member includes

these expectations, and adds detail to each of them, in their presentations to the Stockton

Open House gatherings that are scheduled twice a semester for prospective students and

parents. These presentations include information on what our more recent graduates are

doing, a record of admissions to graduate schools, placement records, and career choices.

This helps inspire our prospective students, and gives some sense of reassurance to parents

while furthering our expected learning outcomes. In keeping an updated list of the

accomplishments, graduate programs, and degrees of our Program graduates we may

further disseminate and discuss what factors in our aims proved most effective in the

action of continuing a student’s education.

From our Program meeting discussions we maintain notes and periodically

incorporate these changes as part of the updated and edited versions of our Program

brochure. The frequency of changes to the Program brochure is once every two years (on

average). We wish to be able to make changes more frequently, and due to this factor we

are planning a more direct, interactive and timely use of our Program and Division

Website link. In brief, the program’s primary aim is to help develop student capacity for

systematic reflection on the human condition through philosophical analysis of values.

This aim goes hand-in-hand with an application, through proven models, of what they

have learned to their lives led as citizens and professionals.

Course syllabi for Philosophy and Religion courses as well as GEN courses taught

by program members include course objectives for learning outcomes. We place our

course overviews and goals ‘front-and-center’ on the syllabus as “Course Description”,

“Course Objectives”, “Course Goals”, and follow these with “Requirements”, and

“Assessment” sections on how these goals must be attained. As part of our introduction to a

course, and before and after assignments, we outline goals and learning expectations.

Among these objectives are a) the appreciation for the field and study of philosophy

and religion, b) the striving to raise interpretative and critical thinking abilities to

interpret texts and findings, c) the use of analysis and methods, d) participation in

discussions, e) the development of a critical understanding of student beliefs and the

belief and views of others, and f) the joy to explore and be comfortable with raising more

value questions about the world they live in to raise their consciousness of the affective

and social dimension. In the detailed responses to student papers, the actions taken by

each program member are clearly evident and display a wide range of ways of assessing progress

and pitfalls. The incorporation of “re-writes” (and for some courses, multiple versions of

papers) is our touchstone for shared Program-wide assessment findings. For proposed

courses for both the Philosophy and Religion Program and GEN courses there is a

presentation to a college-wide committee that reviews learning goals, the kind of courses

(Tradition, Thematic, Experiential), and general competencies and content experience.

The dissemination, discussion and evaluation of learning measures at this level are

extremely intense and most rewarding. Review for courses over five years old (both

within the Program and in GENs) is also where we review and re-evaluate learning

outcomes and course effectiveness.

VI. Program Assessment History (thumb-nail version):

The Philosophy and Religion Program has, for some years, standardized the

format and expectations for the Senior Seminar, our capstone course. Students are

required to give a formal presentation on a text of philosophical significance used within

the course, along with an oral (and written) commentary on a fellow student’s paper.

The evidence of facility in using advanced research methods and the ability to produce a

15-20 page scholarly paper on a topic at that level has proven successful. As a Program

we have been collecting the Senior Seminar papers in electronic format and have stored, and

continue to store, them on CDs. As will be mentioned below under “Discussion”, the new

course “Philosophical Research Methods” will allow us to take action on what we have

learned and shared as a Program from the collecting of Senior Seminar papers. The need

for a mid-point course to boost the facility for advanced research has already shown itself

as a positive step in the growth of our already implemented assessment stage at the

Senior Seminar level.

The Program has conducted “exit interviews” in which students

indicate their familiarity with major themes and figures in the history of philosophy.

Part of the interview allowed students to report on the Program’s strengths and

weaknesses, and to indicate preferences for types of courses they would like to see

offered. Thanks to the consistent call for offerings in Religion we prepared for, and then

hired our specialist in the field, Prof. Siecienski. This has proven to be a deeply positive

outcome of that part of the exit strategy. Due to this we have decided to adjust the exit

strategy, and recalibrate it into what we are calling a “student narrative” (as mentioned

under “Assessment Measures”).

For a fuller version of our Program’s Assessment activities, discussions, course

load, advising load, and actions, we refer you to the Philosophy and Religion Program

2009-2010 Coordinator’s Report, prepared for and delivered to our Division Dean in May

2010, especially pages 2, 4-5, and 15-20.

VII. Assessment Measures:

Our learning goals (see archived syllabi and the Philosophy and Religion Program

2009-2010 Coordinator’s Report) enable students to understand the nature of the theories

and methods acquired within our courses for use as Philosophy majors and minors. As

part of these goals we count a) corrected and graded papers (originals and re-writes)

where students are given guidelines for improvement, b) in-class presentations that

follow a clear format, c) research projects, and d) the Program’s “Capstone” Senior

Seminar course that is a culmination and intensification of these goals. The frequency of

points a through c is for every class and within the unfolding of a semester. While it is

rare (due to the size of our College, Division and Program) to have courses filled only by

Philosophy majors and minors (with the exception of the PHIL 4600 Senior Seminar, and

perhaps PHIL 3830 “Major Thinkers/Major Themes”), we must balance these goals by the

level of difficulty and intensity of focus in paper topic choices. As part of a response to

this aspect of a class, “topic choices” are commonly used that have a range of difficulty.

Both program courses and GEN courses taught by Philosophy and Religion program

members stress a structure for co-curricular learning, and provide a rich learning

experience for each enrolled and dedicated student. This is an evident match between our

courses and our Program objectives as outlined in our brochure and syllabi, as well as a

working partnership with our Division programs. Examples of these are found in our

syllabi archive.

Basic examples of the experience of assessment measurements begin with class

discussion, student presentations, student commentaries on presentations, as well as

faculty-student meetings (out-of-class interactions), papers and research. The frequency

for these mentioned aspects is during and for each semester course.

As a projected tool for assessment the Philosophy and Religion Program will be

implementing a “student narrative”, most likely begun before the midpoint of their

Philosophy and Religion course studies (and returned to by the student), as a way to gain

more insight into how Philosophy and Religion majors and minors have experienced and

reacted to the courses, level, and workload. This has been based on our Program

discussion on the “exit interview”, and is a re-evaluation of our original document.

VIII. Discussion (Findings):

The learning goals are evaluated, developed and selected from faculty experience,

study, and reflection, and from questions related to student evaluations from the IDEA

forms as well as from faculty-student discussions at the end of the course. This

information is discussed at our Program meetings. Course objectives and work/reading

loads have been adjusted according to the constructive feedback of student questions.

These adjustments are recorded in the yearly Program’s Coordinator’s Report by placing

“Revised” after a specific course. By sharing these findings at our Program meetings, and

in conversations during the semester, we are able to reevaluate both the dissemination

and the learning measures. The archive of syllabi attests to these changes.

i) Assessment Measures (IDEA)

a. For each class taught by Program members we receive

assessment relevance rating scores on twelve learning objectives

that make up the IDEA forms. Students also provide short

written comments. This information is released to faculty.

ii) Frequency

a. For every course (by semester) for untenured faculty

b. For selected courses (by semester) for tenured faculty

iii) Findings

a. Program faculty discusses student comments and adjusts

learning goals and pedagogical aspects accordingly.

b. Discussion to have copies of IDEA forms for all Senior

Seminars available to Program faculty next in line is currently

underway.

For philosophy majors, the implementation of a portfolio is one of the goals we

continue to work towards. This allows students to save their work from the various course

levels, so we may engage them in noticing their development. This factor should also be

stressed when it comes to student course Notebooks. This works best for philosophy

majors. Because our program is relatively small, we are able to engage students and

develop self-assessments because they show up in various courses that we teach, at

faculty office hour visits, at their student Philosophy Clubs, and the PST Honor Society

of Philosophy, as well as at the two Program dinner “socials” we offer. We

are able to assess their work, and show them actual progress, as they move from

introductory to upper-level courses due to the size and teacher-student ratio. We are also

able to discuss how they experienced and gauged our pedagogical approach. Because of

the size of our Program, and the co-curricular spirit of our College, we seek to

accommodate different learning styles, and in a few of the courses this takes the form of

projects that cross the curriculum into the fields of arts, theater, music, and poetry. We

have received positive feedback from Professors in various fields outside of philosophy

on how some of our projects have inspired and captivated their students.

Within each of the courses run by Program faculty, we share the technique of

showing students what represents excellent work in the field. This usually is in the form

of peer-reviewed articles, student articles in undergraduate journals of philosophy,

published Readers, and seminal texts from the history of philosophy. This particular

aspect is presently being refined as we move our new course “Methods” into its second

academic sailing, and will make up part of a “Methods Reader”. We expect great power

and range from how we have discussed the components of this new course.

Having recently implemented the new Philosophy/Religion program course

entitled “Philosophical Research, Writing, and Methodology” – or “Methods” for short,

we feel that assessing student learning will become clearer and more accessible. This is

presently “in the works”. The “Methods” course is now being taught for the second time.

This course is required at a specific time in a student’s learning process, and comes

before students may take upper level philosophy and religion courses and definitely

before taking the senior seminar course. As a midpoint stage to the course progression,

the methods course will allow students to critically review their past work and the work

they will be doing in a course that is all about outcomes and learning goals. It is at this

stage that a creation and subsequent critique of the “portfolio” of student work would

work best and prove most suitable for student learning. It is also a stage where the

“student narrative” will become a guide to both faculty and student assessment. This will

be a powerful tool, if not our most powerful tool, for assessing the changes and progress

in student work. The syllabus for this course, as well as the original course proposal is

archived and serves as a working document and testament to our commitment to achieve

excellence in the field.

As part of the work of program members, assessment, evaluation, re-evaluation,

and action also take place at the level of student philosophy clubs and extra-curricular

activities. For instance, with the Stockton Philosophical Society (SPS), a student-run

Society, we are able to gauge how students take examples and topics from class and

initiate discussions among themselves. We notice which topics, problems, and theories

are more frequently raised. One faculty member is present at these meetings and shares

the outcome at our Program meetings. This is also part of what happens at the

“Philosophy Goes to the Movies” where students use parts of theories to further

understand and enjoy film making techniques and ideas. As a Program we have been able

to see actual student self-evaluations and changes in the approach to the SPS meetings.

We have seen and noted the changes that students have made to the structure of their

meetings, and discuss this at our Program meetings for use in the revision of course

aspects.

For philosophy majors and minors as well as interested Stockton students from all

majors, there also exists a more structured philosophy society, the Phi Sigma Tau

International Honor Society in Philosophy. This is an internationally and nationally

recognized Society, with membership based on a 3.00 GPA and three completed philosophy courses. There is

a student President, Vice-President and Secretary, and one Faculty Advisor. This

provides our Program with a clear gauge of how students have responded and desire to

continue with readings and discussions outside of the classroom. This society (PST) is an

honor society where students graduate with recognition for academic achievement. The

National Newsletter publishes outlines of the meeting topics. Students from our Program

have consistently been recognized for their work, and have also published book reviews

(for their peer-reviewed undergraduate journal Dialogue). We are happy to find how

students re-evaluate the structure of the PST meeting, and share their decisions with

Program faculty.

Students in our Program also voluntarily attend lectures by visiting speakers

invited by our program in the field of philosophy, as well as attend the Classical

Humanities Society Lecture Series at Stockton (six lectures per academic year). This

evidence of care for culture, the learning process, and the development of student

interest continues with the participation of our majors and minors in local philosophy

conferences, such as “The Greater Philadelphia Consortium Undergraduate Conference”, as well

as conferences in NY and RI. These are very insightful markers for us because they are

purely voluntary as far as student attendance and interest are concerned. From the

experience of participating in these conferences students have proposed changes to their

own Philosophy Society (SPS, PST), and wish to expand their reach and organize a

Regional Undergraduate Philosophy Conference. These kinds of actions have given us

much to discuss on the vibrancy of our pedagogical approach.

IX. Precipitating Factors, Concerns, and Assessment Components:

For philosophy majors, fair assessment is best gained by students following the

course progression (Introductory, Mid-level, Upper-level courses) thus picking up tools

and mastering levels of difficulty to prepare them for the direct measurement of their

work within the Senior Seminar with the capstone project. As it stands, we wish to

outline and implement more rigorous “pre-requisites” so as to guide students on this path,

and stress the need for Introductory and mid-level courses to be completed before more

theoretical/historical upper-level courses are entered into. A “Recommendation for

Philosophy Majors” has been proposed, and can be found on pages 49-50 of our 2009-2010 Coordinator’s Report, handed in May 2010.

Due to the size of our College, Division, and Program, and ability to offer

multiple sections of courses, and the restriction on low enrollment in a course, we remain

in discussion about the best way to obtain this course progression. We must be allowed to

adjust course enrollment numbers for this possibility. Without this change in a structured

course progression, assessment is weakened and ultimately suffers. This problem most

clearly shows itself with Transfer students and their previous courses and credits, and

students who enter mid- and sometimes upper-level philosophy courses without prior

courses in philosophy.

Our greatest need is to make sure that our new “Methods” course continues to run

(adjusting enrollment quotas) for without this quasi-Dantean mid-point gauge to student

development and practice in the tools that count for requirements as we have here

outlined and mentioned, assessment in the truest sense of the term would remain in a

pedagogical Limbo.

Part of our continuing vision for student involvement and, in turn, assessment of

student learning, is to have funds available for undergraduate assistantships to reward

dedicated and industrious philosophy and religion students to get a glimpse of the

workings of course preparation and the running of our scholarly societies (Classical

Humanities Society as a key example), as well as lecture preparation. This would prepare

students for future study, employment, and set us closer to the deepest factor in a

philosophical training, namely, mentorship and apprenticeships in creativity and values.

As a final concern for assessment outcomes and with the focus on the preparation

of collected materials, the Program has discussed the need of a paid course release for an

assigned faculty member (one course within two semesters) so as to pool, copy, organize,

and report on the aspects of assessment factors outlined in this assessment document.

This course release would enable us to direct our materials and findings, pass over

student papers, evaluation forms, and all that would help refine and count as evaluations

to a faculty member who in fact will have been granted the time and office to compose

and deliberate on the findings.

ASSESSMENT OF STUDENT LEARNING

CHEMISTRY PROGRAM (2003-2007)

A. Learning goals

What are the particular levels of knowledge, skills, and abilities that our students attain as a

result of their experiences with the Chemistry Program curriculum? In response to this question,

during Spring 1999, the program came up with a list of skills/outcomes we wanted our students

to have/meet when they graduated. The categories were: Basic Laboratory Techniques,

Intermediate Lab Techniques, Instrumental Skills, Chemical Concepts, Quantitative (Science)

Skills, Technical Communication, and Decision Making Skills. This comprehensive list can be

found in Appendix 1.

These goals were primarily intended for our majors (Chemistry [CHEM] and

Biochemistry/Molecular Biology [BCMB]) and were in need of further deliberation. However,

we made no further progress as a program and instead individual faculty experimented with

classroom assessment techniques. With the advent of the institutional assessment of student

learning initiative, in spring 2003 the program decided to charge a committee/workgroup with

(1) identifying a set of learning goals that would be applicable to a broader student population

and (2) formulating an assessment plan. The committee members are Kelly Keenan, Kristen

Hallock-Waters, Shanthi Rajaraman, Bob Olsen, Ada Casares and Brian Rogerson.

The committee identified eight broad learning goals that need to be assessed:

1. Data Analysis Skills (graphing, data interpretation)

2. Molecular Representation

3. Basic Measurement Skills

4. Knowledge of Intramolecular and Intermolecular Forces

5. Recognizing the Properties of Substances: Structure/Properties Correlation

6. Knowledge of Solutions

7. Understanding How to Separate Compounds

8. Understanding Atomic, Molecular and Macromolecular Sizes.

This list is not meant to be comprehensive; it simply constitutes a reasonable starting point.

There is also no suggestion of relative importance at this stage. Each of these broad goals was

expanded into a subset of concepts that are described in detail in Appendix 2. This expansion

resulted in some degree of overlap across the main learning goals. Furthermore, discussions with

program faculty led to the recognition that this overlap was not only necessary but desirable as it

may help student learning and also help us achieve our desired learning outcomes. It should be

noted that since assessment of student learning will always be a work in progress, these goals

will be subject to constant modification, particularly as a result of feedback from assessment

instruments, or because of changing pedagogical interests of Chemistry Program faculty, and at

yet other times because of programmatic changes in the curriculum.

A preliminary discussion concerning appropriate assessment instruments to measure these

learning outcomes resulted in the narrowing of the scope of our initial assessment effort. The

assessment workgroup submitted a recommendation that we initially assess two basic qualitative

goals and two basic quantitative goals:

Qualitative:

1) Understanding what chemical formulas mean and how to properly represent the corresponding

chemical structures.

2) Understanding how to separate compounds.

Quantitative:

1) Understanding what the calculations required for the preparation of a solution are (using

written protocols).

2) Plotting data and interpreting graphed information.

These four goals are described in more detail in Appendix 3 and have been approved by the

Program. The workgroup suggested that these four concepts be used to model the assessment

process because they are among those amenable to assessment across the chemistry curriculum.

The idea is to evaluate how the understanding of the concept matures as the student progresses

through the curriculum. Informally, we have always made the claim that students “learn

something” by the time they graduate. Our intention then, is to provide direct evidence that this

is actually the case.

B. Assessment instruments for measuring the learning outcomes of our majors

We then considered how the learning goals would be assessed, which student populations would

be assessed, and at what stage(s) in their college education they would be assessed. Our thinking

has come full circle in at least one respect. We started out wanting to assess student learning in

our majors, but then broadened the effort to include a number of majors that we serve, such as

Biology, Environmental Studies and Marine Science. However, we are focusing once again on

our majors. One reason is to keep assessment manageable. Another is that our majors tend to be

very consistent about the sequence in which they take their courses. For example, a very limited

survey of the transcripts of 2004 and 2005 BCMB graduates shows that ~75% took CHEM I, II,

III and IV in sequence (while ~13% each took the I, IV, II, III and the I, II, IV, III sequences).

CHEM I and IV correspond to the first and second semester of general chemistry, while CHEM

II and III correspond to the first and second semester of organic chemistry. A third reason we

wish to focus on our majors is that it is easier to track the progress in student understanding when

following students who continue to take more chemistry courses, and these, of course, are our

majors.

As a way to monitor student learning, the assessment workgroup has decided to use embedded

questions to assess the progress of student understanding of the two qualitative and two

quantitative learning goals described earlier. Student understanding will be assessed as they

progress through the Chemistry Program curriculum. We wish to test the idea that repeated

exposure to a particular concept in different course contexts results in a better understanding of

the concept. The idea is to assess global programmatic influences (rather than individual faculty

influences) on students. For instance, questions about plotting data and interpreting graphs will

be placed in the CHEM I → CHEM II → CHEM IV → BIOCHEM → BLM (Biochemistry

Laboratory Methods) course sequence, because it is in these courses where students are plotting

data and interpreting graphs. Furthermore, the questions will be course-specific, with

expectations higher in the more advanced courses.

These instruments were discussed by the workgroup during the summer and early fall of 2006.

We have asked all interested faculty to identify candidate questions in their tests for this purpose.

At this point we are not concerned about format, just identifying questions that meet the spirit of

the assessment, although we did have detailed discussions on how best to construct these

questions. As a way to assuage any fears, we have pointed out that whether a student does well

(or poorly) on an embedded question while in a particular class (i.e. with a particular teacher) is

not what should concern the Program. Rather, what matters is whether students show consistent

progress over time in their understanding of the concept and hopefully by the time they graduate.

Again, what is being addressed is the influence of the program as a whole. The hypothesis is that

exposure in different contexts (i.e. different courses) is the key to fully understanding a concept

or mastering a skill. If a particular student does not “get it” while in a particular course, they may

do so in the next course. Students have different ways of learning and faculty have different

ways of teaching, so it should be obvious that it is our joint effort (and not our isolated efforts)

that plays the major role in ensuring that our graduates are well prepared.

In order to make this experiment manageable we are planning to assess the learning exhibited by

cohorts of students (rather than by following individual students), and samples of students (rather

than all students).

During Fall 2006 and Spring 2007, workgroup members have been testing such embedded

question instruments to assess two learning goals that the program identified as essential: 1)

Understanding what chemical formulas mean and how to properly represent their chemical

structure (a qualitative goal), and 2) Plotting and interpreting graphed information (a quantitative

goal). Data from tests in CHEM I lab, CHEM II lecture, and Biochemistry lecture have been

collected and analyzed. Additional data will be collected and analyzed by the end of the spring

2007 semester. Indeed, all of the CHEM program faculty have been invited to participate to

increase the course coverage and example questions. We will then evaluate how effective the

instrument is at identifying proficiencies or deficiencies in student learning. Our expectations are

that the results from such assessments will guide how we modify our teaching to address any

deficiencies we may identify in student learning outcomes.

C. Earlier/Current assessment of student learning efforts

A number of us (Olsen, Hallock-Waters and Rogerson) are already experimenting with several

American Chemical Society standardized tests (Toledo, First-term and Two-term exams) for

measuring learning outcomes in general chemistry. We are assessing skills and knowledge in a

number of areas at the beginning and end of CHEM I (single course analysis), or the CHEM I,

IV sequence (combined course analysis). In the latter case, we are trying to determine the degree

to which students taking CHEM IV have retained any understanding from CHEM I. This work is

experimental in nature and aimed at determining whether these instruments are appropriate for

basic assessment of learning outcomes in general chemistry.

One critical issue for the Chemistry Program has been freshman performance in CHEM I, the

very first chemistry course students take at Stockton, and very likely the one that leaves a lasting

impression on students concerning our Program. We have noticed (as others have done at other

institutions) a significant withdrawal frequency reflecting a substantial fraction of students

struggling with college-level chemistry. Our concern translated into a study that we carried out

over several semesters during the 2002 and 2003 academic years involving most of the CHEM I

section instructors.

The study was led by Bob Olsen and Brian Rogerson, and its results were presented at the

2004 Day of Scholarship by Dr. Olsen. We asked the question: Can success in CHEM I be

predicted? We attempted to answer this question by administering the Toledo Chemistry

Placement Examination during the first week of class. It is a standardized test designed by the

American Chemical Society, which consists of 20 questions on general mathematics, 20

questions on general chemical knowledge and another 20 questions on specific chemical

knowledge. Student background is assumed to include one year of high school chemistry and one

year of high school algebra. Not surprisingly, students tend to perform better on the general

rather than the specific chemical knowledge sections. However, what interested us was their

performance in the general mathematics section. When distributions of the student Toledo math

scores were plotted versus their final grades for the course, a trend suggesting a link between

quantitative skills and success in the CHEM I course became evident. Such a correlation was

also evident, but less striking, when distributions of student math SAT scores were plotted versus

their final grades.

As we proceed with our assessment of student learning plan, it may be possible (with further

study) to use the Toledo math scores to identify students at risk in CHEM I. Such early

intervention may help put these students on a path that leads to more favorable learning

outcomes in CHEM I and future college chemistry courses.

D. Additional learning outcomes we wish to assess

We are also considering assessing student proficiencies at the senior research project/internship

level. We would like to develop a common assessment rubric that will measure student

understanding of the research experience in their written theses and oral presentations. Among

the desired learning outcomes we wish to measure are hypothesis formulation, understanding of

project significance, data interpretation, grasp of relevant data, awareness of related literature,

and understanding of the application of basic biological/chemical principles in their research

projects. We hope this will allow us to introduce some consistency into the research experience

particularly when it comes to assessing intramural versus extramural student projects. The plan

would be to have research mentors/sponsors meet at the end of each semester to discuss the

rubric scores and determine which goals require greater attention. Modifications would then be

introduced into our teaching of subsequent research students to remedy any past outcome

deficiencies.

There are also non-curricular instructional goals to consider (adapted from Angelo and Cross’

Teaching Goals Inventory and listed in Appendix 4) such as developing a commitment to

accurate work and improving oral presentation skills. Some of the listed goals will be readily

amenable to assessment if instruments are designed or chosen properly for the qualitative and

quantitative goals listed above. Other goals may require their own assessment instruments.

E. How do we define success?

While the Program has not decided what will constitute a “satisfactory” percentage of students in

terms of meeting our learning goals (or other measured outcomes), the Middle States assessment

booklet “Options and Resources” can serve as a guide. The Teaching Goals Inventory (page 23)

suggests a rating scale that could be used for the goals chosen by the Program:

Essential: A goal you always/nearly always try to achieve (76% - 100% of the time)

Very Important: A goal you very often try to achieve (51% - 75% of the time)

Important: A goal you sometimes try to achieve (26% - 50% of the time)

Unimportant: A goal you rarely try to achieve (1% - 25% of the time)

Not Applicable: A goal you never try to achieve

If writing a good scientific manuscript is identified as an essential program goal, or if a particular

concept in the chemistry curriculum is identified as an essential program goal, we could ask if it

is being achieved > 75% of the time. The Program, of course, could come up with its own

guidelines and definitions of success. Middle States allows for this, and it is our prerogative.
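
As a rough illustration of the "> 75% of the time" criterion, the rating bands quoted above can be written as a small lookup. This is only a sketch under stated assumptions: the function names `rating_for` and `meets_essential` are hypothetical, and the handling of values that fall between the published bands (for example 75.5%) is a choice made for this example, not part of the Teaching Goals Inventory or of any Program policy.

```python
def rating_for(percent_achieved: float) -> str:
    """Map a percentage (0-100) to the rating band quoted in the text.

    Assumption: values between published bands (e.g. 75.5) are assigned
    to the higher band; the source only lists whole-number ranges.
    """
    if not 0 <= percent_achieved <= 100:
        raise ValueError("percent_achieved must be between 0 and 100")
    if percent_achieved > 75:
        return "Essential"
    if percent_achieved > 50:
        return "Very Important"
    if percent_achieved > 25:
        return "Important"
    if percent_achieved > 0:
        return "Unimportant"
    return "Not Applicable"


def meets_essential(students_meeting_goal: int, students_assessed: int) -> bool:
    """Hypothetical success test: is the goal met more than 75% of the time?"""
    if students_assessed <= 0:
        raise ValueError("students_assessed must be positive")
    return 100 * students_meeting_goal / students_assessed > 75
```

For example, under these assumptions a cohort in which 16 of 20 sampled students meet a goal (80%) would pass the test, while 15 of 20 (exactly 75%) would not.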

Also, we know that a number of our CHEM and BCMB graduates go on to graduate school.

Others get jobs in academia or industry as lab technicians or research assistants. If graduate

school acceptance and placement in field-related jobs is identified as an essential program goal,

we can ask if it is being achieved > 75% of the time. In those cases where graduate school

acceptance requires a good score on an examination, it could be argued that entrance exam

performance is connected to what they learned at Stockton. In contrast, gaining employment says

little, if anything, about any student learning that may have occurred while at Stockton.

Employer satisfaction surveys also do not address what students learned while at Stockton. More

direct measures are necessary to determine whether learning is taking place. This is why we are

developing the embedded question assessment instrument (described above). If assessment

results indicate that certain learning goals are not being met, the program will introduce

curricular changes in an attempt to correct any such deficits in future student cohorts.

F. Obstacles to assessment of non-majors

It is useful to be reminded of what (and how many) chemistry courses Stockton students take.

The list below also highlights the broad student population that we serve (our majors are

labeled "(Majors)"). The Chemistry Program teaching responsibilities affect the following numbers of

Stockton graduates: ~10 chemistry majors/year, ~15 BCMB majors/year, ~120 biology

majors/year, ~30 environmental studies majors/year and ~30 marine science majors/year. The

challenge we face in assessing student learning in such a broad student population is significant,

mostly because non-majors take a limited number of chemistry courses and in many cases, can

do so at any time during their tenure at Stockton (i.e. as early as their freshman year or as late as

their senior year).

BCMB (Majors)

CHEM I, II, III, IV, Biochem, Biochem Lab Meth., BCMB 4800 (Senior research)

Survey of Inst., Adv. Biochem., P. Chem

BIOLOGY (Biotech track)

CHEM I, II, III, IV, Biochem (Survey of Inst. and Biochem. Lab Meth.)

BIOLOGY (General/Integrative track)

CHEM I, II, IV, CHEM III or Biochem

BIOLOGY (Pre-med track)

CHEM I, II, III, IV, Biochem (Survey of Inst. and Biochem. Lab. Meth.)

BIOLOGY (Pre-PT, and MPT-accepted)

CHEM I, II

CHEMISTRY (Standard track, ACS) (Majors)

CHEM I, II, III, IV, Inorg, Org. Lab., Lab. Meth. I, II, P. Chem I, II, CHEM 4800 (Senior

research), Biochem

CHEMISTRY (Environmental track) (Majors)

CHEM I, II, III, IV, Envl, Lab. Meth. I, II, Atmospheric, CHEM 4800 (Senior research)

ENVIRONMENTAL STUDIES

CHEM I, CHEM II or CHEM IV

GEOLOGY

CHEM I, CHEM II or CHEM IV

MARINE SCIENCE (Marine Biology, Marine Resource Management tracks)

CHEM I, II

MARINE SCIENCE (Oceanography track)

CHEM I, IV

PUBLIC HEALTH

CHEM I

Chemistry Assessment: Biochemistry

Biochemistry is a class that cuts across traditional disciplines of chemistry and biology.

Students in the class have taken prerequisites in chemistry as well as biology. It was observed

that students thought of some molecules as belonging to biology and others to

chemistry. In particular, students regarded DNA and genes as

the realm of biology and proteins as the realm of chemistry. In semester after semester, the

students scored poorly on questions that asked them to relate the protein and the gene.

In order to address this, two changes were made in the class. The first involved the use of

articles that were related to class material but not specifically covered in class. For example,

one of the topics in Biochemistry involves serine proteases. An assigned article describes the

link between one such serine protease and the role it plays in Alzheimer’s disease. The article

described the gene involved as well as the role of the protein encoded by it, knowledge that led

to potential medicines.

Another change in Biochemistry is the use of presentations. Students were put into groups and

assigned topics which often dealt with various diseases. Students were asked about the

defective protein in the diseases as well as what approaches can be used to treat them. Some

treatments aim to replace or overcome the loss of the protein and others aim to add a normal

version of the gene. Similarly to the articles, the questions asked about these diseases cut

across the biology and chemistry disciplines. It has become important for students to master

this understanding of the relationship between the protein and the gene now that entire

genomes have been sequenced and efforts have been made to identify all of the proteins.

Since the articles and the presentations have been used, there has been an improvement by all

students in those questions that cut across disciplines and address proteins and genes. The

overall average for the scores on these questions is similar to that of other material.

Chemistry Assessment use- CHEM I (CHEM 2110, Chemistry I, General Principles)

The decision by the CHEM I/IV workgroup (six faculty members) to use the WileyPLUS online

homework system is an example of how a group of faculty put assessment data to work. There

was a recognition that students were not engaged with the material. Student surveys revealed

that a substantial proportion of them were spending very little time studying for the course and

were not bothering to do homework (most instructors assigned it, but did not grade it). This

lack of engagement was reflected in their quiz and exam grades. When one of the instructors in

this group started doing daily (non-graded) in-class assessments, he noticed a clear drop in

withdrawal frequency, but unfortunately only a very modest improvement in academic

performance at the low end of the grade scale (an increase in Cs and Ds). This was very

disappointing, but reviewers argued that the in-class assessments were "helping" the marginal

students because they were serving as a partial substitute “for the study routine that is the

staple of the more able student”. This in-class assessment research was presented at the 5th

Annual Lilly Conference on College and University Teaching (2001) and published in the Journal

of Chemical Education (J. Chem. Educ. 2003, 80, 160-164).

Members of the CHEM I/IV workgroup always talked about the lackluster performance of our

CHEM I students. Evidence for a lack of student engagement was mounting and so the group

implemented the WileyPLUS online homework system, which is gaining widespread use at

other institutions.

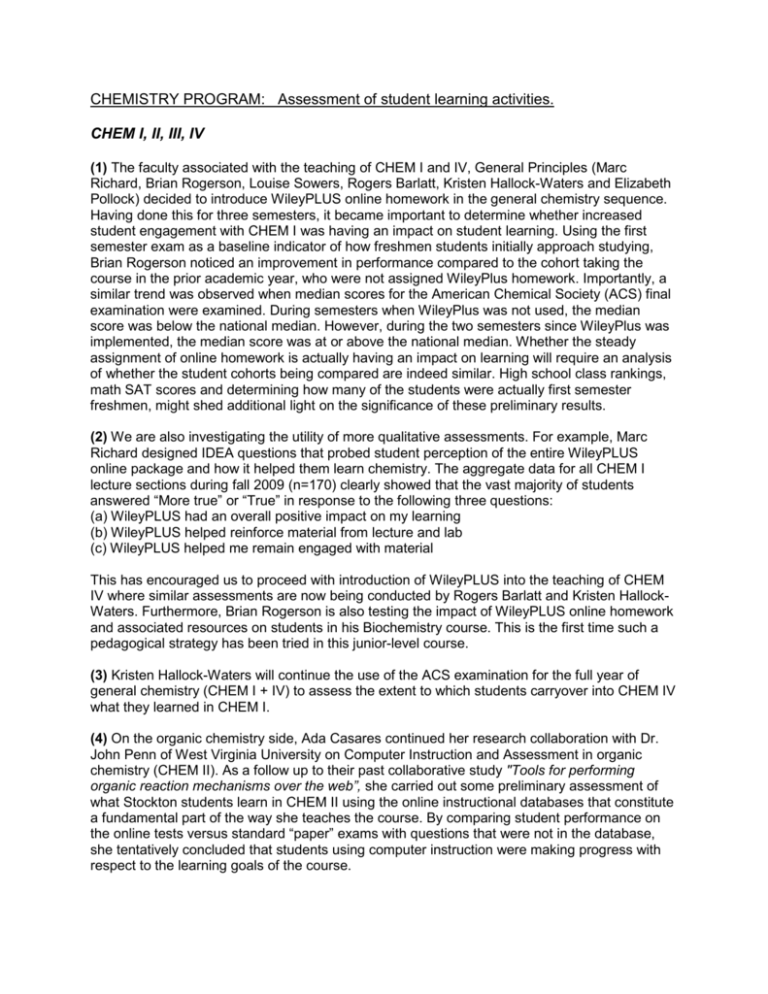

During the three semesters since the implementation of this tool, the workgroup surveyed

CHEM I students about their perceptions of WileyPLUS. Students report that they are more

engaged and that the steady regimen of homework helps reinforce the material. This is

consistent with the improved scores that one instructor is getting on his final exam, a

standardized American Chemical Society (ACS) test. The ACS test is measuring the proficiency of

the students that did not withdraw from the class.

Many questions remain to be addressed. For example, it is not yet known whether the ACS data is generally true

for all CHEM I instructors (not all instructors use this tool as the final exam) and whether class

demographics have an impact (the freshman/non-freshman ratio varies from section to

section). The impact of class module and whether it was a fall or spring offering is slowly

averaging itself out. The instructor in question has taught CHEM I at many different times, in all

kinds of rooms, both fall and spring.
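
The comparison of a class median with the national median on the ACS exam can be sketched as follows. This is a minimal illustration only: the helper name `compare_to_national` is hypothetical, and the scores and national median used in the usage note are made-up placeholders, not Program data.

```python
from statistics import median


def compare_to_national(scores, national_median):
    """Return the class median and whether it is above, at, or below
    the national median for the same exam.

    `scores` is a list of numbers of correct answers for one cohort.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    class_median = median(scores)
    if class_median > national_median:
        status = "above"
    elif class_median == national_median:
        status = "at"
    else:
        status = "below"
    return class_median, status
```

With placeholder scores `[30, 35, 40, 45, 50]` and a placeholder national median of 38, the function reports a class median of 40, which is above the national value. A comparison of this kind says nothing by itself about whether the cohorts are similar, which is one of the open questions noted above.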

Use of WileyPLUS correlates with an improvement in the median score on a standardized test

[Figure: two histograms of ACS test results for CHEM I, each with arrows marking the "Class" and "National" values. Panel 1: No WileyPLUS, n=233 (Fall01, Spr02, Fall03, Spr04, Spr06, Fall07, Spr08). Panel 2: With WileyPLUS, n=86 (Fall08, Spr09, Fall09). Horizontal axis: Number of correct answers (out of 70). Vertical axis: Number of students.]

Chemistry Assessment use- Physical Chemistry

When the instructor for Physical Chemistry I taught it for the first time in Fall 2007 he noticed

that students were having a very hard time with the mathematics of physical chemistry. This

was true both of the mechanics of calculus (i.e. how to take an integral or derivative) and of

the conceptual understanding of the calculus (what exactly does a derivative mean). He

observed this through class discussion. He was spending more class time than he had expected

going over material from Calculus I and when asked, students did not understand what these

mathematical observations meant in a chemistry context. In addition, he noticed on

homework and exams that a major obstacle to understanding the chemistry was the math,

and students were not performing well.

To address this situation, the instructor applied for and received funding and training through

the Summer Tech Academy to develop mathematics tutorials for Physical Chemistry students.

The instructor prepared a series of five video podcasts (available on the course blog) that had

several goals:

1. Help students with the mathematics so the underlying chemistry concepts are clear

2. Develop an understanding of both the concepts of calculus and the mechanics

3. Use online tutorials to cover the math topics outside of class and on-demand

4. Include problem solving strategies whenever possible

The tutorials outlined the mechanics that they should have developed in their previous calculus

courses as well as what these things meant in the context of physical chemistry (conceptual

understanding).

In Fall 2008 (the first semester with the podcasts) the instructor administered a pre- and post-semester math skills inventory to assess the impact of the tutorials on the students'

understanding of both the conceptual ideas and the actual mechanics. An increase in most

areas was observed (both conceptual and mechanical), especially in the area of partial

derivatives (which is a new concept not covered in the prerequisite calculus classes).

The instructor shared the results of this work in a seminar entitled “Screencast tutorials in

mathematics and problem solving for physical chemistry” at the 2009 Day of Scholarship and at the

2010 Biennial Conference on Chemical Education in Denton, TX.

Communication Studies Program Assessment of Student Learning

Introduction

As a collective faculty, we have not conducted any formal research regarding student learning;

however, we have had numerous Communication Majors make great accomplishments, both

inside and outside of the college. We believe that much of what we do in the Communication

Studies Program is based on theories of applied learning. We, therefore, concur that our students

have been able to successfully apply what they have learned in their Communication courses

here at Stockton. Below is an incomplete list of some of those accomplishments. We can also

produce copies of some of this material if requested.

Student Presentations

In the spring of 2007, approximately 30 students from the Communication Seminar Course

attended the 11th Annual New Jersey Communication Association Conference, held at Kean

University. Approximately 20 of those students presented on a panel entitled, “Communication

and Popular Culture.” Presentation titles included: MTV and its Impact on Music, Reality

Television, and Youth; The Beatles Impact on Popular Music; The McDonald’s Corporation and

its Impact on Family Interaction; The Impact of AOL Instant Messaging on Interpersonal

Communication; and The Impact of Cell Phones on Interpersonal Communication.

Jobs in the Field & Graduate Programs – 1989 – 2010

1998

• Andrea Gabrick (Fall 1998, Program Distinction, Magna Cum Laude) was offered a full-time

position in a media organization in Arlington, Virginia before she had completed the

internship there.

1999

• Janel Keller (Spring 1999, Program Distinction) was offered a part-time position after her

internship at Shore Memorial Hospital in Somers Point. The intern site created the job for her

as they were very pleased with her internship performance.

• Susan Evrard (Spring 1999, Program Distinction), who was a senior reporter and editor for

ARGO, was offered a reporter position at the Asbury Park Press. According to Allison Garvey

of AP Press, “With each assignment Sue exhibited a professionalism and curiosity that signal

a real calling to a career as an outstanding beat reporter.”

• Amanda Keyes (Spring 1999) became an assistant manager at Unitel-Wireless

Communications Systems in Egg Harbor Township.

• Jennifer Wilson (Spring 1999) was offered a formal position for camera work at WMGM,

NBC, Channel 40.

• Kevin Barrett (Spring 1999) received a position for data management in Philadelphia.

• Joshua Gershenbaum (Spring 1999) was hired as a post-production technician at Superdupe

Recording Studio at Madison Ave., New York.

• Lori Rao (Spring 1999, Program Distinction) received a full-time position at Edison Media

Research.

• Andrea Levick (Fall 1999, Program Distinction) was asked to take on a part-time position

within the Programming Department of 100.7 WZXL because of her excellent work in data

management and public relations.

• Debra Bessetti became a part-time copywriter for Equity Communications after her

internship.

• Marc Strozyk was asked to stay at Ginn Computer Company, Ocean City with a starting

salary of $800 a week.

• Regina Higbee was offered a decent job at Bell Atlantic Network Services in South Plainfield.

• Bethany Farms got accepted in the production team of a Philadelphia TV station.

• Matthew David Finelli (Fall 1999) accepted a job at a local advertising agency.

• Tim Campbell (Fall 1999) got a job at TNS as a part-time cameraman.

2000

• Anthony Fiumara was awarded a full-time job in his internship company with an annual

salary of $38,000.

• Pamela Reistle received a full-time job offer from her internship company Compas Inc. in

Cherry Hill.

• Frank Tedesco, who completed his internship at WMGM, NBC, Channel 40 in 1999, received

a full-time job offer as a radiographer.

• Jennifer Wilson also got a video production staff position after her internship at WMGM.

• Shawn Rody (Fall 2000, Program Distinction) was hired as a TV production person in

Manhattan when he had completed his internship at Cronkite Productions Inc. in New York.

• Kathryn Player (Spring 2000, Program Distinction, Summa Cum Laude) received admission

notices with financial scholarship from several prestigious universities. She chose to complete her

graduate study in Sports Management at Temple University with a full assistantship.

2001

• Wesley Schnell (Spring 2001, Program Distinction) played a leading role in Communication

Society and maintained a GPA of 3.9. He received a number of scholarships and other honors

during his studies at Stockton and was listed in Who's Who. With strong recommendations

from James Shen and Joe Borrell, he attempted his internship first at the CNN headquarters

in Atlanta, Georgia, and later ended it in Washington D.C. for political communication and

congressional reporting.

• Minority student Llewelyn Glover (Spring 2001, Program Distinction) did extremely well in

his communication and theatre studies. With the reference letters from James and others, he

was admitted with a scholarship to the film school of Chapman University in California.

• Elizabeth Makoski completed her internship at New Jersey Motion Picture and Television

Commission in Newark, NJ. Her media-related experience and computer skills won her a

position as manager associate in Commerce Bank before she walked through the

commencement. Her starting salary was $33,000.

• Right after her graduation Kari Biehler was hired as a commercial account executive by

Pitney Bowes, a Fortune 500 company. She impressed the employer with her solid computer

application skills, and the marketing and media experience she obtained from her internship

at the Press of Atlantic City.

• Christopher Nagle got his DJ job through his internship experience at K Rock 98.7 & The Coast

WCZT 94.3.

• Guy Zompa got his PR job in Absecon before graduation.

• Nicole Muscarella impressed the Manhattan boss with her oral, written and computer skills

and organizational insight during her internship at Koch Entertainment on Broadway, New

York. She was offered a formal job position with $41,000 before she finished her degree

studies at Stockton.

• When Lisa Easter completed her internship at Atlantic Cape Community College, she was

asked to take an administrative position with the mid 30’s. But she found a better position at

Slack Inc. in Deptford as a marketing research assistant.

• Nick DeLuca found a corporate position soon after his internship at KYW.

• Christina Soffer (Fall 2001, Program Distinction) created a good performance record for her

internship in a real estate business in northern New Jersey. She received a job offer at WHD

(Whole Home Direct) with $35,000 and full benefits right after her graduation.

2002

• Brian Garnett interned at 102.7 WJSE Somers Point, NJ last summer. Because of his excellent

work performance, he took a job offer from the radio station before his graduation from

Stockton.

• Kelly Washart (Spring 2002, Program Distinction) was offered a position of PR Assistant in

Parker & Partners Marketing Resources, L.L.C. because her internship performance impressed

the company supervisors.

• Damon Scalfaro received a job offer at Australian Trade Commission with lower 30’s after

he completed his internship at the Embassy of Australia in Washington, D.C.

• Kim Pinnix (Spring 2002, Program Distinction, Cum Laude) got a full-time position of

marketing assistant at Seashore Gardens Living Center because of her internship experience

at American Society for Engineering Education in Washington D.C.

• Suzanne Wavro (Spring 2002, Program Distinction, Cum Laude, Transfer Scholar

Award, Antoinette Bigel Studies Endowed Scholarship) was accepted by Stockton Education

Program.

• Lisa Boccuti (Fall 2002, Program Distinction, Cum Laude) accepted a job as a marketing

associate of a pharmaceutical company in South Jersey.

• Katy Smith (Fall 2002, Program Distinction, Magna Cum Laude) received a job offer from

Access Response, Inc., an advertising company in Toms River, on the strength of her successful

internship experience in PR. The new alumna even offered internship positions to communication

majors in 2003.

2003

• Lapell Chapman finished his internship at Pleasantville High School, where he operated the

Media Distribution System, performed on-site camera operations for sports events and

other activities, and controlled the sound system for board of education meetings. His

excellent performance earned him a dual position as K-12 teacher and director of the TV

station with a starting salary of 45K.

• Kelly Vance became the promotions and marketing coordinator of the Atlantic City

Boardwalk Bullies Hockey Team after she completed her internship in promotion and

advertising there.

• James Dunn did his radio internship at WAYV 95.1, Atlantic City, and received a job

offer there due to his outstanding work performance in radio production.

• Robyn Porreca’s internship experience with WRAT 95.9 in S. Belmar helped her gain the

position of promotion support staff.

• Melissa Gonzalez, who impressed her internship supervisor at Millennium Radio Group with

her outstanding work in radio promotion, copy writing, and advertising, found a position as

conversion sales representative for R.J. Reynolds at Wright Consulting in Philadelphia with a

salary of 32K. She worked as a city manager with another communication alumna, Kelly Rich.

• Kari Spodofora did her internship at Gilmore & Monahan Attorneys & Law, Toms River.

Her positive attitude and work performance in legal communication management were greatly

appreciated by the lawyers. With her education at Stockton and her internship experience, she

was admitted into the Law School of Rutgers, Camden in fall 2003.

• Jennifer Kane (Spring 2003, Program Distinction, Magna Cum Laude) found an

administrative job at Ocean County College. She was later accepted as a graduate student in

the MAIT Program at Stockton.

• Sharon Giordano (Fall 2003, Program Distinction, Magna Cum Laude) was hired by Stockton

as Assistant Director for Community of Scholar in 2004.

• Stephanie Grossman was offered a job at the Government Affairs Office of the ExxonMobil

Corporation, Washington D.C., in February 2003. Within six months she was promoted to

Congressional Staff Assistant, working with the lobbyists of Exxon for the House and

Senate. In Spring 2004, she was accepted into the Columbian Graduate School of Public

Administration at George Washington University.

2004

• Kelly Lenzi did her internship at the Miss America Organization in Atlantic City. Her

outstanding performance and communication capability won her a producer position before

she finished the seasonal work there.

• When Erin Foster finished her internship at ImageMaker, Brick, NJ, she got a job at Lanmark

Advertising in Eatontown in October 2003. She started as a marketing associate at $29K.

• Leah Kuchinsky got a job at AC Coin & Slot as their sales coordinator after she completed

her internship at Taj Mahal.

• At the Maryville Foundation in Franklinville, NJ, Laurie Emhe did her internship in fundraising

and social investigation for the Chair of Camden County Freeholder Thomas Gurick. Her

independent research work and grant writing were considered efficient and professional,

“providing valuable information concerning capitalization of foundations.” She was awarded

a position in October 2003 as Human Resource Manager/Grant Writer for Maryville

Addiction Services (non-profit) in Williamston. Her starting salary was $37,500, to be

increased to $40,000 in 3 months.

• Esther Guzman did her internship at UniVision. Her positive attitude and work performance

in communication management were greatly appreciated by the Hispanic media organization.

She was offered a position soon afterwards.

• Jessica Batha (Spring 2004, Program Distinction, Cum Laude), who did an advertising/PR

internship, found a job as assistant to the Account Executive.

• Cindy Coppola, who received a very positive internship evaluation from the Miss America

Organization, was accepted by both California State University at Los Angeles and

California State University at Fullerton in fall 2004. She went to the latter for her graduate

study in Communication/Public Relations.

• Kim Troncone (Fall 2004, Program Distinction, Summa Cum Laude), with dual degrees in

Communication and Education, obtained a teaching position in a public school in South

Jersey.

2005

• Cannella Autumn, who interned at the American Cancer Society, Philadelphia, was hired as

marketing sales associate at Fashion Biz, NYC, in fall 2005.

• Terrence Field got a job at Human Capital Management, Inc., Valley Forge, PA, an IT

consulting business, after completing his internship in spring 2005.

• Allison L. Venezio did her internship at KYW-TV 3, CBS, Philadelphia. She not only participated in

many production events but also aired a show that she directed and produced.

• Sarah Hamilton was admitted to the Master’s Program in Journalism at Regent University, Virginia

Beach, VA.

2006

• Sharon Giordano and Bojan Zilovic were admitted into Stockton’s MAIT Program in fall 2006.

• Barbara Knopp-Ayres was hired by Absegami High School as Media Assistant.

• Lisa Diebner interned at WMCN DT-44 TV in Atlantic City and was hired as

production assistant at Music Choice, New York City, on 10/16/06.

• Scott Holden is Staff Reporter of The Central Record in Medford, NJ (per e-mail of 11/16/06).

• Lauren Shapiro received an admission notice from the graduate school of the Communication

Department of Johns Hopkins University.

• Brian Coleman, who interned in Washington D.C. in fall 2006, received a job offer as

Continuity of Operations Specialist for the Department of Defense, Washington

Headquarters Services. Nominated by the Mass Communications Advisor to be featured in

the e-newsletter that the Washington Center regularly sends to prospective interns, Brian was

asked to deliver a speech as the Mass Communications representative at the scheduled TWC

commencement on December 11, 2006.

2007

• Elizabeth M. Runner’s application to the Peace Corps was accepted by the organization’s

Administrations Office, Washington D.C.

• Vanya Kasakova, a spring 2007 communication graduate, was accepted into the MAIT Program.

• Jill Pokus, a communication graduate and the Program Distinction Honor recipient in May 2005, was

hired as Associate Center Manager and Marketing Manager of Kaplan Test Prep and

Admissions with a starting salary of 36K.

• Communication senior Aja Graffa interned with CNN in Washington D.C. in fall 2007. Aja

received a job offer from CNN right after she completed her internship.

2008

• Darlene Dobkowski was admitted into the fall 2008 Graduate Program of Communication at

Emerson College.

• Jeff Schiffer was accepted into the Graduate School of Communication at Monmouth University

in spring 2008.

• Jaclyn Malley, a 2006 communication graduate and an associate producer at Red Films, New

York City, was admitted into the Graduate School of Corporate Communication, Monmouth

University, in fall 2008.

• One of James’ former advisees, Sherri Alexandersen, who completed her MAIT studies at

Stockton with the honor of the Founders Award in May 2007, landed a job as a Multimedia

Designer for the aerospace firm Lockheed Martin in fall 2007.

• Another of James’ former advisees, Kimberly Pinnix, graduated from Drexel University with an

M.S. Degree in Public Communication (Publishing track, GPA: 3.88).

• In 2008, there were at least five communication majors working at the Press of Atlantic City

as full-time or part-time employees, including three recent graduates, Rachel Guevara,

Jennifer Hults, and Kelly Ashe, and the recipient of the Charles C. Reynolds Endowed

Journalism Scholarship, Brittany Grugan.

• Darlene Dobkowski, a fall 2007 graduate now studying at the graduate school of journalism

at Emerson College, received an internship position at www.boston.com.

2009

• Reyanna Turner was accepted at The University of Maryland, College Park, and Towson

University.

• Joshua Hoftiezer was admitted into the fall 2009 graduate program (writing) of the

communications department, Rowan University.

• Graduating senior Jessica Grullon interviewed Governor Corzine during her fall 2009

internship with NBC40 WMGM-TV. She received a very positive evaluation from the news

director for her media writing and Spanish speaking skills.

• Justin Dolan was hired by MTV in New York City in 2009.

• Bojan Zilovic, a 2005 graduate, was hired by ACCC as an assistant professor of

communication in 2009.

• Jennifer Giardina, ’09 summer graduate, received a position as a part-time Production

Assistant with NBC40 WMGM-TV on November 4, 2009.

• Aneta Soponska completed a post-graduation practicum at the New York Times, New York, in fall 2009.

She is now working full-time in Poland.

• Celeste George, a 2004 graduate, served as the art director of Artcraft Promotional Concepts

in Moorestown, New Jersey.

2010

• In spring 2010, Christa Cycak interned with MTV in New York City. She took on many daily

responsibilities for production management projects at Comedy Central:

o Coordinated a number of producers and freelance workers for TV shows

o Designed spreadsheets for nationwide contacts and crew travel

o Prepared financial packets for the accounting department

o Performed TV editing

o Assisted with different projects, including a new reality show in downtown New York

City.

• During his internship with Atlantic Prevention Resources in fall 2009, William Walsh

helped produce a documentary in support of banning smoking in Atlantic City casinos.

• Lyndsey Watkins worked with the NJ Department of Environmental Protection in Leeds

Point. Her internship involved data management and web site improvement.

• Christy Barbetto interned at Fastenal, Hammonton, NJ, and received a manager’s position

before she completed the internship in spring 2010.

• Jennifer Jade Lor performed an internship in the Southern New Jersey Office of U.S. Senator

Robert Menendez during the summer of 2010. She received a very positive evaluation for

“her effective communication and organization skills.”

• Emily Lingo did her internship at Clear Channel Communications in Philadelphia in the

summer of 2010. She updated the station’s website, coordinated many events, and was

commended for her good communication management skills.

• Andrew Moore went through the WLFR Internship and is now one of the WLFR student

managers. He currently writes for the music magazine Origivation

(http://www.origivation.com/).

Student Publications