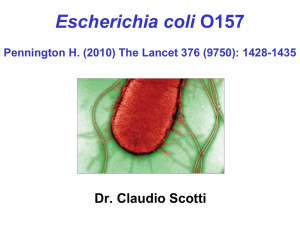

Field Epidemiology
