Identifying and Understanding Dates and Times in Email

advertisement

Identifying and Understanding Dates and Times in Email

Mia K. Stern

Collaborative User Experience Group

IBM Research

1 Rogers Street

Cambridge, MA 02142

mia_stern@us.ibm.com

ABSTRACT

Email is one of the “killer applications” of the internet,

yet it is a mixed blessing for many users. While it is vital

for communication, email has become the repository for

much of a user’s important information, thus overloading

email with other responsibilities. One way in which users

overload their email is by using it as a calendar and a todo list. People keep in their email reminders of meetings,

events, and things to do by some deadline, all of which

contain dates and times. Unfortunately, these emails can

get lost amongst all the other emails. Because of the

numerous ways dates and times can be expressed in

written language, traditional searches are often not

effective. In this paper, we discuss a technique to help

users extract such calendar and deadline information from

their emails by identifying dates and times within an

email message. We believe identifying dates and times

will help users organize their schedules better and find

lost information more easily. In this paper, we discuss

our approaches for this problem. We discuss syntactic

methods used to find dates and times and semantic

methods to understand them. We then present the results

of two user studies conducted to determine the accuracy

of our technique.

Keywords

Date extraction, Time extraction, Email, Evaluation

INTRODUCTION

Increasingly, people are using their email inboxes as a

way of organizing their lives (Duchenaut & Bellotti,

2001)(Whittaker & Sidner, 1996). Inboxes are no longer

simply repositories of incoming mail; they are where the

details of peoples' lives reside. People use their inboxes

as calendars, to-do lists, and address books, among other

things. People keep documents in their inboxes because

those documents have pieces of information that they do

not want to delete. They also save documents to keep the

information readily accessible. Unfortunately, the more

documents that accumulate in the inbox, the harder it is to

manage the information that is contained there.

While email documents are semi-structured in that they

contain well-defined fields, the bodies and the subjects

are unstructured. These unstructured parts may contain

some information that can help the user organize

important information better, such as names of people and

companies, URLs, phone numbers, and places where

meetings take place. Email documents also contain dates

and times that allow users to use their inboxes as

calendars and to-do lists. In this paper, we focus on

extracting this date and time information from email

documents to make some user's tasks easier.

We have developed an add-on to email (currently

implemented in Lotus Notes) that can help users keep

track of items in their inboxes by taking advantage of the

semi-structured information provided by dates and times.

This system identifies date and time phrases that appear in

the bodies and subjects of email messages, and interprets

these phrases into a canonical calendar format.

There are a number of potential applications for this

technology. For example, the system can help the user

more easily make calendar entries (such as appointments

and meetings) and to-do items. When the user wants to

make such an entry, she can choose from the dates and

times found and the entry will be made for that selection.

Nardi, et.al. (Nardi, et al., 1998) present a similar idea to

this, but in their system, the user must first select the text

which contains the date to be parsed. Our system also

allows this functionality, but it can also find all dates and

times in an email message without requiring the

intervention of the user.

This technology can also be used for smart reminders,

indicating messages with an approaching due date, or

even messages that have “expired.” Furthermore, users

can search through their email for a date, regardless of its

textual format. This paper focuses primarily on the

accuracy of the underlying text extraction techniques that

will support a range of such applications.

The rest of the document is organized as follows. We

begin by discussing our technique for locating dates and

times within an email message. We then describe the

methods for semantically processing a date or time. The

results of our user studies are then discussed. We

conclude with some thoughts and future work.

FINDING DATES AND TIMES

The goal of this project is to identify and understand dates

and times that appear within email messages. Although

there has been previous work on identifying dates/times in

standard corpora (Mani et al., 2001)(Grover et al.,

2000)(Message understanding conference), in historical

documents (Mckay, 2001), and in scheduling dialogs

(Wiebe et al., 1998), there has not been a focus on the

kinds of dates/times that appear in email messages. The

Selection Recognition Agent (Pandit and Kalbag, 1997),

an application-independent feature recognizer, could be

applied to email, although that was not the main focus of

that work.

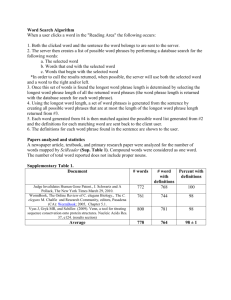

The purpose of this testing was to build a lightweight

date/time extractor to start building applications specific

to email. The first step was constructing a grammar that

would detect date and time phrases in email messages.

For this, we constructed regular expressions to find the

dates and times, since regular expressions are a simple

way to represent most of the date and time expressions we

have discovered in email messages. Some of the regular

expressions we are using can be found in Figure 1. The

seventh regular expression, MONTH_DAY_YEAR, can

identify, for example, January 1st, 2002. The last

expression, TIME_AMPM, can identify times such as

10:03 a.m.

SHORTMONTH =

(Jan|Febr?|Mar|Apr|May|Jun|Jul|Aug|Sept?|

Oct|Nov|Dec)\.?

LONGMONTH =

January|February|March|April|May|June|July|

anticipate the needs of a set of meaningful email and

calendar applications. These included figuring out when

to present a date to the user as a single date and when as a

range of dates, and how to define certain ranges. Single

dates, occur on only one day whereas date ranges cover

multiple days.

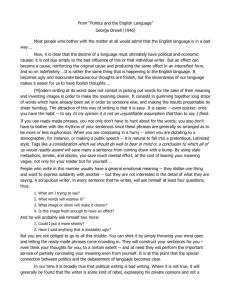

We have determined a similar

classification for times, where single times refer to a

moment on a calendar and time ranges have distinct start

and end times. Furthermore, both dates and times can be

classified as explicit or inexact. Exact dates and times are

those that are explicitly spelled out, while inexact dates

and times are more vague in their references to a calendar.

Inexact dates are typically in reference to a known date,

and inexact times have a more fuzzy start and end than do

explicit times. Table 1 shows some examples of dates and

times that fall under these categories.

Our use of regular expressions has some limitations.

While regular expressions can be very fast and reasonably

accurate for date and time detection, they are not

sufficient for finding all dates and times, as there are a

large variety of formats for those expressions. Similarly,

they are not sufficient for linking a related date and time

that are split by extraneous text. For example, in the

phrase January 24, 2002, 1 Rogers Street Room

5003, 12:00-1:30pm, it is very hard to link January

24, 2002 with 12:00-1:30pm. Our goal, however, is

not perfection with regards to feature detection, since

heuristics are by their nature imperfect. Rather, we are

hoping to be “close enough” so that users will find this

technology useful and usable.

Explicit

August|September|October|November|De

cember

MONTH = SHORTMONTH | LONGMONTH

DAY = [0-2]?[0-9]|3[0-1]

YEAR = \d{4}

SUFFIXES = st|rd|th|nd

MONTHDAYYEAR =

MONTH\s+DAY+SUFFIXES?,?\s+YEAR

HOUR_12_RE = 0?[1-9]|1[0-2]

HOUR_24_RE = [0-1]?[0-9]|2[0-3]

MINUTE_RE = [0-5][0-9]

AM_RE = (a|(\s*am)|(\s*a\.m\.))

PM_RE = (p|(\s*pm)|(\s*p\.m\.))

AM_OR_PM_RE = AM_RE | PM_RE

TIME_AMPM =

HOUR_12_RE(\s*:\s*MINUTE_RE)?\s*:\s*

MINUTE_RE AM_OR_PM_RE

Figure 1: Example regular expressions

One of the most challenging parts of this project was

defining qualities of dates and times to test for that would

Dates

Inexact

Single

Range

8/16/2002

August 8-12, 2002

4 August 2002

June 12 – July 5

Tomorrow

Next week

Next Thursday

August

Explicit

10am

Inexact

At 4

Times

10am – noon

from 3 to 4pm

Morning

lunchtime

Table 1: Examples of different kinds of dates and times

Understanding dates and times

Once a date/time has been located, it must be semantically

parsed so that it can be used by the applications we have

mentioned. To do this, the system must convert each

date/time found into a canonical format. In this section,

we discuss the heuristics we use for this semantic

analysis.

Kind of

expression

Heuristic assumption

Example

Date/ time

interpretation for

message received

on January 28,

2003

No year

given

Check verb tense, if

future assume within

next 12 months

We will meet on

February 4

February 4, 2003

This <day>

Before the end of this

week

This Thursday

January 30, 2003

Next <day>

During the week that

starts on the upcoming

Sunday

Next Thursday

February 6, 2003

Last <day>

During the week that

ended on the previous

Saturday

Last Thursday

January 23, 2003

No a.m. or

p.m.

Assume during normal

business hours

Let’s meet at 1

January 28, 2003 at

1pm

Table 2: Some heuristics used for filling in missing fields

Missing fields

example, if today is Monday, and the document mentions

Dates and times that are fully specified are easy to convert

into this canonical format. A fully specified date is one

that has its calendar date(s) and time(s) explicitly stated.

An example of a fully specified date/time is Thursday,

March 28, 2002 from 1:00pm to 2:00pm. These

kinds of dates tend to appear in formal meeting

announcements or talk announcements.

next Thursday, is the Thursday in question the day 3

However, many emails, especially those written using a

more “conversational” tone, do not contain such formal

dates and times. Dates and times occurring in email

messages are more informal, often assuming human

readers can fill in the unspecified portions. For example,

people can easily process and understand Let's

meet at 4 on Thursday by using their background

common sense knowledge and experience. In our system,

heuristics are needed to fill in the missing fields of both

dates and times. For example, when writing times, people

will frequently omit a time of day indication (a.m. or

p.m.). If this omission occurs within email, we assume

the hour referred to occurs during the regular business

day, i.e. 7am through 6pm. People will also write times

without associated dates, such as Let's meet at 10am.

In this case, we assume the date to be the reference point

date (described in the next section).

Inexact date phrases and time-only phrases need a

reference point from which the date for the phrase can be

calculated. Some systems, such as LookOut (Horvitz,

1999), use the date the message was sent as this reference

point. However, this is not always correct. For example,

in Figure 2, there are two instances of the word

“tomorrow.” If we were to use the date the email was

sent as the reference point, the second “tomorrow” would

be interpreted incorrectly.

With dates, different details can be left unspecified. A

very commonly unspecified detail for a date is the year,

e.g. March 29. In this case, we assume the date to be

within the next twelve months of the reference point date

(March 29 would be interpreted as March 29, 2003).

The header of the message starts the first header block,

and subsequent headers found within the body start their

own header block. Each block is terminated by the

subsequent header. Each header contains the date

indicating when that part of the document was sent. The

system uses this date as the reference point for any dates

or times found within the header block.

Inexact dates frequently need many of the date/time fields

filled in. These kinds of dates can be confusing to

interpret, for human readers as well as computers. For

days from the reference date or 10 days from that date?

We are making the assumption that “next” anything is

within the week that starts from the upcoming Sunday and

ends the following Saturday. A sampling of some of the

heuristics used can be found in Table 2.

Setting reference point dates for inexact dates

Email messages, however, provide additional clues as to

what these reference points should be, namely those dates

which can be found in headers within the body of an

email message. Messages which are replies or forwards

frequently contain these kinds of textual headers.

Reference points are determined by treating the email as a

series of headers and bodies, with each header and body

making a header block.

Using this new heuristic, both of the instances of

“tomorrow” in Figure 2 would be interpreted correctly.

The first is interpreted as May 18, 2002, while the second

is interpreted as May 15, 2002.

F ro m : M ia S te r n

0 5 /1 7 /2 0 0 2 0 3 :1 2 P M

T o : D erek La m

cc:

S u b je c t: R e : M e e tin g

S o rry I c o u ld n 't m a k e it th e n . Ho w a b o u t to m o r ro w in s te a d ?

- M ia

F ro m : D e re k L a m

0 5 /1 4 /2 0 0 2 0 1 :3 8 P M

T o : M ia S te rn

cc:

S u b je c t: M e e tin g

C a n y o u m a k e a m e e tin g to m o r ro w?

D e re k

Figure 2: Why headers are used for reference point dates,

rather than using the sent date of the message

Part of speech tagging

We use another heuristic technique for determining the

correct date being referred to in a document. We cannot

always assume that dates are in the future. If we make

such an assumption, we cannot process phrases like As

we said on Thursday and accurately determine the

correct date. Therefore, we use part of speech tagging

(Brill, 1992) to determine if the date is in the past or the

future. We look at the verb closest to the match, and if it

is in the past tense, we assume the date is also in the past.

A similar approach to using parts of speech is presented in

(Mani & Wilson, 2001).

F ro m : M ia S te rn

0 1 /1 5 /2 0 0 3 0 2 :4 3 P M

T o : D a n ie l G ru e n

cc:

S u b je c t: w e b s ite

Hi DanIn th e m e e tin g w e h a d o n M o n d a y , w e

ta lk e d a b o u t s e ttin g u p a n e w w e b s ite . C a n

w e m e e t o n F rid a y to ta lk a b o u t th is m o re ?

T hanks,

- M ia

Figure 3: Using part of speech tagging for disambiguating the

meaning of a date phrase.

For example, consider the message given in Figure 3.

Without part of speech tagging, Monday would have been

interpreted as Monday, January 20, 2003. However, since

the closest verb to that phrase is in the past tense (in this

case “had”), the phrase is interpreted correctly as

Monday, January 13, 2003. Similarly, since the verb

closes to Friday, “meet”, is not in the past tense, that

phrase is interpreted to mean Friday, January 17, 2003.

Ambiguities

There is another difficulty with the semantic processing of

dates and times. There are ambiguities that arise with

dates and times that can be difficult to resolve without

context. For example, is Thursday, 7-10 a date, as in

July 10th, or is it a time, from 7pm to 10pm (or 7am –

10am)?

Our current method is to assume in this case a time, rather

than a date. In the ideal case, both options and context

surrounding the match would be presented to the user so

she could disambiguate more easily.

EVALUATION

Before exploring whether any applications using this

date/time understanding technology would be viable, we

wanted to investigate whether the technology could

accurately identify and interpret the specific dates and

times that appear in email messages. We also wanted to

discover if there were any differences in how users rate

the dates and times that were found and how they were

interpreted.

Methodology

We conducted a user study with 9 participants in which

each user processed about 20 of her own emails. Each

user was presented with her email one message at a time,

and for each message, she is asked about the dates and

times that were found.

Each date/time found is presented one at a time and a

series of questions appropriate to the kind of date/time is

asked. The first question asked is obviously, “Is this

phrase a date/time related phrase?” If the user answers

“yes”, she is then asked if the date is classified correctly

as a single date or a date range, if that classification is

correct, and if the interpreted date(s) is correct? The user

is then asked about the time classification and the time

interpretation.

We present our results in terms of precision and recall.

Precision is the number of date/time phrases correctly

processed in category x divided by the total number

processed in category x (these can also be called false

positives). Recall is the number of date/time phrases

correctly processed in category x divided by the number

of things that really should have been processed in

category x. See Table 3 for an illustration of how

precision and recall are calculated.

Is this a

date/time

related

phrase

User

Identifies as

Date/Time

User Rejects

as

Data/Time

System

Proposes as

Date/Time

System

Does not

Propose

A

B

(correct)

(misses)

dates as date ranges, indicating that perhaps single dates

are a more important focus for this kind of work.

Recall =

A/(A+B)

C

(false

positives)

Precision =

A/(A+C)

Table 3: How precision and recall are calculated. The grayed

out box cannot be calculated.

Results

We collected 150 email messages from the 9 users in our

study.

Our user population consisted of interns,

developers, researchers, and one executive. Of the 150

collected email messages, 78% had dates and/or times.

Eleven of the 117 messages contained only machine

generated dates (e.g. dates that appear within header

blocks). Within these 117 messages, our system proposed

593 date/time phrases, of which 546 were actually

date/time phrases. Thus our precision is 92.07%. Our

system missed an additional 39 dates/time phases that

users identified, giving us a recall value of 93.33%.1

These are just broad claims, however, indicating whether

the system is focusing on the right types of phrases. To

be effective, the system must also correctly classify the

type of date/time phrase and accurately interpret the date

or time found. In the sections that follow, we delve into

more detail on how well the system classified and

interpreted single dates and date ranges and then how well

it did on both single times and time ranges.

Dates

Once a phrase has been identified as a date/time phrase, it

must be classified as either a single date or a date range.

On single date identification, the system achieved a

precision of 89.65% and a recall of 92.92%. With date

range identification, on the other hand, the precision is

only 74.82% while the recall is only 83.2%. Clearly the

system does not perform as well on identifying date

ranges. However, there are almost 4 times as many single

1

It is possible that the documents we collected are

skewed in the number dates and times, since users

analyzed only those documents they wished to share; we

do not know the proportion of date and time phrases in

documents users did not allow us to see.

Table 4 illustrates how well our system performs on both

classifying and interpreting single dates. We have broken

down the kinds of date phrases into whether they were an

explicit date, an inexact date (e.g. tomorrow, next

Tuesday, or this morning), a time without a date (e.g. 1pm

or from 1-2pm), and all others. The rows in the table

indicate the user responses, including whether the date

was classified and interpreted correctly, whether it was

either misclassified (the system claimed the phrase was a

single date when it was not) or interpreted incorrectly (the

system knew it was a single date but it got the date

wrong), or whether it was not really a date at all. Overall,

the system achieved 81.06% precision on interpreting

single dates and 84.02% recall (the system missed 28

single dates and claimed that 3 actual single dates were

date ranges) on locating and interpreting single dates.

We expected the system to be able to interpret explicit

dates relatively well but to have difficulties with other

kinds of dates. As anticipated, the system had more

difficulty classifying and interpreting inexact dates than

explicit dates, since it needed to infer the date from

context rather than just parse the phrase. It also had some

difficulty associating the correct date with phrases which

contained only a time. One possible reason for this

difficulty is due to the heuristic we are using for filling in

the missing date in those cases. We are currently using

the reference point date of the header block, which is

frequently not the correct date. However, if we change

our heuristic to use the closest date to the time phrase in

the sentence, we feel we can improve the accuracy on

those phrases.

In Table 5, we see how well our system does on

identifying and understanding date ranges. Similar to our

analysis of single dates, we have broken down date ranges

into various kinds of ranges, including explicit ranges

(e.g. November 6-8), months with years (e.g. June 2003),

months without years (e.g. June), years or year ranges

(e.g. 2002 or 2002-2003), and inexact date ranges (e.g.

this week or next week). The rows are the same as in

Table 4. Overall the precision for interpreting date ranges

is 69.06% and the recall is 82.05% (the system missed 10

ranges and claimed 11 actual ranges were single dates).

It is interesting to note how many kinds of date ranges

there were, with most of them being somewhat ill defined,

and only 5 explicit date ranges. There were a large

number of inexact ranges, and the system did relatively

well on those kinds of dates. The largest number of date

ranges were years or year ranges. However, one reason

the system performed poorly in general on date ranges is

the number of phrases the system classified as years that

users did not consider date ranges at all. One reason for

this is our regular expression for detecting years is overly

general; it detects every four digit number. By limiting

the range for years to between 1900 and 2099, we should

Extraction process: Single dates

Specific

date

Inexact

date

Time only

Other

total

196

127

44

1

368

(95.6%)

(74.27%)

(64.71%)

(10%)

(81.06%)

Incorrectly

classified or

interpreted

7

35

18

9

38

Not a date

phrase

2

9

6

0

17

total

205

171

68

10

454

Correctly

classified

and

interpreted

Table 4: System's performance on identifying and interpreting single dates. The percentages given are precision values, calculated by

dividing the value in the cell by the total for that column.

Extraction process: Date ranges

Explicit

date range

Month w/

year

Month w/o

year

Year / year

ranges

Inexact

date range

Other

Total

5

11

9

44

26

1

96

(100%)

(91.67%)

(81.82%)

(57.14%)

(78.79%)

(100%)

(69.06%)

Incorrectly

classified or

interpreted

0

1

0

5

7

0

13

Not a date

phrase

0

0

2

28

0

0

30

Total

5

12

11

77

33

1

139

Correctly

classified and

interpreted

Table 5: System's performance on identifying and interpreting date ranges. The percentages given are precision values, calculated by

dividing the value in the cell by the total for that column.

be able to reduce the number of false positive year

detections. By making this change, we would have

avoided 21 false positive instances, increasing precision

on date ranges to 81.36%, up from 69.06%.

Overall, on dates, the system achieved a precision value

of 78.25% and a recall result of 79.32%. We believe that

with some simple changes to some of our heuristics, we

can potentially improve these results.

Times

In addition to dates, we also investigated how well the

system could find and interpret times. Table 6 shows the

system’s results on finding times associated with dates.

An interesting thing to note from this table is the

frequency with which the system missed times. Many of

these missed times occur when a date and time appear

within the same sentence but are located far apart, similar

to the problem we discussed with incorrect dates being

associated with time only phrases. For example, let’s

tomorrow, say around 1pm contains two

date/time phrases, tomorrow and 1pm. Our system misses

the time for tomorrow and assigns the incorrect date to

1pm. By using the heuristic discussed in the last section,

we hope to alleviate both problems.

meet

For single times, the system was very accurate (precision

of 91.5% and recall of 80.63%). For these times, there

were 112 instances when the time was given with either

a.m. or p.m. The system correctly interpreted all 112.

For the 17 instances in which no a.m. or p.m. was given,

the system interpreted all but 2 correctly. In both cases,

the system mistook a day for a time (e.g. in 01 JUL 02,

it interpreted the time as 1pm).

With time ranges, the system achieved a precision of

75.36% but a recall of only 71.25%. However, of the

time ranges the system found, it correctly interpreted all

40 explicit ranges. For inexact ranges (such as morning

or afternoon), the system correctly interpreted only 12 out

of 26 cases. We believe this is because our definitions of

Extraction process: time

No

Correctly

classified and

interpreted

Incorrectly

classified

and/or

interpreted

time

Single

time

Time

range

Total

171

129

52

352

(83%)

(91.5%)

(75.4%)

(84.6%)

35

10

54

3

7

10

1.5

1.0

0.0

USER1

206

141

69

Individual differences

The results reported thus far are from pooling the data

from all 9 participants. However, this kind of analysis

does not help us determine if there are any individual

differences in how users interpret dates and times. To

determine if there are such differences, we performed an

analysis on the accuracy of the date detection and

understanding. We calculated the log odds2 of the

precision for each document a user evaluated, and then

calculated the mean of these values and the standard error

of the mean for each person and their documents (see

Figure 4). We can see from this analysis that there no

detectable difference between the means for the

individuals and the mean for the population.

However, based on discussions with users, we are

inclined to believe there are some individual differences

that cannot be detected statistically. For example, some

participants did not agree with our specifications for the

beginning and end of a week (in phrases such as this week

or next week). Three participants consistently challenged

our definition of when a week starts and ends, while the

other six agreed with our specifications.

With respect to time, the only occasions on which there

were some disagreements were in how times of day

should be interpreted, such as morning, afternoon, and

Log odds is calculated using the following formula:

numcorrect + 0.5

The 0.5 correction is a

ln(

).

numfalsepositive + 0.5

Bayesian technique that may be used when the actual

number of observations is small, e.g., one of the

numbers might be zero.

USER3

USER2

416

Table 6: Results for finding times.

2

2.0

.5

Not a time

phrase

Total

9

evening or night. Two participants disagreed about the

start and end time for afternoon while one participant

disagreed about morning. One possible approach to this

is to model individual user preferences on these time

ranges.

Mean +- 1 SE

when these inexact ranges begin and end do not agree

with users’ opinions on these ranges.

USER5

USER4

USER7

USER6

USER9

USER8

Figure 4: Comparing means of log odds between users. The

dotted line shows the mean for the whole population and the

surrounding box is one standard error of the mean.

Second user study

We took the results from the first user study and analyzed

where we could make improvements in our algorithm.

There appeared to be some clear areas where we could

make improvements without significantly changing our

strategy. Some of the improvements were designed to

increase precision while others should help improve

recall. We made these changes to the algorithm and ran a

second user study to determine if the results in fact

improved. Rather than running our algorithm over the

data we collected in the first user study, we decided to run

a second study to determine if our results would

generalize over a different data set.

Improvements

One improvement to increase precision is to restrict our

definition of a year. In the first user study, we were

detecting all 4 digit numbers as years. This led to a large

number of false positive finds. To reduce the number of

false positives, we are now only recognizing years

between 1900 and 2099.

A second improvement to increase precision is a heuristic

to link dates and times together. During the first user

study, the system frequently did not find times associated

with some dates when the participant indicated that there

was in fact a time. Similarly, on many phrases that

consisted of only a time or a time range, the system

assumed an inappropriate date for that phrase. To fix this

problem, the system now looks within a sentence to see if

there is a time to associate with a date or a date to

associate with a time. However, if there is more than one

Extraction process: single dates (second study)

Specific date

Inexact date

Time only

Total

193

128

26

347

(88.53%)

(89.51%)

(26.53%)

(75.60%)

Incorrectly classified

or interpreted

19

12

25

56

Not a date phrase

6

3

47

56

Total

218

143

98

459

Correctly classified

and interpreted

Table 7: Single date results from the second user study.

Extraction process: date ranges (second study)

Explicit date

range

Month w/o

year

Year or

year range

Inexact

date range

Deadline

date

Total

3

9

24

22

42

100

(100%)

(69.23%)

(40%)

(91.67%)

(85.71%)

(67.11%)

Incorrectly

classified or

interpreted

0

2

5

2

7

16

Not a date phrase

0

2

31

0

0

33

Total

3

13

60

24

49

149

Correctly classified

and interpreted

Table 8: Date range results from second user study.

Extraction process: time (second study)

Correctly classified

and interpreted

Incorrectly classified

or interpreted

No time

Single time

Time range

Deadline times

Total

185

129

60

46

420

(93.91%)

(96.27%)

(57.14%)

(92%)

(86.42%)

12

3

2

3

20

2

43

1

46

134

105

50

486

Not a time phrase

Total

197

Table 9: Time results from the second user study.

time in a sentence, the system will not choose one to

match with a date, and vice versa. To improve recall, we

are now also detecting date and time ranges we are calling

deadline dates and times. These phrases are distinguished

by including keywords such as “by”, “until”, or even

“through”. Previously, we were finding the dates and

times in these phrases, but we were not recognizing that

those dates and times indicated a range, rather than a

single date or time. For example, in the phrase “The

paper is due by October 14”, we would find October 14,

but we would not recognize that this date represented a

deadline. Users indicated that the whole phrase should be

identified as a date range, starting when the email was

received and ending at the date in the phrase.

The last improvement we made, also to improve recall, is

to expand our notion of time ranges by detecting

mealtimes, such as “lunch(time)” or “dinner”. We are

also now detecting the phrases “AM” and “PM” as time

ranges. A number of users indicated during the first user

study that these were phrases that represented times.

Results

CONCLUSIONS

For the second user study, we had 7 users evaluate a total

of 162 documents, 115 of which had dates. Our system

detected 608 date/time phrases, 519 of which were

actually date/times (precision = 85.36%). The system

missed only 19 date/time phrases (recall = 96.47%).

In this paper, we have presented a technique to detect and

interpret dates and times within email.

We have

attempted to determine the accuracy of our technique

through two user studies.

Our overall results for the second user study are fairly

similar to the first user study (see Tables 8-10), with the

only significant difference on precision being a lower

precision in the second user study on single dates (t=2 on

an independent samples t-test, p < 0.05). For recall, we

increased our results significantly for single dates

(t=3.874, p < 0.005) and for times (t=3.566, p < 0.005).

What we are really interested in discovering, however, is

the effects our specific changes made in these results.

The first improvement we made, reducing the range of

years detected as dates, lowered the number of false

positives. In the first user study, 59.6% of false positive

date detections were years, while in the second user study,

only 35.2% of false positives were years.

The second improvement we tried is to link dates and

times that appear in the same sentence. Unfortunately,

our heuristic did not apply in any instances in this dataset.

However, there were still 23 cases in which a phrase

contained only a time and the system could not correctly

calculate the date. Similarly, there were 12 date phrases

for which the system found no time but users indicated

there was an associated time. In some of these cases, the

dates and times were in adjacent sentences; in others,

there were multiple times and/or multiple dates, which we

specifically chose not to match up. Clearly we need

another heuristic to help in these cases.

Our third heuristic improvement was to look for

“deadline” dates and times. These are phrases that start

with “through”, “until”, or “by”. Our system found 50

deadline dates and times, only one of which was

considered not a date phrase. The system achieved a

precision of 84% identifying and interpreting these

phrases. Thus it appears that adding deadline dates and

times provides a good improvement to our algorithm.

Finally, our last improvement, expanding our definition of

time ranges, appeared to have a large negative impact on

our results. Only 2 out of 27 instances (0.07%) where

“AM” and “PM” were detected were considered to be

legitimate time phrases, whereas 28.4% (25 out of 88) of

our false positives involved these phrases. With respect

to meal times, 9 instances were considered not to be date

phrases and 9 instances were considered to be date

phrases. Therefore, it seems in these cases, the false

positive rate is too high to provide significant benefit

from identifying these time ranges

We have demonstrated that we can achieve a fairly

reasonable accuracy (about 80%) on finding and

interpreting dates and times that appear in email

messages. We can detect and understand not only

standard dates, such as “July 1, 2003”, but also inexact

dates and times, such as “next Tuesday morning”. We are

beginning to explore the use of this technology in some

applications, including smart calendar entries and smart

reminders. Additionally, we have built an application that

lets users search for dates, regardless of the format of the

search query or of the dates that are contained in the

documents. However, we must determine if the accuracy

we have achieved is “good enough” for these

applications.

There are certain classes of dates and times that we are

currently unable to detect and understand: repeated dates.

For example, Let’s meet every Thursday at 10

indicates that a meeting will occur every Thursday at

10am. Currently, however, we do not recognize that the

word “every” indicates a repeated event, not a one time

occurrence. We plan to include these kinds of dates and

times in our next version.

ACKNOWLEDGEMENTS

I would like to thank John Patterson and Daniel Gruen for

their help in designing the study and in analyzing the data.

I would also like to thank all the study participants for

their time and patience.

REFERENCES

Brill, E., A simple rule-based part of speech tagger. In

Proceedings of Third Conference on Applied Natural

Language Processing, Trento, Italy, 1992.

Duchenaut, N. and Bellotti, V., Email as habitat: An

exploration of embedded personal information

management. ACM Interactions, 8(1):30-38, SeptemberOctober 2001.

Grover, C., Matheson, C., Mikheev, A., and Moens, M.,

LT TTT – A Flexible Tokenisation Tool. In Proceedings

of Second International Conference on Language

Resources and Evaluation, 2000.

Horvitz, E., Principles of Mixed-Initiative User Interfaces.

In Proceedings of CHI '99, ACM SIGCHI Conference on

Human Factors in Computing Systems, 159-166, 1999.

Mani, I., Ferro, L., Sundheim, B., and Wilson, G.,

Guidelines for Annotating Temporal Information. In

Proceedings of the Human Language Technology

Conference, 2001.

Mani, I. and Wilson, G., Robust temporal

processing of news, in Proceedings of the 38th

Annual Meeting of the Association for

Computational Linguistics, 2001.

Mckay. D., Mining dates in historical documents. In

Fourth New Zealand Computer Science Research

Students Conference, 2001.

Message understanding conference.

www.itl.nist.gov/iaui/894.02/related_projects/muc

Nardi, B., Miller, J., and Wright, D., Collaborative

programmable intelligent agents. Communications of the

ACM, 41(3):96-104, March 1998.

Pandit, M. and Kalbag, S., The Selection Recognition

Agent: Instant access to relevant information and

operations. In Proceedings of Intelligent User Interfaces,

pages 47-52, ACM, 1997.

Whittaker, S. and Sidner, C., Email overload: Exploring

personal information management of email.

In

Conference Proceedings on Human Factors in Computing

Systems, 276-283, 1996.

Wiebe, J., O'Hara, T., Ohrstrom-Sandgren, T. and

McKeever, K. An Empirical Approach to Temporal

Reference Resolution. Journal of Artificial Intelligence

Research, 9, 247-293, 1998.