Lab 2: Clarify in Stata

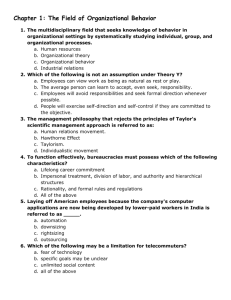

D. Alex Hughes
January 21, 2013

Introduction

This lab demonstrates how to set up a program file in Stata and how to generate the appropriate statistics for reporting the results of logit and probit estimates in Stata using Clarify. Before you begin, download a few documents if you don't have them yet:

1. The Stata code for the lab.
2. The log file with the output I generated, if you want to verify your results.
3. The official Clarify documentation from Gary King's website.

Programming Best Practices

• Comment your code. Don't be a bum! Your memory isn't as good as you wish it were. When you write some killer line today, you will have literally no idea what it does in a week.

• Make every step in your analysis repeatable and re-executable. You should start with a data file and have executable code that you can run for each stage of your work. I know it is tempting to make a change in Stata's editor window or in Excel, but don't. Not under any circumstance. You will never be able to remember all the tiny changes you've made, I promise you.

• Use a consistent case structure for your code. I prefer CamelCase, but there are many out there. A good case will have a few features:

  1. It will be immediately legible. Rather than reading as endoffile, it should read as EndOfFile or endOfFile or end_of_file. There are different conventions.
  2. It will not have any spaces. Whitespace is meaningful to most computer languages, and a single object should read as a single word (that is, no spaces). So your vote share variable should not be Vote Share, but instead voteShare or vote_share.

• Indent your code to make it more legible. Indentation should let you see code blocks more clearly, be they functions, regressions, plots, etc. The standard style is a hanging indent, and many editors will automagically indent your code when appropriate.
For example, compare these two layouts:

    p <- ggplot(data = plot.data, aes(x = time, y = output, color = group)) + geom_line() + stat_smooth(method = "lm") + facet_wrap("town") + labs(title = "blarg", subtitle = "foo")

    p <- ggplot(data = plot.data,
                aes(x = time, y = output, color = group)) +
        geom_line() +
        stat_smooth(method = "lm") +
        facet_wrap("town") +
        labs(title = "blarg", subtitle = "foo")

Which seems to make more sense to you? Another example from last week.

    out <- optim(
        par     = c(0, 1),
        fn      = probit,
        y       = y,
        X       = x,
        method  = "BFGS",
        control = list(fnscale = -1),
        hessian = TRUE
    )
    out

versus

    head(gavote)
    summary(gavote)
    gavote$undercount <- (gavote$ballots-gavote$votes)/gavote$ballots
    summary(gavote$undercount)
    sum(gavote$ballots-gavote$votes)/sum(gavote$ballots)
    hist(gavote$undercount,main="Undercount",xlab="Percent Undercount")
    plot(density(gavote$undercount),main="Undercount")
    rug(gavote$undercount)
    pie(table(gavote$equip),col=gray(0:4/4))
    barplot(sort(table(gavote$equip),decreasing=TRUE),las=2)
    gavote$pergore <- gavote$gore/gavote$votes
    plot(pergore ~ perAA, gavote, xlab="Proportion African American",ylab="Proportion

• Include the date, time, and place that you were writing the code. This is something that I learned from my grandpa, who was a pattern maker. It will come to pass that 2 years hence (or 20?), you will come back to a piece of code that you wrote. It's pretty nice to have a sense for where you were and what you were doing at the time. Every chest that I've built (and code that I've written) has a little stamp with the date and town that I was working in. Call me sentimental, but it makes coming back to a project a little more palatable.

Programming in Stata

Many (most) political science journals now require authors to submit replication code along with their submissions. This is especially the case if you're submitting work that uses publicly available data like Afrobarometer, ANES, or COW data sets.
If you come up with a new result from publicly available data, folks will (a) be disappointed in themselves for not finding the result first; and, consequently, (b) come after your methods. You can prevent this by having lock-down documentation of everything you did.

With this in mind, you should always write .do files in Stata and .R files in R. Get in the habit of making sure that your program file runs and executes without errors. A good way to start is by including a couple of lines that clear anything currently in working memory and change the preferences to your preferred settings. These lines clear the data and stored results in memory, close a log file if one is open, prevent Stata from stopping when the screen fills with text (why would stopping be the default...), set the memory size, create a local macro to reference where files are stored, set the seed for randomization (so our results are identical), and open a log file.

    clear all
    capture log close
    set more off
    set mem 50M    // ignored by Stata 12 and later, which manage memory automatically
    local filepath "~/documents/TA/poli271/labs/"
    set seed 12345
    log using "`filepath'Lab2.log", replace

MLE Results in Clarify

As we discussed last week, logit and probit estimators, along with most other nonlinear estimators, provide coefficients with very little intuitive interpretation. King saws away at this in a 2000 AJPS article, saying, "bad interpretations are substantively ambiguous and filled with methodological jargon: 'the coefficient on education was statistically significant at the 0.05 level.'" And he's right for a few reasons.

1. One should be able to answer Gary Jacobson's jab, "So what?", with a compelling response. "So, a change from a high-school degree to a college degree is associated with a $1,500 per year increase in wage earnings, plus or minus about $300." Political science demands that you interpret your results for more than statistical significance.

2. If one's answer to this "So what?" isn't that compelling, she should consider that journal reviewers will likely think the same thing. Unimpressed reviewers are not reviewers who are likely to accept your submission.

3. A finding of statistical significance, without an understanding of the relationship to the data, frequently obscures the meaning of a result. Take, for example, the following two results, both of which are generated from a positive, significant result.

  • A change from a high-school degree to a college degree is associated with a $1,500 per year increase in wage earnings, plus or minus about $300.
  • A change from a high-school degree to a college degree is associated with a $15 per year increase in wage earnings, plus or minus about $3.

Although both are results that are not likely to be caused by random sampling error, they tell very different stories!

There are three main commands in Clarify that correspond to the three main steps you would use to calculate marginal effects for a model: (1) estimate a model; (2) set the levels of the explanatory variables other than your variable of interest; (3) specify how your variable(s) of interest change. Clarify operates by generating simulated values rather than explicitly calculating marginal effects.

1. Estimate the model with estsimp. When you put estsimp before the model command, Clarify estimates the model and draws simulated values of the model parameters, which it stores and uses for the further calculations. One can modify the number of simulations, along with lots of other options, at this step.

    estsimp logit bigchange negdemscale expab impab s_un_glo fndelec aidGDP distance polterror

2. Set the variables with setx. The setx command fixes each explanatory variable at a specified level. Frequently authors use the mean (setx mean) or median (setx median), but you can set it to a specified observation (setx [2000]) or to a specific value (setx 27).

3. Generate the simulation result using simqi (simulate quantity of interest). This command generates the simulated difference that will be reported. There are a number of possible quantities of interest that you can report; choose the quantity of interest that best supports your theory and demonstrates the relationship that you care about.

Example

Now that we generally know how the commands work, let's go over a specific example of how you might use Clarify in comparative politics. The remaining code and text for this section is from Thad.

After estimating the model described above, suppose I want to look at whether a recipient country's chances of getting funding are contingent upon its political behavior. I can examine the first differences for various changes in the explanatory variable negdemscale, which lists the number (0-4) of antidemocratic actions taken by the government. I'll begin by holding all of the other variables constant.

    setx median
    simqi, fd(pr) changex(negdemscale 0 1)
    simqi, fd(pr) changex(negdemscale 1 2)
    simqi, fd(pr) changex(negdemscale 2 3)
    simqi, fd(pr) changex(negdemscale 3 4)

Now suppose we want to look at the effects of bad political behavior in specific cases, rather than just some generic case in which all variables take on their median values. We can code Clarify for effects in observation numbers 2000 and 5000 by inputting:

    setx [2000]
    simqi, fd(pr) changex(negdemscale 0 1)
    setx [5000]
    simqi, fd(pr) changex(negdemscale 0 1)

Now let's look at some of the other quantities of interest. If I want to get the simulated probability that a change in funding occurred (or did not occur) for the mean case, code:

    setx mean
    simqi, pr

This probability will be different for each type of case. And across simulations, the expected value of the dependent variable will vary (due to stochastic variation).
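That variation comes from the simulation itself. A rough sketch of the procedure for the logit case, in notation of my own rather than anything taken from the Clarify documentation: \hat{\beta} and \hat{V} are the estimated coefficients and their variance matrix, x is the covariate profile fixed by setx, x_s and x_e are the starting and ending profiles named in changex, and M is the number of simulations.

```latex
\begin{align*}
\tilde{\beta}^{(m)} &\sim \mathcal{N}\bigl(\hat{\beta},\, \hat{V}\bigr),
  \qquad m = 1, \dots, M \\
\tilde{\pi}^{(m)}(x) &= \frac{1}{1 + \exp\bigl(-x\,\tilde{\beta}^{(m)}\bigr)} \\
\widetilde{\mathrm{FD}}^{(m)} &= \tilde{\pi}^{(m)}(x_e) - \tilde{\pi}^{(m)}(x_s) \\
\tilde{y}^{(m)} &\sim \mathrm{Bernoulli}\bigl(\tilde{\pi}^{(m)}(x)\bigr)
\end{align*}
```

Read this way, simqi, pr summarizes the M values of \tilde{\pi}, simqi, fd(pr) summarizes the M values of \widetilde{\mathrm{FD}}, and simqi, pv tabulates the M simulated outcomes \tilde{y}. The reported point estimate is the average across draws, and the interval comes from the percentiles of the draws.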
To look at the predicted values over the 1000 simulations, call:

    simqi, pv

If I really want to know what that mean case looks like, I can use the listx option to tell me about it:

    simqi, pr listx

And I can also go through the same exercise of getting a prediction for and a description of one particular case:

    setx [2000]
    simqi, pr listx

I can look at the first differences using a 90% confidence interval, and then I can look at the first difference caused by a change in two explanatory variables:

    simqi, fd(pr) changex(negdemscale 0 1) level(90)
    simqi, fd(pr) changex(negdemscale 0 1 polterror 0 1) level(90)

Replication

Gerber, Green and Larimer (2008) present a modern-day classic in political science (IMO). Because you didn't read the paper for this class, here's the rundown. There is a debate in the literature about why people vote. The act of voting seems to defy rationalization; just ask Riker and Ordeshook (1968). And yet, people do vote, and pretty frequently. Gerber, Green and Larimer test one theory about why people vote: social pressure. The authors send postcards to residents of the State of Michigan (I received one!), informing them that the act of turning out to vote is public knowledge. They send out 4 treatments:

1. You are being studied (Hawthorne Effect)
2. Go vote! It's your civic duty
3. WHAT IF YOUR NEIGHBORS KNEW WHETHER YOU VOTED? And then they SHOW a list of people in their town and whether or not they voted! Awesome!!
4. WHO VOTES IS PUBLIC INFORMATION! And then they SHOW whether the individual voted or not in the last election! Aah! Double Awesome!!

But Gary King probably pulled his hair out when he read their results. The table is included here as Figure 1. Note that there are potentially two complaints one could make about these results. First, examine their replication code. GGL's main regression is on a binary dependent variable: whether an individual turned out to vote or not.
However, they have estimated their model under the assumption that the data follow a Gaussian distribution: they run OLS. Why might this be a problem? Second, look at how they present their results. What are we to make of these? What if they had instead (correctly) estimated a non-linear model, a logit regression? What would we do with their estimated parameters then?

Figure 1: Gerber, Green and Larimer Main Regression Table

Using the skills that we have developed today, how would you go about adding to GGL's analysis? Our code is available HERE.

    /***********************************************************************/
    /* Replication Dataset for Gerber et al 2008 APSR Social Pressure Study */
    /* Alan Gerber (alan.gerber@yale.edu)                                   */
    /* Modified by D. Alex Hughes; Encinitas, CA; Jan. 2013 for Poli271     */
    /***********************************************************************/

    **** loading data ****
    clear
    set mem 450m
    * use "Replication Dataset for Gerber et al 2008 APSR Social Pressure Study.dta", clear

    **** Table 1 ****
    * collapse (max) treatment hh_size (mean) g2002 g2000 p2004 p2002 p2000 sex yob, by(hh_id)
    * bysort treatment: sum hh_size g2002 g2000 p2004 p2002 p2000 sex yob

    **** MNL reported on p.37 of APSR article ****
    * mlogit treatment hh_size g2002 g2000 p2004 p2002 p2000 sex yob, baseoutcome(0)
    * save "Replication Dataset2 for Gerber et al 2008 APSR Social Pressure Study household level.dta", replace

    **** Table 2 ****
    use "http://polisci2.ucsd.edu/dhughes/teaching/GerberGreenLatimore.dta", clear
    tabulate voted treatment

    **** Table 3 ****
    gen control = treatment==0
    gen hawthorne = treatment==1
    gen civicduty = treatment==2
    gen neighbors = treatment==3
    gen self = treatment==4

    **** Table 3, model a ****
    regress voted civicduty hawthorne self neighbors, robust cluster(hh_id)

    **** Compare the Regression w/ and w/o estsimp ****
    logit voted civicduty hawthorne self neighbors sex yob, robust cluster(hh_id)
    estsimp logit voted civicduty hawthorne self neighbors sex yob, robust cluster(hh_id)

    **** Run through the Simulation Algorithm ****

    setx mean

    simqi, fd(pr) changex(hawthorne 0 1)
    simqi, fd(pr) changex(civicduty 0 1)
    simqi, fd(pr) changex(neighbors 0 1)
    simqi, fd(pr) changex(self 0 1)

    simqi, fd(pr) changex(yob 1950 1980)
    simqi, fd(pr) changex(yob 1960 1980 neighbors 0 1)

    **** Create Histograms of The Predicted Change of "Being Watched" ****
    setx hawthorne 0
    simqi, genpr(predval)

    setx hawthorne 1
    simqi, genpr(predval1)

    * difference is taken as (hawthorne = 0) minus (hawthorne = 1)
    gen preddiff = predval - predval1
    hist preddiff

Graphs of Clarify Objects

Full disclosure: I am a really uninformed Stata programmer. There are a few resources that people have written to create graphs of Clarify objects in Stata. These would be of the flavor of what I demonstrated last week in R's Zelig. From what I see, there is a set of .do files that you use after estimating your Clarify object and an .ado (?) file that you use to modify the canned Clarify routines. The link to this is HERE.

Figure 2: R output from a Zelig model. Plot title: Density of First Differences in Min GeoDesic Distance. x-axis: P(Y=j|X), Estimate of First Difference. y-axis: Density. Legend: 1 -> 0 deg, 2 -> 1 deg, 3 -> 2 deg, 4 -> 3 deg, 5 -> 4 deg, 6 -> 5 deg.
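Short of those add-on files, one low-tech route is to graph the simulated quantities directly, since the genpr() option of simqi stores them as ordinary variables. What follows is a minimal sketch that uses only built-in Stata commands, not the Clarify graphing routines mentioned above. It assumes the replication code has already been run, so that predval, predval1 and preddiff exist. The axis titles and the output file name are placeholders of mine.

```stata
* Median and a 95% percentile interval of the simulated difference
centile preddiff, centile(2.5 50 97.5)

* Overlay the densities of the two sets of simulated probabilities
twoway (kdensity predval) (kdensity predval1), ///
    legend(order(1 "hawthorne = 0" 2 "hawthorne = 1")) ///
    xtitle("Simulated Pr(voted = 1)") ytitle("Density")

* Density of the simulated difference, written to disk
* (file name is a placeholder)
kdensity preddiff, xtitle("predval - predval1") title("Simulated difference")
graph export "preddiff_density.png", replace
```

Because preddiff was built as predval minus predval1, negative values here mean the Hawthorne mailing raised the simulated probability of voting.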