Task Force on Student Learning Assessment Report

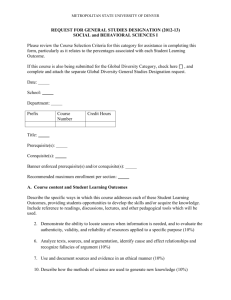

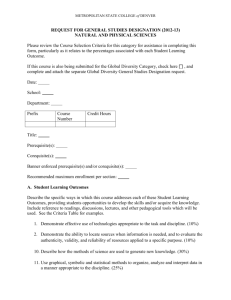

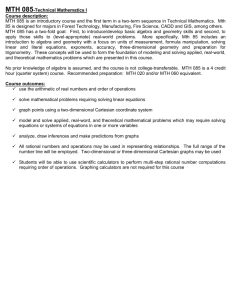
