Differentiating Models of Associative Learning

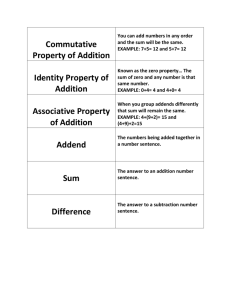

Differentiating Models of Associative Learning: Reorientation, Superconditioning, and the Role of Inhibition

Brian Dupuis (bdupuis@ualberta.ca)
Michael R.W. Dawson (mdawson@ualberta.ca)
Department of Psychology, University of Alberta
Edmonton, Alberta, Canada

Keywords: Rescorla–Wagner model; artificial neural networks; operant choice; reorientation; superconditioning.

The Miller-Shettleworth Model

One broad class of models of associative learning, based on Rescorla and Wagner’s (1972) original model, views stimuli as collections of cues that compete with each other for associative strength. Miller and Shettleworth (2007, 2008) attempted to apply a variation of this model to the field of spatial learning. Their model attempts to capture the role of choice behavior in spatial navigation tasks – specifically, how agents estimate the likelihood of reinforcement based on their experience, and how their choices are influenced by these estimates. They observe, correctly, that this makes such tasks exercises in operant conditioning, while the well-established Rescorla-Wagner model captures classical conditioning. In their model, Miller and Shettleworth multiply the Rescorla-Wagner equation by a “probability” term – a ratio of the associative strengths of a single choice’s cues to the total associative strength of all cues at all possible choices. However, this produces a model that is empirically and mathematically flawed (Dupuis & Dawson, in press). Here, we describe these empirical and formal flaws, and supply an alternative associative model for investigating these tasks.

Empirical Shortcomings

The Miller-Shettleworth model’s flaws are evident when one investigates how it behaves in a pair of spatial tasks.

Reorientation

A ‘reorientation task’ is a common experimental paradigm, used to explore spatial and geometric learning, where agents are placed inside a controlled arena – typically rectangular, with an assorted set of feature information or landmarks such as colored panels over the corners. The subjects must learn which locations are or are not reinforced. Systematic change to this arena after training produces regular effects, most famously “rotational error”. If the colored panel is moved from the reinforced corner to a different corner, agents will follow it, but they will also return to its original corner… as well as the corner rotationally opposite (which has walls in the same configuration as the original corner). In an associative context, each location is defined as the collection of cues present at that location – the geometric configuration of the walls, the type of features present, and so on. Rotational error is explained as a response to locations with the correct geometric configuration in absence of the correct feature.

Although developed with reorientation in mind, the Miller-Shettleworth model shows signs of a serious mathematical mistake when tested on this task. At high learning rates, the model predicts dramatic fluctuations in associative strength (Figure 1) and in choice probabilities, eventually culminating in a global divide-by-zero error.

Figure 1: The Miller-Shettleworth model’s associative strength on a reorientation task at a high (0.7) learning rate.

Superconditioning

Superconditioning arises when an excitatory cue paired with an inhibitor produces greater excitation during further training than it produces when paired with a neutral cue. This is a prediction of the Rescorla-Wagner (1972) model and is well-established in animal experimental literature. A recent experiment (Horne & Pearce, 2010) established that this effect also occurs in spatial learning – that is, rats trained with an inhibitory feature responded to geometry-only probe trials with greater probability than rats trained with a neutral feature. However, when the Miller-Shettleworth (2008) model attempts to model this effect, it predicts the opposite result (experimental 0.91, control 0.94). Horne and Pearce observed that the model was not assigning sufficient inhibitory associative strength to cues that are present at both reinforced and non-reinforced locations.

Why Does It Fail?

This weakness in handling inhibition is indicative of why the Miller-Shettleworth model produces such unusual results. The root cause lies with their decision to implement operant choice by scaling the Rescorla-Wagner equation. The Rescorla-Wagner model includes an implicit measure of the time that passes with each iteration of the equation within its learning rate parameter – a term that is held constant (and thus suppressed), because to do otherwise would “beg justification” (Rescorla & Wagner, 1972). When one calculates the change in associative strength as this change in time approaches zero, the Rescorla-Wagner equation produces the instantaneous time derivative of associative strength.

Miller and Shettleworth (2007, 2008) multiply the Rescorla-Wagner equation by some “probability” ratio. The problem is that both of these equations are functions of current associative strength – meaning both equations contain a (suppressed) time term. In order to correctly control for this additional time dependency, the chain rule must be applied to the equation or the form of the derivative will change. Miller and Shettleworth did not do this. In effect, this uncontrolled time dependency is equivalent to allowing the learning rate to vary independently for each type of cue, with none of the justification that Rescorla and Wagner “beg”.

Solution: The Operant Perceptron

In light of these empirical and mathematical results, we must recommend that the Miller-Shettleworth model be abandoned. However, this need not spell the end for associative theory in exploring these areas. An alternative model – a simple artificial neural network known as a perceptron – has been shown to successfully model many of the standard reorientation task results (Dawson, Kelly, Spetch, & Dupuis, 2010), even though it employs a classical-conditioning training algorithm. This perceptron is presented a pattern of cues representing a location in a reorientation arena, which is then sent through weighted connections to an output unit. This output unit sums the weighted signals together and responds with the logistic function of this value; mistakes in this response are then used to modify the connection weights using a gradient-descent learning rule based on the Rescorla-Wagner equation.

A simple modification to this algorithm to capture the probabilistic choice behavior in operant conditioning produces an “operant perceptron”. After a location’s cues are presented, the operant perceptron generates its logistic output – a number that must fall between 0 and 1. This has been shown to literally be the estimate of the conditional probability of reinforcement given the pattern of cues (Dawson & Dupuis, 2012). Therefore, this output response is used as the probability of the operant perceptron investigating a location. If it does not investigate a location, its weights are not changed – as in operant conditioning, the operant perceptron only learns from its experience.

A critical difference between the operant perceptron and the Miller-Shettleworth model is that the latter applies a scaled Rescorla-Wagner equation with every iteration, while the former applies a normal, unscaled Rescorla-Wagner-style equation on some subset of iterations. This allows the operant perceptron to bypass the calculus error described above. Furthermore, the operant perceptron generates conditional probabilities for each option independently (that is, it is not normalized), which has important theoretical implications.

Empirical Robustness

When tested on the same reorientation task illustrated above, the operant perceptron converges upon the expected solution at low (0.15), high (0.7), and extremely high (1.0) learning rates, illustrating that it does not succumb to the scaling problems seen in the Miller-Shettleworth model. (A discussion of perceptrons and reorientation is found in Dawson et al. (2010).)

Presenting Horne and Pearce’s (2010) superconditioning experiment to the operant perceptron leads to a prediction consistent with their animal experiments (experimental 0.998, control 0.970). Examining the operant perceptron’s behavior over time shows that it assigns substantially more inhibitory associative strength to partially-reinforced cues than the Miller-Shettleworth model – a result of applying the full learning rule at some frequency rather than a scaled rule.

References

Dawson, M. R. W., & Dupuis, B. (2012). The equilibria of perceptrons for simple contingency problems. IEEE Transactions on Neural Networks and Learning Systems, 23(8), 1340–1344. doi:10.1109/TNNLS.2012.2199766

Dawson, M. R. W., Kelly, D. M., Spetch, M. L., & Dupuis, B. (2010). Using perceptrons to explore the reorientation task. Cognition, 114(2), 207–226. doi:10.1016/j.cognition.2009.09.006

Dupuis, B., & Dawson, M. R. W. (in press). Differentiating Models of Associative Learning: Reorientation, Superconditioning, and the Role of Inhibition. Journal of Experimental Psychology: Animal Behavior Processes (36 pages, accepted February 4, 2013).

Horne, M. R., & Pearce, J. M. (2010). Conditioned inhibition and superconditioning in an environment with a distinctive shape. Journal of Experimental Psychology: Animal Behavior Processes, 36(3), 381–394. doi:10.1037/a0017837

Miller, N. Y., & Shettleworth, S. J. (2007). Learning about environmental geometry: An associative model. Journal of Experimental Psychology: Animal Behavior Processes, 33(3), 191–212. doi:10.1037/0097-7403.33.3.191

Miller, N. Y., & Shettleworth, S. J. (2008). An associative model of geometry learning: a modified choice rule. Journal of Experimental Psychology: Animal Behavior Processes, 34(3), 419–422. doi:10.1037/0097-7403.34.3.419

Rescorla, R. A., & Wagner, A. R. (1972). A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In A. H. Black & W. F. Prokasy (Eds.), Classical conditioning II: current research and theory (pp. 64–99). New York, NY: Appleton-Century-Crofts.
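Illustrative sketch: the operant perceptron

The procedure described under "Solution: The Operant Perceptron" can be illustrated with a short Python sketch. It is not the authors' implementation. The function names, the three-cue coding of the arena (two wall geometries plus one feature panel), the zero initial weights, the fixed presentation order, and the absence of a bias unit are all assumptions made for illustration. The weight update is a plain delta rule (error times cue activity); the text only says the rule is a gradient-descent rule based on the Rescorla-Wagner equation, so any additional derivative factor the authors may use is omitted here.

```python
import math
import random


def respond(weights, cues):
    """Logistic output for one location, read as the estimated
    probability of reinforcement given the cues present there."""
    net = sum(w * c for w, c in zip(weights, cues))
    return 1.0 / (1.0 + math.exp(-net))


def train_operant_perceptron(locations, rewards, rate=0.15, sweeps=2000, seed=0):
    """Train on a list of cue patterns (hypothetical coding, see below).

    On each presentation the network investigates the location with
    probability equal to its own output. Weights change only when the
    location is investigated, using an unscaled delta-rule update.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(locations[0])  # assumption: start from zero
    for _ in range(sweeps):
        for cues, reward in zip(locations, rewards):
            p = respond(weights, cues)
            if rng.random() >= p:
                continue  # not investigated: no experience, no learning
            error = reward - p
            for i, cue in enumerate(cues):
                weights[i] += rate * error * cue
    return weights


if __name__ == "__main__":
    # Hypothetical rectangular arena; cue order is
    # [geometry A, geometry B, feature panel].
    corners = [
        [1, 0, 1],  # reinforced corner: geometry A plus the feature
        [0, 1, 0],  # adjacent corner: geometry B
        [1, 0, 0],  # rotationally opposite corner: geometry A only
        [0, 1, 0],  # remaining corner: geometry B
    ]
    rewards = [1, 0, 0, 0]
    weights = train_operant_perceptron(corners, rewards)
    for cues in corners:
        print(cues, round(respond(weights, cues), 3))
```

Because the output itself gates whether learning happens, locations the network has come to avoid are sampled less and less often, so their weights change slowly. Each location's probability is computed on its own and is never divided by a total over locations, which is the unnormalized property the text points to.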
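Illustrative note: the scaled update as a varying learning rate

The argument under "Why Does It Fail?" can be made concrete with a short worked form. The first line is the standard Rescorla-Wagner update. The second is only one reading of the verbal description given in the text (the Rescorla-Wagner equation multiplied by a ratio of one location's associative strength to the total over all locations); the symbols V_L and P_L are notation assumed here and may not match Miller and Shettleworth's own.

```latex
% Standard Rescorla-Wagner update for cue X on a single trial
\Delta V_X = \alpha_X \beta \Bigl( \lambda - \sum_{i \in \text{present}} V_i \Bigr)

% Assumed notation: V_L is the summed strength of the cues at location L,
% and P_L is the "probability" ratio described in the text
V_L = \sum_{i \in L} V_i , \qquad
P_L = \frac{V_L}{\sum_{j} V_j}

% Scaled update, regrouped to expose the effective learning rate
\Delta V_X = \alpha_X \beta \, P_L \, ( \lambda - V_L )
           = \underbrace{\bigl( \alpha_X \beta \, P_L \bigr)}_{\text{effective learning rate}} \, ( \lambda - V_L )
```

Under this reading, P_L changes from trial to trial because it depends on the current associative strengths, so the effective learning rate is no longer constant. The ratio is also undefined whenever the total in its denominator reaches zero, which is consistent with the divide-by-zero behavior reported for high learning rates.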