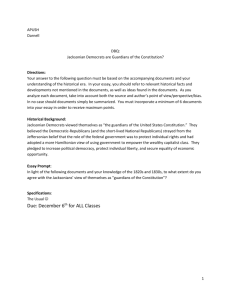

AP US History Reader: Columbian Exchange & Exploration
