STAT301 Solutions 2


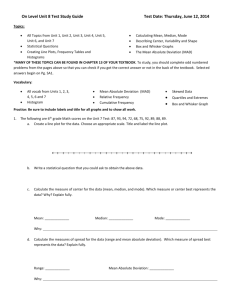

(1a) (i) I count the number of words on a page. On seven pages the word counts are 100, 200, 500, 600, 800, 1300, 1600. What are the mean and standard deviation of this data set?

The mean is 728.5714 and the standard deviation is 552.9144.

Transform the above data using the z-score $Z = \frac{X - \bar{X}}{\text{s.d.}}$. After making this transformation you should have a new data set, also of size 7. Evaluate the mean and standard deviation of this new data set.

The new data set is −1.1368, −0.9560, −0.4134, −0.2325, 0.1292, 1.0335, 1.5761. The mean of this transformed data is zero and the standard deviation is one.

(ii) I measure the length of 7 ants; the data are 0.01, 0.015, 0.016, 0.02, 0.024, 0.027, 0.03. What are the mean and standard deviation of this data set? Transform the above data using the z-score $Z = \frac{X - \bar{X}}{\text{s.d.}}$. After making this transformation you should have a new data set, also of size 7. Evaluate the mean and standard deviation of this new data set.

The mean is 0.0203 and the standard deviation is 0.0071 (to four decimal places). The new data set is −1.4416, −0.7408, −0.6007, −0.0400, 0.5206, 0.9411, 1.3615. The mean of this transformed data is zero and the standard deviation is one.

(b) What happens to the mean and standard deviation of both transformed data sets?

Although the spreads of the two original data sets are very different, after the z-transform both data sets have mean zero and standard deviation one. We can also see the difference by looking at the numbers. Before transformation, the values in (i) are large and widely spread, whereas the values in (ii) are all very small. After the z-transform, both data sets take values mainly in [−2, 2].

(2) Using the instructions in HW1, load the yearly temperature data into Statcrunch. This temperature is in Celsius.
Using the class notes, convert the temperature into Fahrenheit (in Statcrunch go to transform data, then put the expression 32 + (9/5) × global in the top bar; include the global variable by highlighting it and pressing add column, then press compute. This should give you a new column with the global temperature in Fahrenheit). Make relative frequency histogram plots and summary statistics (both mean and standard deviation) of the temperature in both Celsius and Fahrenheit (you don't need to use the same x-axis for both plots in this question).

(i) What is the relationship between the means and standard deviations in Celsius and Fahrenheit?

For Celsius and Fahrenheit the summary statistics are:

| | Celsius | Fahrenheit |
|---|---|---|
| mean | −0.183 | 31.67 |
| standard deviation (as reported) | 0.0527 | 0.1709 |
| First Quartile | −0.334 | 31.39 |
| Third Quartile | −0.023 | 31.958 |

It is clear that Fahrenheit mean $= 32 + \frac{9}{5} \times (-0.183) \approx 31.67$ and Fahrenheit standard deviation $= \frac{9}{5} \times$ Celsius standard deviation, which confirms the relationship between the data and the transformed data. Note that the two reported spread values satisfy $0.1709 \approx \left(\frac{9}{5}\right)^2 \times 0.0527$, which is how a variance scales, so they appear to be variances; the corresponding standard deviations, $\sqrt{0.0527} \approx 0.2296$ and $\sqrt{0.1709} \approx 0.4134$, differ by the factor $\frac{9}{5}$. The quartiles follow the same linear rule as the mean, for example $32 + \frac{9}{5} \times (-0.023) \approx 31.958$.

(ii) If you use Statcrunch's default binwidth, the shapes of the relative frequency plots in Celsius and Fahrenheit will look different. However, in class we said that a linear transformation should preserve the shape. Can you give an explanation as to why the histograms you have made have different shapes (hint: count the number of bins in both histograms)?

The histograms for Celsius and Fahrenheit using the default binwidths are shown in Figure 1.

Figure 1: The left plot is the histogram in Celsius, the right plot is the histogram in Fahrenheit.

However, the Fahrenheit histogram with a slightly narrower binwidth is shown in Figure 2, and this figure is closer in shape to the Celsius plot. Thus the difference between the plots is due to the default binwidths that are being used (one cannot expect a plot with 5 bins to look the same as one with 7 bins).

Figure 2: The Fahrenheit histogram using 7 bins rather than 5.
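The z-score in question (1) and the Celsius to Fahrenheit conversion in question (2) are both linear transformations, so the same check applies to each. The following is a minimal Python sketch (not part of the Statcrunch instructions) that recomputes the question (1) values and verifies the mean and standard deviation rules. The helper names `z_scores` and `linear_transform` are illustrative, and the `celsius` list is a made-up sample, not the homework temperature data.

```python
from statistics import mean, stdev  # stdev uses the n - 1 (sample) formula


def z_scores(data):
    """Standardise data: subtract the mean, divide by the sample s.d."""
    m, s = mean(data), stdev(data)
    return [(x - m) / s for x in data]


def linear_transform(data, a, b):
    """Return a + b * x for every x in data."""
    return [a + b * x for x in data]


# Data from question (1).
words = [100, 200, 500, 600, 800, 1300, 1600]
ants = [0.01, 0.015, 0.016, 0.02, 0.024, 0.027, 0.03]

for name, data in (("words", words), ("ants", ants)):
    z = z_scores(data)
    print(name, "mean:", round(mean(data), 4), "s.d.:", round(stdev(data), 4))
    print("  z-scores:", [round(v, 4) for v in z])
    print("  z mean:", round(mean(z), 6), "z s.d.:", round(stdev(z), 6))

# Hypothetical Celsius values (an assumption, for illustration only).
celsius = [-0.40, -0.25, -0.10, 0.05, 0.20]
fahrenheit = linear_transform(celsius, 32, 9 / 5)

# The mean follows the full rule a + b * mean; the s.d. is only scaled by b.
print("F mean:", mean(fahrenheit), "vs", 32 + 9 / 5 * mean(celsius))
print("F s.d.:", stdev(fahrenheit), "vs", 9 / 5 * stdev(celsius))
```

Adding the constant 32 shifts every observation by the same amount, so it changes the mean but leaves the spread untouched; only the multiplier 9/5 affects the standard deviation.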
(3) Using the instructions in HW1, load the calf weight data (see the link next to this homework) into Statcrunch (make sure the delimiter is set to comma when uploading the data). Make a relative frequency histogram of the calf weight data at birth (that is column Wt 0) and overlay it with a normal density (in Statcrunch, on the third page of the histogram options, choose normal under overlay density). You should have a relative frequency histogram with a normal density plot over the top.

(i) Do you think the calf weights at birth are close to normally distributed? What are the mean and standard deviation of the calf weights at birth?

There is not a great match, but it does seem plausible. The mean is 93.2 pounds and the standard deviation is 7.78 pounds.

(ii) Let us assume that the normal plot overlaying the histogram (note that the mean and standard deviation are calculated from the data) is the true density plot for the distribution of calf weights at birth. Using this normal density, calculate the probability that a newborn calf weighs more than 100 pounds.

The normal distribution used has mean 93.2 and standard deviation 7.78. First we assume that the weights are normally distributed. Under this assumption, we start with the chance that a randomly selected calf weighs less than 100 pounds, for which we need to make a z-transform (and a plot):

$Z = \frac{100 - 93.2}{7.78} = 0.874$.

Looking this up in the normal tables (look from outside in) we have 0.81. Thus, given that the weights of calves at birth are normally distributed with mean 93.2 pounds and standard deviation 7.78 pounds, there is an 81% chance that a randomly selected calf weighs less than 100 pounds. The chance that it weighs more than 100 pounds is therefore 1 − 0.81 = 0.19, that is 19%.

(4) Suppose that the weight of hogs is normally distributed with mean 250 pounds and standard deviation 20 pounds. Calculate the probability that a randomly selected hog weighs between 240 and 290 pounds.

We start by making a z-transform of the weights:

$240 \to z = \frac{240 - 250}{20} = -0.5$

$290 \to z = \frac{290 - 250}{20} = 2$.

The probability we are after is the area between [−0.5, 2] on the standard normal curve.
Looking up the tables, the area less than 2 is 0.977 and the area less than −0.5 is 0.308. Thus the area between −0.5 and 2 is 0.977 − 0.308 = 0.669, and the chance of a hog's weight lying between 240 and 290 pounds is 66.9%.

(5) The weight of jersey cows is normally distributed with mean 300 pounds and standard deviation 25 pounds, whereas the weight of highland cows is normally distributed with mean 380 pounds and standard deviation 40 pounds. A farmer has a jersey cow that weighs 320 pounds and a highland cow that weighs 400 pounds. Which cow is heavier relative to her breed (in other words, which cow is in the higher percentile)?

Of course in real terms the highland cow is heavier than the jersey cow, but we can see from the distributions of their respective breeds that this is expected. What we want to see is which cow is in the higher percentile for her breed. To do this we make a z-transform and then look these numbers up in the tables:

$z_J = \frac{320 - 300}{25} = 0.8$

$z_H = \frac{400 - 380}{40} = 0.5$.

Looking up these numbers in the z-tables shows that the jersey cow is at the 78.8th percentile whereas the highland cow is at the 69.1st percentile. Thus, relative to her breed, the jersey cow is heavier than 78.8% of jersey cows and is the heavier cow.

(6) Let us return to Question 4 in homework 1, in particular the relative frequency histograms of both the total number of M&Ms in a bag and the TotalGroup (the 34 averages). In Statcrunch (using the same procedure given in Q2) overlay the default normal densities over both relative frequency histograms. By comparing the relative frequency histograms with the overlaid normal densities, which is more normal: the distribution of the total number of M&Ms in a bag or the distribution of the average number of M&Ms (recall this is the average of 5 bags)?

Neither plot looks very normal.
But the plot of TotalGroup is certainly closer in structure to a normal distribution. Its main features are that it is not bimodal and that its values are concentrated about the mean, whereas the histogram of the number of M&Ms is clearly far from normal; indeed it is bimodal (has two peaks).

Figure 3: The top plot is the histogram of the number of M&Ms in a bag. The lower plot is a histogram of the average number of M&Ms in a bag, where the average is taken over 5 bags.
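The normal-table lookups in questions (3) to (5) can be cross-checked numerically. The sketch below uses `NormalDist` from Python's standard `statistics` module (available from Python 3.8) in place of printed tables; it is one possible check, not the method used in class, and the variable names are illustrative. Small differences from the table values come from rounding z to two decimal places in the tables.

```python
from statistics import NormalDist

std_normal = NormalDist()  # standard normal: mean 0, standard deviation 1

# Question (3): calf weights assumed normal, mean 93.2, s.d. 7.78.
z_calf = (100 - 93.2) / 7.78
p_calf_less = std_normal.cdf(z_calf)   # area to the left of z
p_calf_more = 1 - p_calf_less          # area to the right of z

# Question (4): hog weights normal, mean 250, s.d. 20.
z_low = (240 - 250) / 20
z_high = (290 - 250) / 20
p_hog_between = std_normal.cdf(z_high) - std_normal.cdf(z_low)

# Question (5): compare each cow with her own breed.
z_jersey = (320 - 300) / 25
z_highland = (400 - 380) / 40
pct_jersey = std_normal.cdf(z_jersey)
pct_highland = std_normal.cdf(z_highland)

print("calf: z =", round(z_calf, 3),
      "P(<100) =", round(p_calf_less, 3),
      "P(>100) =", round(p_calf_more, 3))
print("hog: P(240 < X < 290) =", round(p_hog_between, 3))
print("jersey percentile:", round(100 * pct_jersey, 1))
print("highland percentile:", round(100 * pct_highland, 1))
```

Working on the standard normal scale mirrors the z-transform steps in the solutions; calling `NormalDist(93.2, 7.78).cdf(100)` directly would give the same area without standardising first.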