Dual Attributes for Face Verification Robust to Facial Cosmetics


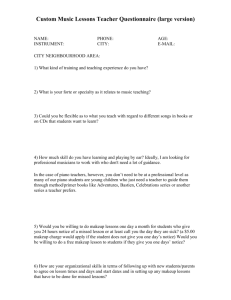

Lingyun Wen and Guodong Guo*, Senior Member, IEEE

Abstract: Recent studies have shown that facial cosmetics influence face recognition. This raises a question: can we develop a face recognition system that is invariant to facial cosmetics? To address this problem, we propose a method called dual-attributes for face verification, which is robust to facial appearance changes caused by cosmetics or makeup. Attribute-based methods have been applied successfully to several computer vision problems, e.g., object recognition and face verification. However, no previous approach has specifically addressed the problem of facial cosmetics using attributes. Our key idea is that the dual-attributes can be learned from faces with and without cosmetics, separately. The shared attributes can then be used to measure facial similarity irrespective of cosmetic changes. In essence, dual-attributes are capable of matching faces with and without cosmetics at a semantic level, rather than by direct matching with low-level features. To validate the idea, we assemble a database containing about 500 individuals with and without cosmetics. Experimental results show that our dual-attributes based approach is quite robust for face verification. Moreover, the dual attributes are very useful for discovering the makeup effect on facial identities at a semantic level.

Index Terms: Dual attributes, face authentication, face verification, facial cosmetics, robust system, semantic-level matching.

I. INTRODUCTION

It is quite common for women to wear cosmetics to hide facial flaws and appear more attractive. Archaeological evidence of cosmetics dates back at least to ancient Egypt and Greece [1], [5]. The improved attractiveness using cosmetics has been studied in [13], [8]. Facial cosmetics or makeup can change the perceived appearance of faces [23], [27], [7].
In human perception and psychology studies [23], [27], it was found that light makeup slightly helps recognition, while heavy makeup significantly decreases the human ability to recognize faces. On the computational side, the impact of facial makeup on face recognition was presented very recently [7]. There are eight aspects of facial makeup that affect the perceived appearance of a female face [7]: facial shape, nose shape and size, mouth size and contrast, form, color and location of eyebrows, shape, size and contrast of the eyes, dark circles underneath the eyes, and skin quality and color. It was also shown that facial makeup can significantly change the facial appearance, both locally and globally [7]. Existing face matching methods based on contrast and texture information can be affected by the application of facial makeup [7].

Manuscript received January 25, 2013. This work was supported in part by a Center for Identification Technology Research (CITeR) grant. L. Wen and G. Guo are with the Lane Department of Computer Science and Electrical Engineering, West Virginia University, Morgantown, WV, 26506, USA. E-mail: lwen@mix.wvu.edu, guodong.guo@mail.wvu.edu. (Corresponding author: G. Guo, phone: 304-293-9143.)

Is it possible to develop a face recognition system that is invariant or insensitive to facial cosmetics? To answer this question, we study how to develop a method that is robust to facial changes caused by facial cosmetics or makeup. Previous face recognition research includes a number of studies that address various sources of variation, such as pose [18], [24], illumination [12], expression [19], [4], and resolution [24]. However, few previous works deal with the influence of cosmetics on face recognition. In this paper, we propose a method called dual-attributes for face verification, which is robust to facial appearance changes caused by cosmetics or makeup.
Our key idea is that the dual-attributes can be learned from faces with and without cosmetics, separately. The shared attributes can then be used to measure facial similarity irrespective of cosmetic changes. In essence, dual-attributes are capable of matching faces with and without cosmetics at a semantic level, rather than by direct matching with low-level features. Attribute-based methods have been applied successfully to several computer vision problems, e.g., object recognition and face verification [17], [11], [15]. However, no previous approach has specifically addressed the problem of facial cosmetics using attributes.

Attributes are a semantic-level description of visual traits [17], [11]. For example, a horse can be described as four-legged, a mammal, able to run, able to jump, etc. A nice property of using attributes for object recognition is that the basic attributes might be learned from other objects, and shared among different categories of objects [10]. Facial attributes [15] are a semantic-level description of visual traits in faces, such as big eyes or a pointed chin. Kumar et al. [15], [16] showed that robust face verification can be achieved using facial attributes, even if the face images are collected from uncontrolled environments on the Internet. A set of binary attribute classifiers is learned to recognize the presence or absence of each attribute. The set of attributes can then be combined to measure the similarity between a pair of faces for verification [15]. Parikh et al. proposed relative attributes [22], which emphasize the strength of an attribute in an image with respect to other images. For instance, the approach in [22] can predict that person C is smiling more than person A but less than person B. The method can define richer textual descriptions for a new image. Facial attributes can also be used for face image ranking and search [14], [25].
The query can be given by semantic facial attributes, e.g., a man with a moustache, rather than just a query image.

Here we propose a method called dual-attributes to deal with face verification in the presence of facial cosmetics or makeup. Previous facial attribute methods [14], [25], [15], [16], [22] have not addressed the problem of facial makeup in face recognition. Our main contributions include: (1) a method called dual-attributes is proposed to reduce the influence of facial cosmetics in face verification; and (2) a database of about 500 female individuals is collected to facilitate the study of facial makeup on face verification. Our approach is illustrated in Figure 1.

Fig. 1. Illustration of our dual-attributes approach to face verification. Two sets of attributes are learned in face images with and without cosmetics, separately. In testing, the pair of query faces undergo two different groups of attribute classifiers, and the shared attributes are used to measure the similarity between the pair of faces at a semantic level, rather than by a direct match with low-level features.

In the remainder of the paper, we present our dual-attributes method in Section II. The database description, experimental setup and results are given in Section III. Finally, we draw conclusions.

II. DUAL ATTRIBUTES FOR FACE MATCHING

Given the recent studies showing that facial cosmetics influence face recognition [23], [27], [7], it will be helpful to develop a face recognition system that is robust to facial cosmetics. In this paper, we use dual-attributes for face verification, focusing on reducing the influence of facial makeup on face matching.

Attributes are visual properties of an object or face which can be described semantically [17], [11], [15], [22]. Kumar et al. [15] proposed a set of facial attributes for face verification, and found that the attribute-based method can work successfully even with significant changes of pose, illumination, expression, and other imaging conditions. Building on Kumar et al.’s work, we study the influence of cosmetics on attributes, and develop a new method called dual-attributes for robust face verification.

In this section, we first introduce the attributes that are used to describe the visual appearance of female faces. Then we present the details of how to compute the dual-attributes. Finally, we describe face verification using dual-attributes to deal with facial cosmetics in face matching.

A. Attributes

We use 28 attributes to describe female faces with and without cosmetics. Since our focus is on reducing the facial makeup effect on face recognition, we do not consider some groups of people, such as children or men, so there is no attribute related to children or males. The list of the 28 attributes is given in Table I. Some examples of these attributes are shown in Figure 2.

The attributes used in our approach are learned from face images with and without cosmetics, separately. As shown in Figure 2, we can visually check the facial appearance differences between faces with and without cosmetics. To better describe female faces with and without cosmetics, we use more detailed attributes than those used in [15], [14], [25], none of which specifically considers facial cosmetics for face recognition. For example, in [25] and [14], only the attribute of eye glasses is used to describe the characteristics of eyes, while only the attributes of eye width, eyes open, and glasses are used in [15]. In our approach, more detailed attributes are used, such as blue eyes, black eyes, double-fold eyelids, slanty eyes, and eye crows feet, which capture more details related to female beauty and facial cosmetics.
From Figure 2, one can get an intuitive sense of how the appearance of the same attribute varies between non-makeup and makeup faces. To quantify the differences, it might be necessary to learn the attribute classifiers separately for the makeup and non-makeup face images. Therefore, we propose the dual-attributes classifiers, which are presented next.

TABLE I
LIST OF THE 28 ATTRIBUTES USED IN OUR APPROACH.

Asian, Attractive, Big eyes, Big nose, Black eyes, Blue eyes, Double-fold eyelids, Eye bag, Eye crows feet, Eyebrow long, Eyebrow thickness, Eyebrow wave, Full bang, Half bang, High nose, Lip line, Nose middle heave, No bang, Open nose, Philtrum, Pointed chin, Red cheek, Small eye distance, Slanty eyes, Square jaw, Thick mouth, Wide cheek, White

B. Dual-Attributes Classifiers

To address the visual appearance differences between faces with and without makeup in attribute learning, two classifiers are learned for each attribute: one on faces with makeup, and the other on faces without makeup. They are called dual-attributes classifiers. For the $i$-th attribute, the two classifiers are denoted as $NMC_i$ and $MC_i$, for non-makeup and makeup faces, respectively.

Fig. 2. Example face images for some attributes. Faces in the same row are the positive and negative examples for a given attribute label. From left to right: positive non-makeup face, positive makeup face, negative non-makeup face, and negative makeup face.

Fig. 3. Local patches in faces for dual-attributes learning.

In Section II-A, we introduced 28 attributes. Three attributes among the 28 are related to color: blue eyes, black eyes, and red cheek. The R, G, and B channels of an image (using local patches) are combined to form the color feature. For the other attributes, visual features that can characterize local shape or texture are used, e.g., the histogram of oriented gradient (HOG) [6] features can be used to represent the local patches shown in Figure 3.
We can compare the use of dual attributes with direct feature matching using the same low-level features, to show the benefit of using the dual attributes. Note that our emphasis is the concept of dual attributes rather than the low-level features.

In Figure 3, 12 local patches are illustrated, which are used to learn the attributes in faces. For example, attributes related to eyes use features mainly extracted from the eye patches. Each attribute classifier is trained using combined color and other features.

We emphasize the separation between makeup and non-makeup faces in learning the attribute classifiers. So, the attribute vector for the group of makeup faces is $MC(I) = \langle MC_1, MC_2, \cdots, MC_n \rangle$, while the attribute vector for the group of non-makeup faces is $NMC(I) = \langle NMC_1, NMC_2, \cdots, NMC_n \rangle$, where $n$ is the number of attributes and $I$ is the index of the $I$-th individual.

To develop an automated method for dual-attributes learning, we use an automated detector for face and facial feature detection [29], [9]. Nine facial points are detected automatically. Then all face images are normalized according to the positions of the two eyes, and the whole face is divided into patches according to these facial point locations. Different features can be extracted automatically from the local patches, as shown in Figure 3. In our dual-attributes classifier learning, support vector machines (SVMs) with the RBF kernel [28] are used.

C. Face Verification with Dual-Attributes

The learned dual-attributes can be combined for face matching. Note that this matching is on the semantic level represented by the attributes, rather than using low-level facial features for direct matching. In our dual-attributes approach, the attributes for a makeup face are detected using the attribute classifiers learned from makeup faces, while the attributes for a non-makeup face are obtained using the classifiers learned from non-makeup faces.
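The routing described above, where each face is scored only by the classifier bank of its own group, can be sketched as follows. This is a minimal illustration, not the authors' implementation: `attribute_vector` and `linear_stub` are hypothetical names, the stub is a simple linear scorer standing in for a trained RBF-kernel SVM, and the toy weights and features are made up.

```python
from typing import Callable, List, Sequence

# A classifier maps a feature vector to a real-valued score
# (for a trained SVM this would be a signed decision value).
Classifier = Callable[[Sequence[float]], float]


def attribute_vector(
    features: Sequence[float],
    is_makeup: bool,
    makeup_clfs: List[Classifier],      # MC_1 .. MC_n
    non_makeup_clfs: List[Classifier],  # NMC_1 .. NMC_n
) -> List[float]:
    """Score a face with the classifier bank that matches its group."""
    bank = makeup_clfs if is_makeup else non_makeup_clfs
    return [clf(features) for clf in bank]


def linear_stub(weights: Sequence[float], bias: float) -> Classifier:
    """Hypothetical stand-in for a trained attribute classifier (not an SVM)."""
    return lambda x: sum(w * v for w, v in zip(weights, x)) + bias


# Toy setup: n = 2 attributes, 3-dimensional features (all values made up).
makeup_clfs = [linear_stub([1.0, 0.0, 0.0], -0.5), linear_stub([0.0, 1.0, 0.0], 0.0)]
non_makeup_clfs = [linear_stub([0.5, 0.5, 0.0], 0.0), linear_stub([0.0, 0.0, 1.0], -1.0)]

makeup_face = [1.0, 2.0, 3.0]
non_makeup_face = [2.0, 0.0, 1.0]

mc = attribute_vector(makeup_face, True, makeup_clfs, non_makeup_clfs)
nmc = attribute_vector(non_makeup_face, False, makeup_clfs, non_makeup_clfs)

# Concatenate the two attribute vectors of the pair; this joint vector is
# what a verification classifier would take as input.
pair_vector = mc + nmc
```

The point of the design is that a makeup face never passes through a non-makeup classifier, and vice versa; only the resulting attribute scores are compared across groups.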
For face verification, all available attributes extracted from a pair of faces form a vector of values, denoted by $F(I) = \langle MC(I), NMC(I) \rangle$ for individual $I$. An SVM face verification classifier is trained to decide whether two face images show the same woman or not. In [15], the attribute vector is binary with values of either 0 or 1, where 0 means the face does not have the attribute, while 1 means the face has the attribute. In our experiments, we use the distances from the test sample to the SVM hyperplane instead of the binary values, and found that the face verification accuracy can be improved by about two percent over the binary values.

III. EXPERIMENTS

We evaluate our dual-attributes approach to face verification experimentally. The face images are detected and normalized, and the attributes are learned from the makeup and non-makeup faces separately. To verify the influence of facial cosmetics on face verification, we also perform a cross-makeup attribute classification. This provides further evidence of the influence of facial makeup on face analysis, and of the necessity of using dual-attributes to deal with facial cosmetics for face verification.

We first introduce the database that we assembled. Then we compare the performance of attribute classification within the same group of faces and across groups (i.e., from makeup to non-makeup, or vice versa). Finally, face verification is performed using our dual-attributes.

A. Database

To the best of our knowledge, there is no publicly available face database containing both makeup and non-makeup faces. To facilitate this study, we assembled a face database containing 501 pairs of female individuals. Each pair has two face images, one with makeup and the other without. All face images are collected from the Internet with text information about makeup or non-makeup. So the labels of makeup and non-makeup, and the facial identities, are collected together with the face images from the Internet, rather than labeled afterward.
To the best of our knowledge, this is the first database of its kind. Some example face images from our database are shown in Figure 4. From these examples, we can see that there are also some other variations in addition to makeup, e.g., expression, pose, occlusion, lighting change, etc. In this study, we focus on the cosmetics for face verification.

Fig. 4. Some examples of face pairs without (left) and with (right) cosmetics in the database.

We also asked five human subjects to manually label the facial attributes. The participants discussed each attribute label with each other to check for inconsistencies. If the participants could not determine an attribute label, or could not reach agreement, the label was discarded. Finally, we obtained 28 attributes agreed upon by the participants, which are used as the ground truth attribute labels for our experiments.

B. Within-Group and Cross-Group Attribute Classifications

We evaluate the dual-attributes classification results and then combine the attributes for face verification. In examining the dual-attributes, we also perform a cross-group attribute classification with two purposes: (1) investigating the influence of facial cosmetics on attribute classification; and (2) justifying the necessity of using dual-attributes. Here we have two groups: one contains makeup faces, and the other contains non-makeup faces. In other words, each individual has two face images in our database, one in the makeup group and the other in the non-makeup group. When both learning and testing are in the same group, it is called within-group attribute classification. When training and testing are in two different groups, e.g., from makeup to non-makeup, or vice versa, it is called cross-group or cross-makeup attribute classification.

We randomly divide the individuals into training and testing sets for attribute classification.
Both faces of an individual are placed either in the training set or in the testing set. About 4/5 of the individuals (face pairs) are in the training set, and the remaining 1/5 are used for testing the attribute classification. The testing set is also used for the face verification experiments.

For each attribute, two SVM classifiers are trained, using the two groups of faces, makeup and non-makeup, separately. The attribute classification results are shown in Table II. The first column gives the attribute classification accuracies using non-makeup faces for both training and testing. Please note that there is no overlap of individuals between the training and testing sets. The second column shows the classification results using the same non-makeup faces for testing, but with attribute classifiers trained on makeup faces. The third column, ‘decrease1’, shows the decrease in classification accuracy when the second column is compared to the first column in each row. If the decrease is negative, the accuracy has increased. The remaining three columns have analogous meanings.

To better understand the attribute classification results in the various cases, we perform an accuracy change analysis (ACA). Specifically, we categorize the accuracy changes into four cases: “Small change,” “M-preferred,” “NM-preferred,” and “Big change,” where “M” is for makeup, and “NM” is for non-makeup. When the makeup group is used for training and the cross-group accuracy is higher than the within-group accuracy, the case is categorized as “M-preferred.” “NM-preferred” is defined similarly.

From Table II, one can observe the four cases. Firstly, the attribute classification results belonging to the case of “Small change” include: ‘Big nose’, ‘Nose middle heave’, ‘Philtrum’, ‘Wide Cheek’, and ‘White’. Our interpretation is that facial cosmetics do not change those attributes (mainly related to shape) very much.
In this case, the attribute classification accuracy changes are small when the cross-group accuracies are compared to the within-group accuracies. To tolerate the small estimation errors caused by other variations (pose, expression, illumination, etc.) and to account for the fact that the training database is not big, we use threshold values of -2% and 2% to determine this case. In other words, if the accuracy changes are within the range of -2% to 2%, it is termed a small change.

The second case is called “M-preferred,” which means the cross-group accuracy is increased when the makeup group is used for training attribute classifiers. In this case, the attribute class includes: ‘High nose’, ‘Lip line’, and ‘Asian’. Our interpretation is that facial makeup enhances the classification accuracy for those attribute classes.

TABLE II
ATTRIBUTE CLASSIFICATION RESULTS FOR BOTH WITHIN-GROUP AND CROSS-GROUP CASES. WE USE “A → B” TO INDICATE THAT ‘A’ IS FOR TRAINING, WHILE ‘B’ IS FOR TESTING. THE TWO GROUPS ARE ‘MAKEUP’ AND ‘NON-MAKEUP’ FACES. THE COLUMN ‘DECREASE1’ SHOWS ACCURACY DECREASE WHEN THE TRAINING GROUP IS CHANGED TO MAKEUP. THE COLUMN ‘DECREASE2’ SHOWS ACCURACY DECREASE WHEN THE TRAINING GROUP IS CHANGED TO NON-MAKEUP FACES.
| Attribute | non-makeup → non-makeup | makeup → non-makeup | decrease1 | makeup → makeup | non-makeup → makeup | decrease2 |
|---|---|---|---|---|---|---|
| Big nose | 74% | 74% | 0% | 64% | 64% | 0% |
| Nose middle heave | 73% | 73% | 0% | 65% | 65% | 0% |
| Philtrum | 61% | 60% | 1% | 71% | 70% | 1% |
| Wide cheek | 85% | 87% | -2% | 84% | 82% | 2% |
| White | 88% | 88% | 0% | 84% | 85% | -1% |
| Half bang | 85% | 84% | 1% | 81% | 80% | 1% |
| High nose | 70% | 73% | -3% | 78% | 73% | 4% |
| Lip line | 63% | 66% | -3% | 69% | 65% | 4% |
| Asian | 84% | 87% | -3% | 88% | 84% | 4% |
| Double-fold eyelids | 66% | 62% | 4% | 62% | 68% | -4% |
| Small eye distance | 74% | 69% | 5% | 67% | 67% | 0% |
| Open nose | 72% | 68% | 4% | 74% | 67% | 7% |
| Pointed chin | 90% | 83% | 7% | 85% | 75% | 10% |
| Thick lips | 63% | 61% | 2% | 71% | 68% | 3% |
| Eyebrow long | 68% | 58% | 10% | 64% | 58% | 6% |
| Eyebrow thickness | 64% | 32% | 32% | 84% | 48% | 36% |
| Eyebrow wave | 68% | 68% | 0% | 62% | 53% | 9% |
| Blue eyes | 75% | 74% | 1% | 73% | 70% | 3% |
| Black eyes | 85% | 81% | 4% | 78% | 76% | 2% |
| Eye bag | 63% | 65% | -2% | 71% | 66% | 5% |
| Slanty eyes | 67% | 54% | 13% | 59% | 58% | 1% |
| Eye crows feet | 71% | 65% | 6% | 69% | 67% | 2% |
| Big eye | 64% | 59% | 5% | 67% | 60% | 7% |
| Square jaw | 64% | 62% | 2% | 71% | 60% | 11% |
| Red cheek | 67% | 61% | 6% | 71% | 67% | 4% |
| Attractive | 68% | 64% | 4% | 78% | 78% | 0% |
| Full bang | 92% | 92% | 0% | 96% | 89% | 7% |
| No bang | 95% | 95% | 0% | 85% | 80% | 5% |

Similarly, the third case is called “NM-preferred,” which means the cross-group accuracy is increased when the non-makeup group is used for training attribute classifiers. In this case, the attribute class includes only ‘Double-fold eyelids.’ Our interpretation is that this attribute may be easier to learn from non-makeup face images.

The last case is “Big change,” which means that cross-group classification results in significant accuracy decreases. There are 18 attributes belonging to this case, as can be seen in Table II.

Our assignment to the four cases is based on the following rules:

$$
\mathrm{ACA} =
\begin{cases}
\text{Small change}, & d_1 \text{ and } d_2 \in [-2\%, 2\%], \\
\text{M-preferred}, & d_1 < -2\% \text{ and } d_2 \ge 0, \\
\text{NM-preferred}, & d_1 \ge 0 \text{ and } d_2 < -2\%, \\
\text{Big change}, & \text{otherwise,}
\end{cases}
$$

where ACA is for ‘Accuracy change analysis’, $d_1$ is for ‘decrease1,’ and $d_2$ is for ‘decrease2.’ M-preferred means ‘Makeup preferred’, and NM-preferred means ‘Non-makeup preferred.’ The summary of the four cases is shown in Table III for a clearer view.

TABLE III
ATTRIBUTE CLASSIFICATION ACCURACY CHANGE ANALYSIS (ACA). THE CHANGE ANALYSIS RESULTS IN FOUR CASES: SMALL CHANGE, MAKEUP PREFERRED, NON-MAKEUP PREFERRED, AND BIG CHANGE.

| Attribute Classification | Attributes |
|---|---|
| Small change | Big nose, Nose middle heave, Philtrum, Wide Cheek, White, Half bang |
| Makeup preferred | High nose, Lip line, Asian |
| Non-Makeup preferred | Double-fold eyelids |
| Big change | Small eye distance, Open nose, Pointed chin, Thick lips, Eyebrow thickness, Eyebrow long, Eyebrow wave, Blue eyes, Black eyes, Slanty eyes, Eye crows feet, Big eyes, Square jaw, Red cheek, Attractive, Full bang, Forehead visible |

From Table III, we can see that most of these attribute changes, or the absence of change, are consistent with common knowledge. Women like to wear makeup near the eyes to appear more attractive. Usually there is less makeup designed to change the appearance of the nose. That is why 2 out of 4 attributes related to the nose have small changes, while most eye-related attributes (8 out of 9) have big changes. That is the makeup effect. One interesting attribute is ‘double-fold eyelids.’ Usually we can observe whether a woman has double-fold eyelids or not when there is no makeup around the eyes. With makeup, however, it may be difficult to determine whether the woman has double-fold eyelids, especially when false eyelashes are used. As a result, we categorized ‘double-fold eyelids’ as a non-makeup preferred attribute.
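The four-case ACA rule can be written as a small function and applied to the decrease1 and decrease2 columns of Table II. The sketch below is only an illustration of the stated rule; the function name `aca_case` and the parameter `tol` are assumptions introduced here, and the two lists are the table values in row order.

```python
from collections import Counter


def aca_case(d1: float, d2: float, tol: float = 2.0) -> str:
    """Assign one of the four ACA cases from the two accuracy decreases (in %)."""
    if -tol <= d1 <= tol and -tol <= d2 <= tol:
        return "Small change"
    if d1 < -tol and d2 >= 0:
        return "M-preferred"
    if d1 >= 0 and d2 < -tol:
        return "NM-preferred"
    return "Big change"


# decrease1 and decrease2 columns of Table II, in row order (percent).
DECREASE1 = [0, 0, 1, -2, 0, 1, -3, -3, -3, 4, 5, 4, 7, 2,
             10, 32, 0, 1, 4, -2, 13, 6, 5, 2, 6, 4, 0, 0]
DECREASE2 = [0, 0, 1, 2, -1, 1, 4, 4, 4, -4, 0, 7, 10, 3,
             6, 36, 9, 3, 2, 5, 1, 2, 7, 11, 4, 0, 7, 5]

cases = [aca_case(d1, d2) for d1, d2 in zip(DECREASE1, DECREASE2)]
counts = Counter(cases)
```

Applied to the Table II values, the rule assigns 18 rows to “Big change,” three to “M-preferred,” and one to “NM-preferred,” in line with the counts stated in the text.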
Our dual-attributes-based analysis makes it easier to understand and interpret the computational approaches in terms of the effect of facial cosmetics on facial image analysis. This is a nice property of using attributes, and it differs from previous approaches, where either purely human perception is used [23], [27] or just low-level features are computed [7].

On the other hand, we can see that the accuracies of many attribute classifiers decrease, some by more than 30%. This indicates the necessity of learning dual-attributes in facial image analysis for matching between makeup and non-makeup faces. Next, we show the use of dual-attributes for face verification.

C. Face Verification Results

For face verification, we use the same test set as that used in our attribute classification experiments. We have about 100 pairs of positive faces for testing. There is no overlap between the training and test sets. To obtain negative pairs, for each individual we randomly pick one face from the testing set that belongs to a different individual. The random selection respects the makeup and non-makeup group information, i.e., if the face under consideration is non-makeup, the randomly selected negative face is taken from the makeup group. So our face matching is always between a makeup face and a non-makeup face, no matter whether the pair is positive or negative. The random pairing process was repeated for each individual. As a result, we have 100 negative pairs of face images. In total, we have 200 pairs of faces for testing.

We combine all dual-attributes, as introduced in Section II-C, for the face verification experiments. Some results are shown in Table IV. When the HOG feature is used to learn the dual-attributes, the face verification accuracy is 71.0%, which is higher than the 66.5% accuracy obtained when the HOG feature is used to match face images directly.
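The test-pair construction described in this subsection can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name `build_test_pairs`, the `(individual, group)` tuple representation, and the fixed seed are hypothetical, and the sketch always takes the non-makeup face as the face under consideration, which is only one of the cases the text allows.

```python
import random
from typing import Iterable, List, Tuple

Face = Tuple[int, str]          # (individual id, group label)
Pair = Tuple[Face, Face, int]   # (face a, face b, 1 = same person, 0 = different)


def build_test_pairs(individual_ids: Iterable[int], seed: int = 0) -> List[Pair]:
    """Build one positive and one negative cross-group pair per individual."""
    rng = random.Random(seed)
    ids = list(individual_ids)
    pairs: List[Pair] = []
    for i in ids:
        # Positive pair: the same individual's non-makeup and makeup faces.
        pairs.append(((i, "non-makeup"), (i, "makeup"), 1))
        # Negative pair: the makeup face of a randomly chosen other individual,
        # so the match is still between a non-makeup and a makeup face.
        j = rng.choice([k for k in ids if k != i])
        pairs.append(((i, "non-makeup"), (j, "makeup"), 0))
    return pairs


pairs = build_test_pairs(range(100))
```

With 100 test individuals this yields 100 positive and 100 negative pairs, every one of them crossing the makeup and non-makeup groups.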
Since only one face image exists for each individual in the gallery, it is not appropriate to learn a classifier for each individual. We used the cosine distance measure [21] for direct matching of two faces, which performs much better than the Euclidean distance.

The LBP (local binary pattern) feature is often used for face recognition [2]. We also compared the dual-attributes and direct matching based on the LBP feature. As shown in Table IV, the accuracy is 66.0% when the LBP feature is used for direct matching. When the LBP feature is used for dual-attributes learning, the accuracy is 67.5%, slightly higher than direct matching. This demonstrates two things: (1) facial makeup has a significant influence on facial texture, and the classical LBP feature cannot work well for makeup faces; and (2) the LBP feature is not well suited to learning the dual-attributes for makeup face verification.

TABLE IV
FACE VERIFICATION ACCURACY OF THE DUAL-ATTRIBUTES METHOD.

| Dual-Attr. (HOG) | HOG | Dual-Attr. (LBP) | LBP |
|---|---|---|---|
| 71.0% | 66.5% | 67.5% | 66.0% |

The receiver operating characteristic (ROC) curves are shown in Figure 5 with the area under the curve (AUC) values. We can observe that the verification performance based on dual-attributes using HOG features is consistently higher than direct matching using the HOG feature (or the LBP feature). The performance of dual-attributes learned from the LBP features is not consistent. At some false positive rates (FPR), the true positive rate (TPR) is higher than direct matching with the LBP feature, but at other FPR values the TPR is lower. As a result, the HOG feature is appropriate for learning the dual-attributes in our current experiments, while the LBP feature does not work very well. We also compare the accuracies of direct matching using some other low-level features.
When the PCA feature [26] is used, the accuracy is 65.0%; when the Scale-Invariant Feature Transform (SIFT) [20] feature is used, the accuracy is 65.5%; when Gabor filters [30] are used for feature extraction, the accuracy is 64.5%. Linear discriminant analysis (LDA) [3] is not suitable in our case, since only one example exists for each individual in the gallery. All these accuracies are lower than the 71.0% achieved by our dual-attributes learned with the HOG feature. These comparisons demonstrate that learning the dual-attributes is useful for dealing with facial cosmetics in face recognition.

The PCA feature is not suitable for learning the dual-attributes. We also used the SIFT feature to learn the dual-attributes. Experimentally, we found that the SIFT-based dual-attributes do not work well at low FPR in the ROC curve, although they can perform well when the FPR is higher (the curve is not shown here). So we do not recommend using the SIFT feature for dual-attributes learning.

Although attributes have been used for face verification in previous works, e.g., [15], there are several differences between our work and that in [15]: (1) we study facial makeup in face verification, while there is no specific study of facial makeup in [15]; (2) the main focus in [15] is face verification with pose, illumination, and expression (PIE) changes, which also appear in our database, but we emphasize the makeup influence and study face verification across facial makeup specifically; (3) in our database, there are faces with large head pose variations, while the face images in [15] were filtered by a frontal-view face detector and thus the pose variations are not very large; (4) the population in our database contains adult women only, while [15] includes both men and women, young and adult, so many attributes such as age group, gender, and beards can be used in [15], but those attributes cannot be used to help face authentication in our study.
More importantly, we propose the new concept of dual-attributes, which, to the best of our knowledge, has not been presented in any previous attribute-based approach.

To give a more intuitive understanding of the face verification results with facial cosmetics, we show some real examples of the verification results in Figure 6. There are four different results: true positive, false negative, true negative, and false positive. One can see that for the false negative results, it is quite challenging to match those faces. For the false positive results, the face pairs do have some overall similarity and similar attributes, so it is quite challenging to separate them into different individuals.

[Figure 5: ROC plot, TPR versus FPR. Legend: Dual-attr. HOG (AUC: 0.75); Dual-attr. LBP (AUC: 0.71); HOG match (AUC: 0.66); LBP match (AUC: 0.69).]

Fig. 5. ROC curves of the dual-attributes methods for face verification, compared to using the same features but without attributes learning. The LBP and HOG features are used here. The area under the curve (AUC) values are also computed for each curve.

IV. CONCLUDING REMARKS

We have proposed a method called dual-attributes for face verification that is robust to facial cosmetics. A database of about 500 pairs of face images (with and without facial makeup) has been collected to facilitate our study. The empirical study has shown that the dual-attributes method is quite robust for face verification when matching between non-makeup and makeup faces. In addition to face verification, the dual-attributes can be used to understand and interpret the influence of facial cosmetics on facial identities at a semantic level, which is difficult for previous approaches that are purely based on direct matching of low-level features. Our preliminary result is promising.
In the future, we will enhance the authentication system by learning the dual-attributes on a larger database, and explore other learning methods for dual-attributes computation.

Fig. 6. Some examples of our face verification results based on the dual-attributes method. (a) true positive, (b) false negative, (c) true negative, and (d) false positive. Here “positive” means (the ground truth of) the pair is genuine, while “negative” means the pair is impostor. If the method returns a correct result, it is called “true”; otherwise, it is called “false.”

REFERENCES

[1] L. Adkins and R. Adkins. Handbook to life in ancient Greece. Oxford University Press, 1998.
[2] T. Ahonen, A. Hadid, and M. Pietikainen. Face recognition with local binary patterns. In Eur. Conf. on Comput. Vision, pages 469–481, 2004.
[3] P. Belhumeur, J. Hespanha, and D. Kriegman. Eigenfaces vs. fisherfaces: Recognition using class specific linear projection. IEEE Trans. on Pattern Analysis and Machine Intelligence, 19(7):711–720, 1997.
[4] A. Bronstein, M. Bronstein, and R. Kimmel. Expression-invariant 3D face recognition. In Audio- and Video-Based Biometric Person Authentication, pages 62–70. Springer, 2003.
[5] B. Burlando, L. Verotta, L. Cornara, and E. Bottini-Massa. Herbal Principles in Cosmetics: Properties and Mechanisms of Action, volume 8. CRC Press LLC, 2010.
[6] N. Dalal and B. Triggs. Histograms of oriented gradients for human detection. In IEEE Conf. on CVPR, pages 886–893, 2005.
[7] A. Dantcheva, C. Chen, and A. Ross. Can facial cosmetics affect the matching accuracy of face recognition systems? In IEEE Conf. on Biometrics: Theory, Applications and Systems. IEEE, 2012.
[8] A. Dantcheva and J. Dugelay. Female facial aesthetics based on soft biometrics and photo-quality. In IEEE Conf. on Institute for Computational and Mathematical Engineering. IEEE, 2011.
[9] M. Everingham, J. Sivic, and A. Zisserman. “Hello! My name is... Buffy” – automatic naming of characters in TV video. In Proceedings of the British Machine Vision Conference, 2006.
[10] A. Farhadi, I. Endres, and D. Hoiem. Attribute-centric recognition for cross-category generalization. In IEEE Conf. on CVPR, pages 2352–2359, 2010.
[11] A. Farhadi, I. Endres, D. Hoiem, and D. Forsyth. Describing objects by their attributes. In Computer Vision and Pattern Recognition, IEEE Conference on, pages 1778–1785, 2009.
[12] A. Georghiades, P. Belhumeur, and D. Kriegman. From few to many: Illumination cone models for face recognition under variable lighting and pose. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 23(6):643–660, 2001.
[13] N. Guéguen. The effects of women's cosmetics on men's courtship behavior. North American Journal of Psychology, 10(1):221–228, 2008.
[14] N. Kumar, P. Belhumeur, and S. Nayar. Facetracer: A search engine for large collections of images with faces. Computer Vision–ECCV 2008, pages 340–353, 2008.
[15] N. Kumar, A. Berg, P. Belhumeur, and S. Nayar. Attribute and simile classifiers for face verification. In Computer Vision, 2009 IEEE 12th International Conference on, pages 365–372. IEEE, 2009.
[16] N. Kumar, A. C. Berg, P. N. Belhumeur, and S. K. Nayar. Describable visual attributes for face verification and image search. In IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), October 2011.
[17] C. Lampert, H. Nickisch, and S. Harmeling. Learning to detect unseen object classes by between-class attribute transfer. In Computer Vision and Pattern Recognition, IEEE Conference on, pages 951–958, 2009.
[18] A. Li, S. Shan, X. Chen, and W. Gao. Maximizing intra-individual correlations for face recognition across pose differences. In Computer Vision and Pattern Recognition, 2009. IEEE Conference on, pages 605–611. IEEE, 2009.
[19] Y. Liu, K. Schmidt, J. Cohn, and S. Mitra. Facial asymmetry quantification for expression invariant human identification. Computer Vision and Image Understanding, 91(1):138–159, 2003.
[20] D. Lowe. Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision, 60(2):91–110, 2004.
[21] T. Pang-Ning, M. Steinbach, and V. Kumar. Introduction to data mining. WP Co, 2006.
[22] D. Parikh and K. Grauman. Relative attributes. In Computer Vision (ICCV), 2011 IEEE International Conference on, pages 503–510. IEEE, 2011.
[23] G. Rhodes, A. Sumich, and G. Byatt. Are average facial configurations attractive only because of their symmetry? Psychological Science, 10(1):52–58, 1999.
[24] A. Sharma and D. Jacobs. Bypassing synthesis: PLS for face recognition with pose, low-resolution and sketch. In CVPR, pages 593–600. IEEE, 2011.
[25] B. Siddiquie, R. Feris, and L. Davis. Image ranking and retrieval based on multi-attribute queries. In Computer Vision and Pattern Recognition, 2011 IEEE Conference on, pages 801–808. IEEE, 2011.
[26] M. Turk and A. Pentland. Eigenfaces for recognition. J. of Cognitive Neuroscience, 3(1):71–86, 1991.
[27] S. Ueda and T. Koyama. Influence of make-up on facial recognition. Perception, 39(2):260, 2010.
[28] V. N. Vapnik. Statistical Learning Theory. John Wiley, New York, 1998.
[29] P. Viola and M. Jones. Rapid object detection using a boosted cascade of simple features. In Proc. IEEE CVPR, 2001.
[30] L. Wiskott, J. Fellous, N. Kruger, and C. von der Malsburg. Face recognition by elastic bunch graph matching. IEEE Trans. Pattern Anal. Mach. Intell., 19(7):775–779, 1997.

Lingyun Wen received her B.S. degree in Computer Science from Shandong Normal University, Jinan, China, in 2006. In 2009, she received her M.S. degree in Computer Science from the University of Science and Technology of China (USTC), Hefei, China. She worked as a research engineer in Beijing, China, from 2009 to 2010. She is currently a graduate student in the Lane Department of Computer Science and Electrical Engineering at West Virginia University. Her research areas include computer vision and machine learning.

Guodong Guo (M’07-SM’07) received his B.E.
degree in Automation from Tsinghua University, Beijing, China, in 1991, the Ph.D. degree in Pattern Recognition and Intelligent Control from Chinese Academy of Sciences, in 1998, and the Ph.D. degree in computer science from the University of Wisconsin-Madison, in 2006. He is currently an Assistant Professor in the Lane Department of Computer Science and Electrical Engineering, West Virginia University. In the past, he visited and worked in several places, including INRIA, Sophia Antipolis, France, Ritsumeikan University, Japan, Microsoft Research, China, and North Carolina Central University. He won the North Carolina State Award for Excellence in Innovation in 2008, and Outstanding New Researcher of the Year (2010-2011) at CEMR, WVU. His research areas include computer vision, machine learning, and multimedia. He has authored a book, Face, Expression, and Iris Recognition Using Learning-based Approaches (2008), published over 40 technical papers in face, iris, expression, and gender recognition, age estimation, multimedia information retrieval, and image analysis, and filed three patents on iris and texture image analysis.