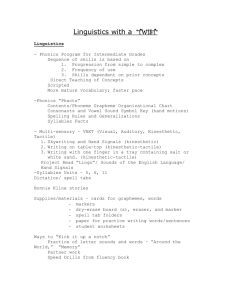

2010 - Department of Linguistics - University of California, Davis

Long-Distance Coarticulation:

A Production and Perception Study of English and American Sign Language

By

MICHAEL ANDREW GROSVALD

B.A.S. (University of California, Davis) 1989

M.A. (University of California, Berkeley) 1993

M.A. (University of California, Davis) 2006

DISSERTATION

Submitted in partial satisfaction of the requirements for the degree of

DOCTOR OF PHILOSOPHY

in

Linguistics

in the

OFFICE OF GRADUATE STUDIES

of the

UNIVERSITY OF CALIFORNIA

DAVIS

Approved:

_____________________________________

_____________________________________

_____________________________________

Committee in Charge

2009

Copyright

by

Michael Andrew Grosvald

2009

TABLE OF CONTENTS

List of Tables

List of Figures

Dedication

Acknowledgements

Abstract

Chapter 1 — Introduction

1.1. Motivation for study

1.2. Assimilation and coarticulation

1.2.1. Assimilation in spoken language

1.2.2. Distinguishing coarticulation and assimilation

1.2.3. Assimilation and coarticulation in signed language

1.2.3.1. Some models of sign language phonology

1.2.3.2. Approaching coarticulation in signed language

1.3. Comparison of English schwa and ASL neutral space

1.3.1. Status of schwa

1.3.2. Neutral signing space

1.3.3. Schwa and neutral space: Comparison and contrast

1.3.4. Schwa and neutral space: Summary

1.4. Dissertation outline

1.5. Research questions and summary of results

PART I: Spoken-language production and perception

Chapter 2 — Coarticulation production in English

2.1. Introduction

2.1.1. Segmental and contextual factors

2.1.2. Language-specific factors

2.1.3. Speaker-specific factors

2.2. First production experiment: [i] and [a] contexts

2.2.1. Methodology

2.2.1.1. Speakers

2.2.1.2. Speech samples

2.2.1.3. Recording and digitizing

2.2.1.4. Editing, measurements and analysis

2.2.2. Results and discussion

2.2.2.1. Group results

2.2.2.2. Individual results

2.2.2.3. Follow-up tests

2.2.2.4. Longer-distance effects

2.3. Second production experiment: [i], [a], [u] and [æ] contexts

2.3.1. Methodology

2.3.1.1. Speakers

2.3.1.2. Speech samples

2.3.1.3. Recording and digitizing

2.3.1.4. Measurements and data sample points

2.3.2. Results and discussion

2.3.2.1. Group results

2.3.2.2. Individual results

2.3.2.3. Follow-up tests

2.3.2.4. Longer-distance effects

2.3.2.5. Further comparison of the two experiments

2.4. Chapter conclusion

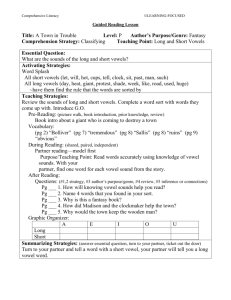

Chapter 3 — Coarticulation perception in English: Behavioral study

3.1. Introduction

3.2. Methodology

3.2.1. Listeners

3.2.2. Creation of stimuli for perception experiment

3.2.3. Task

3.3. Results and discussion

3.3.1. Perception measure

3.3.2. Group results

3.3.3. Individual results

3.3.4. Correlation between production and perception

3.4. Chapter conclusion

Chapter 4 — Coarticulation perception in English: ERP study

4.1. Introduction

4.2. Methodology

4.2.1. Participants and Stimuli

4.2.2. Electroencephalogram (EEG) recording

4.3. Results and discussion

4.3.1. Latency

4.3.2. Relationship to behavioral results

4.3.2.1. Can ERP results predict behavioral outcomes?

4.3.2.2. Are ERP and behavioral responses correlated in general?

4.4. Chapter conclusion

PART II: Signed-language production and perception

Chapter 5 — Coarticulation production in ASL

5.1. Introduction

5.2. Initial study

5.2.1. Methodology

5.2.1.1. Signer 1

5.2.1.2. Task

5.2.1.3. Motion capture data recording

5.2.2. Results

5.3. Main study

5.3.1. Methodology

5.3.1.1. Subjects

5.3.1.2. Task

5.3.2. Results

5.3.2.1. Group results

5.3.2.2. Signer 2

5.3.2.3. Signer 3

5.3.2.4. Signer 4

5.3.2.5. Signer 5

5.3.3. Other aspects of intersigner variation

5.4. Chapter conclusion

Chapter 6 — Coarticulation perception in ASL: Behavioral study

6.1. Introduction

6.2. Methodology

6.2.1. Participants

6.2.2. Creation of stimuli for perception experiment

6.2.3. Task

6.3. Results and discussion

6.3.1. Perception measure

6.3.2. Group results

6.3.3. Individual results

6.3.4. Relationship between production and perception

6.4. Chapter conclusion

Chapter 7 — Discussion and conclusion

7.1. Models of spoken-language coarticulation

7.2. Incorporating signed-language coarticulation

7.3. Cross-modality contrasts

7.4. Dissertation conclusion

Appendix A

Appendix B

Appendix C

Appendix D

Appendix E

Appendix F

Appendix G

Bibliography

Vita

LIST OF TABLES

Table 2.1: Significance testing outcomes for first group of speakers

Table 2.2: Follow-up testing results, Speakers 3 and 7

Table 2.3: Possible very-long-distance coarticulatory effects for Speakers 3 and 7

Table 2.4: Expected coarticulatory influence of four vowels on nearby schwa

Table 2.5: ANOVA testing results, all 38 speakers

Table 2.6: Significance testing outcomes, second group of speakers

Table 2.7: Average formant values, Speaker 37

Table 2.8: Significance testing results for all context pairs, Speaker 37

Table 2.9: Further results, Speaker 37

Table 2.10: Summary of testing outcomes for all contexts, second speaker group

Table 2.11: Very-long-distance significance testing results for four speakers

Table 2.12: Summary of overall results for Speakers 3, 5 and 7

Table 2.13: Summary of outcomes for the two experiments

Table 3.1: Type of data obtained from each subject group

Table 3.2: Duration, amplitude and f0 values used in normalizing vowel stimuli

Table 3.3: Perception scores, all subjects

Table 4.1: Latency results for the entire subject group

Table 4.2: Latency results by subgroup

Table 5.1: Results for WANT in z-dimension (height) at distance 3, Signer 1

Table 5.2: Distance-1 results for WANT, Signer 1

Table 5.3: Demographic information of the five signers

Table 5.4: Sentence frames and context signs, main sign production study

Table 5.5: Numerical results for the main sign production study

Table 5.6: Production results, Signer 2

Table 5.7: Production results, Signer 3

Table 5.8: Production results, Signer 4

Table 5.9: Production results, Signer 5

Table 5.10: Some measures of intersigner differences

Table 5.11: Quantification of neutral-space “drift” for each signer

Table 6.1: Duration and height-difference information for the sign stimuli

Table 6.2: Results for each subject in the sign perception study

Table A1: Formant values of distance-1 target vowels in [a] vs. [i] context

Table A2: Formant values of distance-2 target vowels in [a] vs. [i] context

Table A3: Formant values of distance-3 target vowels in [a] vs. [i] context

LIST OF FIGURES

Figure 1.1: Autosegmental representation of nasal place assimilation

Figure 1.2: Move-Hold representation of the ASL sign IDEA

Figure 1.3: Representation of a sign in the Hand-Tier model

Figure 1.4: Hand-configuration assimilation in the Hand-Tier model

Figure 1.5: The vowel quadrangle and schwa

Figure 1.6: Possible coarticulatory influences on schwa and neutral signing space

Figure 2.1: Fowler’s (1983) model of VCV articulation

Figure 2.2: Coarticulation model from Keating (1985)

Figure 2.3: Expected coarticulatory influence on schwa of nearby [i] or [a]

Figure 2.4: Editing points used for the sequence up at a

Figure 2.5: Average F1 and F2 of target vowels, first group of speakers

Figure 2.6: Coarticulatory effects produced by Speaker 7

Figure 2.7: A typical recording made from Speaker 7

Figure 2.8: Correlation results, production at distances 1 and 2, first speaker group

Figure 2.9: Expected influence on schwa from [i], [a], [u] or [æ]

Figure 2.10: Target vowel positions in F1-F2 space, second group of speakers

Figure 2.11: Coarticulatory effects on target vowels, Speaker 37

Figure 2.12: Correlation results for production at distances 1 and 3, both groups

Figure 3.1: Logistic curve

Figure 3.2: Design of the perception task for the speech study

Figure 3.3: Production-perception correlation results, first speaker group

Figure 3.4: Production-perception correlation results, second speaker group

Figure 3.5: Production-perception correlation results, both groups

Figure 3.6: Production and perception—hypothetical threshold values

Figure 4.1: Hypothetical patterning of perceptual sensitivity to coarticulation

Figure 4.2: Sequencing of the ERP study stimuli

Figure 4.3: Configuration of the electrodes in the 32-channel cap

Figure 4.4: Topographical maps, entire subject group

Figure 4.5: Waveforms at selected sites, entire subject group

Figure 4.6: Topographic distribution of the MMN-like effects, entire subject group

Figure 4.7: Topographic distribution of MMN-like effects, by subgroup

Figure 5.1: Expected coarticulatory behavior of schwa and neutral signing space

Figure 5.2: Locations of FATHER, MOTHER and neutral signing space

Figure 5.3: The location of the target sign WANT in a typical utterance

Figure 5.4: Position of the ultrasound markers

Figure 5.5: Definition of x, y, and z dimensions relative to signer

Figure 5.6: Two ASL sentences, as seen in motion capture data

Figure 5.7: Locations of seven context signs

Figure 5.8: Beginning and end points of WISH

Figure 5.9: Newer variant of RUSSIA

Figure 5.10: Apparatus used for <red> and <green>

Figure 5.11: Context-sign locations on the body and in 3-space

Figure 5.12: Motion capture data for two sentences, Signer 2

Figure 5.13: Context item locations for left-handed signers

Figure 5.14: Motion capture data for two sentences, Signer 3

Figure 5.15: Signer 3 signing BOSS on upper chest

Figure 5.16: Motion capture data for four sentences, Signer 4

Figure 5.17: Signer 4’s preferred form of GO

Figure 5.18: Motion capture data for two sentences, Signer 5

Figure 5.19: Contraction of “I WANT,” Signer 5

Figure 5.20: Contraction of “I WISH,” Signer 5

Figure 5.21: PANTS and HAT with modified location, Signer 5

Figure 5.22: Orientation assimilation of WANT, Signer 5

Figure 5.23: Drift of target-sign location, Signers 3, 4, 5

Figure 6.1: Design of the perception task for the sign study

Figure 7.1: Fowler’s (1983) model of VCV articulation

Figure 7.2: Location assimilation in PANTS, and a contraction I_WANT

Figure 7.3: Hand-Tier representation of neutral-space variant of PANTS

Dedication

To my family.

Acknowledgements

First and foremost, I wish to thank my committee members: David Corina, C. Orhan Orgun and Tamara Swaab. I could not have arrived at this point without their guidance and support.

David came to UC Davis during the second year of my graduate studies. I feel very fortunate that he then invited me to work as a research assistant in his lab, which is one of the most positive work environments I have ever been in. In my time here, I have enjoyed learning about sign language, psychology and the design and execution of language-related experiments. It has been a fascinating and rewarding experience, and in addition has opened the door to a number of interesting adventures, such as the SignTyp conference in Connecticut, the Zachary’s Pizza expedition during the CNS conference, and the moving of the hot tub.

When I was looking for a topic for my first qualifying paper, it was Orhan who suggested that I investigate vowel-to-vowel coarticulation across particular kinds of consonants. Questions that arose during my work on that project led me to explore coarticulation in other contexts, and that research in turn became the starting point of this dissertation. It would therefore be difficult to overstate how valuable his input has been. I have also enjoyed our weekly meetings over Chinese food, where he has answered my phonetics and phonology questions with patience and humor.

Tamara gave me my first in-depth look at ERP methodology, and it was she who suggested that I investigate the MMN component in my perceptual studies. I also very much appreciate her willingness to meet with me when I have had questions, and have valued her feedback and support.

A number of other people have also provided encouragement along the way, particularly at this project’s early stages. These include Diana Archangeli, Carol Fowler, Keith Johnson, Patricia Keating, Harriet Magen and Daniel Recasens. I also wish to thank audiences at the UC Berkeley Phonetics/Phonology Phorum, the 2008 meeting of the Linguistic Society of America, the 2008 Northwest Linguistics Conference, and the 2007 coarticulation workshop of the Association Francophone de la Communication Parlée in Montpellier.

For my ASL studies, I have often needed guidance from others who know much more about sign language than I do. In particular, David Corina, Sarah Hafer, Tara Williams, Martha Tyrone and Claude Mauk have been extremely supportive. Thanks are also due to participants of the 2008 SignTyp conference and audiences at the 2009 meeting of the Linguistic Society of America and at Haskins Laboratories.

David Corina and Tamara Swaab were my primary go-to people when I had questions related to my ERP work. However, a number of other people also gave me help along the way. Foremost among these is Tony Shahin, who has been a good friend as well as a life-saver during my initial efforts to learn about Matlab and EEGLAB. Eva Gutierrez showed me the ropes in SPSS. Thank you also to Emily Kappenman and Steve Luck. Any errors in my use of ERP methodology are my responsibility and no one else’s.

Finally, I wish to thank members of my family, particularly those in Chico, Prague and Los Angeles, who have lived through much of this writing process with me and given me love, patience and support during this long and intensive effort. I would not be where I am without them, either.

This research project was supported in part by grant NIH-NIDCD 2R01-DC003099 (David P. Corina).

Abstract

This project investigates the production and perception of long-distance coarticulation, defined here as the articulatory influence of one phonetic element (e.g. consonant or vowel) on another across more than one intervening element. Part I explores anticipatory vowel-to-vowel (VV) coarticulation in English; Part II deals with anticipatory location-to-location (LL) effects in American Sign Language (ASL). Long-distance effects were observed in both speech and sign production, sometimes across several intervening elements. Even such long-distance effects were sometimes found to be perceptible.

For the spoken-language study, sentences were created in which multiple consecutive schwas (target vowels) were followed by various context vowels. Thirty-eight English speakers were recorded as they repeated each sentence six times, and statistical tests were performed to determine the extent to which target vowel formant frequencies were influenced differently by the context vowels. For some speakers, significant effects of one vowel on another were found across as many as five intervening segments. The perception study used behavioral methods and found that even the longest-distance effects were perceptible to some listeners; nearer-distance effects were detected by all participants. Subjects’ coarticulatory production tendency was not correlated with either speaking rate or perceptual sensitivity.

Seventeen perception-study subjects also provided EEG data for an event-related potential (ERP) study, which used the same vowel stimuli as the behavioral perception study, and sought to determine whether ERP methodology might provide a more sensitive measure than behavioral methods. Significant ERP effects were found in response to nearer-distance VV coarticulatory effects, but generally not for the longest-distance ones. This is the first ERP study to investigate the sub-phonemic processing associated with the perception of coarticulation.

In Part II, motion-capture technology was used to investigate LL coarticulation in the signing of five ASL users. Evidence was found of significant LL coarticulatory influence of one sign on another across as many as three intervening signs. However, LL effects were weaker and less frequent than the VV effects found in the spoken-language study. The perceptibility of these LL effects was then tested on both deaf and hearing subjects; some subjects in each group scored significantly better than chance on the task.

CHAPTER 1 — INTRODUCTION

1.1. Motivation for study

The phenomenon of coarticulation is relevant for issues as varied as lexical processing and language change, for both spoken and signed languages. However, research to date has not determined with certainty how far such effects can extend, though it is apparent that there is a substantial amount of coarticulatory variability among speakers in the production of spoken language. The first part of this project investigates the extent of long-distance vowel-to-vowel (VV) coarticulation in American English, and interspeaker variation in the production of these effects. While work on coarticulation in sign language has been conducted by some researchers (Cheek, 2001; Mauk, 2003), the question of long-distance coarticulation in sign languages appears to have been unaddressed until now. The second part of the project investigates long-distance location-to-location (LL) coarticulation in American Sign Language (ASL). In addition, while research on the issue of perceptibility of coarticulatory effects has been underway for at least a few decades in spoken language research (e.g., Lehiste & Shockey, 1972), corresponding work on sign language seems not yet to have begun in earnest. This project examines the perceptibility of long-distance coarticulatory effects in both spoken and signed language.

Manual-visual languages like American Sign Language (ASL) are naturally occurring and show syntactic, morphological and phonological complexity which is comparable to that of spoken languages. Sign languages are not mime, nor are such languages a word-for-word translation of any spoken language. Besides the fact that signed language is an interesting object of study in its own right, research into sign languages also brings with it the insights to be gained by a comparison of corresponding phenomena in the spoken and signed modalities. By examining the similarities and differences of various aspects of spoken and signed language, we may find that some assumptions about human language universals have to be revised, while in other cases, new insights relevant to language in general may be revealed. Although the structures of spoken and signed languages may seem quite different at first glance, the history of sign language research supports this general approach.

This is seen, for example, in the formal study of sign language phonology,

which began with the work of Stokoe (1960). Not only did he recognize the

appropriateness of the appellation sign language, but he was also the first to develop the insight

that traditional methods of linguistic analysis (i.e., those developed for spoken

languages) could offer great utility in the study of sign, hence to the understanding of

the human language capacity in general. This reasoning has been followed by many

researchers since Stokoe began his work, and is the approach that I will follow here.

With the basic motives of this project now established, next follows a

discussion of assimilation and coarticulation, which are closely related but which

nevertheless can be usefully distinguished. Since these notions have been frequently

examined in previous spoken-language phonological research, this discussion will

concentrate more on their conception in sign language phonology and how one might

expect to usefully investigate them there. After this, the specific targets of study in this

project, English schwa and ASL neutral signing space, will be introduced and the

reasons for their use in this project will be explained. This introductory chapter then

concludes with a statement of this project’s research questions and an outline of

subsequent chapters of the dissertation.

1.2. Assimilation and coarticulation

1.2.1. Assimilation in spoken language

Following the line of reasoning described above, it will now be useful to sketch

some relevant ideas from spoken language phonology, starting from first principles,

and then consider possible sign-language analogues.

In spoken language phonology, the regularity of certain segment-to-segment

influences can be expressed by means of rules like those used in SPE (Chomsky &

Halle, 1968). Most directly, one can express the relevant changes in terms of the

segments themselves. For example, if one notices in some language that alveolar nasal

stops are persistently realized as velars before [k], one might express this process as

follows:

n → ŋ / _ k

While this rule covers cases involving [k], one might subsequently find that it

misses others, such as those involving nasals preceding [g], or those involving place

assimilation before labials, even though these cases might seem to be effected by the

same process. Rephrasing the rule in terms of nasality and place-of-articulation features

makes the rule more general and also offers more explanatory power concerning the

underlying dynamics involved. Following the autosegmental approach (Goldsmith,

1976), one may even go one step further, as shown in Figure 1.1 below:

Figure 1.1. Nasal place-of-articulation assimilation, represented in autosegmental

terms.

Here, different parameter types, such as nasality and place, are separated into

different tiers, emphasizing their (relative) independence from one another. Such

configurations can be advantageous when certain phonetic/phonological properties

seem to be fundamentally related to (or “dependent” on) others, and the autosegmental

approach is also useful in cases where non-linear processes are involved, such as in the

templatic morphology of Arabic, in which different types of information may be carried

by consonant sequences and vowel sequences, which are eventually interleaved. The

autosegmental approach has also proven quite fruitful in the study of signed languages,

where arguably more use is made of simultaneity of structure than in spoken languages.
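The contrast drawn above between the segment-specific rule and its feature-based restatement can be made concrete with a minimal sketch in Python. This is an illustration only, not part of the original analysis: the small consonant inventory, the place labels in `PLACE` and `NASAL_AT`, and both function names are assumptions introduced here. The first function encodes the rule n → ŋ / _ k directly; the second lets an alveolar nasal take on the place of any following stop, so that cases involving [g] and the labials are covered as well.

```python
# Hypothetical, deliberately tiny inventory: place of articulation per stop.
PLACE = {"p": "labial", "b": "labial", "t": "alveolar", "d": "alveolar",
         "k": "velar", "g": "velar"}
# The nasal stop found at each place of articulation.
NASAL_AT = {"labial": "m", "alveolar": "n", "velar": "ŋ"}


def segment_rule(segments):
    """Segment-specific rule: n -> ŋ only when immediately before k."""
    out = list(segments)
    for i in range(len(out) - 1):
        if out[i] == "n" and out[i + 1] == "k":
            out[i] = "ŋ"
    return out


def feature_rule(segments):
    """Feature-based rule: n takes the place feature of a following stop."""
    out = list(segments)
    for i in range(len(out) - 1):
        if out[i] == "n" and out[i + 1] in PLACE:
            out[i] = NASAL_AT[PLACE[out[i + 1]]]
    return out
```

Under these assumptions, the input [a n g a] is left unchanged by the segment-specific rule but surfaces with a velar nasal under the feature-based rule, and [a n p a] surfaces with [m].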

1.2.2. Distinguishing coarticulation and assimilation

Historically, the terms coarticulation and assimilation have often co-occurred in

the phonetics and phonology literature of both spoken and signed languages, seemingly

interchangeably in some cases (see Kühnert & Nolan, 1999, for a historical summary).

However, it has also proven useful to distinguish the two; for example, Keating (1985,

p. 2) distinguishes assimilation from coarticulation as follows: “with assimilation, a

segment which normally might have some particular target or quantitative value for a

given feature, has a different target or quantitative value when adjacent to some other

segment.” Accordingly, I will consider assimilation to be the special case of coarticulation

which is definable in terms of phonological features. That is, if the influence of item X

on item Y is such that item Y undergoes a change expressible in terms of a feature

alteration, then assimilation has occurred.1 Coarticulation is the more general case of

articulatory influence of one phonetic element (e.g. a consonant, a vowel, a gesture) on

another.

1

Keating’s description of assimilation in terms of “segments” is somewhat problematic when applied to

sign language, however, which is why I have used the more general term “item.” Also, I prefer a rather

loose interpretation of the term “adjacent” that Keating uses, so that assimilation can be considered

possible even for items which are not strictly consecutive (as occurs in vowel harmony, for example).

Assimilation can be either obligatory (e.g. nasal place assimilation in Japanese) or optional (e.g. nasal

place assimilation in English in cases like “inconceivable” or “ten bags”).

As an example, consider the American English pronunciation of the word

“haunt.” The velum lowering needed to articulate the [n] may occur early in the word,

resulting in a form with a nasalized vowel, [hãnt]. If velum lowering was in effect

throughout the duration of the vowel, then it may be said that the vowel has acquired a

[+nasal] or [+nasalized] feature, as is suggested by the transcription just given, in which

case this is an instance of assimilation. On the other hand, a speaker saying “haunt”

might accomplish complete lowering of the velum in time for the onset of the [n]

without having had the velum lowered until late into the articulation of the preceding

vowel. In this case, it might be argued that the vowel did not actually acquire a positive

feature value for nasality, even though some velum lowering did occur during the

vowel. This would be an instance of coarticulation, but not assimilation.

Clearly, such distinctions may not always be easy to make; for instance, in the

example just presented, the exact dividing line between “nasal enough” and “not nasal

enough” for a feature change to be said to have occurred is not obvious. The matter is

complicated by the question of what sorts of features should be considered relevant; for

example, vowel nasality is not considered phonologically contrastive in English, but

may often be indispensable for hearers in cases where the nasal consonant in a VN

sequence is deleted altogether. The nasal vowel could then be an important clue for the

listener trying to distinguish “haunt” [hãt] and “hot” [hat] (although with this pair,

context would be likely to help).

1.2.3. Assimilation and coarticulation in signed language

Having distinguished assimilation and coarticulation in spoken language, we

should now be in a good position to consider the corresponding distinction in signed

language. As it turns out, much research has already been conducted on assimilation in

sign languages such as ASL (see Sandler & Lillo-Martin, 2006). Rules representing

various types of sign-language assimilation are often similar in spirit to the

autosegmental rules for spoken languages that were discussed above, but depending on

the representational framework for signs that one adopts, the precise form of such rules

will vary somewhat.

1.2.3.1. Some models of sign language phonology

Stokoe (1960) treated signs as combinations of three parameters—Handshape,

Location, and Movement—occurring simultaneously. More recent approaches

incorporate sequential structure into sign representations as well. In discussing the

history of sign language phonology, Sandler and Lillo-Martin (2006) draw a parallel

with the study of spoken language phonology, in which the earliest approaches used

sequential, segment-by-segment representations, while later researchers have found it

useful to model certain aspects of spoken language phonology in non-sequential terms,

as in the autosegmental approach.

Move-Hold model

The Move-Hold model of Liddell and Johnson (1989[1985]) posits that signs

have a canonical phonological form which is expressible as a sequence of

“Movements” (“M”) and “Holds” (“H”), which are segmental items distinguished

according to whether or not the hands move. The Hold segment includes features

related to both of what Stokoe would have termed Location and Handshape. This model

is illustrated below in Figure 1.2 with an example given by Sandler and Lillo-Martin

(2006, p. 129), which shows the Move-Hold representation of the ASL sign IDEA.2

2

As is customary in the sign language literature, glosses of ASL signs will be given in capital letters.

Figure 1.2. Move-Hold representation of the ASL sign IDEA.

In many respects, this model offers greater explanatory power than Stokoe’s

original conception of signs, which emphasized the simultaneity which is characteristic

of the phonological structure of signed language, relative to that of spoken language.

Since the Move-Hold model builds sequential structure into its representation of signs,

some phenomena such as within-sign metathesis, requiring reference to sequentiality

and hence problematic for Stokoe’s approach, are analyzed quite straightforwardly.

Consistent with the autosegmental approach for spoken language, assimilation between

successive signs can be represented by reassociation of the relevant features. The model

incorporates 13 handshape features and 18 features for place, and can be used to

describe signs with a substantial degree of phonetic detail.

However, the Move-Hold model has also been criticized, in part because this

richness of phonetic description also leads to overgeneration and at the same time,

misses important generalizations. As an example of the latter, in many signs, the

general configuration of the hand(s) is consistent throughout the course of the sign, but

many features of both Hold components must be specified redundantly in both the first

and final columns of the Move-Hold representation of the sign, as can be seen for IDEA

in Figure 1.2.

Hand-Tier model

Sandler’s Hand-Tier model (1986, 1987, 1989), illustrated in Figure 1.3 below,

avoids many such problems by positing a hand-configuration (HC) node with multiple

associations to three nodes sequentially arranged in the order Location-Movement-Location. Based on the idea that (global) location tends to remain

relatively stable during most signs, even when path movement does occur, the two

Location nodes are associated to a node called “place,” an indicator of general global

position. This model is therefore similar to the Move-Hold model in incorporating

sequentiality within its representation of signs, but at the same time seeks to avoid the

redundancies, just discussed in connection with that model, which are undesirable from

a phonological perspective.3

3

It should be noted that later modifications by Liddell & Johnson (1989) and Liddell (1990) to the

Move-Hold model make use of underspecification, with a similar goal in mind.

Figure 1.3. Representation of a sign in the Hand-Tier model.

Many cases of assimilation can be efficiently represented within this

framework. An example taken from Sandler and Lillo-Martin (2006, p. 137), shown

below as Figure 1.4, is the hand-configuration assimilation in the ASL compound

BELIEVE, formed from the base signs THINK and MARRY. Notice that in order to

preserve the canonical L-M-L sequencing in the output form, Location deletion also

takes place.

It should be noted that the sign illustrated in Figure 1.4, BELIEVE, is

articulated by many signers with a “1” handshape transitioning into a “C” handshape

during the movement of the dominant hand from the forehead down to the non-dominant hand. This differs from the situation depicted in Figure 1.4, which specifies a

“C” handshape throughout the duration of the sign. This model is in fact able to deal

with situations in which two handshape configurations or two places are maintained in

a compound; the form of BELIEVE illustrated in Figure 1.4 happens to be an example

of the latter and not the former.

Figure 1.4. Hand-configuration assimilation, represented in the Hand-Tier model.

The HC category itself includes a rich featural hierarchy, analogous to spoken-language feature geometry (Clements, 1985; Sagey, 1986) and incorporating work of

Sandler (1995, 1996), van der Hulst (1995), Crasborn and van der Kooij (1997) and van

der Kooij (2002). This is closely related to work by Corina (1990) seeking to provide

an adequate account of handshape assimilation; in both approaches, descriptions of

partial handshape assimilation are possible in addition to cases of total assimilation like

that depicted in Figure 1.4. Like spoken-language feature geometry, this is

accomplished in part by recognizing that certain features tend to behave similarly in

phonological systems, typically for physiological reasons.

Prosodic model

The Prosodic model of Brentari (1998) differs significantly from the models just

described, particularly in its treatment of movement. In this model, sign features are

divided into two types, Inherent and Prosodic, with the latter coding properties not

visible at any particular instant (i.e., those specifically relevant to motion). Because

movement does seem to be a particularly salient component of signs in terms of

perception (Corina & Hildebrandt, 2002), it may be reasonable to treat it as “special” in

some way as this model does, and Brentari (1998) provides additional justifications for

doing so. However, some phenomena that are arguably best characterized by a single

rule can only be coded in the Prosodic model by separate reference to two branches of

structure. As one example of this, Sandler and Lillo-Martin (2006) discuss the ASL

compound FAINT, formed from the base signs MIND and DROP. The relevant issue

here is that when hand configuration assimilates in ASL, not only are handshape-related features like finger position and orientation assimilated, but so too is any

internal movement. This process can be expressed with a single rule in the Hand-Tier

model, but requires separate reference in the Prosodic model to the Inherent and

Prosodic feature types.

One key point that has emerged in the preceding discussion is the ongoing effort

of sign researchers to describe the formational parameters of signs in a way that is

comprehensive but at the same time constrained enough to provide descriptions which

are adequate at the phonological level. Conversely, for the kind of phonetic detail that

will be examined in this project, even the substantial amount of information that can be

conveyed in the Move-Hold or other sign models is not sufficient. This can be

compared to the situation in spoken language: in the studies that will be presented here,

sub-phonemic vowel contrasts will generally be discussed in terms of formant

frequencies, measured in Hertz. This is so because even the relatively fine phonetic

distinctions expressible in transcription systems like the IPA, making use of diacritics

indicating varying degrees of fronting, lowering and so on, are not sufficient for the

task.

Similarly, when sign-language coarticulation data are given, location will

generally be expressed in terms of three-dimensional spatial position, measured in

millimeters, because the degree of detail that is involved is not expressible in existing

sign models or transcription systems. However, there are some interesting cases of

assimilation in the sign results that will be discussed, and these will be expressed in

phonological terms. In such cases, the Hand-Tier model will be adopted, because the

key variable involved will be location, so movement need not be foregrounded as in the

Prosodic model, and the relevant issues can be expressed more succinctly in the Hand-Tier model than in the Move-Hold or other sign models.

1.2.3.2. Approaching coarticulation in signed language

Leaving aside the specific case of assimilation proper, the general study of

coarticulation in sign language is bound to present certain challenges, regardless of the

framework one adopts. Much of the research completed to date in which sign-language

coarticulation has proven relevant (or problematic) has been conducted in the context of

machine learning (e.g., see Vogler & Metaxas, 1997), though some theoretical work

has been successfully conducted as well (e.g. Cheek, 2001; Mauk, 2003; see Chapter 5

for a discussion of both). Here again, looking to work already done in spoken language

research may prove useful (for an overview, see Hardcastle and Hewlett, 1999). Such

work to date has investigated many aspects and types of spoken-language production

phenomena, including VV, C-to-C, lingual, labial, and velar effects, as well as work on

perception and on long-distance coarticulation. By analogy, what sorts of coarticulation

might prove amenable to study in sign language research?

Probably, the sign parameter for which subtle phonetic-level phenomena such

as coarticulatory effects can be studied most directly is location. This is so since the

position of any point on the body in motion (e.g. a fingertip or a point on the palm) can

be measured at any particular timepoint and described in terms of three numerical

values, i.e. those corresponding to the three spatial dimensions (using motion-capture

technology or multiple video cameras) or perhaps just two (if only a single video image

is used). If one accepts the premise that movement is the most perceptually salient

feature of signs (e.g., see Hildebrandt & Corina, 2002), and hence may play a role in

sign akin to that of vowels in spoken language, then sign location (as well as

handshape) might be seen as performing a more “consonantal” function. If so, LL

effects could be considered an analogue of one kind of C-to-C coarticulation.
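The kind of positional measurement described in this paragraph can be sketched briefly in Python. The sketch is illustrative only and does not reproduce the analysis procedure used in this project: the function name `displacement_mm` and the two marker positions are hypothetical, and the coordinates are invented solely so that the arithmetic is easy to follow. It shows how the position of one tracked point, sampled at the same timepoint of a sign in two different contexts, might be compared axis by axis and as a single distance in millimeters.

```python
import math


def displacement_mm(pos_a, pos_b):
    """Compare two 3-D positions (x, y, z), both given in millimeters.

    Returns the per-axis differences (pos_b minus pos_a) and the
    straight-line (Euclidean) distance between the two points.
    """
    diff = tuple(b - a for a, b in zip(pos_a, pos_b))
    return diff, math.sqrt(sum(d * d for d in diff))


# Hypothetical positions of one marker at the same timepoint of a
# neutral-space sign, once in a "low" context and once in a "high" context.
low_context = (0.0, 0.0, 1000.0)
high_context = (3.0, 4.0, 1012.0)

diff, dist = displacement_mm(low_context, high_context)
print(diff, dist)
```

With only a single video image, the same comparison could be made with two coordinates instead of three, since the function does not depend on the number of dimensions.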

The detailed study of handshape-to-handshape (HH) coarticulation presents

more difficulties than that of LL effects, partly because of the many interacting

components—such as the numerous joints on the hand—that are involved in the

articulations of particular handshapes. Fine-level phonetic measurements and

descriptions of handshape effects in general will require measures for relative locations

of multiple parts of the hand (e.g. various points on the different fingers), which differ

as joints are bent at various angles, and would also have to be robust to changes in

orientation. Still, interesting experimental work can be accomplished in this domain,

particularly if one focuses on a limited subset of all the articulatory possibilities. One

example of this is Cheek’s (2001) work investigating HH coarticulation in the contexts

of the “1” and “5” handshapes. This was accomplished by measuring and comparing,

between the two contexts, pinky-to-base-of-hand distances at key timing points during

the articulation of various signs; this distance was expected to be smaller in the context

of the “1” handshape (since the pinky is curled in when that handshape is formed) than

in the “5” context (since the pinky is extended for that handshape).

Other coarticulation types may prove to be even more challenging as research

targets. Although previous theoretical (Perlmutter, 1991) and experimental research on

perceptual saliency properties of different sign parameters (Corina & Hildebrandt,

2002) indicates that movement-to-movement (MM) effects may be the closest analogue

of VV effects in spoken language, MM coarticulation is likely to be much more

challenging to study and describe. While VV effects can be analyzed fairly

straightforwardly by means of formant frequency measurements at particular

timepoints, movements are dynamic events tracing a three-dimensional path during an

interval of time. Describing MM effects in general would seem to require an algorithm

able to analyze the data record of such events in 3-space as being consistent with an

arc, or a single linear motion, or a spiral, and so on for the complete inventory of

motion types, regardless of other complicating issues such as spatial orientation. As an

example of the difficulties involved, might an arc path movement in one sign influence

a closed-to-open finger movement in the following sign? How would one seek to

measure this? One possibility I would suggest is that the complexity associated with

the characterization of movements might be usefully reduced, by looking for example

at simpler but importantly related parameters such as velocity, path length, or starting

and ending points of the paths traced by individual movements.
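As a rough illustration of the reduction suggested here, the following Python sketch summarizes a sampled movement by a few simple quantities: its starting and ending points, its path length, and its mean speed. It is a sketch under stated assumptions rather than a method used in this project. The function name `summarize_path`, the sample format (time in seconds followed by x, y, z in millimeters), and the example samples are all hypothetical.

```python
import math


def summarize_path(samples):
    """Reduce a sampled 3-D movement to a few simple parameters.

    samples: list of (t_seconds, x_mm, y_mm, z_mm), ordered by time.
    """
    points = [s[1:] for s in samples]
    # Path length: sum of straight-line distances between successive samples.
    path_length = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    duration = samples[-1][0] - samples[0][0]
    return {
        "start": points[0],
        "end": points[-1],
        "path_length_mm": path_length,
        "mean_speed_mm_per_s": path_length / duration if duration > 0 else 0.0,
    }


# Hypothetical movement sampled at three timepoints.
samples = [(0.0, 0.0, 0.0, 0.0), (0.25, 3.0, 4.0, 0.0), (0.5, 3.0, 4.0, 12.0)]
summary = summarize_path(samples)
print(summary)
```

Summaries of this kind discard the shape of the path (arc, line, spiral and so on), which is precisely the simplification at issue: two movements could then be compared parameter by parameter without first classifying their motion type.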

Simple parameter-to-parameter (X-to-X) effects will probably not tell the whole

story here, just as they do not in spoken language coarticulation. For example, prosodic

structure has been shown to be relevant in spoken-language work on coarticulation

(e.g., Cho, 1999, 2004), and there is no reason to assume this could not be the case with

sign. Brentari and Goldsmith (1993) have argued that a sign’s non-dominant hand may

be analogous to a syllable coda in spoken language, so coarticulatory effects related

specifically to the dominant or non-dominant hand might have implications for the

prosodic nature of signs or of language overall. More generally, one might also expect

to see interactions between sign parameters, such as location-to-movement effects, just

as one sees C-to-V effects in spoken language. Then again, perhaps some such

phenomena will prove to be specific to one modality only; if so, this would be

informative as well.

Because it is unlikely that all such possibilities can be investigated in a single

project, I have chosen in the current study to focus on LL coarticulation, since as

discussed above, location is probably the sign parameter for which coarticulatory

effects can be most straightforwardly measured.

1.3. Comparison of English schwa and ASL neutral space

In this project, I investigate coarticulation in spoken and signed language, with

an emphasis on the temporal extent of the phenomenon and variability in its production

and perception among speakers and signers. Since coarticulation is a complex,

multifaceted object of study, I have chosen to narrow my focus specifically onto VV

coarticulation in English and LL coarticulation in American Sign Language (ASL).

Specifically, I examine long-distance coarticulatory effects of various English vowels

on the instantiation of schwa and of various sign locations on that of ASL neutral space.

Schwa and neutral space were chosen as target items because of certain parallels that

may be drawn between the two. However, when considered more closely and with

respect to these items’ phonological status, such similarities appear more superficial;

therefore, an examination of the schwa - neutral space analogy will be useful in

evaluating the extent to which the comparison may be valid.

1.3.1. Status of schwa

The schwa is a mid central vowel and as such is located in the middle of two-dimensional vowel space, as illustrated in Figure 1.5 below. This is so whether we

consider schwa in terms of the articulatory properties of height and frontness or the

acoustically determined quantities of first and second formant, since there is a strong

correspondence between these articulatory and acoustic measures.4

4

At first glance, this articulatory-perceptual distinction seems quite different from the situation in signed

language, since the sign articulators are viewed directly by the perceiver. However, since it is as yet

unclear just how language is “perceived,” whether there might in fact be a parallel along these lines

between signed and spoken language is an intriguing question.

Unstressed English vowels often reduce to schwa, resulting in the oppositions

seen in pairs such as {photography [a], photograph [ə]} and {reflex [i], reflexive [ə]}.

Although other outcomes of vowel reduction, such as the high central [ɨ], are possible,

these will not be considered here, as schwa appears to be the most frequent such

reduction outcome and is certainly the most discussed in the relevant literature. This is

so both for English and for other languages; for example, it is well-known that some

Russian vowels undergo reduction to schwa when in certain unstressed positions. In

fact, schwa plays a “special” role in the phonological systems of most languages in

which it is a member, typically being the product of processes like reduction or

epenthesis (van Oostendorp, 2003).

Figure 1.5. The familiar vowel quadrangle, with mid central schwa also shown. The

articulatory parameters of tongue height and backness correspond roughly to the

inverses of the acoustic parameters first and second formant frequency, respectively.

In my work on spoken-language coarticulation, I have chosen schwa as target

vowel because of its susceptibility to coarticulatory influence from nearby vowels, a

property that has emerged in previous studies, both acoustic and articulatory (e.g.

Fowler, 1981, and Alfonso & Baer, 1982, respectively). The great variability in the

production of English schwa has raised the question of whether this vowel may be

completely underspecified except for a [+vocalic] or [-consonantal] feature when

considered in phonological terms (e.g. see van Oostendorp, 2003), or may be

“targetless” when considered in articulatory terms. Browman and Goldstein (1992)

investigated the latter possibility through experimental study of one speaker and

concluded that at least for that speaker, schwa was not quite targetless, but rather had a

weak target which was “completely predictable ... [corresponding] to the mean tongue

... position for all the full vowels.” (p. 56)
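The idea of a schwa target that is predictable as the mean position for the full vowels amounts to a simple averaging operation, which the Python sketch below makes explicit. This is an illustration only: the function name `centroid` is an assumption, and the coordinates are invented two-dimensional values in arbitrary units, not measurements from Browman and Goldstein (1992) or from this project.

```python
def centroid(points):
    """Return the coordinate-wise mean of a list of equal-length points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


# Hypothetical (x, y) tongue positions in arbitrary units for four full vowels.
full_vowels = {
    "i": (2.0, 8.0),
    "a": (5.0, 1.0),
    "u": (8.0, 8.0),
    "æ": (1.0, 3.0),
}

# Under the "mean of the full vowels" view, this is the predicted schwa target.
predicted_schwa = centroid(list(full_vowels.values()))
print(predicted_schwa)
```

The same averaging could in principle be applied to acoustic values such as first and second formant frequencies, though that would be a different (acoustic rather than articulatory) operationalization from the one quoted above.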

Even if schwa is not completely underspecified or targetless, its coarticulatory

tendencies are well-established and hence this vowel has seemed a logical choice as

target in the present study of long-distance coarticulation. Analysis of acoustic data

obtained in an earlier spoken-language coarticulation study has found strong evidence

that in environments containing multiple consecutive schwas, VV coarticulatory effects

can reach at least as far as six segments’ distance (Grosvald & Corina, in press). The

search for a possible analogue of such effects in sign language has led me to wonder if

neutral signing space might behave similarly to schwa in its articulatory behavior, but

this also raises the question of how comparable English schwa and ASL neutral space

are within their linguistic systems.

1.3.2. Neutral signing space

A look at the sign language phonology literature suggests that the term “neutral

space,” typically defined as the general signing area in front of the signer not

immediately adjacent to any particular body part, refers to something that may actually

not be unitary in nature. This is apparent if we consider how two prominent

phonological theories deal with neutral space.

Recall that in Brentari’s Prosodic model (Brentari, 1998), each sign is

represented by means of a feature tree in which motion is accorded special status,

having its own branch of structure in which motion-related “prosodic features” are

coded, while articulator and place-of-articulation (POA) information are located in

separate branches under a broad “inherent features” node (p. 94). Under the POA node

are features coding where on the body a sign is articulated, along with the “articulatory

plane” associated with the sign. For signs articulated in neutral space, there is no body

location specified, except for the case where the non-dominant hand is used as sign

location, in which case “h2” is taken as body location. Since the latter kind of two-handed signs are also typically articulated in the neutral space area, this model suggests

that not all signs physically articulated in that region are phonologically alike with

respect to location.

In her Hand-Tier model, Sandler also represents such “h2-P” (i.e. non-dominant

hand as Place) signs differently from other neutral-space signs. In the Hand-Tier model,

“Place” refers to the general region where a sign is articulated, and is represented as a

node which is linked to more-finely tuned “Location” nodes in the posited Location-

Movement-Location sequence. For example, the neutral-space signs WANT and

DON’T_WANT have place feature [trunk] throughout their duration, but have location

features for distance and height (“settings”) that change as needed to describe each

sign. In the case of WANT, the “distance setting” changes from [distal] to [proximal]

while the “height setting” remains set at [mid] during the articulation of the sign, while

during the articulation of DON’T_WANT, the distance setting remains set at

[proximal] while the height setting changes from [mid] to [low] (Sandler & Lillo-Martin, 2006, pp. 229-30). For neutral-space signs articulated on the non-dominant hand,

Place is specified instead as [h2] (p. 186). Neither of these theories posits that neutral-space signs’ representations are underspecified with respect to location.5 In fact, both

the Hand-Tier and Prosodic models allow for phonologically coded positional

distinctions within the neutral-space region. The signs just discussed, WANT and

DON’T_WANT, are represented in the Hand-Tier model by varying the settings of

phonological features coding—within neutral space—height of articulation and

proximity to the body.
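The Hand-Tier descriptions of WANT and DON’T_WANT given above can be restated as a small data structure, sketched here in Python. The class and field names (`Location`, `Sign`, `first`, `last`, `setting_changes`) are assumptions made for illustration and are not notation from Sandler's model. Only the feature values themselves are taken from the description above, and hand configuration and movement are omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """One Location node, with its distance and height settings."""
    distance: str
    height: str


@dataclass(frozen=True)
class Sign:
    """A sign reduced to Place plus its first and last Location nodes."""
    gloss: str
    place: str
    first: Location
    last: Location

    def setting_changes(self):
        """Return the settings that differ between the two Location nodes."""
        changes = {}
        for name in ("distance", "height"):
            before, after = getattr(self.first, name), getattr(self.last, name)
            if before != after:
                changes[name] = (before, after)
        return changes


WANT = Sign("WANT", "trunk",
            Location("distal", "mid"), Location("proximal", "mid"))
DONT_WANT = Sign("DON'T_WANT", "trunk",
                 Location("proximal", "mid"), Location("proximal", "low"))

print(WANT.setting_changes())       # distance setting changes
print(DONT_WANT.setting_changes())  # height setting changes
```

Both signs share the same Place value while differing in which setting changes, which is the sense in which positional distinctions are phonologically coded within neutral space.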

Similarly, the Prosodic model allows for “setting changes” in neutral-space

signs such as the move from [contra] to [ipsi] in the articulation of CHILDREN through

the transverse plane (i.e. toward the dominant hand’s side of the body from the other

side of the body). Such setting changes (i.e. movements) are specified on the Prosodic

Features branch of a sign’s representation, and allow for movements in both directions

and along either dimension within the plane specified as a neutral-space sign’s place of

articulation (Brentari, 1998, p. 151-4). In addition to these sorts of subdivisions of

neutral space recognized by the Hand-Tier and Prosodic models, both models also

make a distinction between neutral-space signs like the ones just described and those

articulated on the non-dominant hand, treated in both models as a separate place of

articulation.6

5

In Brentari’s theory, the lack of association with any particular body area in the case of neutral-space

signs not articulated on the non-dominant hand is not treated as a case of underspecification.

I am also unaware of any evidence suggesting that other signing locations

reduce to neutral space in particular contexts the way unstressed vowels so often reduce

to schwa (more discussion of this point, including the possible relevance to this issue of

sign whispering, follows in Section 1.3.3). Although one might expect, for instance,

that signs articulated at higher locations tend to migrate to lower locations—therefore

nearer to neutral space—when preceded and followed by lower-articulated signs during

rapid signing, an explanation of this type of process need not invoke neutral space

specifically, nor require an argument involving underspecification. For example,

Mauk’s (2003) discussion of coarticulation in this context is phrased in terms of

undershoot, and he does not claim any special status for neutral space.

6

An interesting question about “h2-P” signs is how to explain that the non-dominant hand’s physical

position is almost always itself in neutral space. Assuming that the non-dominant hand itself is not

phonologically specified as having a neutral-space location (double-marking of monosyllabic signs’

location as both “neutral-space” and “h2” would violate Battison’s (1978) observation that a sign has

only one major body area), this would seem to suggest that neutral space serves as a default position in

these cases, which would be consistent with underspecification.

Figure 1.6. Expected direction of influence of various vowels on schwa and of various

sign locations on neutral signing space (N.S.).

1.3.3. Schwa and neutral space: Comparison and contrast

In light of the preceding discussions of English schwa and ASL neutral signing

space, meaningful comparison of the two within their respective linguistic systems can

be made. The two share a significant degree of articulatory freedom—schwa is

articulated in the middle of vowel space and displays more articulatory variability than

other vowels, and neutral-space signs are articulated at an area in front of the signer’s

body where freedom of movement also appears to be relatively large. As an illustration

of the latter point, consider that the nose and chin are just a few centimeters apart and

serve as distinct sign locations, while the low and high boundaries of neutral signing

space appear to be significantly further apart. Work by Manuel and Krakow (1984) and

Manuel (1990) indicates that languages with more crowded vowel inventories tend to

display less VV coarticulation, presumably because of a smaller margin of error, which

suggests that a signing location like neutral space with fewer close neighbors might

also tend to show more coarticulatory variability. Figure 1.6 above illustrates the

expected coarticulatory influence of various linguistic elements (i.e. vowels or signing

locations) on schwa and neutral space within their respective articulatory domains. The

choice to use schwa and neutral space as coarticulation targets in the present study was

strongly motivated by this comparison.

Regardless of any articulatory similarities between schwa and neutral space,

however, the functional differences between the two are considerable. Probably the

most obvious example of such a difference is the fact that English schwa is a segment,

while post-Stokoe (1960) analyses tend to treat sign parameters like location as subsegmental entities more akin to features or autosegmental units. Perhaps the clearest

evidence for this distinction is the fact that schwa can be uttered in isolation, while

location does not have any such articulatory independence within ASL. Though

perhaps less significant, it should also be noted that freedom of movement within

neutral space is three-dimensional, while vowel space is most often depicted as two-dimensional, though this characterization of vowels is somewhat incomplete. It is also

possible that schwa admits some freedom of variation in other ways, such as via labial

action or with respect to the advanced tongue root parameter.

In addition, while the vowel-consonant distinction in spoken-language

linguistics is fairly plain, the existence of entities within sign linguistics analogous to

spoken-language consonants and vowels is not universally accepted. Where suggestions

of such a parallel might be made, whether in terms of phonological structure (e.g.

Perlmutter, 1991) or perceptual salience (e.g. Corina & Hildebrandt, 2002), the

movement parameter seems to be a much stronger candidate for a vowel analogue than

location. This alone means that schwa and neutral space may belong to significantly

different category types within the linguistic systems of English and ASL.

Finally, despite their apparent articulatory similarities, schwa and neutral space

do not appear to behave alike within their phonological systems, a fact reflected in the

lack of similarity of their treatment within the most dominant phonological models. For

example, to the best of my knowledge, ASL lacks the abundance of word pairs like

those in English mentioned earlier (photography-photograph and reflex-reflexive) that

would offer support for the existence of oppositions of neutral space with other sign

locations like the oppositions of [a] and [i] with schwa in the (American) English word

pairs just given. This indicates that neutral space is not some kind of default location

that other location values migrate to in particular circumstances like the unstressed

environments which tend to yield schwa.

At first glance, evidence against this assertion might seem to come from a

phenomenon like sign whispering, in which the signing space is substantially reduced

and may be confined to a small region such as neutral space. However, Emmorey,

McCullough and Brentari (2003, p. 41) report that this smaller articulation space in sign

whispering is restricted to “a location to the side, below the chest or to a location not

easily observed.” In other words, neutral space is not a region to which the articulation

space is exclusively limited in these circumstances, but is simply one of a number of

options. This is unlike the situation of schwa described above.

1.3.4. Schwa and neutral space: Summary

Because of their positions in their respective articulatory spaces, schwa and

neutral space share some evident similarities. Previous investigations, as well as

analysis of data to be presented in the current studies, indicate that both are susceptible

to long-distance coarticulatory effects, although admittedly this does not mean that both

are unique in this way within the inventories of their phonological systems. Sign

languages in particular have been little-investigated with respect to the possibility of

differing coarticulatory patterns among segments or other contextual variables. In any

event, both schwa and neutral space may be considered useful targets of study because

of their similar positioning in the middle of their articulatory spaces: they can both be

expected to undergo influence from “above,” “below,” or from “the side,” whether in

the literal physical sense of where the articulators (whether tongue or hands) are

located, or with respect to the somewhat more abstractly conceived formant space

location in the case of spoken language.

Nevertheless, based on the considerations explored in this paper, the schwa-neutral space analogy must be considered imperfect at best. Findings like those of

Corina and Hildebrandt (2002), as well as phonological models such as Perlmutter’s

Mora model (Perlmutter, 1991), argue for movement (perhaps in combination with

location) being a sign parameter more analogous to the vowel in spoken language,

which if so would mean that the status of schwa and neutral space within speech and

sign must be fundamentally dissimilar. Therefore, the present study’s investigations of

English and ASL coarticulation, while taking advantage of the “middle of articulation

space” position that schwa and neutral space have in common, should not be considered

an attempt to show that these two items are analogous in any deeper sense such as with

respect to their phonological status.

1.4. Dissertation outline

This dissertation continues in Chapter 2 with the presentation of a production

study of VV coarticulation in English in which two experiments were carried out. The

first of these involved a very limited set of vowel contrasts while the second

investigated a larger vowel set. Long-distance VV effects were seen over a greater

distance than has been found in previous studies like Magen’s (1997): several of the 20

speakers tested showed significant anticipatory VV effects across at least three vowels’

distance. A great deal of variation among speakers was also seen, however, and some

speakers showed no or only weak effects.

Recordings made of some of the production-study speakers were used as stimuli

for the closely-linked perception study, which was carried out simultaneously with the

production study and the results of which are presented in Chapters 3 and 4. The

perception study involved both behavioral and event-related potential (ERP)

methodologies; the behavioral results are described in Chapter 3, while the ERP study

outcomes are presented in Chapter 4. The results showed that all listeners were

sensitive to nearer-distance effects, while at further distances much more variation

between listeners was seen. Some listeners were sensitive even to effects which had

occurred over three vowels’ distance. Somewhat unexpectedly, strength of

coarticulatory effects was not significantly correlated with either speaking rate or

perceptual sensitivity to such effects.

The approach followed in the spoken-language study—that of performing a

production and perception study simultaneously—was also followed in this project’s

investigation of coarticulation in ASL. Chapter 5 describes an LL coarticulation

production study of ASL, which examined five signers and which, like the spoken-language study, found evidence of long-distance coarticulatory effects in some signers

as well as a great deal of variability among the participants. The task also included a

“non-linguistic coarticulation” component. Chapter 6 discusses the integrated

perception study, whose results indicate that at least some signers are sensitive even to

relatively subtle Location-related coarticulatory effects.

Chapter 7 is the concluding chapter of the dissertation, in which some

implications of this project’s findings are presented.

1.5. Research questions and summary of results

Following are the main questions that this research aimed to address, along with

a brief summary of the outcomes that were found.

1) How far can anticipatory VV coarticulation extend in English?

The great majority of the 38 speakers investigated here showed significant

coarticulatory VV effects over at least one intervening consonant. Several speakers

persistently showed such effects across as many as five intervening segments

(including two intervening vowels). Follow-up experiments indicated that for such

speakers, even longer-distance effects might sometimes occur, but not as consistently.

2) How far can anticipatory LL coarticulation extend in ASL?

Five signers of ASL were investigated and some evidence of LL coarticulation

was found, including apparent cases of LL effects across two or three intervening signs.

However, the strength of these effects was notably weaker than that of the speech

effects.

3) In both cases, how perceptible are these effects?

The nearer-distance speech effects were easily perceived by all 28 listeners who

took part in the speech perception study. Even the longer-distance effects, which were