International Journal of Computer Engineering and Technology (IJCET)

ISSN 0976-6367 (Print), ISSN 0976-6375 (Online)

Volume 5, Issue 11, November (2014), pp. 23-31

© IAEME: www.iaeme.com/IJCET.asp

Journal Impact Factor (2014): 8.5328 (Calculated by GISI), www.jifactor.com

A MINIMIZATION APPROACH FOR TWO LEVEL LOGIC SYNTHESIS USING CONSTRAINED DEPTH-FIRST SEARCH

Vikul Gupta

Oregon Episcopal School, Portland, Oregon, USA

ABSTRACT

Two-level optimization of Boolean logic circuits, expressed as Sum-of-Products (SOP), is an

important task in VLSI design. It is well known that an optimal SOP representation for minimizing

gate input count is a cover of prime implicants. Determination of an optimal SOP involves two steps:

the first step is generating all prime implicants of the Boolean function, and the second step is finding an

optimal cover from the set of all prime implicants. A cube absorption method for generating all

prime implicants is significantly more efficient than the tabular Quine-McCluskey method, when

implemented using the positional cube notation (PCN) to represent product cubes, and efficient bit

manipulations to implement the cube operations. The main contribution of this paper is a constrained

depth-first search (dfs) of the prime implicant decision tree to find the optimal cover, which is posed

as a combinatorial optimization problem. A virtual representation of the decision tree is arranged

such that the first path explored in a depth first search manner corresponds to the greedy solution to

this problem, and further paths are explored only if they improve the current minimum solution. The

exponential explosion of the search space is tamed by using this efficient search approach, and

terminating the search at suitably large thresholds. Numerical results of synthesizing benchmark

PLA functions show that for a vast majority of the tests, the results produced by the constrained dfs

approach are as good as or better than those produced by a state-of-the-art logic synthesis tool. Another

interesting observation is that the greedy solution is often a minimum solution, and even in the other

cases, it was not improved significantly by the dfs.

Keywords: Two-Level Logic Synthesis, Minimum Prime Implicant Covers, Constrained Depth First

Search, PCN Implementation of Product Cubes, VLSI Design.

I. INTRODUCTION

Digital electronics has revolutionized our world in the last 50 years. Starting with arithmetic

computations on behemoth computers, digital systems are now pervasive: in smart

phones, notepads, audio and video systems, medical devices, automotive systems, and of course, in

global communication systems and back offices of all corporations. This has been enabled by the

exponential increase in the number of transistors that can be integrated on a silicon wafer since the

1960s, with state of the art chips today containing upwards of 5 billion transistors [1]. With such

powerful implementation technologies available, increasingly innovative and complex systems are

being designed and implemented in semiconductor technology. Logic design of such systems is

feasible only with electronic design automation (EDA) systems, because such complex systems

cannot be designed manually. As a result, automated synthesis of optimal logic design from high

level description has received considerable research attention over the years. This paper describes a

new logic synthesis approach for minimization of two level logic circuits.

In the high-level design of digital systems, combinational logic is expressed as Boolean

expressions in some suitable format, typically expressions in a hardware description language such

as SystemVerilog. Logic synthesis is the process of transforming these expressions to a network of

logic gates which can be implemented with the desired semiconductor technology. Two-level

synthesis converts the logic functions to a sum-of-products (SOP) form which is technology

independent. Multiple two-level networks of AND and OR gates representing a logic function can be

generated. The goal is to find the network which uses the minimum number of gates. Even though

this was one of the early problems analyzed in logic synthesis, it is still a significant problem. Circuit

realizations with technologies like programmable logic arrays (PLAs) benefit directly from optimal

SOP representations. Further, a common approach to logic synthesis is to first perform technology

independent optimization of logic in a representation like SOP, and then map this technology

independent solution to the appropriate semiconductor technology.

It is well known that a minimal sum-of-products representation with respect to the number of

gates is a cover of prime implicants [2, 3]. Determining the optimal SOP representation involves first

determining all prime implicants of the logic function, and then selecting a set of prime implicants

that cover the function with minimum number of gates. Karnaugh maps provide a manual, textbook

approach for determining the prime implicants of a Boolean function. Then, ad hoc heuristics can be

employed to select a low cost SOP implementation. This approach works for 5 or 6 variables;

beyond that, it is not humanly possible to manage all the possibilities. A tabulation procedure, known

as the Quine-McCluskey method of reduction [3], can be used to determine all prime implicants

given the on-set or minterms of a logic function. This systematic procedure could be programmed on

a computer to automatically generate prime implicants of functions with larger number of variables.

Once all prime implicants have been obtained, prime covers are generated using the prime implicant

charts. Petrick's method provides another interesting approach for generating all the covers from

the set of prime implicants. The cost of each of these covers can be obtained in terms of the number

of gates used, and the minimum cover is selected as the exact minimum solution. The fundamental

problem is the exponential growth in the search space as the problem size in terms of the number of

input variables increases. Heuristic approaches described in [6] are used to get around this problem

of exponential increase in search space and compute resources. These approaches do not guarantee

the exact minimum solutions but lead to sub-optimal solutions which are quite efficient in practice.

This paper alleviates the limitations of the current exact minimization approaches with a new

search scheme that allows exact minimization of larger problems. An efficient software implementation of

the cube absorption approach [3, 4] is used for generating all prime implicants of a logic function. New

algorithms for cube operations in PCN representation are presented. The main contribution of this

paper is a constrained depth first search approach to determine exact minimum cost covers of prime

implicants. A decision tree of prime implicants is arranged in a manner that enables rapid search of

minimum solutions; in particular, the greedy solution is the first solution obtained in this search.

Further, the dfs search is constrained to traverse only those paths that could potentially improve the

current minimum cost. Details of this method are described in Section 4 of this paper.

This paper is organized as follows: Section 2 describes the necessary background ideas

needed in this work. Cube operations and their efficient implementation are described in Section 3.

The main contribution of this work, the cost-constrained depth first search, is described in detail in

Section 4. Numerical results are presented in Section 5, comparing this approach with state-of-the-art

logic synthesis tools, to quantify the benefits of this approach. Finally, Section 6 discusses

conclusions of this work and possible future extensions.

II. BACKGROUND

Minimal two level logic synthesis, in terms of sum-of-products, of Boolean logic functions, is

discussed in this paper. This section reviews relevant concepts for this discussion. Note that,

mathematically, Boolean algebra characterizes a class of abstract algebras. However, in this paper,

the commonly understood meaning of Boolean algebra as the binary Boolean algebra or switching

algebra is used.

The Boolean values are denoted as 0 and 1. Thus, the set of Boolean values is $B = \{0, 1\}$. A Boolean variable takes one of these Boolean values, $x \in B$. The domain of $n$ input variables $\{x_1, x_2, \ldots, x_n\}$ is $B^n$. Thus, $f: B^n \rightarrow B$ is a Boolean function of $n$ input variables. The on-set of a function, $f$, is defined as $f^{on} = \{x \in B^n \mid f(x) = 1\}$. Similarly, the off-set is $f^{off} = \{x \in B^n \mid f(x) = 0\}$. Note that since $f(x) \in B$, 0 and 1 are the only possible values of $f(x)$; thus, the on-set, $f^{on}$, completely specifies the Boolean function.

Boolean values of variables can be viewed as a string of binary values, which can be interpreted as an unsigned decimal number. For example, suppose a 3-input variable, $x \in B^3$, has the values $(x_1, x_2, x_3) = (1, 1, 0)$. These values can be viewed as the binary string 110, which may be interpreted as the number 6. With this interpretation, all possible values of $n$ variables can be represented as integers between 0 and $2^n - 1$. In particular, the on-set of a Boolean function can be specified as a subset of integers in this range. For example, $f = x_1 x_2 + x_3$ is equivalently defined by $f^{on} = \{1, 3, 5, 6, 7\}$.
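The integer encoding of the on-set can be sketched in a few lines of C++. This is only an illustration under stated assumptions: the helper names `onSet`, `var`, and `exampleF` are hypothetical, and $x_1$ is taken as the most significant bit so that the string 110 reads as 6, as in the text. The example function is $f = x_1 x_2 + x_3$, which is consistent with the on-set {1,3,5,6,7} quoted above.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

// Enumerate the on-set of an n-input Boolean function (n < 32) as integers
// in [0, 2^n - 1], in ascending order.
std::vector<uint32_t> onSet(unsigned n,
                            const std::function<bool(uint32_t)>& f) {
  std::vector<uint32_t> result;
  for (uint32_t v = 0; v < (1u << n); ++v)
    if (f(v)) result.push_back(v);
  return result;
}

// Hypothetical helper: value of x_i (1-based) in the n-bit assignment v.
// x_1 is the most significant bit, so (x1, x2, x3) = (1, 1, 0) reads as 6.
bool var(uint32_t v, unsigned n, unsigned i) {
  return ((v >> (n - i)) & 1u) != 0;
}

// The example function f = x1 x2 + x3 over three inputs.
bool exampleF(uint32_t v) {
  return (var(v, 3, 1) && var(v, 3, 2)) || var(v, 3, 3);
}
```

Calling `onSet(3, exampleF)` enumerates the five vertices 1, 3, 5, 6, and 7.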

A literal is an input variable in true or complemented form, that is, $x_i$ or $x_i'$. A product term is a product of one or more literals. If all the variables are present in a product term, it is called a minterm. The on-set of a minterm is one integer. For example, the on-set of $x_1 x_2' x_3$ is {5}. Similarly, the on-set of the product term $x_1' x_3$ is {1, 3}.

A product term, $p$, is an implicant of a Boolean function, $f$, if $p \Rightarrow f$, or equivalently, $p^{on} \subseteq f^{on}$. An implicant is a prime implicant of $f$ if it does not imply any other implicant of $f$, that is, if no other implicant of $f$ contains its on-set. A set of prime implicants, $P = \{p_1, p_2, \ldots, p_t\}$, is said to cover the function, $f$, if the union of the on-sets of these prime implicants is equal to the on-set of the function, $P^{on} = \bigcup_i p_i^{on} = f^{on}$. A set of prime implicants is irredundant if no prime implicant can be removed from the set without changing its on-set. As shown in [2], a minimal sum-of-products representation of a Boolean function must be an irredundant set of prime implicants. Thus, using the number of gates as the cost, a minimal two-level implementation is an irredundant set of prime implicants.

Minimal two-level representations of Boolean functions are obtained in two steps. First,

generate all possible prime implicants of the Boolean function, and, second, search for the lowest cost

irredundant set of prime implicants that covers the function, from all possible sets. The textbook

approach to generate all prime implicants of a function is the Quine-McCluskey tabulation approach

[2, 3]. However, it is noted that a cube absorption approach [4, 5], with efficient algorithmic

implementation described in the next section, results in significantly better performance on large

problems. The second aspect of minimal two-level synthesis is searching for the lowest cost

irredundant set of prime implicants covering the Boolean function [6]. The prime-implicant chart and

Petrick's method are common approaches for exhaustively enumerating all possible covers. These

exhaustive approaches are limited to small problems, because of exponential growth as the number

of input variables increases. A combinatorial optimization approach to this problem leads to efficient

solutions. This paper presents a greedy algorithm [7], which can be extended to constrained depth

first search for an exact minimum.

III. PRIME IMPLICANTS WITH CUBE ABSORPTION

Computational Boolean reasoning can be performed very efficiently with vertices of a unit cube in $n$-dimensional space [4]. Minterms of $n$ Boolean variables can be represented as vertices of an $n$-d unit cube. For example, a 3 variable minterm, $x_1 x_2' x_3$, can be represented as the vertex of the 3-d unit cube with coordinates $x_1 = 1$, $x_2 = 0$, $x_3 = 1$. A string representation of this minterm is “101”. Product terms correspond to a set of vertices which form a sub-cube. For example, the product term $x_1' x_2$ has the string representation “01*”, where the ‘*’ indicates that the corresponding variable is missing. In higher dimensions, a 5-d sub-cube “01*0*” consists of 4 minterms “01000,” “01001,” “01100,” and “01101.” A cube that has $k$ ‘*’ entries contains $2^k$ minterms. A cube set represents a union of all the vertices of the cubes in the set. Thus, a function is represented as a cube set which contains cubes whose union has the same vertices as the on-set of the function. For instance, the vertices in the on-set of the 3 input function $f = x_1' x_2' + x_2 x_3'$ are the union of vertices in two cubes, so this function is represented by the cube set {“00*”, “*10”}.
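The relation between cube strings and on-set vertices can be made concrete with a small C++ sketch. The function names `expandCube` and `onSetOfCubes` are hypothetical, and the leftmost character of a cube string is assumed to be the most significant bit when a minterm is read as an integer.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Expand a cube string over {'0', '1', '*'} into its minterm strings.
// Each '*' doubles the number of minterms, which come out in ascending order.
std::vector<std::string> expandCube(const std::string& cube) {
  std::vector<std::string> out(1, "");
  for (char c : cube) {
    std::vector<std::string> next;
    for (const std::string& prefix : out) {
      if (c == '*') {
        next.push_back(prefix + '0');
        next.push_back(prefix + '1');
      } else {
        next.push_back(prefix + c);
      }
    }
    out.swap(next);
  }
  return out;
}

// On-set of a cube set as integers. std::set keeps vertices that are shared
// by several cubes only once, which models the union of the cubes.
std::set<unsigned> onSetOfCubes(const std::vector<std::string>& cubes) {
  std::set<unsigned> vertices;
  for (const std::string& cube : cubes)
    for (const std::string& m : expandCube(cube))
      vertices.insert(static_cast<unsigned>(std::stoul(m, nullptr, 2)));
  return vertices;
}
```

With these helpers, `expandCube("01*0*")` lists the four minterms named in the text, and the cube set {“00*”, “*10”} expands to the vertices 0, 1, 2, and 6.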

Many operations defined on these unit cubes are useful for Boolean computations.

Intersection of two cubes is defined, like sets, as the vertices common to both cubes. These vertices

form a sub-cube which is contained in both cubes. If there are no common vertices, the intersection

is a null cube. For example, the intersection of two cubes “10**1” and “10*1*” is the sub-cube

“10*11”, and intersection of “10**1” and “11***” is the null cube. Intersection of cubes is widely

used in the current work to determine whether a vertex is covered by a cube. Cube absorption is an

important cube operation that combines two adjacent cubes to form one larger cube. Adjacent cubes

have identical values for all variables except one, and that variable has the complemented form in

one cube and the uncomplemented form in the other cube. These cubes are absorbed into a single

cube in which this variable is missing. As an example, consider cubes “0*1*1” and “0*0*1.” These

cubes can be combined to form the absorbed cube, “0***1.” Note that the cube “0*1*1” cannot be

combined with the cube “1*0*1” because these cubes differ in 2 variables.
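Before turning to the bit-level representation, the two cube operations can be sketched directly on cube strings. This is a readable reference version, not the implementation used in this paper; the names `intersectCubes` and `absorbCubes` are hypothetical, and an empty string stands in for the null cube.

```cpp
#include <cassert>
#include <string>

// Intersection of two cubes of equal length; "" represents the null cube.
std::string intersectCubes(const std::string& a, const std::string& b) {
  std::string out(a.size(), '*');
  for (size_t i = 0; i < a.size(); ++i) {
    if (a[i] == '*') out[i] = b[i];
    else if (b[i] == '*' || b[i] == a[i]) out[i] = a[i];
    else return "";  // one cube requires 0 here and the other requires 1
  }
  return out;
}

// Absorption of two adjacent cubes: they must agree everywhere except in one
// position that holds 0 in one cube and 1 in the other. Returns "" otherwise.
std::string absorbCubes(const std::string& a, const std::string& b) {
  int diff = -1;
  for (size_t i = 0; i < a.size(); ++i) {
    if (a[i] == b[i]) continue;
    if (a[i] == '*' || b[i] == '*' || diff != -1) return "";
    diff = static_cast<int>(i);
  }
  if (diff == -1) return "";  // identical cubes: nothing to absorb
  std::string out = a;
  out[diff] = '*';            // the differing variable drops out
  return out;
}
```

The examples from the text serve as a check: “10**1” and “10*1*” intersect in “10*11”, and “0*1*1” and “0*0*1” absorb into “0***1”.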

The Positional Cube Notation (PCN) representation [6] along with efficient implementation

of the cube operations is discussed next. Each variable is represented by two bits which hold the

enum values, {null, one, zero, both}. Pseudo-code for setting and getting the value of the $i^{th}$ variable is

shown below. Beyond compact representation of cube data, PCN enables efficient

implementation of the cube operations, such as a bitwise AND for cube intersection. Pseudo-code for

the intersect operation is shown below. The intersect operator is used to determine whether a vertex cube

is contained in a larger cube. A new, powerful algorithm that determines whether two cubes can be

absorbed, and absorbs them if possible, is implemented using bitwise XOR. The bitwise XOR

operation results in the special null value in all the places where the corresponding variables are

identical. Thus, if exactly one variable has a non-null value, then the cubes can be absorbed and that location

gets the value both in the absorbed cube. Pseudo-code for the xor and absorb operations is shown below.

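#include <cstdint>

enum CLit { Null = 0, One = 1, Zero = 2, Both = 3 };  // two bits per variable

class CubeC {
  unsigned mDim = 0;   // number of variables; 0 marks the null cube
  uint64_t mData = 0;  // packed PCN fields, variable i in bits 2i and 2i+1
public:
  void setIthVar(unsigned i, CLit val)  // clear the field, then write val
  { mData = (mData & ~(3ULL << 2*i)) | ((uint64_t)val << 2*i); }
  CLit getIthVar(unsigned i) const
  { return (CLit)(3 & (mData >> 2*i)); }
  void setNull() { mDim = 0; mData = 0; }
  bool isNull() const { return mDim == 0; }
  // Intersection is a bitwise AND; a Null field means no common vertex.
  void intersect(const CubeC& c1, const CubeC& c2) {
    mDim = c1.mDim;
    mData = c1.mData & c2.mData;
    for (unsigned i = 0; i < mDim; i++)
      if (getIthVar(i) == Null) { setNull(); }
  }
  // For a vertex c1, a non-null intersection means c1 lies in this cube.
  bool containsCube(const CubeC& c1) const {
    CubeC check; check.intersect(c1, *this);
    return (!check.isNull());
  }
  // Named cubeXor because xor is a reserved operator token in C++.
  void cubeXor(const CubeC& c1, const CubeC& c2) {
    mDim = c1.mDim; mData = c1.mData ^ c2.mData;
  }
  // Absorb c1 and c2 if they differ in exactly one variable, else set null.
  void absorb(const CubeC& c1, const CubeC& c2) {
    CubeC ct; ct.cubeXor(c1, c2);
    int index = -1;
    for (unsigned i = 0; i < c1.mDim; i++) {
      if (ct.getIthVar(i) != Null) {
        if (index == -1) { index = i; }
        else { setNull(); return; }  // differ in more than one variable
      }
    }
    if (index == -1) { setNull(); return; }  // identical cubes
    *this = c1;
    setIthVar(index, Both);
  }
};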
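The bit-level behaviour can be checked on the string examples from earlier in this section. The following self-contained sketch is an illustration only: `encodePcn`, `decodePcn`, `pcnIntersect`, and `pcnAbsorb` are hypothetical helpers, the two-bit codes follow the enum order {null, one, zero, both} (00, 01, 10, 11), and a 64-bit word limits the sketch to 32 variables. As an extra safeguard that the text does not spell out, the absorb check also requires the single non-null XOR field to equal both, which rules out pairing a literal with a missing variable.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

enum CLit { Null = 0, One = 1, Zero = 2, Both = 3 };

// Pack a cube string into PCN; character i occupies bits 2i and 2i+1.
uint64_t encodePcn(const std::string& cube) {
  uint64_t data = 0;
  for (size_t i = 0; i < cube.size(); ++i) {
    uint64_t lit = cube[i] == '1' ? One : (cube[i] == '0' ? Zero : Both);
    data |= lit << (2 * i);
  }
  return data;
}

// Unpack n variables; returns "" if any field is Null (the null cube).
std::string decodePcn(uint64_t data, size_t n) {
  std::string cube(n, '*');
  for (size_t i = 0; i < n; ++i) {
    uint64_t lit = (data >> (2 * i)) & 3u;
    if (lit == Null) return "";
    cube[i] = lit == One ? '1' : (lit == Zero ? '0' : '*');
  }
  return cube;
}

// Intersection is a single bitwise AND of the packed words.
std::string pcnIntersect(const std::string& a, const std::string& b) {
  return decodePcn(encodePcn(a) & encodePcn(b), a.size());
}

// Absorption: XOR the words; exactly one field may be non-null, and it must
// be Both (One ^ Zero). That field is then set to Both in the result.
std::string pcnAbsorb(const std::string& a, const std::string& b) {
  uint64_t x = encodePcn(a) ^ encodePcn(b);
  int index = -1;
  for (size_t i = 0; i < a.size(); ++i) {
    uint64_t lit = (x >> (2 * i)) & 3u;
    if (lit == Null) continue;
    if (lit != Both || index != -1) return "";
    index = static_cast<int>(i);
  }
  if (index == -1) return "";  // identical cubes
  return decodePcn(encodePcn(a) | (uint64_t(Both) << (2 * index)), a.size());
}
```

Both operations touch the whole cube with one machine instruction, followed by a linear scan over the two-bit fields.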

Using the cube operations described above, prime implicants can be obtained by absorbing

adjacent cubes as much as possible. Absorption over a vector of cubes, denoted lower in the pseudo-code

below, considers all possible pairs of cubes. If possible, absorption of the cubes is performed, and the

absorbed cube is stored in a set of cubes. The cubes that were absorbed are marked accordingly. Upon

completion, the unique cubes in the set are transferred to a vector called higher. Thus, all possible

absorptions of cubes in lower are performed and the results are stored in the higher vector. The cubes that are

not absorbed are prime implicants. The process is repeated until no more absorption is possible.

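// One absorption pass over all pairs in lower; merged cubes go to higher.
// Assumes CubeC defines operator< so that std::set can hold unique cubes.
void absorb(vector<CubeC>& lower, vector<CubeC>& higher)
{
  set<CubeC> cset;
  const int sz = (int)lower.size();
  for (int i = 0; i < sz - 1; i++) {
    for (int j = i + 1; j < sz; j++) {
      CubeC temp; temp.absorb(lower[i], lower[j]);
      if (!temp.isNull()) {
        cset.insert(temp);
        lower[i].setIncluded(); lower[j].setIncluded();  // both were absorbed
      }
    }
  }
  higher.assign(cset.begin(), cset.end());
}

// Repeat passes until nothing is left; unabsorbed cubes are prime implicants.
// setIncluded, included, toVector and addCube are helpers not listed here.
void primeImplicants(CubeSetC& vertices)
{
  vector<CubeC> lower, higher;
  vertices.toVector(lower);
  while (!lower.empty()) {
    absorb(lower, higher);
    for (auto it = lower.begin(); it != lower.end(); ++it) {
      if (!it->included())
        addCube(*it);  // never absorbed at this level: a prime implicant
    }
    lower = higher;
  }
}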
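The iteration above can be tried out with a self-contained sketch that works on cube strings instead of PCN words. It follows the same lower/higher scheme, but it is only an illustration: `absorbPair` and this string-based `primeImplicants` are hypothetical stand-ins for the PCN-based routines, and "" is used as the null cube.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Merge two cubes that differ in exactly one position holding 0 in one cube
// and 1 in the other; returns "" when the cubes are not adjacent.
std::string absorbPair(const std::string& a, const std::string& b) {
  int diff = -1;
  for (size_t i = 0; i < a.size(); ++i) {
    if (a[i] == b[i]) continue;
    if (a[i] == '*' || b[i] == '*' || diff != -1) return "";
    diff = static_cast<int>(i);
  }
  if (diff == -1) return "";
  std::string out = a;
  out[diff] = '*';
  return out;
}

// All prime implicants of the function whose on-set is given as minterms.
std::set<std::string> primeImplicants(const std::vector<std::string>& minterms) {
  std::set<std::string> primes;
  std::set<std::string> unique(minterms.begin(), minterms.end());
  std::vector<std::string> lower(unique.begin(), unique.end());
  while (!lower.empty()) {
    std::vector<bool> included(lower.size(), false);
    std::set<std::string> higher;  // the set drops duplicate absorbed cubes
    for (size_t i = 0; i + 1 < lower.size(); ++i) {
      for (size_t j = i + 1; j < lower.size(); ++j) {
        std::string merged = absorbPair(lower[i], lower[j]);
        if (merged.empty()) continue;
        higher.insert(merged);
        included[i] = true;
        included[j] = true;
      }
    }
    for (size_t i = 0; i < lower.size(); ++i)
      if (!included[i]) primes.insert(lower[i]);  // never absorbed: prime
    lower.assign(higher.begin(), higher.end());
  }
  return primes;
}
```

For the on-set {1,3,5,6,7} of a 3-input function, the first pass produces five cubes with one ‘*’, the second pass merges four of them into “**1”, and the cubes left unabsorbed, “11*” and “**1”, are the prime implicants.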

IV. CONSTRAINED DFS SEARCH

The main result of this paper, a constrained depth first search approach to determine the minimum cost cover from the set of prime implicants, is presented in this section. Given the set of all prime implicants, the decision problem is to select a subset of prime implicants that covers the Boolean logic function with minimum cost. For each prime implicant, the algorithm has to decide whether to include it in the optimal subset or not. Thus, if the cardinality of the set of all prime implicants, $P = \{p_1, p_2, \ldots, p_t\}$, is $t$, its power set, $2^P$, the set of all subsets of prime implicants, has cardinality $2^t$. Clearly, not all subsets satisfy the criterion of covering the entire Boolean function; for example, the empty set does not cover the function. The combinatorial optimization problem is to select the subset of prime implicants that has a minimal cost while satisfying the aforementioned criterion. Denoting the cost of a subset of prime implicants, $S$, as $C(S)$, the combinatorial optimization problem is to characterize the minimal set for two-level synthesis defined as:

$$S_{opt} = \{ S \in 2^P \mid C(S) \le C(Q) \;\; \forall Q \in 2^P \text{ with } S^{on} = Q^{on} = f^{on} \}$$

The cost, $C(S)$, is typically the number of gates or the number of inputs to these gates. For

example, the cost for the product cube, “*01*1”, could either be 1 gate, from the AND gate, or 3

inputs, for the inputs to the AND gate. Fan-in limited, minimal, two-level synthesis could also be

formulated by either eliminating high fan-in prime implicants from the initial set P, or assigning a

very large cost to these prime implicants. The numerical examples presented in the next section use

the number of inputs to gates of the two-level implementation as the cost function. Note however,

that the approach described below will work for any other cost function as well.
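The cost functions discussed here are simple to state in code. The sketch below is an illustration under assumptions: the function names and the penalty constant `kVeryLargeCost` are hypothetical, and the cost of a cover is assumed to be the sum of the AND-gate input counts of its cubes, without counting the inputs of the OR gate.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Input-count cost of one product cube: the number of literals, that is,
// the number of positions that are not '*'.
int cubeInputCost(const std::string& cube) {
  int cost = 0;
  for (char c : cube)
    if (c != '*') ++cost;
  return cost;
}

// Assumed cover cost: sum of the AND-gate inputs over all cubes in the cover.
int coverInputCost(const std::vector<std::string>& cover) {
  int total = 0;
  for (const std::string& cube : cover) total += cubeInputCost(cube);
  return total;
}

// Fan-in limited variant: cubes above the limit receive a very large cost so
// that a minimum cost search avoids them. The penalty value is hypothetical.
const int kVeryLargeCost = 1000000;

int fanInLimitedCost(const std::string& cube, int maxFanIn) {
  int inputs = cubeInputCost(cube);
  return inputs > maxFanIn ? kVeryLargeCost : inputs;
}
```

For the cube “*01*1” the input-count cost is 3, matching the example in the text; swapping in a different cost function leaves the search procedure unchanged.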

Flexibility of data organization in C++ allows representation of the prime implicant data in a

manner that allows a virtual representation of the decision tree, so that the exponentially large

decision tree is never created in memory. This data representation can be viewed as an extension of

the classical Prime-implicant chart. For each vertex in the on-set (or row of the Prime-implicant

chart), a vector of prime implicants containing that vertex is created. However, each vector of prime

implicants can be in any arbitrary order, and is not constrained to be in the column order of the

Prime-implicant chart. Therefore, this vector for each minterm is sorted in an ascending order of

cost, so that the minimum cost prime implicant is the first element of the vector. Furthermore, the

vertices can be visited in any arbitrary order, not necessarily in the order presented by the input

representation. Therefore, the vector of vertices is also sorted in an ascending order such that the

minterm covered by the least cost prime implicant is first. A feasible subset, $S$, corresponding to a

cover of the logic function, may be obtained by selecting any prime implicant from its vector for

each vertex. A virtual representation of the decision tree is obtained as a vector of indices referring to

a prime implicant for each vertex, without explicitly creating the tree in memory. A depth first search

of this tree simply maps to an algorithm for incrementing these indices in a manner that simulates

depth first traversal of the virtual search tree. With this decision tree paradigm, a cover of the

Boolean function is a full path from the root to leaf of this tree.
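The data arrangement described above can be sketched as follows. This is an illustration, not the data structure used in this paper: `Row`, `covers`, `literalCount`, and `buildChart` are hypothetical names, cubes are held as strings, the cost is taken to be the literal count, and every vertex is assumed to be covered by at least one prime implicant.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// True when every fixed literal of the cube matches the vertex string.
bool covers(const std::string& cube, const std::string& vertex) {
  for (size_t i = 0; i < cube.size(); ++i)
    if (cube[i] != '*' && cube[i] != vertex[i]) return false;
  return true;
}

// Cost used for ordering: number of literals (AND-gate inputs) in the cube.
int literalCount(const std::string& cube) {
  return static_cast<int>(std::count_if(
      cube.begin(), cube.end(), [](char c) { return c != '*'; }));
}

// One row per on-set vertex: indices of the primes covering it.
struct Row {
  std::string vertex;
  std::vector<int> primes;
};

std::vector<Row> buildChart(const std::vector<std::string>& vertices,
                            const std::vector<std::string>& primes) {
  std::vector<Row> chart;
  for (const std::string& v : vertices) {
    Row row;
    row.vertex = v;
    for (size_t p = 0; p < primes.size(); ++p)
      if (covers(primes[p], v)) row.primes.push_back(static_cast<int>(p));
    // Cheapest covering prime first within each row.
    std::stable_sort(row.primes.begin(), row.primes.end(), [&](int a, int b) {
      return literalCount(primes[a]) < literalCount(primes[b]);
    });
    chart.push_back(row);
  }
  // Visit first the vertices whose cheapest covering prime costs least.
  std::stable_sort(chart.begin(), chart.end(), [&](const Row& a, const Row& b) {
    return literalCount(primes[a.primes.front()]) <
           literalCount(primes[b.primes.front()]);
  });
  return chart;
}
```

A path in the virtual decision tree is then just one index into `primes` per row, so no tree nodes need to be allocated.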

Algorithms for combinatorial optimization that take the best possible option at

every stage are known as greedy algorithms. While there may be situations where expensive steps in

the beginning lead to better options later on so that the global solution is better than the greedy

solution, usually greedy approaches lead to good results. This is certainly the case for this problem as

will be shown in the next section. The greedy solution is provided by the first, left most path of the

depth first search, since the procedure picks the least costly prime implicants at every step. After

determining the greedy solution, the search proceeds in a depth first manner, but with certain

constraints. The first constraint is that if a vertex has already been covered by previously selected prime

implicants, the search moves on to the next vertex. The second constraint, which enhances the search

performance, is that whenever the current cost exceeds the previously computed minimum cost, the

current path is skipped, and the search backtracks to explore the remaining paths. Finally, for a large

number of variables, the number of potential covers grows exponentially, and a complete

exact minimization search becomes prohibitive. For such cases, some limiting threshold can be used

to terminate the search when this limit is reached. With the greedy arrangement of the data, having

lower cost prime implicants earlier in the search phase, leads to good results even when such limits

terminate the search.

V. NUMERICAL RESULTS

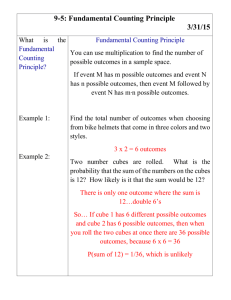

Results of two-level logic synthesis of several single output PLA benchmarks from MCNC

tests [8] are presented in Table 1. The number of inputs in column 2 roughly quantifies the size of

the problem. Cost of the initial PLA representation and the first greedy solution cost are listed next.

While the search terminated in a majority of the benchmarks resulting in an exact minimum solution,

some searches were too large, and limiting thresholds had to be applied. The next two columns show

the costs after limiting the searches at 300,000 and 1,000,000 covers. The last column shows whether the

exact minimum was found with the search completing, or the search hit the limiting threshold and

the best available result is reported. In some cases, the inverted function produced a more efficient logic

implementation; this is reported in the second-to-last column.

The results from the constrained depth first search algorithm were compared to ABC [9], a state-of-the-art logic synthesis tool in the open literature. Instead of exact minimization algorithms, ABC uses heuristic

transformations of the input design, which lead to suboptimal logic circuits. Though these results are not guaranteed to be optimal, they are usually very good, close to the

optimal solution. The use of heuristics allows fast computation of suboptimal solutions for large

problems.

The results from constrained depth first search are minimal when the search ran to completion. This

is corroborated by the results in Table 1. The exact results from constrained depth first search are

as good as, or better than, the ABC results. In some cases, most notably rd84f1, the exact minimum from

constrained dfs search was significantly better than the heuristic result of ABC. Even

for large problems where constrained dfs search was terminated prematurely, the results are mostly as good as

or better than those produced by ABC. Only in a couple of cases with 9-10 variables was the heuristic

result from ABC better.

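Table 1: Two-level synthesis results for single output MCNC PLA benchmarks (cost is the number of gate inputs)

| Benchmark | Inputs | Initial | Greedy | Min 300K | Min 1M | ABC min | Inverted? | Exact? |
|-----------|--------|---------|--------|----------|--------|---------|-----------|--------|
| 9sym      | 9      | 1553    | 504    | 504      | 504    | 504     | yes       | yes    |
| con1f1    | 7      | 18      | 11     | 11       | 11     | 20      | no        | no     |
| con2f2    | 7      | 20      | 14     | 14       | 14     | 14      | yes       | yes    |
| exam1     | 3      | 12      | 12     | 12       | 12     | 12      | no        | yes    |
| exam3     | 4      | 24      | 13     | 13       | 13     | 13      | no        | yes    |
| life      | 9      | 1260    | 532    | 532      | 532    | 532     | yes       | yes    |
| max46     | 9      | 408     | 408    | 408      | 408    | 381     | no        | no     |
| newill    | 8      | 137     | 35     | 35       | 35     | 35      | yes       | no     |
| newtag    | 8      | 75      | 18     | 18       | 18     | 21      | no        | no     |
| rd53f1    | 5      | 30      | 20     | 20       | 20     | 20      | no        | yes    |
| rd53f2    | 5      | 100     | 40     | 40       | 40     | 40      | yes       | yes    |
| rd53f3    | 5      | 80      | 80     | 80       | 80     | 80      | no        | yes    |
| rd73f1    | 7      | 272     | 272    | 272      | 272    | 288     | no        | yes    |
| rd73f2    | 7      | 448     | 448    | 448      | 448    | 448     | no        | yes    |
| rd73f3    | 7      | 224     | 140    | 140      | 140    | 140     | no        | yes    |
| rd84f1    | 8      | 960     | 644    | 644      | 644    | 701     | no        | yes    |
| rd84f2    | 8      | 1024    | 1024   | 1024     | 1024   | 1024    | no        | yes    |
| rd84f3    | 8      | 8       | 8      | 8        | 8      | 8       | no        | yes    |
| rd84f4    | 8      | 1296    | 288    | 288      | 288    | 288     | yes       | yes    |
| sao2f1    | 10     | 92      | 92     | 92       | 92     | 99      | yes       | no     |
| sao2f2    | 10     | 200     | 135    | 135      | 135    | 200     | yes       | no     |
| sao2f3    | 10     | 800     | 97     | 97       | 97     | 85      | no        | no     |
| sao2f4    | 10     | 791     | 58     | 58       | 58     | 125     | yes       | no     |
| sym10     | 10     | 8370    | 930    | 930      | 930    | 930     | yes       | yes    |
| xor5      | 5      | 80      | 80     | 80       | 80     | 80      | no        | yes    |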

Further, it should be noted that the results did not change at all between the two runs which

were terminated after 300,000 and 1,000,000 covers. In fact, the greedy solution has not been

improved at all in the results presented here. In other situations, the greedy results have improved in

subsequent search, but typically the change has occurred within tens of thousands of steps.

VI. CONCLUSION

This paper presents a constrained depth-first search approach for the synthesis of optimal

two-level logic circuits. When the search terminates, the solution is an exact minimum solution for

that Boolean function. This guaranteed minimum solution is as good as or better than the results of heuristic state-of-the-art tools. When the search space hits the exponential knee for a large number of input

variables, the search can be terminated at some large threshold, and the resulting minimum is

comparable to state-of-the-art tools. It is often the case that the greedy solution or improvements to

this solution in the early stages of the search lead to a minimum solution. Also, it is noted that the

virtual representation of the decision tree ensures that even for large problems, available memory

does not pose any limitation: it is the computational time that may get too large. Thus, this approach

allows for the possibility of obtaining a minimal result if long computational times are acceptable.

An extension of this work is to investigate applications of the constrained dfs approach to multi-valued logic synthesis.

VII. ACKNOWLEDGEMENTS

I am grateful to Professor Marek Perkowski of the Electrical and Computer

Engineering Department at Portland State University, Portland, Oregon, for his patient nurturing and

guidance in the field of logic synthesis, and inculcating an unrelenting pursuit of precision and

clarity in research. I would also like to thank all my science teachers at Oregon Episcopal School for

their support of experiential research beyond the traditional science curriculum.

VIII. REFERENCES

[1] Transistor count. (2014, November 23). Retrieved November 25, 2014, from

http://en.wikipedia.org/wiki/Transistor_count

[2] Kohavi, Z., and Jha, N. K., “Switching and Finite Automata Theory,” Third Edition,

Cambridge University Press, Cambridge, 2010.

[3] Roth, C. H. Jr, “Fundamentals of Logic Design,” Fourth Edition, PWS Publishing

Company, Boston, MA, 1995.

[4] Lee, C. Y., “Switching Functions on an n-Dimensional Cube,” Transactions of the

American Institute of Electrical Engineers, vol 73, part I, pp.142-146, September, 1954.

[5] Rawat, S, and Sah, A., “Prime and Essential Prime Implicants of Boolean Functions through

Cubical Representation,” International Journal of Computer Applications, Vol.70, No. 23,

May 2013.

[6] De Micheli, G., “Synthesis and Optimization of Digital Circuits,” McGraw Hill, Inc, New York,

NY, 1994.

[7] Cook, W. J., Cunningham, W. H., Pulleyblank, W. R., and Schrijver, A, “Combinatorial

Optimization,” John Wiley & Sons, Inc, New York, NY, 1998.

[8] S. Yang, "Logic Synthesis and Optimization Benchmarks, Version 3.0," Tech. Report,

Microelectronics Center of North Carolina, 1991.

[9] ABC: A System for Sequential Synthesis and Verification. (n.d.). Berkeley Logic Synthesis

and Verification Group. Retrieved November 7, 2014, from

http://www.eecs.berkeley.edu/~alanmi/abc/.

[10] Binoy Nambiar and Jigisha Patel, “On the Algebraic Relationship Between Boolean

Functions and their Properties in Karnaugh-Map”, International Journal of Electronics and

Communication Engineering & Technology (IJECET), Volume 4, Issue 5, 2013,

pp. 225 - 236, ISSN Print: 0976- 6464, ISSN Online: 0976 –6472.

AUTHOR’S DETAIL

Vikul Gupta is a high school junior at Oregon Episcopal School (OES) in

Portland, Oregon, one of the best private high schools in the United States,

according to Niche.com. He excels in mathematics and science fields, and

enjoys advanced research at Portland Oregon Logic Optimization (POLO)

group at the Portland State University, Portland, Oregon. He was a national

finalist for the international BioGENEius competition and was ranked in the

top ten at an Oregon mathematics tournament. He founded his school's debate

club, is in charge of the school's Speech and Debate team, and helps coach the novice debaters. He is

also on the varsity swim team and swims with a competitive club.
