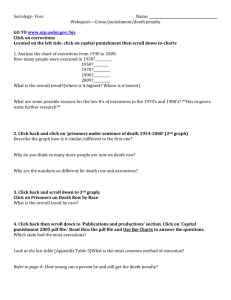

Uses and Abuses of Empirical Evidence in the Death Penalty Debate

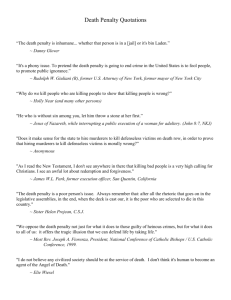
