An Introductory Crash-Course in Machine Learning

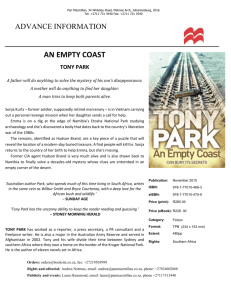

Tony Jebara, Columbia University

Machine Learning: What/Why

Statistical Data-Driven Computational Models

Real domains (vision, speech, climate):

• have no E=MC²

• are noisy, complex, nonlinear

• have many variables

• may be non-deterministic

• have incomplete/approximate models

Bottom-Up: build models from data

Why? Big data is everywhere: sensors, audio, video, internet…

[Figure: bottom-up stack, listed from bottom to top: Sensors, Data, Representation, Model, Criterion, Algorithm, Inference, Application]

Machine Learning Applications

• ML: Interdisciplinary (CS, Math, Stats, Physics, OR, Neuro)

• Data-driven approach

• When domain knowledge is too hard to encode manually

Speech Recognition (HMMs, ICA)

Computer Vision (face rec, digits, MRFs, super-res)

Time Series Prediction (weather, finance)

Genomics (micro-arrays, SVMs, splice-sites)

NLP and Parsing (HMMs, CRFs, Google)

Text and InfoRetrieval (docs, google, spam, TSVMs)

Medical (QMR-DT, informatics, ICA)

Behavior/Games (reinforcement, gammon, gaming)

Machine Learning Tasks

SUPERVISED

• Classification y=sign(f(x))

• Regression y=f(x)

UNSUPERVISED

• Dimensionality Reduction

• Clustering

• Probabilistic Modeling p(x)

• Detection p(x)<t

Regression

• Start with iid training dataset

$$\mathcal{X} = \{(x_1,y_1), (x_2,y_2), \ldots, (x_N,y_N)\}, \qquad x \in \mathbb{R}^D = \begin{bmatrix} x(1) \\ x(2) \\ \vdots \\ x(D) \end{bmatrix}, \qquad y \in \mathbb{R}^1$$

• Given N (input, output) training pairs

• Find a function f(x) to predict y from x

• Example: predict the price of a house in dollars y given input x = [#rooms; latitude; longitude; …]

• Need: a) Way to evaluate how good a fit we have

b) Class of functions in which to search for f(x)

[Figure: scatter plot of training points, y versus x]

Empirical Risk Minimization (ERM)

• Idea: minimize ‘loss’ on the training data set

• Empirical: use the training set to find the best fit

• Define a loss function of how well we fit a single point:

$$L\big(y, f(x)\big)$$

• Empirical Risk = the average loss over the dataset

$$R_{emp} = \frac{1}{N} \sum_{i=1}^{N} L\big(y_i, f(x_i)\big)$$

• Simplest loss: squared error from y value

$$L_{sqr}\big(y_i, f(x_i)\big) = \frac{1}{2} \big(y_i - f(x_i)\big)^2$$

• Another example loss: absolute error

$$L_{abs}\big(y_i, f(x_i)\big) = \big|\, y_i - f(x_i) \,\big|$$
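The two losses and the empirical risk can be sketched in a few lines of plain Python. This is only an illustration: the toy data and the candidate predictor f(x) = 2x are made up, and the function names are hypothetical.

```python
def squared_loss(y, prediction):
    """L_sqr = 0.5 * (y - f(x))^2."""
    return 0.5 * (y - prediction) ** 2


def absolute_loss(y, prediction):
    """L_abs = |y - f(x)|."""
    return abs(y - prediction)


def empirical_risk(loss, f, xs, ys):
    """Average loss of predictor f over the N training pairs."""
    return sum(loss(y, f(x)) for x, y in zip(xs, ys)) / len(xs)


# Hypothetical toy data and a hypothetical candidate predictor f(x) = 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 2.0, 4.0, 8.0]
f = lambda x: 2.0 * x

risk_sqr = empirical_risk(squared_loss, f, xs, ys)
risk_abs = empirical_risk(absolute_loss, f, xs, ys)
```

Only the last point is mispredicted (by 2), so both risks depend on that single residual; the squared loss would penalize a larger residual much more heavily than the absolute loss.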

Linear Function Classes

• The simplest class of functions to search over:

$$f(x;\theta) = \sum_{d=1}^{D} \theta_d\, x(d) + \theta_0 = \theta^T x$$

(the compact form θᵀx assumes a constant 1 is prepended to x so that θ0 is part of θ)

• Plug into Empirical Risk

$$R_{emp}(\theta) = \frac{1}{N} \sum_{i=1}^{N} L\big(y_i, f(x_i;\theta)\big)$$

• If Remp(θ) is convex, its minimum occurs when it is flat:

$$\nabla_\theta R_{emp} = 0$$

• If we can’t solve gradient=0 in closed form, use numerical methods like gradient descent

Least Squares Regression

• Have N apartments each with D measurements

• Form a data matrix X and an outputs vector y of prices

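$$X = \begin{bmatrix} 1 & x_1(1) & \cdots & x_1(D) \\ \vdots & \vdots & & \vdots \\ 1 & x_N(1) & \cdots & x_N(D) \end{bmatrix} \qquad y = \begin{bmatrix} y_1 \\ \vdots \\ y_N \end{bmatrix}$$

• Empirical risk is

$$R_{emp}(\theta) = \frac{1}{2N} \sum_{i=1}^{N} \big(y_i - \theta^T x_i\big)^2 = \frac{1}{2N} \big\| y - X\theta \big\|^2$$

• Setting gradient=0:

$$\theta^* = \big(X^T X\big)^{-1} X^T y \approx \mathrm{pinv}(X)\, y \approx X \backslash y$$

• Use

$$f(x;\theta^*) = x^T \theta^*$$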
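A minimal NumPy sketch of the closed-form solution follows. The apartment measurements and the "true" parameters are invented for illustration, and NumPy is an assumed dependency; `np.linalg.pinv` and `np.linalg.lstsq` play the roles of `pinv(X) y` and `X \ y`.

```python
import numpy as np

# Hypothetical data: N = 5 apartments, D = 2 measurements each.
features = np.array([[1.0, 2.0], [2.0, 0.0], [3.0, 1.0], [4.0, 3.0], [5.0, 5.0]])
true_theta = np.array([10.0, 3.0, -2.0])  # [theta_0, theta_1, theta_2], made up

# Prepend the constant-1 column so theta_0 acts as the offset.
X = np.hstack([np.ones((features.shape[0], 1)), features])
y = X @ true_theta  # noise-free prices, so the fit should recover true_theta

# Normal equations: solve (X^T X) theta = X^T y instead of forming an inverse.
theta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Equivalent and numerically more robust routes.
theta_pinv = np.linalg.pinv(X) @ y
theta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)


def predict(x, theta):
    """f(x; theta) = x^T theta, with the constant 1 prepended to x."""
    return np.concatenate([[1.0], x]) @ theta
```

Solving the linear system directly is usually preferred over computing the inverse explicitly; when XᵀX is singular or badly conditioned, the pseudo-inverse or `lstsq` route is the safer choice.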

Evaluating Our Learned Function

• We minimized empirical risk to get θ*

• How well does f(x;θ*) perform on future data?

• It should Generalize and have low True Risk:

$$R_{true}(\theta) = \int P(x,y)\, L\big(y, f(x;\theta)\big)\, dx\, dy$$

• Can’t compute true risk, instead use Testing Empirical Risk

• We split data into training and testing portions

$$\{(x_1,y_1), \ldots, (x_N,y_N)\} \qquad \{(x_{N+1},y_{N+1}), \ldots, (x_{N+M},y_{N+M})\}$$

• Find θ* with training data:

$$R_{train}(\theta) = \frac{1}{N} \sum_{i=1}^{N} L\big(y_i, f(x_i;\theta)\big)$$

• Evaluate it with testing data:

$$R_{test}(\theta) = \frac{1}{M} \sum_{i=N+1}^{N+M} L\big(y_i, f(x_i;\theta)\big)$$

Overfitting

• Overfitting: get misleadingly low Rtrain(θ*) and high Rtest(θ*)

• High dimensional problems often lead to overfitting

• Adding more features (extra columns in X) never increases Rtrain(θ*), typically reduces it, and eventually causes overfitting

• If we have more dimensions than data, can get Rtrain(θ*)=0

[Figure: Risk versus D, with curves Rtrain(θ*) and Rtest(θ*) and the underfitting and overfitting regimes marked]

Regularized Risk Minimization

• Empirical Risk Minimization gave overfitting & underfitting

• Apply a penalty for using too many theta values

• This gives us the Regularized Risk

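$$R_{regularized}(\theta) = R_{empirical}(\theta) + \mathrm{Penalty}(\theta) = \frac{1}{N} \sum_{i=1}^{N} L\big(y_i, f(x_i;\theta)\big) + \frac{\lambda}{2} \|\theta\|^2$$

• Solution for l2-Regularized Risk with Least Squares Loss:

$$\nabla_\theta R_{regularized} = 0 \;\Rightarrow\; \nabla_\theta \left( \frac{1}{2N} \| y - X\theta \|^2 + \frac{\lambda}{2} \|\theta\|^2 \right) = 0$$

$$\theta^* = \big(X^T X + \lambda I\big)^{-1} X^T y$$

(expanding the gradient gives a factor N multiplying λ; it is absorbed into λ here)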
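The regularized closed form can be sketched with NumPy as below. The data are randomly generated for illustration, `ridge_fit` is a hypothetical helper name, and, as a simplifying assumption, every coordinate of θ (including any offset term) is penalized.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Solve (X^T X + lam * I) theta = X^T y for theta."""
    D = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(D), X.T @ y)


# Hypothetical data: N = 20 points, D = 5 features, small additive noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 5))
y = X @ np.array([1.0, -2.0, 0.0, 0.5, 3.0]) + 0.1 * rng.normal(size=20)

theta_erm = ridge_fit(X, y, lam=0.0)      # lam = 0 reduces to plain least squares
theta_small = ridge_fit(X, y, lam=1.0)
theta_large = ridge_fit(X, y, lam=100.0)  # heavy penalty shrinks theta toward 0
```

Increasing λ shrinks the norm of θ*, which is the sense in which the penalty favors simpler solutions.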

Regularized Risk Minimization

• Have D=16 features, try minimizing Rregularized(θ) to get θ*

• Try minimizing with different λ, note that λ=0 is like ERM

Crossvalidation

• Try fitting with different lambda regularization levels

• Select lambda which gives lowest Rtest(θ*)

• Lambda measures simplicity of the model

• Models with low lambda are more flexible

[Figure: Risk versus 1/λ, with curves Rtrain(θ*) and Rtest(θ*), the underfitting and overfitting regimes, and the best lambda marked]
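The selection procedure can be sketched as a loop over candidate λ values with a single held-out split. Everything concrete here is an assumption for illustration: the synthetic data, the grid of λ values, and the helper names `ridge_fit` and `risk`.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Regularized least squares: solve (X^T X + lam * I) theta = X^T y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


def risk(X, y, theta):
    """Average squared-error loss, 1/(2M) * ||y - X theta||^2."""
    return 0.5 * np.mean((y - X @ theta) ** 2)


# Hypothetical data: D = 16 features, only the first three matter.
rng = np.random.default_rng(1)
N, M, D = 30, 200, 16
theta_true = np.zeros(D)
theta_true[:3] = [2.0, -1.0, 0.5]
X_train = rng.normal(size=(N, D))
X_test = rng.normal(size=(M, D))
y_train = X_train @ theta_true + rng.normal(size=N)
y_test = X_test @ theta_true + rng.normal(size=M)

lambdas = [0.0, 0.01, 0.1, 1.0, 10.0, 100.0]
results = []  # (lambda, R_train, R_test) for each candidate
for lam in lambdas:
    theta = ridge_fit(X_train, y_train, lam)
    results.append((lam, risk(X_train, y_train, theta), risk(X_test, y_test, theta)))

# Pick the lambda with the lowest held-out risk.
best_lambda = min(results, key=lambda r: r[2])[0]
```

The training risk can only grow as λ grows, so it cannot be used to choose λ; the held-out risk is what exposes the trade-off. In practice the data used to choose λ is often kept separate from the final test set so that the reported test risk stays unbiased.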

From Regression To Classification

• Classification is another important learning problem

Regression:

$$\mathcal{X} = \{(x_1,y_1), (x_2,y_2), \ldots, (x_N,y_N)\}, \qquad x \in \mathbb{R}^D, \qquad y \in \mathbb{R}^1$$

Classification:

$$\mathcal{X} = \{(x_1,y_1), (x_2,y_2), \ldots, (x_N,y_N)\}, \qquad x \in \mathbb{R}^D, \qquad y \in \{0,1\}$$

• E.g. Given x = [tumor size, tumor density], predict y in {benign, malignant}

• Should we solve this as a least squares regression problem?

[Figure: scatter plot of two classes of points, marked x and O]

Logistic Regression

• Given a classification problem with binary outputs

$$\mathcal{X} = \{(x_1,y_1), (x_2,y_2), \ldots, (x_N,y_N)\}, \qquad x \in \mathbb{R}^D, \qquad y \in \{0,1\}$$

• Use this function and output 1 if f(x)>0.5 and 0 otherwise

$$f(x;\theta) = \big(1 + \exp(-\theta^T x)\big)^{-1}$$

• Instead of squared loss, use Logistic Loss (the negative log-likelihood of the label)

$$L_{log}\big(y_i, f(x_i;\theta)\big) = -\,y_i \log f(x_i;\theta) - (1 - y_i) \log\big(1 - f(x_i;\theta)\big)$$

• The resulting method is called Logistic Regression.

• But Empirical Risk Minimization has no closed-form sol’n:

$$R_{emp}(\theta) = -\frac{1}{N} \sum_{i=1}^{N} \Big[ y_i \log f(x_i;\theta) + (1 - y_i) \log\big(1 - f(x_i;\theta)\big) \Big]$$

Gradient Descent

• Useful when we can’t get minimum solution in closed form

• Gradient points in direction of fastest increase

• Take step in the opposite direction!

• Gradient Descent Algorithm

choose scalar step size η

initialize t = 0 and θ_0 = small random vector

while not converged {

θ_{t+1} = θ_t − η ∇θ Remp(θ_t)

t = t + 1

}

• For appropriate η, this will converge to a local minimum
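As a sketch of the algorithm above, the following plain-Python code runs gradient descent on the logistic-regression empirical risk (taken as the average negative log-likelihood). The toy data, step size, and fixed iteration count are assumptions; a fixed number of steps stands in for a real convergence test.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_risk(theta, xs, ys):
    """Average negative log-likelihood over the dataset."""
    total = 0.0
    for x, y in zip(xs, ys):
        f = sigmoid(sum(t * xd for t, xd in zip(theta, x)))
        total -= y * math.log(f) + (1 - y) * math.log(1.0 - f)
    return total / len(xs)


def logistic_gradient(theta, xs, ys):
    """Gradient of the risk: (1/N) * sum_i (f(x_i) - y_i) * x_i."""
    grad = [0.0] * len(theta)
    for x, y in zip(xs, ys):
        err = sigmoid(sum(t * xd for t, xd in zip(theta, x))) - y
        for d, xd in enumerate(x):
            grad[d] += err * xd / len(xs)
    return grad


def gradient_descent(xs, ys, eta=0.5, steps=2000, seed=0):
    rng = random.Random(seed)
    theta = [rng.uniform(-0.01, 0.01) for _ in xs[0]]  # small random vector
    for _ in range(steps):
        grad = logistic_gradient(theta, xs, ys)
        theta = [t - eta * g for t, g in zip(theta, grad)]  # step against the gradient
    return theta


# Hypothetical 1-D data with a constant 1 prepended; the classes overlap,
# so the minimizer is finite.
xs = [[1.0, -2.0], [1.0, -1.0], [1.0, -0.5], [1.0, 0.5], [1.0, 1.0], [1.0, 2.0]]
ys = [0, 0, 1, 0, 1, 1]
theta = gradient_descent(xs, ys)
```

Because this risk is convex, runs started from different small random vectors should end at the same θ, up to numerical tolerance.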

Logistic Regression

• Logistic regression gives better classification performance than least squares regression

• Its empirical risk is convex, so gradient descent (with an appropriate η) always converges to the same solution

Perceptrons

• Classification scenario once again but consider +1, -1 labels

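$$\mathcal{X} = \{(x_1,y_1), (x_2,y_2), \ldots, (x_N,y_N)\}, \qquad x \in \mathbb{R}^D, \qquad y \in \{-1,1\}$$

• Use linear function, output 1 if f(x)>0 and -1 otherwise

$$f(x;\theta) = \theta^T x$$

• Classification Loss is nice but makes ERM hard

$$L_{cls}\big(y, f(x;\theta)\big) = \mathrm{step}\big(-y f(x;\theta)\big)$$

[Figure: classification loss L plotted against yf(x;θ)]

• So, we’ll instead consider the Perceptron Loss

$$L_{per}\big(y, f(x;\theta)\big) = \begin{cases} -y f(x;\theta) & \text{if } y f(x;\theta) < 0 \\ 0 & \text{otherwise} \end{cases}$$

[Figure: perceptron loss L plotted against yf(x;θ)]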

Perceptrons

• Perceptron empirical risk:

$$R^{per}_{emp}(\theta) = -\frac{1}{N} \sum_{i \in \text{misclassified}} y_i\, \theta^T x_i$$

• Use gradient descent... or approximate the gradient on one random point via Stochastic Gradient Descent

initialize t = 0 and θ_0 = 0

while not converged {

pick i ∈ {1,…,N}

if (y_i x_iᵀ θ_t ≤ 0) {

θ_{t+1} = θ_t + y_i x_i

t = t + 1

}}

(the test uses ≤ so that updates can start from θ_0 = 0, where y_i x_iᵀ θ_0 = 0 for every point)

• SGD only needs O(D) per iteration

• If all ||xi|| < r and an ideal θ* achieves a margin γ, then SGD converges in

$$t \le r^2\, \gamma^{-2}\, \|\theta^*\|^2$$
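The perceptron update can be sketched in plain Python as follows. The toy data are invented and linearly separable, and two choices are assumptions of this sketch: points are visited in shuffled passes rather than sampled independently, and "converged" means one full pass with no mistakes.

```python
import random


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def perceptron_sgd(xs, ys, max_epochs=100, seed=0):
    """Return (theta, number of updates) after stochastic perceptron training."""
    rng = random.Random(seed)
    theta = [0.0] * len(xs[0])
    updates = 0
    order = list(range(len(xs)))
    for _ in range(max_epochs):
        rng.shuffle(order)
        mistakes = 0
        for i in order:
            # Update only on points that are misclassified (or on the boundary).
            if ys[i] * dot(xs[i], theta) <= 0:
                theta = [t + ys[i] * xd for t, xd in zip(theta, xs[i])]
                updates += 1
                mistakes += 1
        if mistakes == 0:  # a clean pass: every point is classified correctly
            break
    return theta, updates


# Hypothetical separable data, with a constant 1 prepended to each input.
xs = [[1.0, 2.0, 1.0], [1.0, 1.0, 3.0], [1.0, 3.0, 3.0],
      [1.0, -1.0, -2.0], [1.0, -2.0, -1.0], [1.0, -3.0, -3.0]]
ys = [1, 1, 1, -1, -1, -1]
theta, updates = perceptron_sgd(xs, ys)
```

For this data θ* = [0, 1, 1] separates the classes with margin γ = 3, and the largest squared input norm is r² = 19, so the bound gives at most 19 · 2 / 9 ≈ 4.2 updates regardless of the visiting order.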

Perceptrons

• Were developed in the 1950s

Regularized Risk for Classification

• As with regression, Empirical Risk Minimization can overfit

• ERM finds minimum training (not testing) error

• For non-separable problems, Perceptron does not converge

• The Perceptron can stop at a bunch of different solutions ⇒ … a better solution: SVMs

Bounding the True Risk

• ERM may do better on training than on testing!

$$R(\hat\theta) \ge R_{emp}(\hat\theta)$$

• Idea: add a regularizer to Remp(θ)

• Use a penalty or regularizer C(θ) which favors simpler models:

$$J(\theta) = R_{emp}(\theta) + C(\theta)$$

• If R(θ) ≤ J(θ) we have a bound called the guaranteed risk

• After training, guarantees future error rate is ≤ min_θ J(θ)

Bound the True Risk with VC

• Vapnik: with probability 1-η the following bound holds:

$$R(\theta) \le J(\theta) = R_{emp}(\theta) + \frac{2h \log\!\left(\frac{2eN}{h}\right) + 2 \log\!\left(\frac{4}{\eta}\right)}{N} \left( 1 + \sqrt{1 + \frac{N R_{emp}(\theta)}{h \log\!\left(\frac{2eN}{h}\right) + \log\!\left(\frac{4}{\eta}\right)}} \right)$$

h = Vapnik-Chervonenkis (VC) dimension

$$h \le \min\left\{ \left\lceil \frac{4r^2}{m^2} \right\rceil,\; d \right\} + 1$$

where m = margin, r = radius, d = dimensionality

[Figure: data enclosed in a sphere of diameter 2r and separated with margin m]

• Instead of ERM, do Structural Risk Minimization: minimize a bound J(θ) on true risk to find θ*
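To get a feel for how the bound behaves, it can be evaluated numerically. The sketch below transcribes the two formulas as written above, using natural logarithms (an assumption); the function names and the numbers plugged in are hypothetical.

```python
import math


def vc_dimension_bound(r, m, d):
    """Upper bound on h: min(ceil(4 r^2 / m^2), d) + 1."""
    return min(math.ceil(4.0 * r ** 2 / m ** 2), d) + 1


def guaranteed_risk(r_emp, h, n, eta):
    """Bound J(theta) on the true risk, holding with probability 1 - eta."""
    complexity = h * math.log(2.0 * math.e * n / h) + math.log(4.0 / eta)
    return r_emp + (2.0 * complexity / n) * (
        1.0 + math.sqrt(1.0 + n * r_emp / complexity)
    )


# Hypothetical numbers: radius 1, margin 0.5, 100 input dimensions.
h_margin = vc_dimension_bound(r=1.0, m=0.5, d=100)   # limited by the margin term
h_dim = vc_dimension_bound(r=1.0, m=0.01, d=100)     # limited by the dimensionality

bound_large_margin = guaranteed_risk(r_emp=0.05, h=h_margin, n=60000, eta=0.05)
bound_small_margin = guaranteed_risk(r_emp=0.05, h=h_dim, n=60000, eta=0.05)
```

With the same empirical risk, the larger margin gives the smaller h and therefore the tighter bound, which is the motivation for maximizing the margin.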

Support Vector Machines

• An SVM maximizes margin m while classifying perfectly

• This reduces VC dimension while minimizing empirical risk

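• These constraints ensure we get zero empirical risk:

$$w^T x_i + b \ge +1 \quad \forall\, y_i = +1$$

$$w^T x_i + b \le -1 \quad \forall\, y_i = -1$$

• Margin of an SVM is

$$m = 2\, \|w\|^{-1}$$

• So, solve this quadratic program (QP):

$$\min_{w,b}\; \frac{1}{2} \|w\|^2 \quad \text{subject to} \quad y_i \big(w^T x_i + b\big) - 1 \ge 0$$

[Figure: separating hyperplane with margin m; loss L plotted against yf(x;θ)]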

Support Vector Machines

• For non-separable problems, add slack variables to the QP

• Penalize slacks with a scalar C (found via crossvalidation)

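$$L_P:\; \min_{w,b,\xi}\; \frac{1}{2} \|w\|^2 + C \sum_i \xi_i \quad \text{s.t.} \quad y_i \big(w^T x_i + b\big) - 1 + \xi_i \ge 0, \quad \xi_i \ge 0$$

• Instead of the primal QP, we can solve the dual QP

$$L_D:\; \max_{\alpha}\; \sum_i \alpha_i - \frac{1}{2} \sum_{i,j} \alpha_i \alpha_j y_i y_j\, x_i^T x_j \quad \text{s.t.} \quad \sum_i \alpha_i y_i = 0 \;\text{ and }\; \alpha_i \in [0, C]$$

• Classifier is the sign of

$$f(x) = \sum_i \alpha_i y_i\, x^T x_i + b$$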

Kernelized SVMs

• The dual only involves inner products between data-points

$$L_D:\; \max_{\alpha}\; \sum_i \alpha_i - \frac{1}{2} \sum_{i,j} \alpha_i \alpha_j y_i y_j\, x_i^T x_j \quad \text{s.t.} \quad \sum_i \alpha_i y_i = 0 \;\text{ and }\; \alpha_i \in [0, C]$$

$$f(x) = \sum_i \alpha_i y_i\, x^T x_i + b$$

• To make the SVM handle non-linear decision boundaries, replace any inner-product with a general kernel function

$$x_i^T x_j \;\rightarrow\; k(x_i, x_j)$$

for example

$$k(x_i, x_j) = \big(x_i^T x_j + 1\big)^3$$

• We can use (almost) any function which accepts two data points and outputs a scalar; this gives the kernelized SVM

Kernelized SVMs

• Polynomial kernel:

$$k(x_i, x_j) = \big(x_i^T x_j + 1\big)^p$$

• Radial basis function kernel:

$$k(x_i, x_j) = \exp\left( -\frac{1}{2\sigma^2} \| x_i - x_j \|^2 \right)$$

[Figure: example decision boundaries, panels Polynomial Kernel and RBF kernel]

• Least-squares, logistic-regression, perceptron are also kernelizable
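The two kernels and the kernelized decision function can be sketched in plain Python. The function names are hypothetical, and the α values in the usage example are made up to satisfy the dual constraints; they are not the result of solving the QP.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def polynomial_kernel(xi, xj, p=3):
    """k(xi, xj) = (xi^T xj + 1)^p."""
    return (dot(xi, xj) + 1.0) ** p


def rbf_kernel(xi, xj, sigma=1.0):
    """k(xi, xj) = exp(-||xi - xj||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(xi, xj))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def decision_function(x, support_xs, support_ys, alphas, b, kernel):
    """f(x) = sum_i alpha_i y_i k(x, x_i) + b; the classifier is sign(f(x))."""
    return sum(
        a * y * kernel(x, xi) for a, y, xi in zip(alphas, support_ys, support_xs)
    ) + b


# Hypothetical "trained" values: two support points with alphas that
# satisfy sum_i alpha_i y_i = 0.
support_xs = [[1.0, 1.0], [-1.0, -1.0]]
support_ys = [1, -1]
alphas = [0.5, 0.5]

score_pos = decision_function([1.0, 1.0], support_xs, support_ys, alphas, 0.0, rbf_kernel)
score_neg = decision_function([-1.0, -1.0], support_xs, support_ys, alphas, 0.0, rbf_kernel)
```

Swapping `rbf_kernel` for `polynomial_kernel` changes the shape of the decision boundary without touching the rest of the classifier, which is the point of the kernel substitution.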

Relative Margin Machines

• Instead of max margin, can do max relative margin:

$$\text{SVM}:\; \min_{w,b}\; \frac{1}{2} \|w\|^2 \quad \text{s.t.} \quad y_i \big(w^T x_i + b\big) \ge 1$$

$$\text{RMM}:\; \min_{w,b}\; \frac{1}{2} \|w\|^2 \quad \text{s.t.} \quad B \ge y_i \big(w^T x_i + b\big) \ge 1$$

• Similar QP algorithm

• Gives better generalization (Shivaswamy & Jebara 2010)

Classifying Digits

• Classify MNIST digits

• Train on 60,000 pairs

• Test on 10,000

| Algorithm | Error Rate |
|---|---|
| Logistic Regression | 12.0% |
| SVM (RBF) | 1.46% |
| SVM (Poly 4) | 1.83% |
| SVM (Poly 7) | 1.36% |
| RMM (RBF) | 1.44% |
| RMM (Poly 4) | 1.56% |
| RMM (Poly 7) | 1.15% |

Dimensionality Reduction

• An unsupervised learning problem

• Given only high-dimensional input data (no target outputs)

$$\mathcal{X} = \{x_1, x_2, \ldots, x_N\}, \qquad x \in \mathbb{R}^D$$

• Learn low-dimensional outputs (where d<<D)

$$\mathcal{Y} = \{y_1, y_2, \ldots, y_N\}, \qquad y \in \mathbb{R}^d$$

Principal Components Analysis

• Idea: instead of writing data in all its dimensions, only write it as mean + steps along one direction

$$\begin{bmatrix} x_i(1) \\ x_i(2) \end{bmatrix} \approx \begin{bmatrix} \mu(1) \\ \mu(2) \end{bmatrix} + y_i \begin{bmatrix} v(1) \\ v(2) \end{bmatrix}$$

• More generally, keep a subset of dimensions d from D (i.e. 2 of 3)

$$x_i \approx \mu + \sum_{j=1}^{d} y_i(j)\, v_j$$

• We reduce dimensionality: x_i → y_i

• Optimal directions: along eigenvectors of covariance

• Which directions to keep: highest eigenvalues (variances)

Principal Components Analysis

• With eigenvectors, mean and coefficients, reconstruct x:

$$x_i \approx \mu + \sum_{j=1}^{d} y_i(j)\, v_j$$

• Get eigenvectors and eigenvalues of DxD covariance matrix

$$\Sigma = V \Lambda V^T$$

• Higher eigenvalues are higher variance, use the top d ones

$$\lambda_1 \ge \lambda_2 \ge \lambda_3 \ge \lambda_4 \ge \ldots \ge 0$$

• To compute the coefficients use:

$$y_i(j) = (x_i - \mu)^T v_j$$

• Can also get the eigenvectors from the NxN Gram matrix:

$$A_{ij} = x_i^T x_j$$

…these inner-products can be kernelized
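The PCA recipe above (mean, covariance eigenvectors, coefficients, reconstruction) can be sketched with NumPy. The function names and the toy data, points lying exactly on a line in 3-D, are assumptions made for illustration.

```python
import numpy as np


def pca(X, d):
    """Return mean, top-d eigenvectors (columns), their eigenvalues, coefficients."""
    mu = X.mean(axis=0)
    centered = X - mu
    cov = centered.T @ centered / X.shape[0]   # D x D covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:d]      # indices of the d largest
    V = eigvecs[:, order]
    Y = centered @ V                           # y_i(j) = (x_i - mu)^T v_j
    return mu, V, eigvals[order], Y


def reconstruct(mu, V, Y):
    """x_i ~ mu + sum_j y_i(j) v_j."""
    return mu + Y @ V.T


# Hypothetical data: 11 points on a line in 3-D, so one direction suffices.
direction = np.array([1.0, 2.0, 2.0]) / 3.0    # unit vector
offset = np.array([5.0, -1.0, 0.5])
t = np.linspace(-1.0, 1.0, 11)
X = offset + np.outer(t, direction)

mu, V, top_eigvals, Y = pca(X, d=1)
X_hat = reconstruct(mu, V, Y)
```

Since the data vary along a single direction, keeping d = 1 reconstructs every point exactly; with noisy data the reconstruction error would equal the variance in the discarded directions.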

Principal Components Analysis

• Related to spectral graph embedding (Hall 70, Schölkopf 98)

• Just embed with Y=leading eigenvectors of A, where

$$A_{ij} = x_i^T x_j$$

• But, for nonlinear data PCA fails

• Problem: PCA preserves all distances

• This requires many dimensions for Y

• Solution: sparsify graph of pairwise distances between points

• Preserve only local distance edges (Weinberger & Saul 04)

Sparsifying graphs

• Given distance graph between all points find sparse subgraph

• There are $2^{n(n-1)/2}$ possible subgraphs!

Sparsifying graphs

• In O(n²) we could find k nearest neighbors

• Get a binary connectivity matrix C

$$A = \begin{bmatrix} 4 & 3 & 1 & 0 & 1 & 3 \\ 3 & 4 & 3 & 1 & 0 & 1 \\ 1 & 3 & 4 & 3 & 1 & 0 \\ 0 & 1 & 3 & 4 & 3 & 1 \\ 1 & 0 & 1 & 3 & 4 & 3 \\ 3 & 3 & 0 & 1 & 3 & 4 \end{bmatrix} \;\rightarrow\; C = \begin{bmatrix} 1 & 1 & 0 & 0 & 0 & 1 \\ 1 & 1 & 1 & 0 & 0 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1 & 1 & 0 \\ 0 & 0 & 0 & 1 & 1 & 1 \\ 1 & 0 & 0 & 0 & 1 & 1 \end{bmatrix}$$
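One way to build such a connectivity matrix is sketched below in plain Python. Several things are assumptions of this sketch: A is treated as a similarity matrix (larger means closer), each point is connected to itself, the result is symmetrized, and the 6 × 6 values are an illustrative symmetric ring-like matrix rather than data from the slides.

```python
def knn_connectivity(A, k):
    """Binary matrix C: C[i][j] = 1 if j is i itself or among i's k most similar points.

    The matrix is symmetrized, so an edge is kept if either endpoint selects it.
    """
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        C[i][i] = 1
        # Rank the other points by similarity to i, most similar first.
        others = sorted(
            (j for j in range(n) if j != i), key=lambda j: A[i][j], reverse=True
        )
        for j in others[:k]:
            C[i][j] = 1
            C[j][i] = 1
    return C


# Illustrative symmetric similarity matrix for six points arranged in a ring.
A = [
    [4, 3, 1, 0, 1, 3],
    [3, 4, 3, 1, 0, 1],
    [1, 3, 4, 3, 1, 0],
    [0, 1, 3, 4, 3, 1],
    [1, 0, 1, 3, 4, 3],
    [3, 1, 0, 1, 3, 4],
]
C = knn_connectivity(A, k=2)
```

Sorting each row makes this O(n² log n); a linear-time selection of the k largest entries per row would reach the O(n²) cost quoted above.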

Semidefinite embedding

• Semidefinite embedding (SDE) improves spectral embedding

• Unfolds sparse graph by finding a large weight matrix K

• But K must preserve distances on sparse graph

• Given connectivity C, solve the SDP:

$$\max_{K \in \kappa}\; \mathrm{tr}(K), \qquad \kappa = \left\{ K \;:\; K \succeq 0, \;\; \sum_{ij} K_{ij} = 0, \;\; K_{ii} + K_{jj} - K_{ij} - K_{ji} = A_{ii} + A_{jj} - A_{ij} - A_{ji} \;\text{ if }\; C_{ij} = 1 \right\}$$

• Embed with eigenvectors of K instead of A

Semidefinite embedding

• SDE pulls apart pruned graph maximally while preserving pairwise distances if Cij=1

• SDE’s stretching of data improves spectral embedding

• Are we done? Is SDE the solution for all sparse graphs?

Semidefinite Embedding

• On general graphs, SDE unfolding can worsen visualization!

• Example: star graph…

[Figure: star graph embeddings and eigenvalue spectra, panels PCA and SDE]

• SDE is increasing dimensionality!

• Can we do better?

Minimum volume embedding

• Maximizing trace in SDE can be bad: it fans out some manifolds

• Minimum Volume Embedding (MVE) (Shaw & Jebara 07): unfold only in the two preserved dimensions, squash down in others

Minimum volume embedding

• To reduce dimension, drive energy into top (e.g. 2) dims

• Maximize fidelity, or % energy in top dims:

$$F(K) = \frac{\lambda_1 + \lambda_2}{\sum_i \lambda_i}$$

[Figure: two example embeddings, one with F(K) = 0.98 and one with F(K) = 0.29]
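Fidelity is straightforward to compute once the eigenvalues of K are available. The sketch below assumes the eigenvalues are given as a list of non-negative numbers; the function name and the example spectra are made up.

```python
def fidelity(eigenvalues, d=2):
    """Fraction of total spectral energy carried by the d largest eigenvalues."""
    top = sorted(eigenvalues, reverse=True)[:d]
    return sum(top) / sum(eigenvalues)


# Hypothetical spectra: one concentrated in two dimensions, one spread out.
concentrated = fidelity([5.0, 4.0, 0.5, 0.5])
spread_out = fidelity([1.0, 1.0, 1.0, 1.0])
```

A value near 1 means a 2-D plot loses almost nothing; a low value means much of the structure lives in dimensions that the visualization discards.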

Minimum volume embedding

• An iterative SDP:

$$\text{Fidelity} = \max_{K \in \kappa} \sum_i \alpha_i \lambda_i = \max_{K \in \kappa} \max_{V}\; \mathrm{tr}\Big( K \sum_i \alpha_i v_i v_i^T \Big) \quad \text{s.t.} \quad v_i^T v_j = \delta_{ij}$$

SVD for V

SDE’s SDP for K

Iterate SDP and SVD

Maximizes variational bound on original problem.

Fast monotonic convergence, code available.

Minimum volume embedding

• Star graph experiment visualization and spectra

• MVE converges in 5 iterations

[Figure: star graph embeddings and spectra, panels PCA, SDE, MVE]

Minimum volume embedding

• Collaboration network with spectra

[Figure: collaboration network embeddings and spectra, panels PCA, SDE, MVE]

Comparing Embeddings

• Evaluate fidelity or % energy in top 2 visualized dimensions

| | PCA | SDE | MVE |
|---|---|---|---|
| Star | 95.0% | 29.9% | 100.0% |
| Spiral | 45.8% | 99.9% | 99.9% |
| Twos | 18.4% | 88.4% | 97.8% |
| Faces | 31.4% | 83.6% | 99.2% |
| Network | 5.9% | 95.3% | 99.2% |

Comparing Embeddings

• ENSO Data (Lima, Lall, Jebara & Barnston 2009)

[Figure: embeddings of the ENSO data, panels PCA and SDE]
