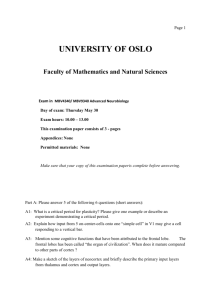

A Model of Computation and Representation in the Brain
