
ADVANCED DATA INFRASTRUCTURE

FOR SCIENTIFIC RESEARCH

------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Waseem Rehmat

Jari Veijalainen


Permission is granted to make and distribute copies of this document provided the copyright notice and this permission notice are preserved on all copies.

Permission is granted to copy and distribute modified versions of this document under conditions set by the authors, provided that the entire resulting derived work is referenced and includes the authors in its ownership.

Permission is granted to copy and distribute translations of this document into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the authors.


Table of contents

Acknowledgements
Summary
1. Introduction
1.1. Purpose
1.2. Scope
1.3. Process Flow
1.4. Documentation Flow
1.5. Project Phases
1.6. Stakeholders of Scientific Projects
2. Stakeholders' Analysis and Current Use Cases
2.1. Department of Music
2.2. Faculty of Sport and Health Sciences
3. Functional Requirements of the Solution (What Should the System Do?)
3.1. Dynamics of Planned Data Infrastructure in General
3.2. Key Addressable Areas of the Proposed Data Infrastructure
3.3. Objectives of the Planned Data Infrastructure
3.4. Challenges of the Planned Data Infrastructure
4. New Use Cases and Specifications
4.1. Actors of the Advanced Data Infrastructure
4.2. Links between Actors and Use Cases
4.3. Use Cases of the Proposed Structure
4.4. Conclusion


ACKNOWLEDGMENTS

The Data Infrastructure project involved professionals from diverse backgrounds and divisions of the University. This document could not have been completed and developed into a useful resource without the valuable contributions of the entire project team, which extends to departmental reference personnel, interviewees and stakeholders.

We wish to thank Mr. Aki Karjalainen (Development Manager at the Faculty of Sport and Health Sciences) for joining us from the start of the project documentation and for briefing us about current data handling practices at the Faculty of Sport and Health Sciences of the University of Jyväskylä. He also provided us with useful resources and links for a better understanding of the faculty's needs and expectations.

The vision of the proposed solution was elaborated by Professor Taru Lintunen. She was not only available for any queries but also contributed towards better visibility of the project path and of the stakeholders' expectations. We also thank Professor Sulin Cheng for her candid feedback during presentations of previous drafts.

The technology perspective of the project was consistently highlighted and brought to the table by Joonas Kesäniemi. We wish to thank him here for his contributions.

During the data gathering and interviewing phase of our project report, Prof. Lyytinen from the Department of Psychology referred us to Mr. Kenneth Eklund, who owns a similar solution at a different level. Our session with him about the project was informative, and he gave us valuable recommendations.

The Department of Music's perspective on the project was discussed with Tuomas Eerola, who guided us through the department's current data handling processes. Meetings with him were informative and productive.

Last but not least, we would like to thank the team members and professionals who contributed to refining the project in many ways. We are grateful to the University management and funding authorities for providing the opportunity and platform to excel in the project.

Warm Regards,

Jari Veijalainen

Waseem Rehmat


SUMMARY

The primary motive of this document is to understand the data infrastructure that exists at the University of Jyväskylä and to identify areas where improvement is possible. It also explores alternative solutions to data-related issues where optimal data handling has been found not to be in practice.

We have approached domain understanding and requirements elicitation in two ways. First, we developed a better understanding of the systems by interviewing and questioning stakeholders. Second, we analyzed standard modern data infrastructure management practices. Those practices were then adapted to fit our specific needs and the expectations of stakeholders.

This document describes the process flow as a cycle. We identify needs, define and elaborate a solution, and incorporate the solution into the system. We then evaluate the system with respect to the incorporated solution and identify needs again. Following this process flow lets us build a data infrastructure with continuous improvement built into it.

The current stakeholders are the Faculty of Sport and Health Sciences, the Department of Music, University administration, researchers and funding authorities. Based on their data needs, we have elicited data-related needs from the types of data needed through to data archiving, addressing as many of the stakeholders' data-related concerns as possible.

All project phases are defined in this document in a flow diagram, from basic requirements to technical requirements. This document will therefore serve as a base guide for the different project steps and for measuring progress against milestones.

Current data practices are depicted as use cases, diagrammed after discussing them with stakeholders and designated personnel of the departments concerned. On the basis of current practices, we identified the functional requirements of the system, including the functionality, usability, reliability, performance and supportability of the proposed solution. Key addressable issues of the solution are also identified to assist developers at the technical level.

In the last part of the document, we present proposed use cases and process flows. Each use case includes actors and the functions of each actor. This document includes 7 meta-level use cases and three detailed process flows.


1.0 Introduction:

1.1 Purpose:

The purpose of this document is to develop an understanding of the business and technical requirements that will be handled and solved by the project team of the DataInfra project.

This document will serve as a baseline for solution development, performance control and implementation.

This document will provide new members of the project (researchers, stakeholders, etc.) with a holistic view of the project and with ground information.

Through this requirements management (RM) documentation, we will measure the work, progress and activities of the project for consistency.

This RM document may be used to determine whether the end solutions provided are in line with stakeholders' expectations.

This requirements management document is a living document that will be updated and supplemented throughout the project lifecycle.

1.2 Scope:

The scope of this RM document includes:

Meta description of the project flow

What is the current state of data at the stakeholders' end?

Initial classification of data encountered in research projects

What needs to be done with the above data?

How shall it be done? (meta-level view)

What level of quality must be achieved in different phases of the project?


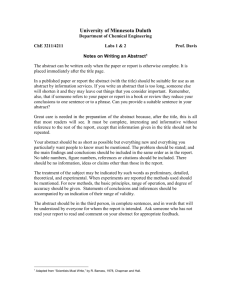

1.3 Envisioned Process Flow:

Requirements are identified and gathered directly from stakeholders. These requirements are then clarified, including any required decomposition, so that the technical specification development team understands them. The following diagram shows the generic process flow of DataInfra requirements management.

1.4 Documentation Flow:


1.5 Project Phases:

The flow diagram below details the flow of the project and the suggested phases to be followed at implementation.

Among the data resources possessed by the University of Jyväskylä, research data is the most valuable source. Currently, this data is stored in different data sources in various formats. At many locations the data grows exponentially, reaching volumes measured in gigabytes and terabytes.


With such an amount of data at the University, there is a rising need to find ways to relate data sources to each other (data synchronization and metadata management). Another primary need that arises with increasing research data is the management of property rights, ownership and consent. In current systems, these are either not managed in their entirety or managed in paper format.

The generic (meta) research process is depicted in the following diagram.

Generic Research Process


The above diagram shows a schematic research project flow from the idea to the end. If individual persons are not the object of the study, the steps in the middle (select test persons, gather their consents) can be skipped. Data gathering, storage and analysis are present in all projects, but in different forms. The source can be TV news, Internet sources, newspapers, scientific literature, etc. We especially observed cases where data about persons is gathered by various sensors or interviews. Sensors can also gather data directly or indirectly about phenomena in nature (experiments in physics, observations in astronomy). The collected digital raw data and the information it carries are then analysed by software (or manually), and new kinds of data are produced and stored. This cycle can repeat several times, and additional raw data can be fed in. The main results of a scientific project are scientific publications, patents, and perhaps also new substances, products, business models, methods, etc.

In this context we only consider project-based information that can be encoded into bit strings and stored and transmitted as bit strings, i.e. as data. Such data is produced in various phases of each real project. The types of data vary from project to project and from phase to phase.

The final phase is data archiving. Archiving can start while the project is still running, especially if the project lasts years, but most often it is performed as part of closing the project. It needs special treatment with respect to legal and ethical requirements, privacy preservation, access rights to the data, storage requirements, retention time, etc.

1.6 Stakeholders of Scientific Projects:

The stakeholders of the project are the following:

Faculties and Departments (case study: Faculties of Sports, Social Sciences and Humanities)

University Administration

Internal & External Researchers

Funding Authorities

This document is also intended to update stakeholders about our current standing in the DataInfra project.

Current practices linked with data creation and management at two faculties are described below under the following basic headings:


Amount of data

Type of data in terms of data format

Data ownership

Data privacy matters

Data protection practices

Consent handling (Object and first user)

Copyright issues

Data sharing

Data archiving and data backup

Current metadata

Retention time of data and disposal

Physical data storage mediums

2.0 Stakeholders' Analysis and Current Use Cases:

2.1 Department of Music:

The Department of Music is highly data intensive due to the nature of its activities. The data formats it inevitably creates and uses are high-quality sound and video files in presumably many formats. These include WAV, AIFF, WMA, MP3 and MP4, RA and RAM, MSV and AMR for audio, and MPG, MOV, WMV and RM for video.

Data ownership matters are handled on an individual basis. A mechanism exists for this, but it might need to be automated and brought under a structured flow. Data ownership and privacy are of prime importance at the department and must be taken into account when structuring any future data infrastructure. A structured consent handling mechanism is to be developed, including automated consent forms and their integration into the research data handling flow.

A basic, assumed practice linked with data creation is depicted in the flow diagram below.


The prerequisites of the above-mentioned data flow, including consent and ownership issues, are managed manually before research data creation. They are currently not directly linked with each data generation instance.
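The following minimal Python sketch illustrates what linking consent to each data generation instance could mean: a data instance can only be created while a valid consent exists, and the instance keeps a reference to that consent. The `ConsentRegistry` class, its fields and the validity rule (not withdrawn, not past `valid_until`) are hypothetical assumptions, not an existing departmental system.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

@dataclass
class Consent:
    # Hypothetical consent record for one subject in one study
    subject_id: str
    study_id: str
    valid_until: date
    withdrawn: bool = False

@dataclass
class DataInstance:
    # Every generated data item carries the consent it relies on
    instance_id: int
    subject_id: str
    study_id: str
    consent: Consent

@dataclass
class ConsentRegistry:
    consents: dict = field(default_factory=dict)   # (subject_id, study_id) -> Consent
    instances: list = field(default_factory=list)

    def register(self, consent: Consent) -> None:
        self.consents[(consent.subject_id, consent.study_id)] = consent

    def valid_consent(self, subject_id: str, study_id: str, on: date) -> Optional[Consent]:
        c = self.consents.get((subject_id, study_id))
        if c is None or c.withdrawn or on > c.valid_until:
            return None
        return c

    def create_instance(self, subject_id: str, study_id: str, on: date) -> DataInstance:
        # Refuse to create data unless a valid consent exists for this subject and study
        consent = self.valid_consent(subject_id, study_id, on)
        if consent is None:
            raise PermissionError(f"no valid consent for {subject_id} in {study_id}")
        inst = DataInstance(len(self.instances) + 1, subject_id, study_id, consent)
        self.instances.append(inst)
        return inst

reg = ConsentRegistry()
reg.register(Consent("S1", "music-01", date(2030, 1, 1)))
first = reg.create_instance("S1", "music-01", date(2025, 6, 1))
```

With this design, withdrawing or expiring a consent automatically blocks new data instances. Existing instances remain traceable to the consent they were created under.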

Multimedia data, consisting of alphanumeric, graphics, image, animation, video and audio objects, differs from conventional alphanumeric data in terms of semantics and viewing. From a viewing perspective, multimedia data is huge in size and has time-dependent characteristics that must be respected for flawless viewing and retrieval. This can be one of the challenges in structuring the advanced infrastructure. The architecture of the database system may consist of modules for query processing, process management, buffer management, file management, recovery and security, along with IPR issues.

Query processing illustrates how the required structure differs: in a multimedia database it has to work differently from a standard alphanumeric database. The results of a simple query may contain multimedia inputs and items, and the intended result may not be exact but similar to a certain degree. One example query could be "Show all results from archived videos where person X appears", where the query contains a picture of that person or a name connected to another database of static images. According to Jari Veijalainen, such a repository might also be implemented successfully without guaranteeing full serializability and recoverability for interleaved executions. However, unless enough is known in advance about the behavior and semantics of the transactions inserting, retrieving and updating the data, it is better to guarantee serializability and recoverability.
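The difference between exact matching and similarity-based retrieval can be sketched in Python. This minimal example assumes that each archived video segment and the query picture have already been reduced to numeric feature vectors, for instance by some face-recognition model outside the scope of this sketch. The vectors, segment names and the 0.9 threshold are hypothetical. Results are ranked by cosine similarity instead of being returned as exact matches.

```python
import math

def cosine(a: list, b: list) -> float:
    # Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def similar_segments(query_vec: list, index: dict, threshold: float = 0.9) -> list:
    """Return (segment_id, score) pairs at or above `threshold`, best match first."""
    scored = [(seg, cosine(query_vec, vec)) for seg, vec in index.items()]
    hits = [(seg, s) for seg, s in scored if s >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)

# Hypothetical feature vectors of archived video segments
index = {
    "video7@00:12": [0.9, 0.1, 0.0],
    "video7@03:40": [0.1, 0.9, 0.2],
    "video9@01:05": [0.8, 0.2, 0.1],
}
query = [1.0, 0.1, 0.0]   # hypothetical feature vector of the picture of person X
hits = similar_segments(query, index)   # two segments pass the threshold here
```

A production system would use an index structure for nearest-neighbour search instead of scanning every vector, but the result semantics (ranked, approximate) stay the same.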

At the moment, we are not aware of the amount of data possessed by the Department of Music or whether the data is stored in the same data store.

2.2 Faculty of Sport and Health Sciences:

The Faculty of Sport and Health Sciences deals with varied data types. The amount of data created and handled concurrently is not very high, but the high-quality video data created during events is intensive. The most common data is alphanumeric, consisting of evaluation results from different measurement machines for sports activities and health indicators. Other data sources are video captures used in different lab activities and, possibly, RFID tags linked to experiments. The amount of data currently available for data mining purposes is not very high. Specifically, a network-centric, ubiquitous, knowledge-intensive management system has to be created that is capable of providing indexed data useful for data mining.

Data created and used at the faculty by individuals is owned by the faculty. An object consent system is in place that maintains the needed consents in paper format. Automating the current consent and ownership handling would therefore mean digitizing paper records. However, the faculty has not indicated a pressing need for this.

Data privacy has not yet emerged as a concern, because the objects' health data and voluntary response results are used locally and the data-creating apparatus is not networked. However, in the networked system that is to be established and implemented, this can become a concern. Complete anonymity is not always possible, and the data is inevitably linked with the object.
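One common mitigation, sketched here in Python under stated assumptions, is pseudonymization. Direct identifiers are replaced by a keyed hash (HMAC-SHA256) before measurements enter the networked system, and the secret key is held separately, for instance by a data manager. This is not full anonymization, because whoever holds the key can re-link records. That is consistent with the observation above that complete anonymity is not always possible. The key value and the field names are hypothetical.

```python
import hashlib
import hmac

def pseudonym(subject_id: str, key: bytes) -> str:
    # Keyed hash of the identifier: stable for the same key, not linkable without it
    return hmac.new(key, subject_id.encode("utf-8"), hashlib.sha256).hexdigest()

def pseudonymize(record: dict, key: bytes) -> dict:
    # Drop direct identifiers and keep the measurement fields
    out = {k: v for k, v in record.items() if k not in ("subject_id", "name")}
    out["pseudonym"] = pseudonym(record["subject_id"], key)
    return out

KEY = b"hypothetical-secret-kept-by-data-manager"
rec = {"subject_id": "S-0042", "name": "Test Person", "vo2max": 48.2}
safe = pseudonymize(rec, KEY)
```

Because the same subject always maps to the same pseudonym under one key, repeated measurements of a person can still be linked for research without exposing the identity itself.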

As the amount of digital networked data grows, there will be a greater chance of data loss and misplacement through thumb drives, external hard drives and other storage media. To protect the secrecy of the data over its entire lifetime, we may need confidential ways to store data.

Another important aspect to take into account is that data created in the laboratory can be highly scattered and inconsistent due to its dynamic nature. Whether to approximate such data or handle it in other ways remains a topic of discussion.

In the current system, no archiving or backup issues have been noticed. Data backup is done on external drives, which serves the purpose in the current situation.

Each data creation event is currently considered a new data instance, even if the object is being monitored for the nth time. A basic faculty lab instance is diagrammed in the drawing below.


We can see that diagnostic results are taken directly from the diagnostic machine and, in the usual setting, readings are preserved on paper in a standardized manner. Through this practice, the researcher can handle any data type or convention changes manually. In a networked system, however, we need standardized data creation. For example, if an object records his/her body temperature on two machines, both diagnostic machines should present the results in either Fahrenheit or Celsius. Otherwise, we need conversion mediation so that the stored data is more consistent and minable.
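The conversion mediation mentioned above could be as simple as normalizing every reading to one canonical unit at insertion time. This Python sketch assumes Celsius as the canonical unit and that each machine reports its unit as "C" or "F". The function and machine names are illustrative only. It uses the standard relation °C = (°F − 32) × 5/9.

```python
def to_celsius(value: float, unit: str) -> float:
    """Normalize a temperature reading to degrees Celsius (the assumed canonical unit)."""
    unit = unit.strip().upper()
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0
    raise ValueError(f"unknown temperature unit: {unit!r}")

# Two hypothetical machines measuring the same subject in different units
readings = [("machine_a", 98.6, "F"), ("machine_b", 37.0, "C")]
normalized = [(machine, round(to_celsius(v, u), 2)) for machine, v, u in readings]
```

Both readings end up as 37.0 °C. An unknown unit is rejected instead of being stored silently.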


The diagram above shows the extended generic process with a maximum of three links (levels of network).

One possible concern is the motivation of the subject and the researcher to record and update data linked with a subject every time a measurement is made with the diagnostic machines. Some subjects may just want to monitor some statistics for instant reference and may not want such data to be updated or shared over the network. We also need to discuss whether every data creation instance should be stored in the database or only certain experimental data. By the term 'subject' we mean the person whose data is being monitored at the Faculty of Sport and Health Sciences, i.e. an athlete or patient.

From a viewing perspective, multimedia data can be huge in size and can have time-dependent characteristics that matter for viewing. This can be a challenge for the proposed solution. The proposed data infrastructure may contain query, process and buffer management systems to handle these issues.


3.0 Functional Requirements of the Solution (What Should the System Do?):

The following section of the document covers the functional requirements of the solution, which are classified as follows:

1. Functionality
2. Usability
3. Reliability
4. Performance
5. Supportability

3.1 Dynamics of planned Data Infrastructure in general:

In the following section of the document, we discuss the general characteristics of the advanced data infrastructure. Later in this document, we discuss requirements specific to each faculty (Sport and Music).

Data Redundancy:

The amount of data appears to be a non-issue at the Faculty of Sport and Health Sciences and the Department of Music, because data storage is not expensive and it is possible to store data of all kinds. However, this can lead to duplication and redundancy of data. Different and conflicting versions of the same data can end up stored at different locations (in the advanced data infrastructure). For example, if temperature data of a subject is measured in two databases, it is vital that the data is stored in the same convention (Fahrenheit or Celsius). Otherwise, conflicting data can later lead to data inconsistency, which affects the integrity of information created on the basis of that data. Therefore, data redundancy has to be controlled in the advanced data infrastructure.
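A first step in controlling redundancy is detecting when two locations hold conflicting versions of the same fact. The minimal Python sketch below assumes that both stores key measurements by subject and timestamp and already use the same unit convention. The store contents are made up for illustration.

```python
def find_conflicts(store_a: dict, store_b: dict, tolerance: float = 0.0) -> dict:
    """Return {key: (value_a, value_b)} for keys stored in both places whose
    values differ by more than `tolerance`."""
    conflicts = {}
    for key in store_a.keys() & store_b.keys():   # keys stored redundantly in both stores
        a, b = store_a[key], store_b[key]
        if abs(a - b) > tolerance:
            conflicts[key] = (a, b)
    return conflicts

# Hypothetical copies of the same temperature data (degrees Celsius)
lab_db = {("S1", "2024-05-02T10:00"): 37.0, ("S2", "2024-05-02T10:05"): 36.6}
archive_db = {("S1", "2024-05-02T10:00"): 37.0, ("S2", "2024-05-02T10:05"): 97.9}
conflicts = find_conflicts(lab_db, archive_db, tolerance=0.05)
# The S2 value was apparently stored in Fahrenheit in one of the locations
```

Detection only flags the disagreement. Deciding which copy is authoritative remains a data management policy question.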

Classification of Data:

Classifying data is imperative, especially if we intend to provide a decentralized or cloud-based data handling solution. Data should be classified into a number of categories, some of which are suggested below. These are connected to different phases of the project and to different kinds of projects. The classification below is tentative and relates to the inner loop of the meta project cycle (data gathering, storage and analysis).

Discrete data

Continuous data

Human input data

Auto generated data

Descriptive or meta data

Positional and environmental data

Sensor data


Looking at the entire project duration, we can identify the need for another categorization. It relates to the phases of the project and to different media types.

Raw data: data that is produced by measuring/capturing devices or input by humans

Derived data: data produced algorithmically from raw data and/or other derived data

Operational data: data that is produced by measuring devices or humans (input), or derived from that data

Metadata: data that characterizes the operational data in terms of type, source, IPRs, privacy restrictions, retention time, access history, etc. Metadata is itself discrete data.

Discrete data: consists of single values or sets of values; has no special requirements for storage or retrieval rate

Streaming data: data that has minimum rate requirements for storage or retrieval/processing (e.g. video, audio, a stream of discrete measured values)

Single media data: text, audio, image, voiceless video/animation stream, etc.

Multimedia data: consists of at least two synchronized single media types

Compressed data: operational data can be compressed from the beginning (video); compressed data can also be obtained from operational data or metadata by lossy or lossless compression

Uncompressed data: operational data or metadata that is not compressed or has been decompressed

This classification (metadata vs. operational data) determines the category of each item and is instrumental for rights management. For example, it would then be possible to grant access in different tiers and only to certain types of data (privacy issues). It will also allow better and faster search results when the search is restricted to a certain classification. For example, a data retriever can limit his/her search to auto-generated data if RFID logs are needed.
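To make the categories operational, each stored item could carry its classification as metadata, so that searches and access rules can filter on it. The Python sketch below is an illustration under assumptions. The `Category` values mirror some of the classes listed above, while the record fields, the `sensitive` flag and the sample items are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum

class Category(Enum):
    HUMAN_INPUT = "human input"
    AUTO_GENERATED = "auto generated"
    SENSOR = "sensor"
    META = "descriptive/meta"

@dataclass(frozen=True)
class Item:
    item_id: str
    category: Category
    source: str
    streaming: bool   # True for streaming data (e.g. video), False for discrete data
    sensitive: bool   # drives tiered access rights

def search(items, category=None, source=None, include_sensitive=False):
    """Filter items by their classification before any content search is done."""
    return [
        it for it in items
        if (category is None or it.category is category)
        and (source is None or it.source == source)
        and (include_sensitive or not it.sensitive)
    ]

items = [
    Item("log-001", Category.AUTO_GENERATED, "RFID", False, False),
    Item("vid-017", Category.SENSOR, "lab camera", True, True),
    Item("form-03", Category.HUMAN_INPUT, "questionnaire", False, True),
]
rfid_logs = search(items, category=Category.AUTO_GENERATED, source="RFID")
```

Sensitive items are excluded unless the caller explicitly asks for them. This is one simple way to express the tiered access mentioned above.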

The distinction between discrete and streaming data helps guide the implementation of the system. Typically, streaming data (video) is voluminous and has real-time retrieval and storage rate requirements. Discrete data, by contrast, needs far less storage space and has no real-time access requirements.
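A short back-of-the-envelope calculation shows why streaming data dominates storage planning. The rates used below are assumptions chosen for illustration, not measurements from the faculties: 8 Mbit/s for a recorded video stream, and one 16-byte sensor reading per second.

```python
def stored_bytes(bits_per_second: float, seconds: float) -> float:
    # Storage for a constant-rate stream: bits/s * s / 8 bits per byte
    return bits_per_second * seconds / 8

HOUR = 3600
video = stored_bytes(8_000_000, HOUR)   # assumed 8 Mbit/s video, one hour
sensor = stored_bytes(16 * 8, HOUR)     # assumed 16-byte reading once per second

print(f"video:  {video / 1e9:.1f} GB per hour")    # video:  3.6 GB per hour
print(f"sensor: {sensor / 1e3:.1f} kB per hour")   # sensor: 57.6 kB per hour
```

Under these assumptions, one hour of video is more than four orders of magnitude larger than one hour of sensor log. The video must also be written at a sustained 1 MB/s, which is the real-time rate requirement mentioned above.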

Data should be classified both at a general level and at the faculty level. We also intend to develop a system capable of integrating with all current data infrastructures and, ideally, with any future data structures developed in the University and partner institutions.

3.2 Key addressable areas of proposed Data infrastructure:


Data Ownership: This relates to who has the legal right to data and who retains the data, including rights to transfer data.

Data Collection: This pertains to collecting project data in a consistent, systematic manner (i.e., reliability) and establishing an ongoing system for evaluating and recording changes to the project protocol (i.e., validity).

Data Storage: This concerns the amount of data that should be stored and what real-time requirements there are.

Data Protection: This relates to protecting written and electronic data from physical damage and protecting data integrity, including damage from tampering or theft.

Data Retention: This refers to the length of time one needs to keep the project data according to the sponsor's or funder's guidelines. It also includes secure destruction of data.

Data Analysis: This pertains to how raw data are chosen, evaluated, and interpreted into meaningful and significant conclusions that other researchers and the public can understand and use.

Data Sharing: This concerns how project data and research results are disseminated to other researchers and the general public, and when data should not be shared.

Data Reporting: This pertains to the publication of conclusive findings.

(Steneck 2004)
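Of these concerns, data retention is the easiest to make concrete. The Python sketch below assumes that each dataset's metadata records the date the project closed and a retention period in years taken from the funder's guideline. The ten-year figures and dataset names are placeholders, not actual University or funder rules. Datasets past their retention date are only flagged for secure destruction, not deleted automatically.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class DatasetMeta:
    name: str
    project_closed: date
    retention_years: int   # assumed to come from the sponsor's or funder's guideline

def retention_end(meta: DatasetMeta) -> date:
    # Same calendar day N years later; a 29 February start falls back to 28 February
    year = meta.project_closed.year + meta.retention_years
    try:
        return meta.project_closed.replace(year=year)
    except ValueError:
        return meta.project_closed.replace(year=year, day=28)

def due_for_destruction(datasets, today: date) -> list:
    """Names of datasets whose retention period has ended (candidates for secure destruction)."""
    return [d.name for d in datasets if today >= retention_end(d)]

datasets = [
    DatasetMeta("fitness-study-2010", date(2010, 12, 31), 10),
    DatasetMeta("folk-tunes-2016", date(2016, 2, 29), 10),
]
```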

3.3 Objectives of planned data Infrastructure:

In the following section, we discuss the core objectives we wish to achieve through the proposed data infrastructure. The planned solution should be capable of serving at least the objectives listed below.

Data Repository

A data repository should be formed by collecting data from multiple internal and external sources and summarizing it. The result should provide a single source for any mining applications or for any processing by authorized users.

Systematic storage of data through classification and metadata generation.

Data should be classified into a number of categories on the basis of data type (some classes are listed earlier in this document). It should also be classified according to the level of internal controls needed to protect it against theft and improper use (ensuring data confidentiality). To achieve this, it is imperative to classify data on the basis of sensitivity and confidentiality as well as data type. Three classes of data on the basis of control are suggested below [2]. These classes are to be rated Low, Moderate and High in a nine-box grid format.

1. Confidentiality
2. Integrity
3. Availability

Nine-box grid: rows Confidentiality, Integrity and Availability; columns Low, Moderate and High.
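The nine-box grid can be represented directly in code. The Python sketch below rates each of the three security objectives Low, Moderate or High. It derives an overall class by taking the highest of the three ratings, a "high-water mark" rule that is one common convention (NIST FIPS 199 and FIPS 200 categorize systems this way). Whether the DataInfra project adopts this rule is an assumption, and the example rating is hypothetical.

```python
from enum import IntEnum

class Level(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3

def overall_rating(confidentiality: Level, integrity: Level, availability: Level) -> Level:
    # High-water mark: protect the data according to its most demanding objective
    return max(confidentiality, integrity, availability)

# Hypothetical rating for identifiable health measurements
health_data = {"confidentiality": Level.HIGH, "integrity": Level.MODERATE, "availability": Level.LOW}
rating = overall_rating(**health_data)
```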

Efficient access to stored data.

Providing secure and efficient access to data for the end user is very important, especially in a distributed system or information cloud. This can be ensured by using the secure data access mechanism of Weichao Wang et al. in their paper "Secure and Efficient Access to Outsourced Data" [3], in which the authors provide a certification method to handle access. A diagram of the method is shown below. A similar practice is followed for online data access and for password recovery over the internet.
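The Python sketch below is a generic illustration of certificate-style access and explicitly not a reproduction of the scheme by Wang et al. [3]. It issues a token that binds a user, a dataset and an expiry time with an HMAC signature, and verifies the token before granting access, much as password-reset links commonly do. The secret key, the token layout and the lifetime are assumptions.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"hypothetical-server-side-secret"   # assumption: known only to the server

def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode("utf-8"), hashlib.sha256).hexdigest()

def issue_token(user: str, dataset: str, lifetime_s: int = 900, now: Optional[float] = None) -> str:
    """Issue 'user|dataset|expiry|signature' (assumes identifiers contain no '|')."""
    now = time.time() if now is None else now
    payload = f"{user}|{dataset}|{int(now) + lifetime_s}"
    return f"{payload}|{_sign(payload)}"

def check_token(token: str, dataset: str, now: Optional[float] = None) -> bool:
    """Accept only a genuine, unexpired token issued for this dataset."""
    now = time.time() if now is None else now
    parts = token.split("|")
    if len(parts) != 4:
        return False
    user, ds, expiry, sig = parts
    payload = f"{user}|{ds}|{expiry}"
    if not hmac.compare_digest(sig, _sign(payload)):   # constant-time comparison
        return False
    return ds == dataset and now < int(expiry)

token = issue_token("alice", "lab-videos", lifetime_s=60, now=1000)
```

Any change to the user, dataset or expiry invalidates the signature. The server therefore does not need to store issued tokens to detect tampering.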

To ensure data integrity and security.

Data security may include protecting data from the following anomalies:

1. Data corruption
2. Data destruction (where data is altered or destroyed by the human factor)
3. Undesired modification
4. Data loss (technical defect)
5. Unauthorized disclosure
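Corruption and undesired modification (items 1 and 3) can at least be detected by recording a cryptographic checksum when data is stored and recomputing it later. The Python sketch below uses SHA-256 from the standard library. Where the checksums are kept (here a plain dictionary called a manifest) is an assumption. Detection alone does not repair data, which is why backups are still needed.

```python
import hashlib

def sha256_of(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def verify(stored: dict, manifest: dict) -> list:
    """Names whose current content does not match the checksum recorded at storage time
    (items missing from the manifest are also reported)."""
    return [name for name, data in stored.items() if sha256_of(data) != manifest.get(name)]

stored = {"subject1.csv": b"time,hr\n0,62\n1,64\n", "session.wav": b"RIFF...."}
manifest = {name: sha256_of(data) for name, data in stored.items()}   # recorded at ingest
stored["subject1.csv"] = b"time,hr\n0,62\n1,99\n"                    # simulated silent modification
```

For large files, the same digest would be computed by reading the file in chunks and feeding each chunk to the hash object.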


A verification and validation mechanism should be devised to ensure data integrity. Furthermore, proper archiving and backup should be practiced according to standardized, defined procedures to prevent data loss and destruction. Systems may be backed up according to the grandfather-father-son principle. Data backup is discussed in this context because it has a direct impact on data security.
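The grandfather-father-son principle can be expressed as a retention rule over backup dates. The Python sketch below assumes one backup per day and applies three illustrative parameters, not project decisions. It keeps the seven most recent daily backups (sons), the four most recent Sunday backups (fathers), and the last backup of each of the twelve most recent months (grandfathers).

```python
from datetime import date, timedelta

def gfs_keep(backups, daily=7, weekly=4, monthly=12) -> set:
    """Return the set of backup dates to retain under a simple grandfather-father-son policy."""
    ordered = sorted(set(backups), reverse=True)        # newest first
    keep = set(ordered[:daily])                          # sons: most recent daily backups
    sundays = [d for d in ordered if d.weekday() == 6]   # Monday == 0, Sunday == 6
    keep.update(sundays[:weekly])                        # fathers: most recent weekly backups
    last_in_month = {}
    for d in ordered:                                    # newest first, so the first date seen
        last_in_month.setdefault((d.year, d.month), d)   # per month is that month's last backup
    grandfathers = sorted(last_in_month.values(), reverse=True)
    keep.update(grandfathers[:monthly])                  # grandfathers: monthly backups
    return keep

# Hypothetical daily backups for the first quarter of 2024
start = date(2024, 1, 1)
backups = [start + timedelta(days=i) for i in range(91)]
retained = gfs_keep(backups)
```

Of the 91 daily backups, 12 are retained. These are the seven from 25 to 31 March, the Sundays 10, 17 and 24 March (31 March is already kept), and the month-end backups of January and February.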

Concurrent access to data.

When resources are accessed concurrently, data access should have mechanisms to prevent undesired effects when multiple users try to modify resources that other users are actively using. There are two basic categories of concurrency control:

1. Pessimistic concurrency control, where a system of locks prevents users from modifying data in a way that affects the data negatively or affects other users. After a user performs an action that causes a lock to be applied, other users cannot perform actions that would conflict with the lock until the owner releases it.

2. Optimistic concurrency control, where users do not lock data when they read it. When a user updates data, the system checks whether another user changed the data after it was read. If another user updated the data, an error is raised and the transaction is aborted [4].
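Optimistic concurrency control is often implemented with a version number stored next to each record. An update succeeds only if the version the user originally read is still current. The Python sketch below illustrates this with an in-memory store and hypothetical class names. The short internal lock only makes the check-and-write step atomic and is never held between a user's read and write, which distinguishes this from the pessimistic approach.

```python
import threading

class ConflictError(Exception):
    """Raised when another user has updated the record since it was read."""

class VersionedStore:
    def __init__(self):
        self._data = {}                  # key -> (value, version)
        self._guard = threading.Lock()   # protects only the compare-and-write step

    def read(self, key):
        with self._guard:
            return self._data.get(key, (None, 0))

    def update(self, key, new_value, read_version):
        with self._guard:
            _, current = self._data.get(key, (None, 0))
            if current != read_version:
                # Someone else committed in between: abort this transaction
                raise ConflictError(f"{key}: read version {read_version}, current {current}")
            self._data[key] = (new_value, current + 1)
            return current + 1

store = VersionedStore()
_, version_a = store.read("S1/notes")        # user A reads version 0
_, version_b = store.read("S1/notes")        # user B also reads version 0
store.update("S1/notes", "A's edit", version_a)   # A commits first, record is now version 1
```

If user B now submits an update based on version 0, it is rejected with `ConflictError`. B would re-read the record, merge or redo the change, and retry with version 1.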

To maintain quality of data

Data quality should be ensured at all stages of the data management process, from capture to digitization (if any), storage, analysis and end use. Data quality can be improved in two ways:

1. Prevention
2. Correction

We can use both to ensure data quality. However, preventing anomalies is considered more efficient, because cleaning and correcting data at later stages does not give equally good results.
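Prevention can be implemented as validation at the point of capture, so that implausible values are rejected before they reach storage. In the Python sketch below, the field names and plausible ranges are assumptions for illustration only. Real limits would have to be agreed with the faculty's domain experts.

```python
PLAUSIBLE = {
    # field: (min, max); hypothetical limits to be set by domain experts
    "body_temp_c": (30.0, 45.0),
    "heart_rate_bpm": (20, 250),
}

def validate(record: dict) -> list:
    """Return a list of problems; an empty list means the record may be stored."""
    problems = []
    for field_name, (lo, hi) in PLAUSIBLE.items():
        if field_name not in record:
            problems.append(f"missing {field_name}")
        elif not lo <= record[field_name] <= hi:
            problems.append(f"{field_name}={record[field_name]} outside [{lo}, {hi}]")
    return problems

ok = validate({"body_temp_c": 37.1, "heart_rate_bpm": 64})
bad = validate({"body_temp_c": 98.6, "heart_rate_bpm": 64})   # Fahrenheit value entered as Celsius
```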

To ensure privacy of data

Privacy concerns the rights and obligations of individuals with respect to data collection, usage, disclosure and disposal. Data privacy matters fall under the risk management function of the University.

Section 10 of the Constitution of Finland, entitled "The right to privacy," handles and

provides guidance about data privacy. The Personal Data Act of 1999 introduces the concept

of informed consent and self-determination into Finnish law, giving data subjects the rights

to access or correct their data, or to prohibit their use for stated purposes [5]. Privacy

matters are to be discussed with legal authorities during the development of data

infrastructure.


A separate paper on EU legislation can be attached to this document for guidance. The main directives on which the Finnish legislation is based are the privacy directives 2002/2006 and some other directives. These are mainly implemented in Henkilötietolaki 22.4.1999/523, accessible at www.finlex.fi.

Efficient handling of IPR issues

Any data assumed to bear a level of novelty or distinctiveness may be considered copyrighted material. Such data may be handled under a standardized process defined for IPR handling. IPR-controlled data can involve:

1. Copyrights
2. Patents (not of concern for operational data, because data is not patented in the EU)
3. Usage of trademarks (signs or emblems)
4. Business plans or strategic plans

IPR issues might also arise in collaborative research, where more than one individual or group coordinates to handle segments of a project. Examples include individual researchers, laboratory technicians (Faculty of Sport and Health Sciences) and objects involved in research data. Details of IPR handling mechanisms are to be discussed with experts in the specific field.

3.4 Challenges of planned Data Infrastructure:

Data Redundancy:

An object or set of objects with unique identities can be stored at two different locations (as bit strings in different places). For example, data about an object may be stored in two files on non-volatile storage media. Some of the information about the object might change, such as the object's status in the information system. It is therefore possible that data about the object is changed in the master file but not updated in the other files. This can cause duplication issues and, later, contribute to integration issues.
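One way to keep copies from silently diverging from the master file is to stamp each record with a version number that increases on every change in the master. Each copy then records which version it was synchronized from. The Python sketch below, with hypothetical names, reports which copies are stale. Propagating the update itself is left out.

```python
from dataclasses import dataclass

@dataclass
class Record:
    object_id: str
    status: str
    version: int

def stale_copies(master: dict, copies: dict) -> dict:
    """Map location -> object_ids whose copy is older than the master version."""
    report = {}
    for location, records in copies.items():
        old = [oid for oid, rec in records.items()
               if oid in master and rec.version < master[oid].version]
        if old:
            report[location] = old
    return report

master = {"obj-1": Record("obj-1", "archived", 3), "obj-2": Record("obj-2", "active", 1)}
copies = {
    "file_b": {"obj-1": Record("obj-1", "active", 2), "obj-2": Record("obj-2", "active", 1)},
    "file_c": {"obj-2": Record("obj-2", "active", 1)},
}
```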

Logical representation of physical data:

Logical representation of physical data is the key feature of a data structure. The term LOGICAL here refers to the view of data as presented to the user, and the term PHYSICAL refers to the data actually stored on the storage medium. Narrowing the gap between the physical and logical volume of data can be challenging, and it is also a good measure of the efficacy of the data infrastructure. By narrowing the gap, we mean narrowing the difference between the information available in physical storage and its logical representation to the end user.
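The distinction can be made tangible with a toy example. Physically, a measurement is a fixed-layout byte string on the storage medium; logically, the user sees a named record. The Python sketch below uses the standard `struct` module. The record layout (little-endian unsigned 32-bit subject number followed by a 64-bit float temperature) is an assumption chosen for illustration.

```python
import struct
from dataclasses import dataclass

# Physical layout (assumed): little-endian uint32 subject number + float64 temperature
LAYOUT = struct.Struct("<Id")

@dataclass
class Measurement:          # logical view presented to the user
    subject_no: int
    temperature_c: float

def to_physical(m: Measurement) -> bytes:
    return LAYOUT.pack(m.subject_no, m.temperature_c)

def to_logical(raw: bytes) -> Measurement:
    subject_no, temp = LAYOUT.unpack(raw)
    return Measurement(subject_no, temp)

raw = to_physical(Measurement(42, 36.8))   # 12 bytes on the medium
```

Here every field stored physically is also visible logically. Information stored physically but not exposed logically, or vice versa, is the kind of gap described above.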


4.0 Use Cases and Specifications:

4.1 Actors of Advanced data Infrastructure:

The actors of the proposed data infrastructure are the following:

Object- It may refer to the person/source or system which is categorized as the first contact

or point of data collection.

Data Generator – the First user of the raw data or the person/system gathering it. This also

includes the person/system interpreting the information carried by the operational data.

Data User – Researcher /system utilizing data or information created by data generator.

Data Analyst – Individual / research group or system manipulating research data may be

referred as data analyst in this document.

Data Manager or management system – the individual or system responsible for storage, backup and archiving of data is referred to as the data manager. At the first level, data should be managed by the data generator or, otherwise, by the system when the data is inserted.

Data Importer (if any) – a system or individual importing data from external sources is referred to as a data importer. Note that in this case we have no direct link with the subject of the data.

Data Exporter (if any) – a system or individual exporting data to any external destination shall be referred to as a data exporter. IPR and ownership issues are to be handled by the data exporter in the case of data export.
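For system developers, the actor list above can be encoded as a small enumeration. This Python sketch is illustrative only: the `Actor` member names are hypothetical, and treating the importer and exporter as optional simply mirrors the "(if any)" markers in the list.

```python
from enum import Enum
from typing import List


class Actor(Enum):
    """Actors of the proposed data infrastructure and their main responsibility."""
    SUBJECT = "first contact or point of data collection"
    DATA_GENERATOR = "gathers raw data and interprets operational data"
    DATA_USER = "uses data or information created by the data generator"
    DATA_ANALYST = "manipulates research data"
    DATA_MANAGER = "stores, backs up and archives data"
    DATA_IMPORTER = "imports data from external sources"
    DATA_EXPORTER = "exports data and handles IPR and ownership on export"


# Importer and exporter are marked "(if any)" in the actor list.
OPTIONAL_ACTORS = {Actor.DATA_IMPORTER, Actor.DATA_EXPORTER}


def required_actors() -> List[Actor]:
    """Actors that every deployment of the infrastructure has to support."""
    return [actor for actor in Actor if actor not in OPTIONAL_ACTORS]
```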


4.2 Links between actors and Use cases:

In this section of the document, we elaborate the generic links between the actors and the use cases of the solution. The purpose of the section is to categorize interactions and to make the technical specifications concrete and within the scope of the solution.

Actor 1 - Subject:

The subject is the person, source or system that is the first contact or point of data collection. In the scope of the Faculty of Sports, this may be the individual or group going through certain fitness tests; in the context of the Department of Music, this may be the founder or owner of any piece of data.

Data creation and updating are first-contact operations, whereas verification is usually a post-action operation.


Actor 2 - Data Generator:

The data generator is the first user of the data, or the person/system gathering the data. This also includes the person/system transforming data into information. In the context of the Faculty of Sports, this may be the researcher or fitness test administrator (doctor, etc.), whereas in the context of the Department of Music, this can be the person updating the data in the system; for example, the person playing and recording the melodies from manuscripts for the folk tunes record (discussion case with Tuomas Eerola).

The data generator can be an individual or an automated system in the above-mentioned use case, and transformation of data extends to synchronization without a direct link with the data generator.


Actor 3 - Data User:

A data user is a researcher or system using data or information created by the data generator. In the scope of our project, researchers and data-cleaning personnel may be referred to as data users.

Relaying and reusing data may involve handling IPR issues and ownership constraints. These issues are to be handled by the data management system or the data manager, who can create metadata, set access rights, remove data, etc.


Actor 4 - Data Analyst:

An individual, research group or system manipulating research data is referred to as a data analyst in this document.

In longitudinal research projects, the data analyst can be different from the actual researchers.

Transformation of data into information in the above-mentioned use case means processing and refining data into a logical, meaningful piece of information for end users and others referring to the data.


Actor 5 - Data Manager or Management System:

The individual or system responsible for storage, backup and archiving of data is referred to as the data manager. The same actor may be responsible for handling IPR issues, data ownership and transfer rules.


Actor 6 - Data Importer (if any):

A system or individual importing data from external sources (sources outside JY) is referred to as a data importer. Note that in this case we have no direct link with the subject of the data.

Data sharing agreements are to be defined prior to any data import, and defining them is not among the responsibilities of the data importer.


Actor 7 - Data Exporter (if any):

A system or individual exporting data to any external destination shall be referred to as a data exporter. IPR and ownership issues are to be handled by the data exporter in the case of data export.

Data sharing agreements are to be defined prior to any data export, and defining them is not among the responsibilities of the exporting individual or system.
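Both the importer and the exporter depend on an agreement that has been defined beforehand by someone else; their own job is only to check that it exists. A minimal Python sketch of that precondition follows. The `SharingAgreement` fields (partner, direction, expiry date) and the `check_transfer` function are hypothetical and would have to follow the actual agreements used at JY.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable


@dataclass(frozen=True)
class SharingAgreement:
    """Hypothetical record of a data sharing agreement with an external party."""
    partner: str
    direction: str  # "import" or "export"
    valid_until: date


class AgreementMissing(Exception):
    """Raised when no valid agreement covers a requested transfer."""


def check_transfer(agreements: Iterable[SharingAgreement], partner: str,
                   direction: str, on: date) -> SharingAgreement:
    """Return the agreement covering the transfer or raise AgreementMissing.

    The importer/exporter only checks for an agreement; defining it is
    outside its responsibilities.
    """
    for agreement in agreements:
        if (agreement.partner == partner and agreement.direction == direction
                and on <= agreement.valid_until):
            return agreement
    raise AgreementMissing(f"no valid {direction} agreement with {partner} on {on}")
```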


4.3 Process Flows of the Proposed Structure:

• Database Query
• Data Entry
• Data Collection
• Data Storage
• Data IPR Handling
• Data Ownership
• Database Query System


Proposed PF 1 (Research Data Entry):


Proposed PF 2 (Data Collection):

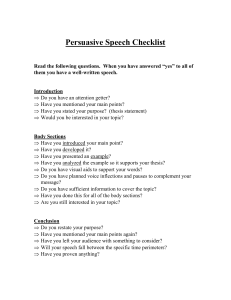

Data collection and segmentation of collected data are vital to the efficiency of our data infrastructure, because inaccurate data collection, or collection of data under vague classifications, can cause difficulties in processing the data and can lead to invalid results.

Data Collection Protocol:

• Identification of Need for Data
• Data Collection Plan
• Storage and Destruction
• Approval
• Sharing Results and Decision Making
• Data Collection
• Analyzing and Synthesizing Data

* Process derived from Hastings and Prince Edward District School Board, 2005 (not a reference but a synthesis)
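The protocol stages can be enforced as a strictly sequential state machine so that, for example, data collection cannot start before approval. The stage order in this Python sketch is an assumption (need, plan, approval, collection, analysis, sharing, storage and destruction), as is the `Stage`/`advance` naming; it is not taken from the original diagram.

```python
from enum import IntEnum


class Stage(IntEnum):
    # Assumed order of the data collection protocol stages.
    IDENTIFY_NEED = 1
    PLAN = 2
    APPROVAL = 3
    COLLECTION = 4
    ANALYSIS = 5
    SHARING_AND_DECISIONS = 6
    STORAGE_AND_DESTRUCTION = 7


def advance(current: Stage) -> Stage:
    """Return the next stage; stages can be neither skipped nor revisited."""
    if current is Stage.STORAGE_AND_DESTRUCTION:
        raise ValueError("protocol already complete")
    return Stage(current + 1)
```

Because the only way to reach `COLLECTION` is through `advance(Stage.APPROVAL)`, the approval step cannot be bypassed by a collector or system.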

The main data classes and subclasses that data collectors (systems and researchers) are to adhere to are listed below.

• Quantitative data collection
• Qualitative data collection

Quantitative data collection:

Quantitative data collection methods rely on random sampling and structured data collection instruments that fit diverse experiences into predetermined response categories [6].

The types of quantitative data collection are:

1. Experiments and trials
2. Observing events
3. Obtaining data from other management information systems
4. Surveys with closed-ended questions
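To make collectors adhere to the main classes, a system can refuse datasets whose collection method is not tagged with a known class, instead of storing them under a vague classification. In this Python sketch only the four quantitative types come from the list above; the short tag keys and the qualitative examples are hypothetical.

```python
from enum import Enum


class CollectionClass(Enum):
    QUANTITATIVE = "quantitative"
    QUALITATIVE = "qualitative"


# Quantitative types from the list above; the tag keys are hypothetical.
QUANTITATIVE_TYPES = {
    "experiment": "Experiments and trials",
    "observation": "Observing events",
    "mis_import": "Obtaining data from other management information systems",
    "closed_survey": "Surveys with closed-ended questions",
}

# Hypothetical qualitative tags; the document does not enumerate them.
QUALITATIVE_TYPES = {
    "interview": "Interviews",
    "open_survey": "Surveys with open-ended questions",
}


def classify(method_tag: str) -> CollectionClass:
    """Return the main class of a collection method; unknown tags are rejected."""
    if method_tag in QUANTITATIVE_TYPES:
        return CollectionClass.QUANTITATIVE
    if method_tag in QUALITATIVE_TYPES:
        return CollectionClass.QUALITATIVE
    raise ValueError(f"unclassified collection method: {method_tag!r}")
```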


Qualitative data collection:

Qualitative methods are usually used to find out the reasons behind certain quantitative results and to explore the subject or area of research further. Qualitative methods are also used to improve the accuracy of quantitative methods.

The defined standards mentioned in the diagram may be the correct form of responses (Yes, No), completion of all fields in the case of electronic questionnaires, and any other standards defined by the administrator depending on project requirements.
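The two example standards (Yes/No answers and completion of all fields) can be checked automatically when an electronic questionnaire is submitted. This Python sketch assumes that a response is a dictionary mapping field names to strings; the function name and the field names in the usage example are hypothetical.

```python
from typing import Dict, List, Set

ALLOWED_YES_NO = {"Yes", "No"}


def validate_response(response: Dict[str, str], required: List[str],
                      yes_no_fields: Set[str]) -> List[str]:
    """Return the problems found; an empty list means the response meets the standards."""
    problems = []
    for field in required:
        value = response.get(field, "").strip()
        if not value:
            problems.append(f"{field}: missing")
        elif field in yes_no_fields and value not in ALLOWED_YES_NO:
            problems.append(f"{field}: expected Yes or No, got {value!r}")
    return problems


print(validate_response(
    {"subject_id": "s1", "consent": "yes"},
    required=["subject_id", "consent", "age"],
    yes_no_fields={"consent"},
))
# ["consent: expected Yes or No, got 'yes'", 'age: missing']
```

Returning a list of problems rather than a single boolean lets the entry form show every violation at once.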


Proposed PF 3 (Data Integration):

In cases where data is entered manually by an individual instead of by data-creating devices, it may be the responsibility of the person entering the data to make sure that the data is clean and in line with the standards defined for the project and the research team.


Proposed PF 4 (Data Storage):

Storage needs are primarily classified into two segments:

• During the project
• Long-term storage

Storage needs for the two phases of the data lifecycle differ in terms of performance, replication and backup. During the project, the researcher or research team should be updating and storing the data in the system. After the project, however, the data should be refreshed, updated and reformatted by the University or the DBMS, unless it is disposed of according to the need/nature of the data [7].

The research data lifecycle is outlined below to identify storage needs. We discuss both phases separately in this document.

During Project

• Observe
• Experiment
• Annotate
• Index
• Publish

After Project

• Refresh technology
• Refresh format according to latest technology
• Store data reliably

During and After Project

• Learning

Data Management during a Research Project:

The main purpose of data management during the project should be to ensure that the data can be transformed into information by others. In technical terms, this means standard file formats, access methods, descriptive metadata, proper indexing and adequate publishing.

Data Management after a Research Project:


The main purpose of data management after the project should be to keep the data useful and retrievable for reference and to meet legal needs. Efficiency of access becomes less important than compatibility and availability of data. The following table summarizes data storage preferences during and after the project [8].

| Phase | Performance | Survivability | Curation | Primary Curator |
|---|---|---|---|---|
| During Project | High | High | High | Researcher |
| Long-term Storage | Moderate | Very High | Very High | University |
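A storage system could keep these preferences as configuration and pick a profile from the dataset's lifecycle phase. The Python sketch below does only that; the values are copied from the storage preferences table, while `StorageProfile` and the `project_finished` flag are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageProfile:
    performance: str
    survivability: str
    curation: str
    primary_curator: str


# Values taken from the storage preferences table.
STORAGE_PROFILES = {
    "during_project": StorageProfile("High", "High", "High", "Researcher"),
    "long_term": StorageProfile("Moderate", "Very High", "Very High", "University"),
}


def profile_for(project_finished: bool) -> StorageProfile:
    """Pick the storage profile for a dataset from its lifecycle phase."""
    return STORAGE_PROFILES["long_term" if project_finished else "during_project"]
```

Keeping the preferences as data rather than hard-coding them lets the University change, for instance, the long-term curator without touching the selection logic.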

One concern regarding long-term preservation of data is the management of data when the original funding and the original researchers who collected or created the data are no longer available. This should be handled by a proper handover of the data from departing personnel to database administrators at the time of leaving. Another way of handling this issue is shared responsibility for the data between researchers and database administrators during the project.

Classification of data and storage:

In the proposed data infrastructure, it is suggested to store data according to a standard classification of data. This classification means that data may be stored at different locations according to the type of data (audio, video, text, etc.). To achieve this, we need a data sorting system embedded in the data storage model that segregates different data types and stores the data in a way that makes retrieval easy and efficient. A suggestive model is given below.
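A minimal Python sketch of such a sorting step follows. It guesses the data type from the file name with the standard `mimetypes` module and routes audio, video and text to separate locations. The directory paths and function names are hypothetical, and a production system might rather inspect file content or metadata than rely on file extensions.

```python
import mimetypes
from pathlib import PurePosixPath

# Hypothetical storage locations per data type.
LOCATIONS = {
    "audio": "/storage/audio",
    "video": "/storage/video",
    "text": "/storage/text",
}
DEFAULT_LOCATION = "/storage/other"


def storage_location(filename: str) -> str:
    """Pick a location from the major part of the guessed MIME type."""
    mime, _encoding = mimetypes.guess_type(filename)
    major = mime.split("/", 1)[0] if mime else None
    return LOCATIONS.get(major, DEFAULT_LOCATION)


def storage_path(filename: str) -> str:
    """Full target path for the file inside its type-specific location."""
    return str(PurePosixPath(storage_location(filename)) / PurePosixPath(filename).name)
```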


Proposed PF 5 (Data IPR Handling):

Intellectual property (IP) rights may grant creators or owners of a work certain controls over its use. The following is the suggested framework for IP rights handling of research data according to the guidance of Finland's Personal Data Act (1999).


Proposed PF 6 (Data ownership):

According to our project definition, data and information are valuable assets. This leads to the notion of responsibility for the data and information available on university servers/data stores. Ownership of data in this document corresponds to activities related to accessing, creating, modifying, packaging, selling or removing data, along with sharing the rights and privileges of data.
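The ownership activities listed above map naturally onto a set of combinable flags. In this Python sketch, the `Right` names follow the listed activities, but the rule that only a holder of the sharing right may pass rights on, and only rights it holds itself, is an illustrative assumption rather than a policy defined in this document.

```python
from enum import Flag, auto


class Right(Flag):
    """Activities covered by data ownership in this document."""
    ACCESS = auto()
    CREATE = auto()
    MODIFY = auto()
    PACKAGE = auto()
    SELL = auto()
    REMOVE = auto()
    SHARE_RIGHTS = auto()


FULL_OWNERSHIP = (Right.ACCESS | Right.CREATE | Right.MODIFY | Right.PACKAGE
                  | Right.SELL | Right.REMOVE | Right.SHARE_RIGHTS)


def grant(granter_rights: Right, requested: Right) -> Right:
    """Assumed rule: only a SHARE_RIGHTS holder may pass on rights, and only its own."""
    if Right.SHARE_RIGHTS not in granter_rights:
        raise PermissionError("granter cannot share rights")
    if requested & ~granter_rights:
        raise PermissionError("cannot grant rights the granter does not hold")
    return requested
```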


Proposed PF 7 (Data Quality):

Data can be rated as high quality if it satisfies the defined standards that assist the researcher in his/her operations, planning and decision making. In the scope of our project, we may measure data quality by analyzing its completeness, validity, consistency, conformance of data values to project requirements, and appropriateness for a specific use.
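Two of these dimensions, completeness and validity, can be computed as simple ratios. In this Python sketch, records are assumed to be dictionaries, and the heart-rate range used as the validity check in the usage example is a hypothetical project rule.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


def _present(value: Any) -> bool:
    """A value counts as present unless it is None or an empty string."""
    return value not in (None, "")


def completeness(records: List[Record], fields: List[str]) -> float:
    """Share of required field values that are present."""
    total = len(records) * len(fields)
    if total == 0:
        return 1.0
    filled = sum(1 for r in records for f in fields if _present(r.get(f)))
    return filled / total


def validity(records: List[Record], field: str, is_valid: Callable[[Any], bool]) -> float:
    """Share of present values of `field` that pass a project-defined check."""
    values = [r[field] for r in records if _present(r.get(field))]
    if not values:
        return 1.0
    return sum(1 for v in values if is_valid(v)) / len(values)


records = [
    {"subject_id": "s1", "heart_rate": 62},
    {"subject_id": "s2", "heart_rate": None},
    {"subject_id": "s3", "heart_rate": 250},
]
print(completeness(records, ["subject_id", "heart_rate"]))  # 5 of 6 values present
print(validity(records, "heart_rate", lambda hr: 30 <= hr <= 220))  # 0.5
```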

The level of quality can be measured on a scale of 1 to 4, depending on the level of quality the data exhibits, according to the table below [9].

The data quality activity levels:

| Level | Data quality activity |
|---|---|
| Proactive | Data quality issues are prevented |
| Active | Data quality is supervised in real time |
| Reactive | Data quality is supervised periodically |
| Passive | Data quality is not measured |

From a data quality point of view, it has been observed that the stakeholders of the project are at the reactive stage, which means that random checks are made on the available data to check consistency, supportability and efficiency.

Through the proposed data collection and integration model, we aim to reach the proactive level of data handling, because the proposed mechanism ensures data cleanliness and supportability at the time of data entry and updating instead of through periodic checks.
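The four activity levels can be encoded on the 1 to 4 scale. This Python sketch assumes that 1 is Passive and 4 is Proactive (the direction of the scale is an assumption) and classifies a project from three yes/no questions about how it handles quality; `classify_practice` and its parameters are hypothetical.

```python
from enum import IntEnum


class QualityLevel(IntEnum):
    # Assumed numbering: 1 = least active handling, 4 = most active.
    PASSIVE = 1
    REACTIVE = 2
    ACTIVE = 3
    PROACTIVE = 4


def classify_practice(measured: bool, real_time: bool, prevented_at_entry: bool) -> QualityLevel:
    """Map how a project handles data quality onto an activity level."""
    if prevented_at_entry:
        return QualityLevel.PROACTIVE
    if real_time:
        return QualityLevel.ACTIVE
    if measured:  # measured, but only by periodic checks
        return QualityLevel.REACTIVE
    return QualityLevel.PASSIVE


# Current stakeholder practice: periodic random checks only.
print(classify_practice(measured=True, real_time=False, prevented_at_entry=False).name)  # REACTIVE
```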

At the project level, data quality is to be ensured by the researcher and/or the project manager. However, at the higher levels of the pyramid, it can be controlled by defining parameters in the system and automated according to those parameters.


Data Quality process:

The following is the suggested contamination control flow for research data in the proposed solution. This process should be followed at the data entry level to achieve the proactive stage of contamination control.
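The idea behind entry-level contamination control can be illustrated with a small Python sketch: every record passes all validators before it is stored, and failing records are quarantined together with their reasons instead of entering the data store. The validator in the usage example and the in-memory lists are hypothetical stand-ins for project rules and real storage.

```python
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]
Validator = Callable[[Record], List[str]]


def admit(record: Record, validators: List[Validator],
          store: List[Record], quarantine: List[Tuple[Record, List[str]]]) -> bool:
    """Store the record only if no validator reports a problem; otherwise quarantine it."""
    problems = [p for check in validators for p in check(record)]
    if problems:
        quarantine.append((record, problems))
        return False
    store.append(record)
    return True


def has_subject_id(record: Record) -> List[str]:
    # Hypothetical project rule: every record must identify its subject.
    return [] if record.get("subject_id") else ["subject_id missing"]


store: List[Record] = []
quarantine: List[Tuple[Record, List[str]]] = []
admit({"subject_id": "s1", "vo2max": 48.2}, [has_subject_id], store, quarantine)
admit({"vo2max": 51.0}, [has_subject_id], store, quarantine)
print(len(store), len(quarantine))  # 1 1
```

Quarantining rather than discarding keeps contaminated entries available for correction by the person who entered them.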

Moving Forward:

This document can be continued as a living document for updating project milestones and progress as the project moves towards technical specifications and implementation.


Conclusion:

The report is based on a meta-description of the research project flow and discusses various forms of data produced in different phases of the project flow. The emphasis in this report is on the actual performance of the research, because that is where the need for data infrastructure seems to be most urgent. Nevertheless, it is clear that the preparatory phase and the funding type have ramifications for the data management activities needed in the main phase. This is evidenced by questions such as "who owns this data, who has access to it, what are the privacy restrictions on this data", etc. It is also clear that the data produced in the project preparation phase has relationships with the data produced later. These are for further study.

Current operational and metadata handling practices were analyzed through interviews and analysis of systems (observations). It was identified that optimal data handling procedures were needed, and this creates the scope for an advanced data infrastructure.

First, we developed a better understanding of the systems by interviewing and questioning stakeholders; second, we analyzed standard modern data infrastructure management practices. Later, those practices were adapted to fit our specific needs and the expectations of stakeholders.

The stakeholders whose data handling needs were considered are faculties, university administration, researchers and funding authorities. So far, we have covered the elicitation of data-related needs from the types of data needed to the end point of data archiving, covering most of the stakeholders' data-related concerns. University administration's concerns are for further study.

The document contains seven use cases and seven process flows that serve as instructional boundaries for the system developers and technical personnel who are to develop the data infrastructure as required by stakeholders.

Perhaps the most pressing issue is how to define and manage the metadata. Without a correct definition and clear processes for its handling, the operational data cannot be kept in order and protected against misuse, loss, confusion, etc.


References:

[1] D. Delen et al., "A holistic framework for knowledge discovery and management," 2009.

[2] B. Markham, 2011. [Online]. Available: http://www.oit.umd.edu/Publications/Data_Classification_Presentation_022908.pdf

[3] W. Wang, "Secure and efficient access to outsourced data," New York, 2009.

[4] G. Weikum and G. Vossen, Transactional Information Systems. Morgan Kaufmann Publishers, USA, 2002.

[5] Privacy International, 2011. [Online]. Available: https://www.privacyinternational.org/article/finland-privacy-profile#frame [Accessed 2011].

[6] University of California, 2011. [Online]. Available: www.confluence.ucdavis.edu [Accessed 2011].

[7] University of California, 2011. [Online]. Available: www.confluence.ucdavis.edu [Accessed 2011].

[8] R. Silvola, O. Jaaskelainen, H. Kropsu-Vehkapera and H. Haapasalo, "Managing one master data – challenges and preconditions," Industrial Management & Data Systems, 2011.
