Sta 414/2104 S - Department of Statistical Sciences

advertisement

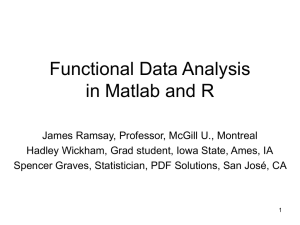

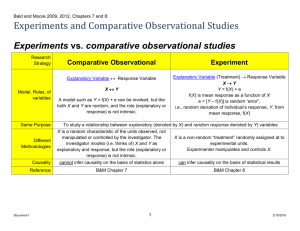

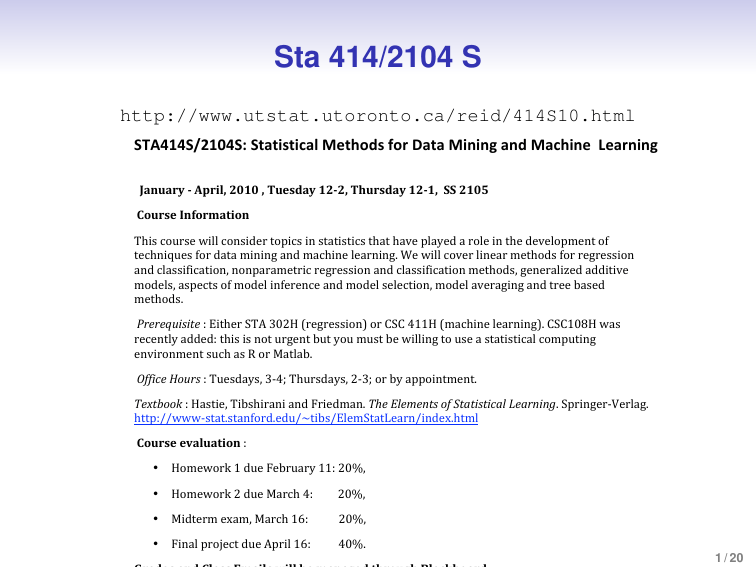

Sta 414/2104 S http://www.utstat.utoronto.ca/reid/414S10.html STA414S/2104S: Statistical Methods for Data Mining and Machine Learning January ­ April, 2010 , Tuesday 12­2, Thursday 12­1, SS 2105 Course Information This course will consider topics in statistics that have played a role in the development of techniques for data mining and machine learning. We will cover linear methods for regression and classification, nonparametric regression and classification methods, generalized additive models, aspects of model inference and model selection, model averaging and tree based methods. Prerequisite : Either STA 302H (regression) or CSC 411H (machine learning). CSC108H was recently added: this is not urgent but you must be willing to use a statistical computing environment such as R or Matlab. Office Hours : Tuesdays, 3‐4; Thursdays, 2‐3; or by appointment. Textbook : Hastie, Tibshirani and Friedman. The Elements of Statistical Learning. Springer‐Verlag. http://www‐stat.stanford.edu/~tibs/ElemStatLearn/index.html Course evaluation : • Homework 1 due February 11: 20%, • Homework 2 due March 4: 20%, • Midterm exam, March 16: 20%, • Final project due April 16: 40%. 1 / 20 Tentative Syllabus I I I I Regression: linear, ridge, lasso, logistic, polynomial splines, smoothing splines, kernel methods, additive models, regression trees, projection pursuit, neural networks: Chapters 3, 5, 9, 11 Classification: logistic regression, linear discriminant analysis, generalized additive models, kernel methods, naive Bayes, classification trees, support vector machines, neural networks, K -means, k-nearest neighbours, random forests: Chapters 4, 6, 9, 11, 12, 15 Model Selection and Averaging: AIC, cross-validation, test error, training error, bootstrap aggregation: Chapter 7, 8.7 Unsupervised learning: Kmeans clustering, k -nearest neighbours, hierarchical clustering: Chapter 14 2 / 20 Some references I Venables, W.N. and Ripley, B.D. (2002). Modern Applied Statistics with S (4th Ed.). Springer-Verlag. Detailed reference for computing with R. I Maindonald, J. and Braun, J. (). Data Analysis and Graphics using R. Cambridge University Press. Gentler source for R information. I Hand, D., Mannila, H. and Smyth, P. (2001). Principals of Data Mining. MIT Press. Nice blend of computer science and statistical methods. I Ripley, B.D. (1996). Pattern Recognition and Neural Networks. Cambridge University Press. Excellent, but concise, discussion of many machine learning methods. 3 / 20 Some Courses I http://www.stat.columbia.edu/˜madigan/DM08 Columbia (with links to other courses) I http://www-stat.stanford.edu/˜tibs/stat315a.html Stanford I http://www.stat.cmu.edu/˜larry/=sml2008/ Carnegie-Mellon 4 / 20 Data mining/machine learning I large data sets I high dimensional spaces I potentially little information on structure I computationally intensive I plots are essential, but require considerable pre-processing I emphasis on means and variances I emphasis on prediction I prediction rules are often automated: e.g. spam filters I Hand, Mannila, Smyth “Data mining is the analysis of (often large) observational data sets to find unsuspected relationships and to summarize the data in novel ways that are both understandable and useful to the data owner” 5 / 20 Applied Statistics (Sta 302/437/442) I small data sets I low dimension I lots of information on the structure I plots are useful, and easy to use I emphasis on likelihood using probability distributions I emphasis on inference: often maximum likelihood estimators (or least squares estimators) I inference results highly ‘user-intensive’: focus on scientific understanding 6 / 20 Some common methods I regression (Sta 302) I classification (Sta 437 – applied multivariate) I kernel smoothing (Sta 414, App Stat 2) I neural networks I Support Vector Machines I kernel methods I nearest neighbours I classification and regression trees I random forests I ensemble learning 7 / 20 Some quotes I I I I HMS1 : “Data mining is often set in the broader context of knowledge discovery in databases, or KDD” http://www.kdnuggets.com/ Hand et al.: Data mining tasks are: exploratory data analysis; descriptive modelling (cluster analysis, segmentation), predictive modelling (classification, regression), pattern detection, retrieval by content, recommender systems Data mining algorithms include: model or pattern structure, score function for model quality, optimization and search, data management Ripley (pattern recognition):“Given some examples of complex signals and the correct decision for them, make decision automatically for a stream of future examples” 1 Haughton, D. and 5 others (2003). A review of software packages for data mining. American Statistician, 57, 290–309. 8 / 20 Software I I I I Haughton et al., software for data mining: Enterprise Miner, XLMiner, Quadstone, GhostMiner, Clementine Enterprise Miner $40,000-$100,000 XLMiner $1,499 (educ) Quadstone $200,000 - $1,000,000 GhostMiner $2,500 – $30,000 + annual fees http://probability.ca/cran/ – Free!! You will want to install the package MASS, other packages will be mentioned as needed 9 / 20 Examples from text I email spam 4601 messages, frequencies of 57 commonly occurring words I prostrate cancer 97 patients, 9 covariates I handwriting recognition data I DNA microarray data 64 samples, 6830 genes I “Learning” = building a prediction model to predict an outcome for new observations I “Supervised learning” = regression or classification: have available sample of outcome (y ) I “Unsupervised learning” = clustering: searching for structure in the data Book.Figures 10 / 20 c Elements of Statistical Learning Hastie, Tibshirani & Friedman 2001 oooo oooooooooooooooooooo o oo ooooooooooo oo oooooooo oo o o o oo o o o o oooo o o o o o oo o o o o o o o o o o o o o o ooo oo o o o o o ooo oooooooooo o o o o lbph o o o o o o o o o o o o o o o o o o o o oo oooooo ooooo o oo oo o ooooo oo oo o oo ooo oooo o o o o o o o o o o o o o o o o o o o o o o o o o o o oooo o o o o o o o o o o o o o oo o o o oo o ooooo o oo o o o ooo o oo o o oooo oo o o o o oo o oo o o o o o o o o o o o o o o o o o o o o o o o o o o o o ooo ooo o o oo oo o o o o o oo ooo o o o o o oo o o o oo o oooo oo o oo o o ooo oo o o o o o o o o o oo o o o o o o o o o o o o o o o o o o o ooooo o oo o o o o o o oo ooo o ooo ooo o ooo o oooo o o oo ooooooo o o o oo o o ooo o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o 0 1 2 3 4 5 9.0 o o o o o o o oo o o o oo o o o oooo ooooo oo ooo o o o oo o ooo ooooooo ooo o o o o o oo oooo o o o o o o o o o o oo oo oo o o o oooo ooo oo o o o o oo o ooo ooooo o o o o ooo ooo oo o o o oo o o o o o o o o o o o o o o o o o oo o o o o o oo o ooooo o o o o ooo o o oo o o o o o o o o o o oo o o o o o o o o ooo ooooo ooooo ooo o ooooooooooo ooooo o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o 7.5 o o o o o o o o o o o o o o o o 6 o o o o o o ooooooo o o o o o oooooooooo o o o o ooo o o o o oo oo o o ooo o o o o o o o o o o o o o o o oo oo o oo o oooo o o ooo ooooo o oo ooooo o o o oo ooooo o o oo o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o o oo o ooooo o o o o ooo o o o ooo o oo o oo o o o o o oo o oo o oo o oo oo ooo o o oo oo o o o oo o oo o o o oo o o oo o oo oooo o o o o o o o oo o oo oo ooo oo o o o o o o o o o o o o o o oooooo o o o o o o o o ooo ooo o o ooo o o 5 o o o oo ooo o o oo ooo o oo ooo oooo lweight ooo ooo oooooo oooooooo oooooooo oo o o o o o ooo o ooooooo o oo ooo oo oo o oooooooooooo o o oo ooooooo ooooooo o ooooooo oo oo oo oooooo o oo oo ooo oo o oooooooooooo oo oo ooooooo ooooooooooooooo o o oooo oooo ooo ooo oo oo o ooo o oo o o o o o oooo o oo o o o o o o o o o ooo oo o o ooo o ooo o ooo o oo oooooo oo o oo oooooo oooooo oo o oo oooooo ooo oooo oo oooo o oo oooo o oooo oo o oooo o oo o ooooooo o oooo ooooo oo o oo oooo o o ooooo o o oooooooo oooo o ooo o ooooooooooo age o o oo oooooo oo o oooooooooo o o oo o o o o o o oo o o oo o o o o o o o o o o o o o o o oo o o o o o o o o o o o o ooo ooo oo o ooo oo o oo o ooooooo o oooo oo o oo oo o o ooo oo oooooooooooo oooo o oooo o oooooo o oooo oooooo oo o o oooooo o oo o oo oooooo o o oo o o o o o o oo o o o o o oooo o oo ooo o o oooo o oooooooo oooo o o oo o o o o o o o o o oo oo o oo o oooooooooooooo o ooooooo oooooooo ooooooooooooooooooooooo oooo oooooo oooooooooo oooooooooooo oo oooooooooooooo ooooooooooooooo ooooo oo ooooooooo oooooo ooo oooo o o o oooo ooooo ooo 6.0 o oo o o o o o o o oo o o oooooo oo ooo o o o o oo oooo o o oo oo o o ooo o o o o o o o o o ooo oo ooooo o o o o o o o o 4 o o oo o o o o o oooo o o o oooooo o o o ooooo oo o oo o o o oo oooo o o o o oooooo o o o o o o o o o o o o o o 0.8 3 o oo o ooooo ooo ooo o ooo oooooo o oo o oo ooo o oo oo oo oooooo o o oooooooo oooo o ooo o o o o o o oo o ooo o oo o o o o 0.4 o o o o o o o o o o o o o o o o 2 o 0.0 o o o o o o oo o oo o o oo o ooo o o oooooo oo ooooo o o o o oo o o o o ooo oooooooo o o o o o o o o o o 1 -1 0 1 2 3 4 60 80 o o o o oo o ooo oooooo o oo oo ooo o oo o ooo oo oo o oo oooo o o o o o o o ooo o o ooo oooooo oo o oo oo o oooo oo o o o 0.8 40 50 60 70 80 40 0 1 3 o o o oo o o oo o ooo o o o ooo oo oooo oooooo oooo oo o oooo o ooooo oo oo oo ooo oooo ooooo o oooo oo oo o o o o o o o ooooo o o o oo o o oo o oo oooo ooooooo oooo ooooo o oo ooo o o o o o o o ooo o o o oo o oo o o oo o oo o ooo o o oo o oo o ooo oo ooo ooooooooo oo ooooooo o ooo ooo oooo oo oooo oooo o oo o ooo oooooo oooooo o oooo ooooooooooooo oo lcavol ooooo oo oooo oooo o ooo o o oooo ooooo oo o o o o oo ooo oo oooo o o ooooo o oo o o -1 -1 lpsa Chapter 1 o o ooooooooooooooooooooooo oooo o oooooooooooo oo oo o o oo ooooooooooooooo oo oooooooo ooooooooooooo oo oo oo oooooooooo oooooooooooo ooooooooo o oo ooo ooo oo oo ooooooooooo oo o oo o ooooooo o oo ooo oooooooooo o oo ooo o oooooooooooo o o o o oo ooooooooo o o o o o o o o o o o o o oo o o o oo oooooo o o o o o o o o o oooo o o o o o o o oo oo o o o o oo o o o o o oo o oo o o o oo o ooo o o oo o o o oooooo o o o o o ooo o o o o oooooooooooooooooo oooo o oo o o gleason ooooooooo o o ooo o o 3 o o o o o o o o o o o o o o o o o o 2 o o lcp o o o oooo oooo oo ooooooo oo o o o o o o o o o o o o o o o o 1 o ooooooooooooooo o o o o o o o o o o o o o o o o o -1 0 o oo oo oo oooooooooo ooo oo ooooo oooooooo o o o o o o oooooo oo oo oooo o o o o o o ooo o ooo oo oooooo o oo o oo o o oo ooo o oooooooooooooooooo oo o o o o o o 100 6.0 7.0 8.0 9.0 0.0 0.4 svi ooooo ooooooooooooooo oooooooooooooo ooooooo ooo ooo o oo ooo oo oo oo oooooo o oo ooooooo o o o ooo oo ooo oooooo o oooo o o o oo o oo oo o o ooooooo oooooo oooo oooo o o ooo o oooooo oo o o o o o o o o oooo ooooo o ooo oo o ooo oo oooooo oooooo o o o ooooooooooo ooooo o ooooo o o oo oooooo ooo oooooooooo oooo oooooooooooooooooo ooooo ooo oooooooooooooo o oo oo ooooo ooooo o o oo ooooo 11 / 20 Some of the handwriting data 12 / 20 Some basic definitions I input X (features), output Y (response) I data (xi , yi ), i = 1, . . . N I use data to assign a rule (function) taking X → Y b (X ) goal is to predict a new value of Y , given X : Y I I regression if Y is continuous classification if Y is discrete I Examples... 13 / 20 How to know if the rule works? I I compare ŷi to yi on data training error b to Y on new data test error compare Y I In ordinary linear regression, the least squares estimates minimize Σ(yi − xiT β)2 I and the minimized value is the sum of the squared residuals Σi (yi − ŷi )2 = Σi (yi − xiT β̂)2 I test error is Σj (Yj − xjT β̂)2 14 / 20 ... training and test error I I In classification: use training data (xi , yi ) to estimate a b classification rule G(·) b G(x) takes a new observation x and assigns a class/category/target g ∈ G for the corresponding new y I for example, the simplest version of linear discriminant analysis says yj = 1 if xjT β̂ > c else y = 0 I what criterion function does this minimize? I test error is proportion of mis-classifications in the test data set I in regression or in linear discriminant analysis, training error is always smaller than test error: why? 15 / 20 Linear regression with polynomials I I I -1.0 -0.5 y 0.0 0.5 1.0 I model yi = β0 + β1 xi + β2 xi2 + β3 xi3 + · · · + i assume i ∼ N(0, σ 2 ) (independent) how to choose degree of polynomial? for fixed degree, find β̂ by least squares 0.0 0.2 0.4 0.6 0.8 1.0 x go to R 16 / 20 The Netflix Prize http://www.netflixprize.com//index 17 / 20 Digital Doctoring I I I “Digital doctoring: how to tell the real from the fake”, H. Farid, Significance 2006. “Exposing Digital Forgeries by Detecting Inconsistencies in Lighting”, M.K. Johnson, H. Farid, ACM 2005 http://www.cs.dartmouth.edu/farid/ H. Farid, Significance 2006. 18 / 20 Automated Vision Systems I http://www.cs.ubc.ca/˜murphyk/Vision/ placeRecognition.html I “Context-based vision system for place and object recognition” Antonio Torralba, Kevin P. Murphy, William T. Freeman and Mark Rubin, ICCV 2003. 19 / 20 For next class/week I Thursday: Make sure you can start R I Thursday: Overview of linear regression in R I Tuesday: Read Chapter 1, 2.1–2.3 of HTF I Tuesday: We will cover Ch. 3.1-3.3/4 of HTF 20 / 20