Introduction to Runtime


Runtime, Powers of Two

ORRHS Primer in Mathematical Computer Science

John Hewitt

johnhew@seas.upenn.edu

jhewitt.me

May 21, 2015

0.1 Informal Introduction to Runtime

We’ve worked with a well-known algorithm, BFS, and we’ve proved its correctness in solving the shortest

path problem for unweighted graphs. In developing and using algorithms in computer science, proofs of

correctness are half the problem to solve. The other half is proving runtime. If an algorithm takes days

to compute, it won’t be of much worth, regardless of its correctness. But how does one define how quickly

programs run in comparison to each other? Some people’s computers are faster than others’, and it would

be useless to publish standards of how long an algorithm would take on a given input on a number of

standard computers.

For example, take our BFS algorithm. It’s clear that it would take any computer proportionally

longer to run BFS on a graph with 10 nodes and 20 edges than on a graph with 2 nodes and 1 edge.

It’s probably intuitive that most algorithms similarly take more time to solve when you give them larger

problems to work through. In the case of BFS, the size of the problem is the number of nodes and edges

in the graph. The time that BFS takes is proportional to these numbers. Computer scientists use the

property of increasing runtime to rate the runtime of an algorithm on a universal standard. The question

is always, At what rate does the runtime of the algorithm increase as a proportion of the size

of the problem? We’ll look at an example below, and then formalize the discussion.

0.1.1 Runtime of BFS

Let’s look informally at how the runtime of BFS will increase as a proportion of the size of the graph.

Remember that we assume that the graph is a connected component. When we start from our root node,

we add all of its neighbors to our BFS Queue. We’re not sure how long it will take a computer to add 1

node to a queue, but if we call that time c,

c = add to queue time

Then the total time it takes to fully process the root node is c times the number of neighbors of the root

node. So, if the degree of our root node is 4, its runtime alone is 4c. Now consider the next node. One

of its edges connects back to the root, which has already been discovered, so the root won’t be added to

the queue. However, it takes some time to look at the root node, decide that it has been considered, and

move on. We can call this time c, or .5c or anything similar. As you’ll soon see, what we multiply c by

really isn’t important. Regardless, for any node, I claim that the runtime of considering that one node is

proportional to the degree of the node. In other words, for node v,

runtime(v) = degree(v) ∗ c

It follows that for an entire connected graph G, with the vertex set V ,

$$\mathrm{runtime}(G) = c \cdot \mathrm{degree}(G) = c \sum_{v \in V} \mathrm{degree}(v)$$

This says that the runtime of connected graph G is proportional to the total degree of G, which is the

sum of the degrees of every node in G. We’ve shown previously that the total degree of G is twice the

number of edges. Remember that the set of edges in G is called E. By convention, we write the “size” of E, that is, the

number of edges in E, as |E|. So, since the total degree is 2 ∗ |E|, we could say that

runtime(G) = 2 ∗ c ∗ |E|

This tells us that as the number of edges in G grows, the runtime of BFS increases linearly, in

proportion to the size of the problem.
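To make the counting argument concrete, here is a minimal Python sketch. The function name `bfs_edge_checks`, the adjacency-list representation, and the small example graph are all assumptions made for illustration, not part of the original notes. It counts one unit of work for every neighbor a node examines; on a connected graph that count comes out to the total degree, which is twice the number of edges.

```python
from collections import deque


def bfs_edge_checks(graph, root):
    """Run BFS from root and count how many neighbor entries get examined."""
    visited = {root}
    queue = deque([root])
    checks = 0
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            checks += 1  # one unit of work "c" per neighbor examined
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(neighbor)
    return checks


# Hypothetical connected graph with 4 nodes and 4 edges (adjacency lists).
example_graph = {
    "a": ["b", "c"],
    "b": ["a", "c", "d"],
    "c": ["a", "b"],
    "d": ["b"],
}

# Each edge appears in two adjacency lists, so halve the total to get |E|.
num_edges = sum(len(neighbors) for neighbors in example_graph.values()) // 2

print(bfs_edge_checks(example_graph, "a"), 2 * num_edges)
```

Doubling the number of edges in a graph like this should roughly double the count, which is what "linear" means here.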

0.1.2 Other runtimes?

So, ah, is this good or bad? Let’s compare linear runtime with another common runtime.

Problem: Hewitt’s Algorithm is an algorithm that, at every edge in E, counts the number of edges

in E by walking across them. In other words, for every edge in E, Hewitt’s does c work for every edge in

E. What is the runtime of this algorithm?

Solution: For the first edge considered by this algorithm, we look at every single edge in E. Thus,

for 1 edge, we do |E| work. For the second edge, we also do |E| work. Similarly, for all |E| edges, we do

|E| work. We can see the total runtime, thus, as

$$\sum_{e \in E} c \cdot |E|$$

Or, more simply, |E| ∗ (c ∗ |E|) = c|E|^2. We say that the runtime of this algorithm increases proportionally with the square of the size of the problem. If we say that BFS and Hewitt’s both solve similar

problems, then BFS clearly is the better algorithm, since it runs in proportionally much less time.
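The description of Hewitt’s Algorithm translates almost directly into a pair of nested loops. The sketch below is only an illustration: the name `hewitt_work` and the choice to count one unit of work per inner step are assumptions, standing in for the constant c.

```python
def hewitt_work(edges):
    """Count the units of work done by walking every edge once per edge."""
    work = 0
    for _ in edges:        # for every edge in E ...
        for _ in edges:    # ... walk across every edge in E
            work += 1      # one unit of work "c"
    return work


# Hypothetical edge lists of growing size; only their lengths matter here.
for size in (10, 100, 1000):
    edges = list(range(size))
    print(size, hewitt_work(edges))
```

Multiplying the number of edges by 10 multiplies the work by 100, which is the quadratic growth described above.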

0.2 Semiformal Introduction to Runtime

In computer science, we’re interested in solving big problems. So, when we talk about the runtime of an

algorithm, we don’t care about the constant c, we just care about how the algorithm’s runtime increases

as a proportion of the size of the problem. This may be hard to swallow, as intuitively, a lower constant

c seems like it should make a difference.

Consider, however, two algorithms, both of which look at a set of edges, E. Let’s say that there are

800 edges in E. So, |E| = 800. In a real-world setting, this is still quite small.

Runtime of Algorithm A: 1024 ∗ |E| = 1024 ∗ 800 = 819200

Runtime of Algorithm B: 2 ∗ |E|^2 = 2 ∗ 800 ∗ 800 = 1280000

Already, the linear-time algorithm A is much faster than the quadratic-time algorithm B, even though

its constant is much much larger. This is why we only care about runtime proportional to the size of the

problem. This is called the asymptotic runtime. Not sure what asymptotic means? Take calculus!
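As a quick check of the arithmetic, the following Python sketch evaluates both runtime formulas and searches for the smallest problem size at which Algorithm A becomes strictly faster. The function names are hypothetical; the constants 1024 and 2 come from the example above.

```python
def runtime_a(num_edges):
    """Linear-time Algorithm A: 1024 * |E|."""
    return 1024 * num_edges


def runtime_b(num_edges):
    """Quadratic-time Algorithm B: 2 * |E|^2."""
    return 2 * num_edges ** 2


def first_size_where_a_wins():
    """Smallest |E| for which A takes strictly less time than B."""
    size = 1
    while runtime_a(size) >= runtime_b(size):
        size += 1
    return size


print(runtime_a(800), runtime_b(800))
print(first_size_where_a_wins())
```

Below that crossover size the large constant makes A slower, but for every larger input A wins, and the gap keeps widening.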

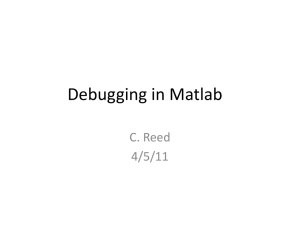

But also look at this diagram:

[Figure: runtime of algorithm (y-axis) plotted against size of problem (x-axis) for a quadratic algorithm and a linear algorithm. Graph from graph.tk.]

The units on this graph are embarrassingly missing, but its purpose is to give you an intuitive understanding of how much faster the runtime of a quadratic algorithm, like algorithm B, increases

compared with the runtime of a linear algorithm, like algorithm A. This is why we ignore the constant c

that we defined before, and just look at the class of the algorithm.

0.3 Formalizing remarks: Worst-case Runtime, Big-Oh Notation

Let’s generalize what we’ve seen about BFS and runtime, and take a look at what professional runtime

analysis looks like.

Given: Consider modifying our BFS algorithm such that it works on a disconnected graph. Whenever we finish a connected component, we just run the old algorithm on the next connected component

until all have been covered.

Problem: What is the runtime of this generalized BFS?

Solution: We, as computer scientists, want to give clients and each other a bound on how long the

algorithm will take to run. In other words, we want to say no matter how gross the input is, as long as

it’s valid, the algorithm will never run in an asymptotically worse time.

So, take the runtime of BFS as we’ve described it: |E|. What if our graph is 10 nodes and no edges?

Clearly, our new BFS will have to consider every node, even though none of them have any edges. Will

our algorithm still take |E| = 0 since it has no edges? Naturally, no. So, at worst, the algorithm takes

|V |+|E| time, since it has to look at every node as well as every edge, even if there are no edges connected

to a given node. This is the worst-case runtime. All graphs are either connected or not connected (duh)

and so this runtime of BFS describes the worst case – looking at every node and every edge. This is a

worst-case bound on the runtime.
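The generalized BFS can be sketched in Python as follows. This is one possible reading of "run the old algorithm on the next connected component", with an assumed adjacency-list input and a hypothetical function name; it returns separate counts for node visits and neighbor checks so the |V| and |E| contributions are visible.

```python
from collections import deque


def bfs_all_components(graph):
    """BFS over every connected component; return (node visits, neighbor checks)."""
    visited = set()
    node_visits = 0
    neighbor_checks = 0
    for start in graph:              # every node is looked at at least once
        if start in visited:
            continue                 # already covered by an earlier component
        visited.add(start)
        queue = deque([start])
        while queue:
            node = queue.popleft()
            node_visits += 1
            for neighbor in graph[node]:
                neighbor_checks += 1
                if neighbor not in visited:
                    visited.add(neighbor)
                    queue.append(neighbor)
    return node_visits, neighbor_checks


# The graph from the text: 10 nodes and no edges.
isolated = {node: [] for node in range(10)}
print(bfs_all_components(isolated))
```

With no edges the neighbor count is 0 but the node count is still 10, which is why the bound has to include |V| as well as |E|.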

0.3.1 Big-Oh Notation

Runtime is a big deal in computer science. I’ve given intuitive descriptions of how we categorize runtime,

now here’s the formality in all its college-level glory:

Problem-size: |V | is the number of nodes in a graph. |E| is the number of edges in a graph. n is the

number of elements in a set. (Say if we’re trying to sort a set of numbers into increasing order, we

say we have n numbers.)


Runtime function: A function, f(n), which describes the runtime of an algorithm.

Big-oh ... O(f(n)): Function f_1(n) is “big oh of” f_2(n) if there exist constants n_0 and c > 0 such that for

all n > n_0, f_1(n) < c ∗ f_2(n). This is written f_1(n) ∈ O(f_2(n)).

In other words, we’re saying that after a certain size of the problem (i.e. “for all n > n_0”), and after

multiplying by some constant c, f_1(n) will never take longer to run than f_2(n).
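As a worked illustration of the definition, consider the two runtimes from the Algorithm A and Algorithm B comparison, written as functions of n. The particular constants chosen below are just one valid choice among many, and the `\text` command assumes the amsmath package.

```latex
% Algorithm A: f_1(n) = 1024 n is in O(n). Take c = 1025 and n_0 = 0.
\[
  \text{for all } n > 0:\quad 1024\,n < 1025\,n = c \cdot n
  \quad\Longrightarrow\quad 1024\,n \in O(n).
\]
% Algorithm B: f_1(n) = 2 n^2 is not in O(n).
% For any fixed c > 0, the required bound fails once n >= c/2.
\[
  n \ge \tfrac{c}{2} \;\Longrightarrow\; 2n^2 \ge c \cdot n
  \quad\Longrightarrow\quad 2n^2 \notin O(n).
\]
```

So the large constant 1024 is absorbed into c, while no choice of c can rescue the quadratic function.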

0.4 Advanced example: Powers of 2

What differentiates a bad program from a good one? Spoiler alert: it’s math.

Problem: Given a positive integer, n, compute 2^n. In other words, if given n = 3, you should come

up with the answer 8. Describe, in English, how you would do this.

Solution: Here are 3 correct answers to the problem. However, 2 are pretty darn good ways of

doing it, while 1 is awful. After each answer is the equivalent answer in pseudocode.

Iterative : Start with a variable answer which equals 1. Check to see if n, the power of 2 to calculate,

is greater than 0. If it is, multiply answer by 2, subtract 1 from n, and check again. Once n reaches 0, return answer.

iterativeFunction(n):
    answer <-- 1
    while n is greater than 0, do
        answer <-- answer * 2
        n <-- n - 1
    return answer

Recursive : This one is pretty similar to Branching. Check to see if n, the power of 2 to calculate, is

greater than 1. If it isn’t, just return the answer 2. If it is, run the same program once, but ask it

to calculate one power of two less than originally asked. Then multiply this answer by 2 and return

it.

recursiveFunction(n):
    if n is greater than 1, do
        return 2 * recursiveFunction(n - 1)
    else if n is 1, do
        return 2

Branching : Check to see if n, the power of 2 to calculate, is greater than 1. If it isn’t, just return the

answer 2. If it is, here we get a bit complicated: run the same program twice, but ask them to

calculate one power of two less than originally asked. Then sum the two answers. This isn’t easy

stuff to grasp, but think about it this way: If you gave the input n = 3, then the function should

calculate 2^3. The function knows that n is greater than one, and so would compute 2^2 twice, and

then sum them. So, it would compute 2^2 + 2^2, which equals 2 ∗ 2^2, which is... 2^3. Oh dang,

that’s cool.

branchingFunction(n):
    if n is greater than 1, do
        return branchingFunction(n - 1) + branchingFunction(n - 1)
    else if n is 1, do
        return 2
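For readers who want to run the three approaches, here is one possible Python translation of the pseudocode. The function names are hypothetical, and, like the pseudocode, the sketch assumes n is a positive integer.

```python
def iterative_power(n):
    """Multiply by 2, n times, in a loop."""
    answer = 1
    while n > 0:
        answer *= 2
        n -= 1
    return answer


def recursive_power(n):
    """Ask once for 2^(n-1), then double it."""
    if n > 1:
        return 2 * recursive_power(n - 1)
    return 2  # base case: 2^1


def branching_power(n):
    """Ask twice for 2^(n-1), then add the two answers."""
    if n > 1:
        return branching_power(n - 1) + branching_power(n - 1)
    return 2  # base case: 2^1


print(iterative_power(3), recursive_power(3), branching_power(3))
```

All three return the same values; they differ only in how much work they do to get there.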


Which one of these is the offending function? Let’s work through them one-by-one.

Runtime of Iterative: Notice that the runtime of the algorithm is dependent on how many times

the while loop runs. Notice that it runs exactly as many times as the value of n. Inside the while loop,

we do one calculation – multiplying the value answer by 2. This amount of calculation is what we could

call c. Thus, the algorithm takes cn time, and formally, O(n) time.

Runtime of Recursive: Recursive algorithms are fun and tricky. Recursive pretty much means

“part of the work of the algorithm is running the same algorithm.” Whoa. In this case, the algorithm is

asked for the value of 2^n, and says “hm. I bet if I ask for the value of 2^(n−1) and then multiply it by 2,

I’ll get the right answer...” So it does. Once it is asked for 2^1, it says “oh, that’s easy”, and returns 2

to the version of itself that asked for it. Then that function multiplies by 2 and returns that... all the way

back up to the original function, which now has the value of 2^(n−1) and returns that times 2. Note that the

function will be called n times, each call doing a constant amount of work, so the runtime is O(n).

Runtime of Branching: This is also a recursive algorithm. The only difference is that instead of

asking for 2^(n−1) once and multiplying by 2, it asks for 2^(n−1) twice and just adds them. Surely this can’t

be a big deal – maybe addition is faster than multiplication!

However, consider this: when branchingFunction(n) is called, it calls 2 of itself to calculate the answer.

Then each of them call 2, and then each of those 4 call 2, and then each of those 8 call 2... uh oh. If each

function is doing c work, then I claim that the total work done is about c ∗ 2^n, which is O(2^n). That’s one awful, awful

function, since we know that powers of 2 can be computed in O(n). Take a look at this diagram for a

visual explanation of the three runtimes:
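The call counts can also be checked numerically. The Python sketch below is an illustration with hypothetical function names: each function returns both the computed power and the number of function calls that were made, so the linear and exponential growth can be compared directly.

```python
def recursive_calls(n):
    """Return (2^n, number of calls) for the single-call recursive method."""
    if n <= 1:
        return 2, 1
    value, calls = recursive_calls(n - 1)
    return 2 * value, calls + 1


def branching_calls(n):
    """Return (2^n, number of calls) for the two-call branching method."""
    if n <= 1:
        return 2, 1
    left_value, left_calls = branching_calls(n - 1)
    right_value, right_calls = branching_calls(n - 1)
    return left_value + right_value, 1 + left_calls + right_calls


for n in (1, 2, 3, 10):
    print(n, recursive_calls(n)[1], branching_calls(n)[1])
```

The recursive method makes n calls, while the branching method makes 2^n − 1 calls, which matches the O(n) and O(2^n) bounds argued above.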
