Using Surveys to Evaluate Sakai: Goals & Results


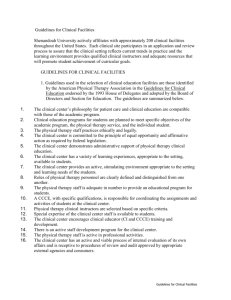

Moderator: Stephanie Teasley, University of Michigan
Panelists: Steve Lonn, University of Michigan; Salwa Khan, Texas State University; Jeff Narvid, University of California, Berkeley; Angelica Risquez, University of Limerick
Confluence Page: http://bugs.sakaiproject.org/confluence/pages/viewpage.action?pageId=18063

Goals/Purpose of This Session
• Discuss why feedback from users is important for implementing Sakai.
• Share general purposes of evaluations.
• Share common question types and categories.
• Share general survey statistics.
• Answer specific questions about evaluation using surveys and other methods.

Panel Participants
• Steve Lonn: CTools Usability, Support, and Evaluation (USE) Lab, University of Michigan
• Salwa Khan: TRACS Instructional Technologies Support, Texas State University
• Angelica Risquez: Sulis, Centre for Teaching and Learning, University of Limerick
• Jeff Narvid: bSpace Training & Support Team, University of California, Berkeley

Stage of Implementation
• Michigan (CTools)
   • Full production (about 45,000 users in Winter 2006)
   • Was running Sakai 2.0 during the survey (now running Sakai 2.1.2)
• Texas State (TRACS)
   • Pilot (Fall 05), Expanded Pilot (Spring 06), Voluntary Production (Fall 06)
   • Running Sakai 2.1
• Limerick (Sulis)
   • Pilot (Spring 2006), Pilot (Fall 06 to Spring 07)
   • Running Sakai 2.1.2
• Berkeley (bSpace)
   • Pilot (Fall 05), Pre-Production (Spring 06), Full Production (Fall 06)
   • Running Sakai 2.1.1

Purpose of Survey Evaluation
• Gather users’ demographic data
• Determine users’ level of computer proficiency
• Determine level of system usage
• Determine users’ perceptions of the system (ease of use, satisfaction, reliability, usefulness of tools)
• Determine users’ perception of system benefits
• Determine quality of support and users’ preferences in obtaining support
• Determine users’ desired improvements
• Determine users’ pain points and barriers to migration

General Survey Statistics: Michigan
• 1,357 instructor respondents (19% response rate)
   • All instructional faculty (including student instructors) invited to respond
• 2,485 student respondents (27% response rate)
   • 25% of students (stratified random sample) invited to respond
• Surveys administered online in April 2006 (end of winter semester)
   • Incentive: random drawing for four $100 gift certificates
• Surveys completed in 10-15 minutes, on average

General Survey Statistics: Texas State
• 11 faculty/staff respondents
   • Surveys completed in 15-20 minutes, on average
• 798 student respondents
   • Specific courses surveyed during class period
   • Surveys completed in 5-7 minutes, on average
• Surveys administered online for faculty/staff and on paper for students
   • End of semester

General Survey Statistics: Limerick
• 175 faculty/staff respondents (no questions related to Sakai)
   • All faculty/staff invited via email by the Dean of T&L; approx. 46% response rate
• 897 student responses (approx. 9% of the total population), 200 of them Sulis (Sakai) users (approx. 58% of Sulis users)
   • All undergraduate and taught postgraduate students invited via general email
   • Instructors using Sulis offered a voluntary evaluation of their students in a class setting
• Surveys administered online (www.markclass.com) and on paper (Sulis users)
• Completed in 10-20 minutes

General Survey Statistics: UC Berkeley
• Fall 2005: 15 faculty/staff respondents
• Spring 2006: 22 faculty/staff respondents
   • All faculty/staff invited via Message of the Day and an announcement on the bSpace community page
• Fall 2005: 101 student respondents
• Spring 2006: 110 student respondents
   • All students invited via Message of the Day and an announcement on the bSpace community page
• Surveys administered online (Zoomerang)
   • Administered at the end of the Fall and Spring semesters
   • Completed in 10 minutes, on average

Demographic Questions
• Gender
• Age
• Years teaching
• Year in school (students)
• Department / college
• etc.
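Michigan invited a 25% stratified random sample of students, and every site reports a response rate (respondents divided by the number invited). The following is a minimal Python sketch of both steps, assuming a hypothetical roster in which each student record carries a stratum field (here a made-up `school` key). The function names are illustrative only and are not taken from any CTools or Sakai tooling; the actual strata Michigan used are not stated in the slides.

```python
import random
from collections import defaultdict


def stratified_sample(population, stratum_of, fraction, seed=None):
    """Draw the same fraction of members from every stratum.

    population: list of records (hypothetical roster)
    stratum_of: function mapping a record to its stratum key (e.g. school)
    fraction:   share of each stratum to invite, between 0 and 1
    """
    if not 0 <= fraction <= 1:
        raise ValueError("fraction must be between 0 and 1")
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in population:
        strata[stratum_of(person)].append(person)
    sample = []
    for members in strata.values():
        # Sample without replacement inside each stratum so every group
        # is represented in proportion to its size.
        k = round(len(members) * fraction)
        sample.extend(rng.sample(members, k))
    return sample


def response_rate(respondents, invited):
    """Fraction of invitees who completed the survey."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return respondents / invited


# Hypothetical roster: 400 students spread evenly over four made-up schools.
students = [{"id": i, "school": f"school_{i % 4}"} for i in range(400)]
invited = stratified_sample(students, lambda s: s["school"], 0.25, seed=1)
print(len(invited))              # 100 students, 25 from each school
print(response_rate(25, 100))    # 0.25 if 25 of 100 invitees respond
```

Sampling within strata, rather than from the whole roster at once, guarantees that small schools or class years are not under-represented by chance, which matters when results are later broken down by college or year in program.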
Demographic Data
• Michigan: Asked college/school and students’ year in program
• Texas State: Asked gender, age group, years at Texas State, staff/faculty member
• Limerick: Asked gender, age, rural/urban, nationality, college, year of study, working relation, position, main activity in university
• UC Berkeley: N/A

General Computer Use & Proficiency
• Rate your expertise with computers
   • Novice, Intermediate, Advanced
• Rate expertise with other computer-based tools
• Use of / preference for information technology in your courses
   • None, Limited, Moderate, Extensive, Exclusive (Online Only)

General Computer Use & Proficiency: Michigan, Texas State, Limerick Results
[Chart: Computer expertise, instructors. Q: Rate your expertise with computers. Share of UM, TXSU, and UL instructors answering Novice, Intermediate, or Advanced.]

General Computer Use & Proficiency: Michigan, Texas State, Limerick Results
[Chart: Computer expertise, students. Q: Rate your expertise with computers. Share of UM, TXSU, and UL students answering Novice, Intermediate, or Advanced.]

Benefits of Using a System Like Sakai
• Most valuable benefit of using information technologies
   • Improved teaching/learning
   • Convenience
   • Manage course activities
   • Faculty-student communication
   • Student-student communication

Benefits of Using a System Like Sakai: Results
• Michigan
   • Instructors: Improved instructor-to-student communication (38%) and efficiency (23%)
   • Students: Efficiency (45%) and help managing course activities (17%)
• Texas State
   • Instructors: Most answered that it helped them manage course activities and communicate with students
• Limerick
   • Instructors: Improved instructor-to-student communication (31.4%) and improved students’ learning (29%)
   • Students (Sulis users): Managing activities (62%) and increased student-to-student communication (28.5%)
   • Students (non-Sulis users): Improved learning (27%) and faculty-student communication (19%)

Sakai Use
• How often do you visit your Sakai sites?
   • Stopped using, Few times a semester, Few times a month, Once a week, Few times a week, Daily (once or more)
• For how many courses have you used Sakai?
   • None, 1-2, 3-4, 5 or more

Sakai Use: Michigan, Texas State, Berkeley Results
[Chart: Q: (Instructors) How often do you visit your Sakai sites? Share of UM, TXSU, and UCB instructors answering Few Times a Semester, Few Times a Month, Weekly (Once or More), or Daily (or More).]

Sakai Use: Michigan, Texas State, Limerick, & Berkeley Results
[Chart: Q: (Students) How often do you visit your Sakai sites? Share of UM, TXSU, UL, and UCB students answering Few Times a Semester, Few Times a Month, Weekly (Once or More), or Daily (or More).]

Overall Perceptions of Sakai
• Overall dimensions of use
   • Frustrating – Satisfying
   • Difficult – Easy
   • Useless – Useful
   • Unreliable – Reliable
• Learning to use (Easy – Hard)
• Time to learn (Slow – Fast)
• Exploration of features (Risky – Safe)
• Tasks can be performed in a straightforward manner (Never – Always)
• Feedback on completion of steps (Unclear – Clear)

Overall Perceptions of Sakai: Reliability: Michigan & Texas State Results (Instructors)
[Chart: Share of UM and TXSU instructors rating Sakai Unreliable, Somewhat Unreliable, Neutral, Somewhat Reliable, or Reliable.]

Overall Perceptions of Sakai: Reliability: Michigan, Texas State & Limerick Results (Students)
[Chart: Share of UM, TXSU, and UL students rating Sakai Unreliable, Somewhat Unreliable, Neutral, Somewhat Reliable, or Reliable.]

Overall Perceptions of Sakai: Learning to Use: Michigan & Texas State Results (Instructors)
[Chart: Share of UM and TXSU instructors rating learning to use Sakai Difficult, Somewhat Difficult, Neutral, Somewhat Easy, or Easy.]

Overall Perceptions of Sakai: Learning to Use: Michigan, Texas State & Limerick Results (Students)
[Chart: Share of UM, TXSU, and UL students rating learning to use Sakai Difficult, Somewhat Difficult, Neutral, Somewhat Easy, or Easy.]

Specific Uses of Sakai Tools/System
• Announcements
• Assignments (submission & grading)
• Exams & quizzes (sample & real)
• Online folders for student work (Drop Box)
• Peer review
• Posted questions for students
• Resources / online readings & materials
• Schedule / online calendar
• Syllabus
• Other tools / uses

Specific Uses of Sakai Tools/System: Online Readings, Documents (Resources): Instructors
[Chart: Share of UM, TXSU, and UCB instructors who Have Not Used the Resources tool, found it Not Valuable, or found it Valuable/Very Valuable/Used.]

Specific Uses of Sakai Tools/System: Online Readings, Documents (Resources): Students
[Chart: Share of UM, TXSU, UL, and UCB students who Have Not Used the Resources tool, found it Not Valuable, or found it Valuable/Very Valuable.]

Specific Uses of Sakai Tools/System: Calendar / Schedule Tool: Instructors
[Chart: Share of UM and TXSU instructors who Have Not Used the Calendar/Schedule tool, found it Not Valuable, or found it Valuable/Very Valuable.]

Specific Uses of Sakai Tools/System: Calendar / Schedule Tool: Students
[Chart: Share of UM, TXSU, UL, and UCB students who Have Not Used the Calendar/Schedule tool, found it Not Valuable, or found it Valuable/Very Valuable.]

Level / Quality of Training & Support
• Most effective way to get help
   • Colleague/classmate/friend
   • Online help documentation
   • Keep trying on my own
   • Email support
   • Call support
   • Department IT staff
   • Others (campus-specific)
• Satisfaction with quality of support
   • Very satisfied to very dissatisfied
   • Can be broken up into different categories: timeliness, quality of response, friendliness

Level / Quality of Training & Support: Most Common Responses (Instructors)
[Chart: Share of UM, TXSU, UL, and UCB instructors choosing Ask Colleague/Friend, Help Docs, Keep Trying on Own, or Email/Call Support.]

Level / Quality of Training & Support: Most Common Responses (Students)
[Chart: Share of UM, TXSU, and UL students choosing Ask Colleague/Friend, Help Docs, Keep Trying on Own, or Email/Call Support.]

Attitudes / Suggestions for Improvement
• Most important improvement
• Importance of potential / new tools

Attitudes / Suggestions for Improvement: Michigan Results
• Importance of potential CTools features
   • Helps identify which tools in the Sakai pipeline might be most beneficial to our users (we were using Sakai 2.0 when surveyed)
   • Compared with qualitative data and comments from the support queue
• Most important tools for users:
   • Instructors: Gradebook, New Discussion Tool, Group Control
   • Students: Gradebook, Tests & Quizzes, New Discussion Tool, Group Control
• Least important tools for users:
   • Instructors: Concept Mapping, Podcasting, Blog
   • Students: ePortfolio, Content Authoring, Blog

Attitudes / Suggestions for Improvement: Limerick Results
• Faculty:
   • Solve problems with Tests & Quizzes; make the discussion tool more intuitive (qualitative)
   • Most important motivators: more information about the system and successful teaching practices (22.3%); training and support (17.1%); and added teaching and learning value (10.3%)
• Students (Sulis users):
   • Faster performance and fewer problems with file upload (22.4%); improved design (21.7%); and wide adoption across campus (15.5%)

Attitudes / Suggestions for Improvement: Berkeley Results
• Improve the discussion board
• Add file upload capability
• Improve the interface
• Add customization options (image on home page, customization of synoptic view, reordering of tools and resources, etc.)

Barriers to Use / Pain Points
• Changes that you feel need to be made
• List of potential barriers (skills, time, etc.)
• Would you recommend Sakai to a colleague?
• What would deter you from using Sakai?

Barriers to Use / Pain Points: Results
• Michigan
   • Instructors: Gradebook and service integration wanted
   • Students: Consistency of site usage and UI changes wanted
• Texas State
   • Some instructors report that they don’t have the time or the skills
   • Need the ability to set availability dates for documents
   • Need the ability to set the order of documents and to include a visible description of documents
• Limerick
   • Preserve control over course administration while integrating with other IS
   • (qualitative) Two most important barriers: the need to free up time to get training and devote to design (24%), and not knowing or understanding the teaching implications of using a CMS (17%)
• Berkeley
   • Discussion board!
   • Bug with roster data (affecting gradebook & site info)
   • User interface ("reset arrow", font size, etc.)
   • Transition issues from Blackboard to bSpace

Other Methods for Evaluation
• 1:1 interactions
• Support queue
• Use logs
• Online help documentation tracking
• Training session feedback

Questions?

Contact Information
• Stephanie Teasley: CTools, University of Michigan, steasley@umich.edu
• Steve Lonn: CTools, University of Michigan, slonn@umich.edu
• Salwa Khan: TRACS, Texas State University, sk16@txstate.edu
• Jeff Narvid: bSpace, University of California, Berkeley, jeffn@media.berkeley.edu
• Angelica Risquez: Sulis, University of Limerick, angelica.risquez@ul.ie
• Confluence Page: http://bugs.sakaiproject.org/confluence/pages/viewpage.action?pageId=18063