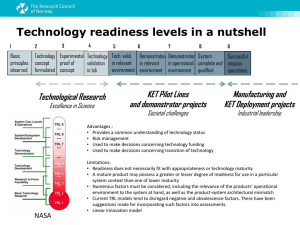

Technology Readiness Assessment (TRA) Deskbook
