Chapter 2

advertisement

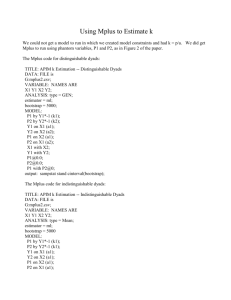

Chapter 2 GD 18/01/09 Writing a simple Mplus program: learning the language 2.1 INTRODUCTION In this chapter we introduce you to the basic and most commonly used commands in Mplus. We make no attempt to be comprehensive – for most analyses, only a small subset of the Mplus commands is needed. Once you have got used to the limited number of commands introduced here it will then be relatively simple to build up your repertoire as you proceed through the book or by consulting the Mplus User’s Guide. We illustrate the use of the Mplus commands by slowly building an input file to fit a simple factor model to the string measurements given in Display 1.1. After reading this chapter you should have an understanding of the basic vocabulary and syntax of Mplus and, hopefully, be able to put together your own input files. You should also be able to read and understand simple input files prepared by other Mplus users, together with the output produced by executing the commands in the Mplus programs. 2.2 RUNNING Mplus Mplus runs as a batch program. This means that in order to fit a model we have to use the Mplus Editor to set up an input file containing the appropriate variable definitions and model-fitting commands. Execution of the input file using RUN then leads to the creation of an output file that can then be examined using the Editor at leasure. Prior to execution, the input file will be saved using a user-specified file name such as string.inp, and the corresponding output file produced by Mplus will automatically be called string.out. Typically, the input file will refer to a third file containing the data (string.dat, for example). 2.3 CREATING AN INPUT FILE Having entered the Mplus Editor, the first step is either to open an existing input file or to create a new one from scratch (it is nearly always more convenient to proceed by editing and existing input file, if available). Our first example is shown in Display 2.1. Note that although we have used words or phrases typed with either upper case letters (TITLE, for example) or lower case letters (type=general, for example), Mplus makes no distinction between upper and lower case letters. We have introduced these differences here in order to facilitate the explanations of what we are describing and asking Mplus to do. There are ten Mplus commands, most of which are optional: TITLE DATA VARIABLE DEFINE ANALYSIS (Optional) (Required) (Required) (Optional) (Optional) 1 MODEL OUTPUT SAVEDATA PLOT MONTECARLO (Optional) (Optional) (Optional) (Optional) (Optional) The DATA and VARIABLE commands are required in all analyses. Our simple example in Display 2.1 uses TITLE, DATA, VARIABLE, ANALYSIS, MODEL and OUTPUT. Note that Mplus commands can come in any order. All commands should begin on a new line (the fact that there blank lines in our input file is ignored by Mplus – these have been introduced for clarity) and end with a colon (:). The words and phrases printed in lower case letters in our input file are the command options. Semicolons (;) separate command options. There can be more than one option per line, but we have put them on separate lines to improve clarity. Commands and options can be shortened to four or more letters for convenience, but we have not done so in our example. Any text on a line to the right of an exclamation mark (!) is ignored by Mplus and can therefore be used to provide explanatory comments. Finally, note that the maximum line length permitted within Mplus is 80 characters. 2.4 PROGRAM INPUT It is easy to be overwhelmed by the many possibilities in a programme such as Mplus. There are very many options (a great strength of Mplus) and the more advanced ones can be a source of confusion, particularly for the beginner. We start, therefore, by describing the ten key commands, together with the most commonly used options. The latter are not always necessary in any given run (Mplus uses a sensible set of defaults) but it is probably a good idea for the beginner (and anyone else for that matter) to get into the habit of making the essential options explicit. It makes it easier for a third party to understand what has been done, if nothing else. We will now describe the ten commands in turn, again indicating which are optional by the addition of ‘Optional’ in brackets where appropriate. TITLE: (Optional) The TITLE command is a way in which you can describe the analysis. The content can be anything you like! DATA: The DATA command provides information about the data set to be used in the analysis. In our example (Display 2.1) we have provided the option ‘file is string.dat;’. The string.dat file contains the following: 6.3 4.1 5.1 5.0 5.0 3.2 3.6 4.5 4.8 3.1 3.8 4.1 6.0 3.5 4.5 4.3 2 5.7 3.3 1.3 5.8 2.8 6.7 1.5 2.1 4.6 7.6 2.5 4.0 2.5 1.7 4.8 2.4 5.2 1.2 1.8 3.4 6.0 2.2 5.2 2.8 1.4 4.2 2.0 5.3 1.1 1.6 4.1 6.3 1.6 5.0 2.6 1.6 5.5 2.1 6.0 1.2 1.8 3.9 6.5 2.0 This is a raw data file (i.e. free format, containing each of the individual observations or measurements, obtained from a single sample or group of individuals), which could have been explicitly specified as ‘format=free; type=individual; ngroups=1;’. For once, we have ignored our own recommendation to make all options explicit! It is possible to read a data from two or more groups of observations and it is also possible to read summary statistics (covariances or correlations, for example) rather than the raw data themselves (an attractive option for secondary analyses of published data that are only available in this form). We refer you to the Mplus User’s Guide for these options (although some of them will be used later in the present book). VARIABLE: The VARIABLE command specifies the characteristics of the variables in the data set to be analysed. First we need to provide names (in order) of all the variables in the data file. Those in string.dat are defined by the option ‘names are ruler graham brian andrew;’ (see Display 2.1). Next we need to list the variables that are to be used in any given analysis. The input program listed in Display 2.1 is for a simple linear regression of Graham’s guesses on the measurements provided by the ruler (we are not fitting a model involving the guesses provided by brian or Andrew). Here we specify the option ‘usevariables ruler graham’. Other commonly-used options within the VARIABLE command are those used to define missing values and to distinguish binary/ordinal variables from quantitative ones. The option ‘missing are ruler(99);’, for example, would tell Mplus that the code 99 is being used to identify missing values for the measurements provided by the ruler (but there are none in our data set). In a typical social science data set we might have a variable called ‘sex’ with values of 1 (male) or 2 (female). Here we would specify the option ‘categorical are sex;’ (we do not have to specify the actual codes for the categories). DEFINE: (Optional) This command is used to transform existing variables or to create new ones. ANALYSIS: (Optional) 3 This is used to provide the technical details of the analysis. Typically, we use ‘type=general; estimator=ml;’ as a default. But we do not always use this option. If we were to fit a latent class (finite mixture) model, for example, then we would use ‘type=general; estimator=ml;’. Quite often we might wish to boostrapped standard errors (and confidence intervals – see the OUTPUT command, below). If so, we add, for example, ‘bootstrap=1000;’ (asking for 1000 bootstrapped samples – this number being specified by the user). MODEL: (Optional) This is used to describe details of the model to be estimated. The simple regression in Display 2.1, for example, is specified by ‘graham on ruler;’. A single factor model for the three guesses of string length would be described by ‘factor by graham brian andrew;’ (the new name for the latent variable ‘factor’ being provided by the investigator). It is important to carefully distinguish the use of ‘on’ (in a regression model) from ‘by’ (in a measurement or factor analysis model). Other options will be described as we come across them. OUTPUT: (Optional) Mplus produces a lot of output by default! But if you want more then there is plenty available. Particularly useful options here are ‘sampstat;’ (producing simple summary statistics for the variables being analysed, ‘standardized;’ (producing a variety of standardised parameter estimates), and ‘cinterval;’ or possibly ‘cinterval(bootstrap);’ (producing confidence intervals for the parameters – the latter using bootstrap sampling, used in association with ‘bootstrap=1000;’ in the ANALYSIS command). The use of ‘residual;’ produces residuals for to aid examination of the adequacy of the model. SAVEDATA (Optional) This command is used to save data of various sorts and a variety of analysis results. PLOT (Optional) This provides graphical displays of both observed data and analysis results. MONTECARLO (Optional) The final command allows us to specify Monte Carlo simulation studies. 2.5 PUTTING IT ALL TOGETHER You have already seen Display 2.1. An example of an input file containing the commands for a simple factor analysis of the string data (excluding the ruler) is shown in Display 2.2. Apart from its title, the second input file is the same as the first until we get to 4 ‘usevariables graham brian andrew;’. The next difference comes in the MODEL command where we specify ‘tlength by graham brian andrew;’ (tlength being a latent variable or factor). We have added four lines of comment (partly to illustrate the use of comments to annotate an input file and partly to explain where the name ‘tlength’ has come from – we could just as easily used variable names such as ‘f’ or ‘f1’). 2.6 THE OUTPUT FILE Running the program input file in Display 2.2 produces several pages of output. We have split this output into four consecutive sections (Displays 2.3 to 2.6). We start with Display 2.3. This is just a reprint of the initial input file but with the added line at the bottom ‘INPUT READING TERMINATED NORMALLY’. This is a good sign! Display 2.4 provides a summary of the analysis that has been requested. There are 15 observations (i.e. 15 pieces of string) from a single sample (number of groups is equal to 1). There are three dependent variables (graham, brian and andrew) and a single continuous latent variable (tlength). The estimation procedure is maximum likelihood (ml) – we’ll ignore the other technical details. Finally, at the bottom of this display are the specifically requested summary statistics – sample means, covariances and correlations. The key statement at the top of Display 2.5 is ‘THE MODEL ESTIMATION TERMINATED NORMALLY’. If this is not seen in your own output files (i.e. it is replaced by some sort or error message or warning), or if is accompanied by a warning or error message, then it is a sign that something may have gone wrong. Display 2.5 provides several indicators of model fit. For the time being we will ignore all of these except the first two chi-square tests. The first one (labelled ‘Chi-Square Test of Model Fit’ provides us with test of whether the model fits the observed data (the latter being the observed means and covariance matrix). The chi-square given is zero with zero degrees of freedom. You will remember that this particular model (a single factor model for three measurements) is just identified and therefore fits the data perfectly. Ignore the P-Value given with this test – it is not defined for zero degrees of freedom (it is not zero!). More interesting chi-square tests will arise when we begin to introduce model constraints (see next chapter). The next chi-square test – the one labeled ‘ChiSquare Test of Model Fit for the Baseline Model’ provides a test for the correlations between the three guesses of string length. The baseline model specifies that these three correlations (hence three degrees of freedom) are all equal to zero. Luckily the baseline model does not fit (P-Value<0.001)! A low chi-square value for the baseline model would have implied that there were no associations to model. Now we get to the interesting bit of the output – the parameter estimates (Display 2.6). This part of the outcome consists of a table with four columns. For each parameter there is the estimated value (except when it is subject to a constraint), its standard error, the ratio of the estimate to its standard error, and, finally, a two-tailed P-value based on this 5 ratio. Note that this P-Value is only of any use if the interesting null hypothesis is the one that specifies that the true value of the parameter is zero. We start with the estimated factor loadings (regression coefficients reflecting the linear dependence of the guesses on the unknown factor). That for graham is constrained to be 1 by default (this sets the scale of measurement). The loadings for brian and andrew are then interpreted as systematic relative biases of theses two sets of guesses with respect to those of graham. Both Brian and Andrew produce higher guesses (on average) than does Graham. Note that the default value for the mean of the latent variable (tlength) is zero (again helping to determine the scale of measurement). The estimated intercept terms (i.e. the mean values of the guesses when factor=0) for the three guesses are all very similar. We then get an estimated value for the variance of factor, together with the estimated error variances for the three sets of guesses. Remember that a relatively large error variance implies low precision. Taken at face value, the results appear to indicate that andrew has the greatest precision, followed by graham, then brian. But we should be careful. We have not yet tried a formal test of the equality of the three error variances. An, in fact, that test would only make sense if we’d already established a common value for the three factor loadings (i.e. a common scale of measurement). We’ll return to this problem in Chapter 3. Now let’s think about the reported P-values. When we a looking at the estimated factor loadings the null hypothesis of interest is equality across the three sets of guesses. We are not interested in a value of zero (this would be equivalent to asking whether a particular set of guesses were correlated with the measurements provided by the ruler. We know they are! Similarly, we are not interested in testing whether the intercepts are zero (not, at least when the model has been specified so that the mean of factor is zero). Finally, we know that string lengths are not guessed without random measurement error, so the Pvalues for the three error variances are of no real interest to us here. The take home message is that you should not automatically be searching for and interpreting P-Values associated with the parameter estimates. They will, however, frequently be of great interest, but not always. They have no interesting function in the present output. We will return to these string data in the next Chapter to illustrate how you might set up a series sensible hypotheses based on a slightly different specification of the basic measurement model (we’ll even acknowledge that we’ve used a ruler). 2.7 SUMMARY We have described the function of the basic building blocks for the construction of an Mplus input file. We have then described how to put them together and illustrated the ideas through fitting a simple measurement (factor analysis) model to the guesses of string length described in Chapter 1. We have also described how to read and interpret the essential components of the resulting output file. You should now be in a position to start running simple Mplus jobs, at least for the simpler regression and factor analysis models. The next chapter will describe this model-fitting activity in a bit more detail. 6 Display 2.1 A simple Mplus input file TITLE: Simple bivariate regression of Graham's guesses on measuremetns provided by the ruler - using date from Display 1.1 DATA: file is string.dat; VARIABLE: names are ruler graham brian andrew; usevariables ruler graham; ANALYSIS: type=general; estimator=ml; MODEL: graham on ruler; OUTPUT: sampstat; 7 DISPLAY 2.2 A simple factor analysis TITLE: Single common factor model Factor indicated by guesses of Graham, Brian and Andrew - using data from Display 1.1 DATA: file is string.dat; VARIABLE: names are ruler graham brian andrew; usevariables graham brian andrew; ANALYSIS: type=general; estimator=ml; MODEL: ! ! ! ! ! OUTPUT: tlength by graham brian andrew; tlength (i.e. true length) is a new name provided by the analyst, labelling the latent variable explaining the covariance between the measured (manifest) variables sampstat; 8 DISPLAY 2.3 The start of an output file INPUT INSTRUCTIONS TITLE: Single common factor model Factor indicated by guesses of Graham, Brian and Andrew - using data from Display 1.1 DATA: file is string.dat; VARIABLE: names are ruler graham brian andrew; usevariables graham brian andrew; ANALYSIS: type=general; estimator=ml; MODEL: ! ! ! ! ! OUTPUT: tlength by graham brian andrew; tlength (i.e. true length) is a new name provided by the analyst, labelling the latent variable explaining the covariance between the measured (manifest) variables sampstat; INPUT READING TERMINATED NORMALLY 9 DISPLAY 2.4 The next bit of output – the model and the data Single common factor model Factor indicated by guesses of Graham, Brian and Andrew - using data from Display 1.1 SUMMARY OF ANALYSIS Number of groups Number of observations 1 15 Number of dependent variables Number of independent variables Number of continuous latent variables 3 0 1 Observed dependent variables Continuous GRAHAM BRIAN ANDREW Continuous latent variables TLENGTH Estimator Information matrix Maximum number of iterations Convergence criterion Maximum number of steepest descent iterations ML OBSERVED 1000 0.500D-04 20 Input data file(s) string.dat Input data format FREE SAMPLE STATISTICS SAMPLE STATISTICS 1 Means GRAHAM ________ 3.433 GRAHAM BRIAN ANDREW Covariances GRAHAM ________ 1.980 2.116 2.398 GRAHAM BRIAN ANDREW Correlations GRAHAM ________ 1.000 0.955 0.981 BRIAN ________ 3.427 ANDREW ________ 3.767 BRIAN ________ ANDREW ________ 2.478 2.649 BRIAN ________ 1.000 0.968 3.020 ANDREW ________ 1.000 10 DISPLAY 2.5 The output file – the fit of the model THE MODEL ESTIMATION TERMINATED NORMALLY TESTS OF MODEL FIT Chi-Square Test of Model Fit Value Degrees of Freedom P-Value 0.000 0 0.0000 Chi-Square Test of Model Fit for the Baseline Model Value Degrees of Freedom P-Value 90.842 3 0.0000 CFI/TLI CFI TLI 1.000 1.000 Loglikelihood H0 Value H1 Value -38.647 -38.647 Information Criteria Number of Free Parameters Akaike (AIC) Bayesian (BIC) Sample-Size Adjusted BIC (n* = (n + 2) / 24) 9 95.294 101.667 74.191 RMSEA (Root Mean Square Error Of Approximation) Estimate 90 Percent C.I. Probability RMSEA <= .05 0.000 0.000 0.000 0.000 SRMR (Standardized Root Mean Square Residual) Value 0.000 11 DISPLAY 2.6 The output file – the parameter estimates MODEL RESULTS Two-Tailed P-Value Estimate S.E. Est./S.E. TLENGTH BY GRAHAM BRIAN ANDREW 1.000 1.105 1.252 0.000 0.088 0.066 999.000 12.612 18.934 999.000 0.000 0.000 Intercepts GRAHAM BRIAN ANDREW 3.433 3.427 3.767 0.363 0.406 0.449 9.451 8.431 8.395 0.000 0.000 0.000 Variances TLENGTH 1.915 0.723 2.649 0.008 Residual Variances GRAHAM BRIAN ANDREW 0.064 0.141 0.018 0.034 0.060 0.040 1.873 2.352 0.442 0.061 0.019 0.658 QUALITY OF NUMERICAL RESULTS Condition Number for the Information Matrix (ratio of smallest to largest eigenvalue) 0.968E-03 12