Rational agents - Santa Clara University


Intelligent Agents

Artificial Intelligence

Santa Clara University 2016

Agents and Environments

• Agents interact with environments through sensors and actuators

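[Figure: the agent receives percepts from the environment through its sensors and performs actions on the environment through its actuators; the "?" marks the agent program that maps percepts to actions]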

Vacuum Cleaner World

[Figure: two rooms, A and B]

Percept sequence             Action
{A, Clean}                   Right
{A, Dirty}                   Suck
{B, Clean}                   Left
{A, Clean}, {A, Clean}       Right
{A, Clean}, {A, Dirty}       Suck
…                            …

Rationality

• What is rational depends on
  • The performance measure that defines the criterion of success
  • The agent’s prior knowledge of the environment
  • The actions that the agent can perform
  • The agent’s percept sequence to date

Rationality

• Definition of a rational agent
  • For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has

Vacuum Cleaner World

• Performance measure:
  • One point for each clean room over a lifetime of 10000 time steps
• Geography is known a priori, but not the dirt distribution and location
• The only actions are left, right, and suck
• The agent correctly perceives its location and whether the location is dirty
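The setup above can be simulated in a few lines. This is a minimal sketch, not the course's reference code: the names `run` and `reflex_agent`, the dictionary layout, and the default starting room are assumptions for illustration. It awards one point per clean room at every time step, which is one reading of the performance measure.

```python
def reflex_agent(location, status):
    """Hypothetical agent: suck if dirty, otherwise move to the other room."""
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(agent, dirt, location="A", steps=10000):
    """Simulate the two-room world and return the accumulated score.

    dirt maps a room name to "Clean" or "Dirty". One point is awarded
    for each clean room at each time step (an assumed reading of the
    performance measure).
    """
    dirt = dict(dirt)  # copy so the caller's dictionary is not modified
    score = 0
    for _ in range(steps):
        action = agent(location, dirt[location])
        if action == "Suck":
            dirt[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
        score += sum(1 for s in dirt.values() if s == "Clean")
    return score


# Shortened run: both rooms start dirty, the agent starts in A.
print(run(reflex_agent, {"A": "Dirty", "B": "Dirty"}, steps=10))  # prints 18
```

In the shortened run the agent needs three steps to clean both rooms (Suck, Right, Suck) and collects two points per step afterwards.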

Rationality

• Rational ≠ Omniscient
• Rational ≠ Perfect
• Information gathering is part of being rational
• An agent needs to learn from its percepts
• Autonomy is limited by previous built-in knowledge

Task environment

• Specify
  • Performance
  • Environment
  • Actuators
  • Sensors
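As an illustration, the four parts of a task environment can be written down as a small record. The sketch below fills it in for the vacuum cleaner world using only what the earlier slides state; the class name `PEAS` and its field names are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PEAS:
    """Hypothetical container for a task environment description."""
    performance: str
    environment: str
    actuators: tuple
    sensors: tuple


# Vacuum cleaner world, as described on the earlier slides.
vacuum_world = PEAS(
    performance="one point for each clean room over 10000 time steps",
    environment="two rooms, A and B; dirt distribution not known in advance",
    actuators=("Left", "Right", "Suck"),
    sensors=("location", "dirt"),
)

print(vacuum_world.actuators)
```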

Task environment

• Group quiz:
• Specify PEAS for
  1. an automated taxi
  2. an interactive English tutor
  3. a medical diagnostic system

Task environment

• Fully observable vs. partially observable
• Single agent vs. multi agent
• Deterministic vs. stochastic
• Episodic vs. sequential
• Static vs. dynamic
• Discrete vs. continuous
• Known vs. unknown
  • Agent’s knowledge about the laws of the environment

Structure of Agents

• Agent = Architecture + Program

Table Driven Agent

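class Agent:
    # Shared lookup table: maps a whole percept sequence (as a tuple) to an action.
    bigTable = {}

    def __init__(self):
        # Percept sequence observed so far.
        self.percepts = []

    def action(self, percept):
        self.percepts.append(percept)
        # Look up the action for the complete sequence, not just the latest percept.
        return Agent.bigTable[tuple(self.percepts)]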

Cannot be an efficient implementation because the table size is too big
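A runnable sketch of the table-driven idea, filled with the partial table from the vacuum cleaner world slide, shows both the lookup and why the table grows so fast. The class name `TableDrivenAgent`, the helper `table_size`, and the choice to return `None` for a missing entry are assumptions made for this example.

```python
class TableDrivenAgent:
    """Looks up the action for the entire percept sequence seen so far."""

    def __init__(self, table):
        self.table = table   # maps a tuple of percepts to an action
        self.percepts = []   # percept sequence to date

    def action(self, percept):
        self.percepts.append(percept)
        # Return None when the sequence is missing from the (partial) table.
        return self.table.get(tuple(self.percepts))


# Partial table taken from the vacuum cleaner world slide.
table = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("A", "Clean"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("A", "Dirty")): "Suck",
}


def table_size(num_percepts, lifetime):
    """Entries needed: one per percept sequence of length 1..lifetime."""
    return sum(num_percepts ** t for t in range(1, lifetime + 1))


agent = TableDrivenAgent(table)
print(agent.action(("A", "Clean")))  # Right
print(agent.action(("A", "Dirty")))  # Suck
print(table_size(4, 3))              # 4 + 16 + 64 = 84
```

With four possible percepts, three time steps already need 84 entries; a lifetime of 10000 steps needs a number of entries with thousands of digits.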

Agent Architecture

• Alternatives
  • Simple reflex agent
    • Agent reacts to current percept
  • Model based agent
    • Agent maintains a model of its world
  • Goal based agent
    • Agent uses goals in order to plan actions
  • Utility based agent
    • Agent evaluates goals using a utility function
  • Learning agents
    • Agents change program through learning

Reflex agent

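[Figure: reflex agent. Sensors feed "What the world is like now", which also draws on State, "How the world evolves", and "What my actions do"; Condition-action rules determine "What action I should do now", which drives the Actuators acting on the Environment]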

Reflex Agents

• Reflex agents do not maintain history
• Action is only based on current percept
  • Autonomous taxi cannot distinguish for sure between a blinking light and a constant light
• Can enter into an infinite loop
  • Vacuum in the vacuum world that only knows whether the room is dirty
    • When the room is clean, should it go left or right?
• Can use randomization to escape loops, but then the agent cannot be perfectly rational
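The two vacuum variants discussed above can be sketched as plain functions of the current percept. This is an illustrative sketch; the function names and the `rng` parameter are assumptions, not part of the slides.

```python
import random


def location_aware_agent(percept):
    """Reflex agent that perceives (location, status)."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def dirt_only_agent(status, rng=random):
    """Reflex agent that perceives only the dirt status.

    Without location information, a fixed choice (always "Left" or
    always "Right") can leave the agent pushing against a wall forever,
    so it picks a direction at random when the room is clean.
    """
    if status == "Dirty":
        return "Suck"
    return rng.choice(["Left", "Right"])


print(location_aware_agent(("A", "Clean")))  # Right
print(dirt_only_agent("Dirty"))              # Suck
```

Neither function keeps any history: calling it twice with the same percept applies the same rule, and only the random choice can differ.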

Reflex Agent

• Maintains a state
  • Uses percepts and own actions to update state
  • State reflects the agent’s guess of what the world is like
  • State also reflects the current goal of the agent
    • E.g.: autonomous taxi behaves differently at the same location depending on whether it wants to go to the garage for maintenance or whether it wants to pick up clients.
  • States can be atomic, factored or structured
• Matches a set of rules against the state
• Action taken based on the selected rule
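A state-keeping reflex agent of this kind can be sketched for the vacuum that senses only dirt. The sketch assumes the agent knows its starting room and that moves always succeed; the class and method names are made up for this example.

```python
class ModelBasedVacuumAgent:
    """Perceives only dirt; tracks its believed location as internal state."""

    def __init__(self, start="A"):
        # Assumption: the starting room is part of the built-in knowledge.
        self.location = start
        self.status = None
        self.last_action = None

    def update_state(self, status):
        # "What my actions do": a move changes the room the agent is in.
        if self.last_action == "Right":
            self.location = "B"
        elif self.last_action == "Left":
            self.location = "A"
        self.status = status

    def action(self, status):
        self.update_state(status)
        # Rules are matched against the state, not against the raw percept.
        if self.status == "Dirty":
            act = "Suck"
        elif self.location == "A":
            act = "Right"
        else:
            act = "Left"
        self.last_action = act
        return act


agent = ModelBasedVacuumAgent()
print([agent.action(s) for s in ["Dirty", "Clean", "Dirty", "Clean"]])
# ['Suck', 'Right', 'Suck', 'Left']
```

Because the believed location is updated from the agent's own actions, the question of whether to go left or right in a clean room has a definite answer without any location sensor.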

Goal based agents

• Instead of trying to build goals into states (and explode the state space)
  • Maintain goals explicitly
• In a given state, evaluate the actions that can be done and how they lead towards goals
  • AI topics: Searching & Planning
• Less efficient than reflex agents but more flexible
  • New knowledge can be used more easily
  • E.g. Autonomous taxi in rain: Braking now works differently
  • Reflex agent would need to rewrite many condition-action rules
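To illustrate how an explicit goal plus a model of the actions replaces hand-written rules, here is a minimal sketch that plans in the vacuum world with breadth-first search. The state encoding `(location, a_dirty, b_dirty)` and the function names are assumptions for this example; breadth-first search is just one possible search method.

```python
from collections import deque


def result(state, action):
    """Transition model: state is (location, a_dirty, b_dirty)."""
    location, a_dirty, b_dirty = state
    if action == "Suck":
        if location == "A":
            return (location, False, b_dirty)
        return (location, a_dirty, False)
    if action == "Right":
        return ("B", a_dirty, b_dirty)
    return ("A", a_dirty, b_dirty)  # "Left"


def goal_test(state):
    """Explicit goal: no room is dirty."""
    return not state[1] and not state[2]


def plan(start):
    """Breadth-first search for a shortest action sequence to a goal state."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if goal_test(state):
            return actions
        for action in ("Suck", "Right", "Left"):
            nxt = result(state, action)
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None


print(plan(("A", True, True)))  # ['Suck', 'Right', 'Suck']
```

If the world changes, only `result` or `goal_test` needs to be edited; the planner itself stays the same, which is the flexibility the slide refers to.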

Goal based agent

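[Figure: goal based agent. Sensors feed "What the world is like now", which draws on State, "How the world evolves", and "What my actions do"; the agent predicts "What it will be like if I do action A" and uses its Goals to decide "What action I should do now", which drives the Actuators acting on the Environment]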

Utility based agent

• Goals are binary:
  • You are in a good state or in a bad state
• Reality is more complex:
  • Autonomous taxi might deliver you to where you want to go, but you also want to minimize costs
• Utility measures how good a goal state is
• Utility based agents try to maximize the expected utility of the action outcomes
• One can show that a rational agent must behave as if it possesses a utility function whose expected value it tries to maximize
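Choosing the action with the highest expected utility can be sketched as follows. The taxi routes, probabilities, costs, and the utility function are all made-up numbers for illustration; only the rule "pick the action that maximizes expected utility" comes from the slide.

```python
def expected_utility(outcomes, utility):
    """outcomes is a list of (probability, state) pairs for one action."""
    return sum(p * utility(state) for p, state in outcomes)


def best_action(actions, utility):
    """Pick the action whose outcomes have the highest expected utility."""
    return max(actions, key=lambda a: expected_utility(actions[a], utility))


# Hypothetical taxi example: both routes reach the destination (the goal),
# but they differ in cost. States are (arrived, cost) pairs.
routes = {
    "highway": [(0.8, (True, 20)), (0.2, (True, 45))],  # 20% chance of a jam
    "side_streets": [(1.0, (True, 30))],
}


def utility(state):
    """Made-up utility: reward for arriving minus the cost of the trip."""
    arrived, cost = state
    return (100 if arrived else 0) - cost


print(best_action(routes, utility))  # highway (expected utility 75 vs. 70)
```

A purely goal based agent would rate both routes the same, since both arrive; the utility function is what separates them.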

Utility based agent

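[Figure: utility based agent. Sensors feed "What the world is like now", which draws on State, "How the world evolves", and "What my actions do"; the agent predicts "What it will be like if I do action A", uses Utility to judge "How happy I will be in such a state", and decides "What action I should do now", which drives the Actuators acting on the Environment]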

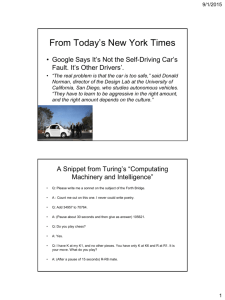

Learning Agents

• Agents can be programmed by hand
  • Turing (1950): "Some more expeditious method seems desirable".
• Implemented as learning agents

•

Learning

• Components
  • Learning Element
    • makes improvements
  • Performance Element = the whole agent we considered before
    • selects actions
  • Critic
    • provides feedback to the learning element
    • tells the agent how well it is doing according to a performance standard
  • Problem generator
    • In order for learning to take place, the agent must try out new things
    • A 4-year-old: "Did you know that peanut butter on salt crackers tastes horrible?"
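One way to see how the four components fit together is a tiny agent that learns which action scores best. This is only a sketch: it uses a simple explore-sometimes rule with running averages, a technique chosen here for brevity and not taken from the slides, and the snack scores in `critic` are made up.

```python
import random


class LearningAgent:
    def __init__(self, actions, explore_prob=0.1, rng=None):
        self.values = {a: 0.0 for a in actions}  # learned knowledge
        self.counts = {a: 0 for a in actions}
        self.explore_prob = explore_prob
        self.rng = rng or random.Random()

    def performance_element(self):
        """Select the action currently believed to be best."""
        return max(self.values, key=self.values.get)

    def problem_generator(self):
        """Suggest an exploratory action so that new things get tried."""
        return self.rng.choice(list(self.values))

    def choose(self):
        if self.rng.random() < self.explore_prob:
            return self.problem_generator()
        return self.performance_element()

    def learning_element(self, action, feedback):
        """Improve the estimate for action using the critic's feedback."""
        self.counts[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.counts[action]


def critic(action):
    """Hypothetical performance standard: a fixed score per snack."""
    return {"plain cracker": 1.0, "peanut butter cracker": -1.0}[action]


agent = LearningAgent(["plain cracker", "peanut butter cracker"])
for _ in range(100):
    a = agent.choose()
    agent.learning_element(a, critic(a))
print(agent.performance_element())  # plain cracker
```

The problem generator occasionally makes the agent try the peanut butter cracker; the critic's negative feedback then keeps the performance element from choosing it.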

Learning agent

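[Figure: learning agent. The Critic compares what the Sensors report against the Performance standard and sends feedback to the Learning element; the Learning element exchanges knowledge with the Performance element and makes changes to it, and passes learning goals to the Problem generator; the Performance element drives the Actuators acting on the Environment]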

States

[Figure: three ways to represent the states B and C: (a) Atomic, (b) Factored, (c) Structured]