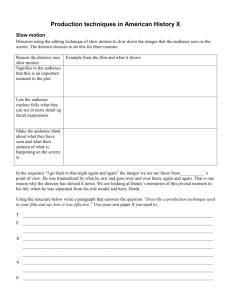

9 Smith - Birkbeck College
