Abstracting code specific concepts to a graphical representation by pattern matching and refactoring

David Flenstrup

Kongens Lyngby 2009

IMM-M.Sc.-2009-43

Technical University of Denmark

Department of Informatics and Mathematical Modeling

Richard Petersens Plads, DTU – Building 321

DK-2800 Kongens Lyngby, Denmark

Phone +45 45253351, Fax +45 45882673

reception@imm.dtu.dk

www.imm.dtu.dk

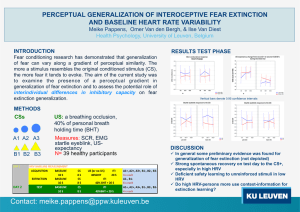

Abstract

This thesis deals with the automatic analysis of Microsoft Dynamics NAV (NAV) application code. NAV (formerly Navision) is an advanced Enterprise Resource Planning (ERP) system, like SAP and Axapta, which can handle anything from accounting to stock control for a company. NAV is customized to the individual enterprise using the application language C/AL. The NAV configuration application has grown incrementally from version to version for more than 20 years and has become a complex piece of software.

With the improvements that have emerged in languages and technologies, the NAV organization now faces a number of choices. It is clear that the application code has to be reorganized, but it is unclear whether the code should be kept in C/AL or moved to C#, because both choices offer obvious benefits.

The aim of this thesis is to contribute knowledge to this process by uncovering what exists today and by modeling some selected suggestions for refactoring the present C/AL code.

Our approach is to identify, analyze, and implement recognition of software patterns in the NAV application code. The software patterns we focus on define relations between objects.

The application code’s data model consists of table objects which, among other things, store all data for the enterprise. We use Unified Modeling Language (UML) diagrams to describe the relations we identify between tables. The concepts we identify are relationships which in UML terms are described as containment, aggregation, and generalization.

This gives the NAV organization insight into the implications a change in one table object will have on other objects. An example of one of the relations we identify is the relation between the Customer table, which keeps information on all the company’s customers, and the Customer Bank Account table, which contains information regarding the customers’ bank accounts. From our analysis we can see that the existence of objects of the type Customer Bank Account is conditioned by the existence of a Customer object. This is described with the UML concept of containment.

We show that it is possible to analyze the application code and extract concepts that provide an overview of selected relations. Furthermore, we show that a dynamic graphical display of the relations is preferable. We have developed a good, intuitive way to present our results, in which even very large diagrams can be made sense of with the filtering methods provided by the Concept Viewer tool.

During the project we extended its scope and examined the possibility of identifying specific concepts from the accounting ontology Resource, Events, and Agents (REA) in the C/AL code. We found that Events can easily be identified, but that the classification of Resources and Agents has some complications which require a refinement of the chosen approach.

Résumé

This thesis deals with automated analysis of the Microsoft Dynamics NAV (NAV) application code. NAV (formerly Navision) is an advanced Enterprise Resource Planning (ERP) system, similar to SAP and Axapta, that can handle everything from accounting to inventory management in a company. NAV is adapted to the individual company in the application language C/AL. The NAV configuration application has grown incrementally from version to version for more than 20 years and is today a highly complex piece of software.

As languages and technologies have generally improved, the NAV organization now faces a number of choices. It is clear that the application code must be reorganized. It is unclear, however, whether the code should be kept in C/AL or moved to C#, since both options have obvious advantages.

In this thesis we seek, through extensive code analysis, to contribute knowledge to this process by uncovering what exists today and by modeling some selected proposals for rewriting the code, based on the current C/AL code.

Our approach is to identify, analyze, and implement recognition of software patterns in the NAV application code. The software patterns we focus on express relations between objects.

The data model of the application code consists of table objects which, among other things, are used to store all data for the company. We use Unified Modeling Language (UML) diagrams to describe the relations we identify between tables. The concepts we identify are the relationship notions that in UML terms are described as containment, aggregation, and generalization.

This gives the NAV organization insight into the implications that a change in one table object will have on other objects. An example of one of the relations we identify is the relation between the Customer table, which contains information about all the company’s customers, and the Customer Bank Account table, which contains information about customers’ bank accounts.

From our analysis we can see that the existence of objects of the type Customer Bank Account is conditioned by the existence of a Customer object. This is described with the UML concept of containment.

We show that it is possible to analyze the application code and extract concepts that provide an overview of selected relations. In addition, we show that a dynamic graphical display of the relations is preferable. We have developed a good, intuitive way to present our results, in which even very large diagrams can be made sense of using the filtering methods offered by the Concept Viewer tool.

We extended the goals of the project along the way and examined the possibility of identifying specific concepts from the accounting ontology Resource, Events, and Agents (REA) from the C/AL code. We find that Events can easily be identified, but that the classification of Resources and Agents has some complications that require further development of the chosen approach.

Preface

This thesis was prepared in collaboration with the Department of Informatics and Mathematical Modeling (IMM) at the Technical University of Denmark (DTU) and the Microsoft Dynamics NAV Application Team (APP Team) at Microsoft Development Center Copenhagen (MDCC). It is submitted in fulfillment of the final requirement for the degree of Master of Engineering in Computer Science. The thesis is the result of work carried out from December 2008 to July 2009, with a workload of 35 ECTS credits.

Kongens Lyngby, July 2009

David Flenstrup

Acknowledgements

I would like to thank Microsoft, and especially Jesper Kiehn, for his commitment to my project. I am really happy with the subject we chose to investigate, and had it not been for Jesper’s passion and humongous insight into NAV and REA (and his power of persuasion), this topic could not have been covered.

From DTU I would like to thank Peter Falster and Jeppe Revall Frisvad. I am very grateful for the exceptional interest and support my project has been given. It has truly been a great experience and an invaluable resource for me.

Table of Contents

Abstract

Résumé

Preface

Acknowledgements

List of Figures

List of Tables

List of Formulas

List of Code Samples

1 Introduction

1.1 Background for the project

1.2 Project Aim

2 Enterprise Resource Planning Domain

2.1 Introduction to Enterprise Resource Planning (ERP) (3)

2.2 ERP Business Opportunities (10)

2.3 Microsoft Dynamics NAV (NAV) (11)

2.3.1 NAV architecture

2.3.2 Application Code (14) vs. Product Code

2.3.3 Application Language Transition

2.4 Fundamentals of the C/AL language

2.4.1 C/AL Design Criteria

2.4.2 C/AL Syntax

3 Related Work

3.1 What has been accomplished inside Microsoft

3.1.1 Partial C/AL parser

3.1.2 Codedub

3.1.3 Navision Developer Toolkit (25)

3.1.4 Object Map

3.2 What has not been accomplished inside Microsoft

3.3 What has been accomplished outside Microsoft

3.3.1 Refactoring from Code to UML

3.3.2 UML designers

3.3.3 Refactoring to Resource, Events and Agents (REA) (30)

3.4 What has not been accomplished outside Microsoft

3.5 Related work summary

4 Foundational Relations (33)

4.1 Definition of the Is_a relation

4.2 Definition of the Part_of relation

5 Unified Modeling Language (UML) (34)

5.1 UML Terminology Overview

5.1.1 Dependency

5.1.2 Generalization

5.1.3 Association

5.1.4 Aggregation

5.1.5 Containment

6 Analysis

6.1 Identifying Containment Pattern

6.1.1 The role of the Sales Header and Sales Line tables in NAV

6.1.2 Manual analysis of Containment pattern

6.1.3 Variations in containment pattern

6.1.4 Manual identification of additional containments

6.2 Identifying Generalization Pattern

6.2.1 Code smell Large Class and solution Extracting Class

6.2.2 Manual analysis of the Generalization pattern

6.2.3 Manual analysis of the generalization relationship from Sales Line

6.3 Identifying REA Concepts

6.3.1 The Resource, Event and Agent (REA) model (40)

6.3.2 Introduction to Accounting Theory

6.3.3 Naming of tables in NAV

6.3.4 REA concepts in NAV

6.3.5 Manual analysis of REA Events in NAV

7 Tools

7.1 .NET Framework

7.2 Interoperability

7.3 F#

7.3.1 Language syntax

7.4 LEX, YACC and Abstract Syntax Trees (54)

7.5 C#

7.6 Regular expressions (58), (59)

7.7 Lambda expressions (60), (61)

7.8 LINQ (63) (64)

7.8.1 Example 1: Without LINQ

7.8.2 Example 2: With LINQ

8 Implementation

8.1 Problem with Parser

8.1.1 CALParser AST to XML AST

8.2 New data representation

8.3 CAL parser extension for table relations

8.3.1 Generic parser vs. specific parsing rules

8.3.2 Parser Implementation

8.4 Parsing rules (68)

8.5 LINQ for Querying

8.6 Lambda Expressions in action

8.7 Graph generation

8.7.1 Microsoft Automatic Graph Layout (69)

8.8 Performance boosts

8.9 Algorithm design

8.9.1 Containment

8.9.2 Inheritance

8.9.3 Inheritance – Reusing generalization objects

8.9.4 Implementation of REA identification

8.9.5 Limitations by approach and solution suggestion

8.9.6 Analysis of initial REA results

9 Results

9.1 TableRelation Analysis and TableRelation Parser Quality Assurance

9.1.1 Matches on Key fields

9.1.2 Matches on all fields

9.2 Results for Containment Pattern

9.3 Results for Generalization Pattern

9.4 Results from refactoring the generalization objects

9.5 The Concept Viewer and its output

9.5.1 Sales Header and Sales Line – Aggregations and Containments

9.5.2 Sales Header and Sales Line – Associations

9.5.3 Sales Header and Sales Line – Associations refactored via Generalization objects

9.5.4 Sales Header and Sales Line – Associations refactored via refactored Generalization objects

9.5.5 Sales Line, Standard Sales Line, and Sales Line Archive – Reused Generalization objects explored

9.5.6 Sales Line, Standard Sales Line, and Sales Line Archive – Generalization objects explored

9.5.7 Sales Line, Standard Sales Line and Sales Line Archive – Associations explored

9.5.8 Item – Containments and Aggregations mapped with reused Generalization objects

9.5.9 Item – Reused Generalization objects containing Item

9.6 Feedback from the Application team

10 Conclusion

10.1 Parsing the C/AL application

10.2 UML Relationship pattern matching

10.2.1 Generalization

10.2.2 Containment

10.3 The Concept Viewer

10.4 Resource, Events and Agents (REA) relationship pattern matching

11 Future work and Perspective

12 Abbreviations

13 Works Cited

14 Appendix

14.1 Content on the enclosed DVD

List of Figures

Figure 2-1 Integration Data Flow (5)

Figure 2-2 ERP process flow (5)

Figure 2-3 Home page of an Order Processor in Microsoft Dynamics NAV 2009 (Role Tailored Client)

Figure 2-4 Current C/AL Compilation for new and old product stack

Figure 3-1 UML diagram manually created with Visio

Figure 3-2 UML diagram generated from code with MagicDraw

Figure 5-1 UML mapping of a dependency

Figure 5-2 UML mapping of a generalization

Figure 5-3 UML mapping of an association

Figure 5-4 UML mapping of an aggregation

Figure 5-5 UML mapping of a containment

Figure 6-1 Overview of all Sales Orders in the Role Tailored Client

Figure 6-2 New Sales Order in the Role Tailored Client

Figure 6-3 Viewed as an OO UML diagram

Figure 6-4 Viewed as a database diagram with primary keys (PK) and foreign keys (FK)

Figure 6-5 Table field association

Figure 6-6 Multiple associations refactored to a single association

Figure 6-7 Selection box with elements

Figure 6-8 Property window displaying the OptionString of the field Type

Figure 6-9 Candidates for Generalization Refactoring

Figure 6-10 Refactoring multiple associations to single associations

Figure 6-11 The cookie company (42)

Figure 7-1 The structure of an abstract syntax tree

Figure 8-1 Arrow heads for Containment, Aggregation and Generalization

Figure 8-2 Illustrating procedure references between CU12 and CU13

Figure 9-1 Template, Batch, Line pattern found in the initial Containment work

Figure 9-2 Template, Batch, Line pattern in the Concept Viewer

Figure 9-3 The Concept Viewer

Figure 9-4 Sales Header and Sales Line – Aggregations and Containments

Figure 9-5 Sales Header and Sales Line Associations

Figure 9-6 Sales Header and Sales Line Generalizations

Figure 9-7 Sales Header and Sales Line Generalizations refactored

Figure 9-8 Sales Line, Standard Sales Line, and Sales Line Archive reused Generalization objects explored

Figure 9-9 Sales Line, Standard Sales Line, and Sales Line Archive Generalization objects explored

Figure 9-10 Sales Line, Standard Sales Line, and Sales Line Archive – Associations explored

Figure 9-11 Sales Line, Standard Sales Line and Sales Line Archive – Associations explored

Figure 9-12 Item – Reused Generalization objects containing Item

List of Tables

Table 2-1 NAV Customer segment

Table 6-1 Sales Header and Sales Line Containment

Table 6-2 Sales Header Containment in detail

Table 6-3 Purchase Header and Purchase Line Containment

Table 6-4 Profile Questionnaire Header and Profile Questionnaire Line Aggregation

Table 6-5 Generalizations in Sales Line

Table 6-6 Color definition for Figure 6-9

Table 6-7 The double-entry accounting system

Table 6-8 Table naming in NAV

Table 6-9 Code for posting Journals to Entries

Table 7-1 Sync vs. Async execution

Table 8-1 REA results from step 1

Table 8-2 REA candidate sets

Table 9-1 Key fields

Table 9-2 Unique key fields

Table 9-3 All fields

Table 9-4 All unique fields

List of Formulas

Formula 4-1 is_a

Formula 4-2 part_for

Formula 4-3 has_part

Formula 4-4 part_of


List of Code Samples

Code 2-1 Table 3 Payment Terms

Code 6-1 Requirements for Generalizations

Code 6-2 Requirements for Generalizations

Code 7-1 Example with complex numbers – Definition of active patterns

Code 7-2 Example with complex numbers – Add function

Code 7-3 Example with complex numbers – Multiply functions

Code 7-4 Example with Sync and Async execution - Synchronous function

Code 7-5 Example with Sync and Async execution - Asynchronous function

Code 7-6 C/AL variable assignment

Code 7-7 API description of the First method

Code 7-8 Example with lambda expression

Code 7-9 Example without lambda expression

Code 7-10 Example without LINQ

Code 7-11 Example with LINQ

Code 8-1 C/AL IF statement

Code 8-2 Segment from our abstract syntax tree XML representation

Code 8-3 TableRelation matching Expression2

Code 8-4 Appendix DVD, file \Code\RegularExpressionParser\RegularExpressionParser\CALRegularExpressions.fs

Code 8-5 TableRelation matching Expression3

Code 8-6 Appendix DVD, file \Code\RegularExpressionParser\RegularExpressionParser\CALRegularExpressions.fs

Code 8-7 LINQ Example1 - Select record variables with number equals var

Code 8-8 Querying the abstract syntax tree

Code 8-9 Querying the abstract syntax tree

Code 8-10 LINQ Example2 – Select all statements

Code 8-11 LINQ Example3 – Select all Exp1 variables with ID equals var

Code 8-12 Appendix DVD, file \Code\MatchingInLinq\IdentifyInheritance.cs, method RefactorInheritance

Code 8-13 Appendix DVD, file \Code\MatchingInLinq\IdentifyREAConcepts.cs, method FindProcedureReferences

Code 8-14 Appendix DVD, file \Code\MatchingInLinq\IdentifyREAConcepts.cs, method Call

Code 8-15 Appendix DVD, file \Code\MatchingInLinq\ParseRemaningElements.cs, method ParseElementsToXML

Code 8-16 Pseudo code for the Containment algorithm

Code 8-17 Pseudo code for Generalization algorithm

Code 8-18 Pseudo code for refactoring Generalization objects

Code 9-1 Special case of TableRelation ignored in Containment analysis

Code 9-2 Special case of TableRelation ignored in Generalization analysis

Code 9-3 Special case of TableRelation dependent on YES/NO values ignored in Generalization analysis

Chapter 1

1 Introduction

1.1 Background for the project

The overall scope for projects sponsored by the NAV Application Team is to find the primitives for the best path towards the next generation NAV application. This is an interesting task for a number of reasons that we cover later. The application team is highly motivated to find the best steps towards a new application design, and student projects are one type of contribution used to uncover and analyze this challenge.

The application has grown incrementally from version to version. The long-term goal is to refactor the application code, but the first steps are to analyze the available code and provide knowledge on how the application is tied together. Due to the way the application has been developed (see section 2.3.2), no clear overview of the application exists in the form of Unified Modeling Language (UML) models or similar (see section 12 for a list of all abbreviations). Such models are necessary because the code is too complex for developers to comprehend, and the learning curve for new developers is very steep.

One of the key drivers for a redesign is to reduce the code base. J. Kiehn stated that the code base has grown by a factor of 10 since Navision v. 1. This is of course an exaggeration, but the application code base now counts more than 2.4 million lines of code, which indicates the complexity of the application.

Earlier work (1) has shown that the number of dependencies in the code base is high, which is confirmed by J. Kiehn. Dependencies increase the complexity of code, and complex code is more prone to contain bugs and costs more man-hours to maintain. These factors are important and need to be addressed, and the NAV team is aware of the potential problems.

1.2 Project Aim

This thesis aims to contribute to a solution to the above problem by uncovering what exists today and by modeling some chosen suggestions for refactoring the present C/AL code.

Our approach is to identify, analyze, and implement recognition of software patterns in the NAV application code. The software patterns we focus on define foundational relations (see section 4) between objects. We use the Unified Modeling Language (UML) (2) terminology to describe the identified relations. The relations we focus on identifying are the concepts containment and generalization, introduced in section 5.

The project aim was extended during the project. The additional goal was to examine the possibility of identifying specific concepts from the accounting ontology Resources, Events, and Agents (REA), see section 3.3.3, in the C/AL code. The benefit of such an approach is that REA offers domain-specific knowledge, as opposed to the general domain knowledge provided by UML.


Chapter 2

2 Enterprise Resource Planning Domain

The following section is provided to give an introduction to Enterprise Resource Planning (ERP) solutions in

general. Following this is a more specific introduction to the fundamentals of the Microsoft Dynamics NAV

system and the C/AL language.

2.1 Introduction to Enterprise Resource Planning (ERP) (3)

Today, ERP systems are an invaluable tool for most companies with more than a few employees. An ERP system is a tool for running an enterprise, and it is the backbone of the organization for providing data that can be used in decision making (4). In the past, every department of a company made decisions independently of the others. ERP provides a platform for collaboration and a common ground for decision making.

Figure 2-1 Integration Data Flow (5) (diagram labels: Purchasing, Marketing and Sales, Accounting and Finance, Information, Manufacturing, Human Resources, Inventory)

Figure 2-1 illustrates how ERP systems are based on storing all information in one central database, enabling all departments in the ERP solution to work with the same data. (6)

Common modules in an ERP system are:

Financial Management (FM)

Customer Relationship Management (CRM)

Supply Chain Management (SCM)

Business Intelligence (BI)

The most common application of an ERP system is the automation of business processes. One of the most important chains of business processes for a manufacturing company is the sale of the manufactured goods (7). Figure 2-2 illustrates how an arbitrary ERP system would support this scenario. The Sales, Warehouse, Accounting, and Receiving departments work together to handle the entire flow, from sale and shipping to receiving payment for the goods. They do so by sharing the central information database and by forwarding the order fulfillment to the department responsible for the next step in the process.


Figure 2-2 ERP process flow (5) (diagram labels: Warehouse, Sales, Sales quote, Pack and ship, Sales order, Information, Receiving, Returns, Accounting, Payment, Billing)

One of the key drivers for implementing an ERP system is that it can often replace the mess of different applications that emerge in a company over time (8). It thereby streamlines and simplifies the use of software within the company and, as a result, allows the organization to do better with the same, or even a smaller, amount of money.

Another aspect is the possibilities that emerge from having all the organization's information stored centrally. This makes it possible to derive important byproducts which, for instance, could enable the organization to forecast production schedules during a holiday period, based on expected order income. This would allow management to adjust the available workforce to make sure that production can cope with the demand from incoming orders.

Extensibility is also an important attribute of an ERP system. To fully qualify as an ERP system, it is expected to offer more than "just" a comprehensive integration of various organizational processes (9). ERP systems should thus strive to be:

Flexible – Systems should be able to grow with the organization.

Modular and open – Systems should offer open interfaces allowing easy interoperability and extensibility with third-party add-on components.

Beyond the company – Systems should support integration with customers, partners and vendors, because many business processes require interaction with actors outside the organization.

2.2 ERP Business Opportunities (10)

There is a large market for ERP software, and many ERP suppliers. SAP has published a report from AMR Research listing the estimated revenues in 2006 for the 17 most dominant ERP vendors. The report estimates the total revenue from ERP software at $28.8 billion, with revenue growth of 14 % from 2005. In comparison, the Danish gross domestic product (GDP) in 2006 was $202.9 billion, which means that the revenue from ERP software corresponds to roughly 1/7 of the entire GDP of Denmark, underlining that the ERP market is of great importance.
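As a quick check of this comparison, using only the two figures quoted above (both in billions of US dollars):

```latex
\[
\frac{\text{ERP software revenue (2006)}}{\text{Danish GDP (2006)}}
  = \frac{28.8}{202.9} \approx 0.142 \approx \frac{1}{7}
\]
```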

The AMR report lists SAP as the number one ERP supplier, with revenues nearly double those of number two on the list.

During the last 10 years the market for ERP software has consolidated. Oracle acquired PeopleSoft (2004), Navision acquired Axapta (2000) and Microsoft acquired Navision (2002) and Great Plains (2000), amongst others. The five most dominant ERP vendors in 2006 were SAP, Oracle, Infor, Sage Group, and Microsoft.

2.3 Microsoft Dynamics NAV (NAV) (11)

The ERP product we focus on in this thesis is, as previously stated, Microsoft Dynamics NAV. The following section introduces the NAV user segment, product, and architecture. Furthermore, we describe the difference between product code and application code in NAV, which is essential in order to understand this project.

Microsoft has focused on gaining a position in the global ERP market described in the previous section. The Microsoft Dynamics product group was kick-started by acquisitions of successful ERP vendors in the early 2000s, and this product group has been developed continuously to gain market share. Microsoft Dynamics NAV is one of these products. NAV focuses on the Mid-Market+ segment of the ERP market (defined as companies with 1-5000 employees), leaving the enterprise market to other ERP products.

The company size definitions used are the following:

Enterprise: 5000+ employees

Corporate Account Segment (CAS): 1000-5000 employees

Midmarket: 50-1000 employees

Small Business: 1-49 employees

Table 2-1 NAV Customer segment

The following numbers are provided to give an idea of the forces driving NAV. These numbers are from the beginning of 2008 (12):

> 65.000 Customers (companies that have bought NAV)

> 3.300 certified partners (IT professionals working with sale and customization of NAV)

> 1.800 add-on solutions (products offered for specific needs by partners)

> 40 localized versions (supporting local languages and date formats)

> 1.000.000 licensed users (employees in customer companies using NAV)

Microsoft Dynamics NAV was originally created by three college friends, J. Balser, T. Wind and P. Bang, from the Technical University of Denmark (DTU), under the product name Navigator and later Navision.

NAV is currently in version 6 (project Corsica). It has been developed in Vedbæk since 1984 and has grown incrementally from version to version, becoming a very elaborate ERP system. The latest addition to the product is the Role Tailored Client (RTC), offering customized user profiles. This enables every user role in the system to have a personalized User Interface (UI) focused on the tasks the role performs, making it easier for employees to understand and interact with the system. Figure 2-3 shows the home page for an Order Processor. The Order Processor is the role in a company that is responsible for shipping incoming orders to customers and putting customer returns back in stock. The Order Processor is one of 21 role centers that ship with NAV out of the box, and partners can add more to suit individual customer needs.

Figure 2-3 Home page of an Order Processor in Microsoft Dynamics NAV 2009 (Role Tailored Client)


2.3.1 NAV architecture

NAV supports three architectures: one- and two-tier for legacy purposes, and the new three-tier architecture that offers new features and better scalability. In time, the one- and two-tier architectures are going to be discontinued.

One-tier setups consist of the C/SIDE client simply using a file as database (not shown in figure).

Two-tier setups consist of the C/SIDE client and a database (either Microsoft SQL or the NAV Database Server (legacy product)).

Three-tier setups follow the Model View Control pattern (13):

o Role Tailored Client (RTC) for users (View)

o Application Service Tier (AST) (Control)

o Microsoft SQL or a NAV Database Server (Model)

Furthermore, the C/SIDE client is also part of the three-tier setups and is mostly used for development.

2-1 Two-tier architecture (diagram labels: C/SIDE Client; Microsoft SQL Server or NAV Cserver Database). 2-2 Three-tier architecture (diagram labels: Role Tailored Client; C/SIDE Client; Application Service Tier; Microsoft SQL Server or NAV Cserver Database).

The Role Tailored Client runs only on the three-tier setup and does not, for now, support development. The C/SIDE client is the old client that is still used for development, but with time, the plan is to provide a new development environment and discontinue the old C/SIDE client.

2.3.2 Application Code (14) VS. Product Code

When we describe NAV, it is important to note the difference between product code and application code. New product code is written in C#, and legacy product code is written primarily in C++ (15). Application code is, and has always been, written in C/AL (often abbreviated AL). The NAV product is the platform that hosts the NAV ERP application. The product in turn runs on a platform of its own, namely Windows.

Application code is, as stated above, written in the NAV-specific language C/AL, and the application implementation is the actual code forming the ERP solution. The application is hosted inside the product and can be custom tailored to support specific customer needs.

According to Jesper Kiehn, the application codebase has grown considerably from version to version. One

reason for this is the sales channel for NAV. In general, Microsoft does not sell NAV directly to customers.

The sale is done via a partner that gets the customer up and running with NAV. As mentioned above, more

than 1.800 add-on products exist, and a number of add-on products have propagated back into the product

over time to fulfill general customer requests. This has been done by buying the add-on from the partner

and merging their code into the shipping application. As described before, the NAV system has been

developed continuously over the last 25 years. The result is an application of more than 2.4 million lines of

C/AL code.


The NAV ERP application is divided into 192 granules. A granule is a pack of Pages, Forms, Codeunits, and Tables (introduced in section 2.4.2) that together add a feature to the ERP application. One example is the Commerce Gateway granule, which allows the NAV ERP system to interact via Commerce Gateway and adds support for business-to-business transactions, such as the electronic exchange of sales documents. The NAV system is designed to grow with the company, allowing the company to extend its use of the system incrementally. This is in line with the theory for implementing ERP software in companies. In general, it is advised to implement ERP systems incrementally in a company, increasing the scope along the way. Furthermore, it is advised to design the system so that it is able to handle future company growth (16).

As described above, this can easily be achieved by introducing new granules in the implemented NAV ERP. In fact, the granules are already installed in the customer's NAV. If the customer's system runs on the standard application, the customer just has to pay for an extension of the license to get the appropriate access rights.

2.3.3 Application Language Transition

As described in section 2.3.2, the configuration of NAV is done with C/AL. Until NAV version 6.0, C/AL was compiled solely to a NAV-specific binary format, but the new three-tier architecture described in section 2.3.1, supported by the Role Tailored Client and the Application Service Tier, runs directly on C# code. The transformation from C/AL to C# is done using a token parser. The generated C# code is fully functional, but it is not easily readable. The C# code is then compiled to Microsoft Intermediate Language (17) (MSIL), allowing the binary (CLR) application code to run on any platform supported by the .NET framework.

Large parts of the product were designed to run with the NAV specific binary format, and still do. Therefore, the product is in a transition phase where it needs both the C/AL and the C# representation.

Figure 2-4 Current C/AL Compilation for new and old product stack (diagram labels: C/AL; Compiled to NAV specific binary format; Transformed to C#; Old Product Platform (C/SIDE) (C++ Code); New Product Platform (RTC, AST) (C# Code); Product Interaction)

A NAV-specific object model has been created in C#, which enables application code to be written in C#, but the product will need both the C/AL representation and the C# representation. At this point, only parsing from C/AL to C# is possible, not the other way around. This causes some inconvenience when debugging application code on the new product stack (three-tier). When debugging the application code on either the Role Tailored Client or the Application Service Tier, debugging can only be done in the generated C# code. This is far from ideal because there is no direct link between the generated C# code and the C/AL code. An issue identified in the generated C# application code has to be fixed in C/AL from the C/SIDE client.


2.4 Fundamentals of the C/AL language

The code we analyze throughout this project is written in the language C/AL. This section is provided to give

an introduction to the design criteria and syntax for C/AL.

C/AL is not an object-oriented language because only eight predefined, non-extendable object types can be used. This implicitly means that C/AL does not support concepts such as inheritance. The language is strongly typed, and variable types are not inferred, so they have to be declared explicitly. C/AL is a procedural language in the family of imperative programming languages. Imperative languages specify the individual steps of a computation (how we want it), in contrast to declarative languages, which specify what the program should do (what we want) (18).
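The distinction between the two styles can be illustrated with a small sketch. It is written in Python rather than C/AL, and the data (a list of discount percentages) is made up for illustration. It only contrasts the two styles and says nothing about how NAV code is actually written.

```python
# Hypothetical sample data: discount percentages from some payment terms.
discounts = [0.0, 2.0, 5.0, 0.0, 1.5]


def count_nonzero_imperative(values):
    """Imperative style: spell out each step of the computation."""
    count = 0
    for value in values:
        if value > 0:
            count = count + 1
    return count


def count_nonzero_declarative(values):
    """Declarative style: describe the wanted result, not the individual steps."""
    return sum(1 for value in values if value > 0)


print(count_nonzero_imperative(discounts))
print(count_nonzero_declarative(discounts))
```

Both functions compute the same number. The first prescribes how the counter is updated, while the second only states which elements should be counted.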

2.4.1 C/AL Design Criteria

Michael Nielsen (Director of Development in NAV) has a long history with NAV and was one of the original designers of the language. M. Nielsen stated that the design criteria (19) for C/AL were to provide an environment that could be used without:

Dealing with memory and other resource handling

Thinking about exception handling and state

Thinking about database transactions and rollbacks

Knowing about set operations (SQL)

Knowing about OLAP(20) and SIFT(21)

2.4.2 C/AL Syntax

The C/AL syntax is heavily inspired by Pascal, but the language is simpler. The full language reference can be

found on MSDN (22). The C/AL language provides a limited set of predefined object types, which also help

to reduce the complexity.

Overall, the general design goal has been to provide a flexible language that enables developers to quickly become familiar with developing for NAV.

C/AL has eight kinds of objects: Tables, Forms, Reports, Dataports, XMLports, Codeunits, MenuSuites and Pages.

Pages are Forms (Graphical User Interface) for the Role Tailored Client. Forms and MenuSuite objects will probably be discontinued as the old C/SIDE client is phased out.

Dataports and XMLports are objects to setup import and export of data via text files or web

services.

Report is an object type to define Business Intelligence (BI) reports.

We will not spend more time with the above object types. They contain no information we need in our

further analysis. All relevant information in regards to our analysis is placed in Tables and Codeunits. The

following section will give an introduction to these two object types.


2.4.2.1 Tables

Tables are data containers and represent the foundation of the application. Tables express data and logic in

the form of triggers. Tables contain two kinds of triggers:

Trigger Events are activated on specific actions. One Trigger Event is the OnDelete trigger that is

activated every time a row is deleted in the table.

Trigger Functions are defined by developers and can be activated on any instance of the table.
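The difference between the two kinds of triggers can be sketched as follows. This is a Python illustration, not C/AL, loosely modeled on the Payment Terms table shown in Code 2-1. The class names, method names, and in-memory dictionaries are assumptions made for the example and are not part of NAV.

```python
class PaymentTermsTranslation:
    """In-memory stand-in for a translation table, keyed by (payment term, language)."""

    def __init__(self):
        self.rows = {}

    def delete_all_for(self, payment_term):
        # Rough equivalent of SETRANGE("Payment Term", Code) followed by DELETEALL.
        self.rows = {key: text for key, text in self.rows.items() if key[0] != payment_term}


class PaymentTerms:
    """In-memory stand-in for the Payment Terms table, keyed by Code."""

    def __init__(self, translations):
        self.rows = {}
        self.translations = translations

    def on_delete(self, code):
        # Trigger Event: runs automatically whenever a row is deleted.
        self.translations.delete_all_for(code)

    def delete(self, code):
        self.on_delete(code)
        del self.rows[code]

    def translate_description(self, code, language):
        # Trigger Function: runs only when a developer calls it explicitly.
        return self.translations.rows.get((code, language), self.rows[code])


translations = PaymentTermsTranslation()
terms = PaymentTerms(translations)
terms.rows["14D"] = "14 days"
terms.rows["30D"] = "30 days"
translations.rows[("14D", "DAN")] = "14 dage"
translations.rows[("30D", "DAN")] = "30 dage"

print(terms.translate_description("14D", "DAN"))
terms.delete("14D")  # fires on_delete, which removes the 14D translations
print(sorted(translations.rows))
```

The caller never invokes `on_delete` directly; it runs as a side effect of `delete`, whereas `translate_description` only runs when it is called.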

An example of the structure of a table can be seen in Code 2-1. This table is the first table in the

application, and is also one of the shortest. We will briefly describe each component of the table:

OBJECT-PROPERTIES contains metadata for the table, such as the creation date.

PROPERTIES contains general properties stored on the table, as well as Trigger Events. This table only contains a definition of the OnDelete trigger.

FIELDS contains the definitions of the fields in the table.

KEYS contains a list of the fields forming the table's primary key. In this case, the field Code is the primary key.

FIELDGROUPS contains a list of prioritized fields used by the Role Tailored Client.

CODE contains the defined Trigger Functions. Every Trigger Function is defined as a procedure.


    OBJECT Table 3 Payment Terms
    {
      OBJECT-PROPERTIES
      {
        Date=05-11-08;
        Time=12:00:00;
        Version List=NAVW16.00;
      }
      PROPERTIES
      {
        DataCaptionFields=Code,Description;
        OnDelete=VAR
                   PaymentTermsTranslation@1000 : Record 462;
                 BEGIN
                   WITH PaymentTermsTranslation DO BEGIN
                     SETRANGE("Payment Term",Code);
                     DELETEALL
                   END;
                 END;
        CaptionML=ENU=Payment Terms;
        LookupFormID=Form4;
      }
      FIELDS
      {
        { 1;;Code;Code10;CaptionML=ENU=Code; NotBlank=Yes }
        { 2;;Due Date Calculation;DateFormula;CaptionML=ENU=Due Date Calculation }
        { 3;;Discount Date Calculation;DateFormula;
          CaptionML=ENU=Discount Date Calculation }
        { 4;;Discount %;Decimal;CaptionML=ENU=Discount %;
          DecimalPlaces=0:5; MinValue=0; MaxValue=100 }
      }
      KEYS
      {
        { ;Code;Clustered=Yes }
      }
      FIELDGROUPS
      {
        { 1;DropDown;Code,Description,Due Date Calculation }
      }
      CODE
      {
        PROCEDURE TranslateDescription@1(VAR PaymentTerms@1000 : Record 3;Language@1001 : Code[10]);
        VAR
          PaymentTermsTranslation@1002 : Record 462;
        BEGIN
          IF PaymentTermsTranslation.GET(PaymentTerms.Code,Language) THEN
            PaymentTerms.Description := PaymentTermsTranslation.Description;
        END;

        BEGIN
        END.
      }
    }

Code 2-1 Table 3 Payment Terms
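To make the component structure concrete, the following Python sketch splits an exported table object into its top-level components by counting braces. It is only an illustration under simplifying assumptions: it is not the CALParser discussed later, the function name `split_sections` is made up, and braces occurring inside string literals or comments would confuse it. The sample text is an abbreviated version of Code 2-1.

```python
SECTION_NAMES = {"OBJECT-PROPERTIES", "PROPERTIES", "FIELDS", "KEYS", "FIELDGROUPS", "CODE"}


def split_sections(object_text):
    """Return {component name: list of body lines} for one exported object (naive sketch)."""
    sections = {}
    depth = 0        # current brace nesting level
    current = None   # name of the component being collected, if any
    for raw_line in object_text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if current is None:
            if depth == 1 and line in SECTION_NAMES:
                current = line
                sections[current] = []
            else:
                depth += line.count("{") - line.count("}")
            continue
        new_depth = depth + line.count("{") - line.count("}")
        # Keep only lines strictly inside the component's own braces.
        if depth >= 2 and new_depth >= 2:
            sections[current].append(line)
        depth = new_depth
        if depth <= 1:
            current = None  # the component's closing brace was reached
    return sections


SAMPLE = """\
OBJECT Table 3 Payment Terms
{
  OBJECT-PROPERTIES
  {
    Date=05-11-08;
    Time=12:00:00;
    Version List=NAVW16.00;
  }
  FIELDS
  {
    { 1;;Code;Code10;CaptionML=ENU=Code; NotBlank=Yes }
    { 2;;Due Date Calculation;DateFormula;CaptionML=ENU=Due Date Calculation }
  }
  KEYS
  {
    { ;Code;Clustered=Yes }
  }
  CODE
  {
    BEGIN
    END.
  }
}
"""

sections = split_sections(SAMPLE)
for name, body in sections.items():
    print(name, len(body))
```

Counting braces keeps the sketch short, at the cost of robustness. A grammar-based parser is needed for real application code.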


2.4.2.2 Codeunits

Codeunits act only as a container for functions. The standard function libraries are stored in Codeunits and

consist of utility routines that serve a general purpose in NAV.

User-defined Codeunits can contain user-defined functions. Codeunits can advantageously be used to:

Reduce the code in a table. Code can be extracted and stored in a Codeunit procedure.

Hold code of a more general nature. If code influences more than one object, it is more correct to store it in a Codeunit than in a table.

The structure of Codeunits is identical to the structure of Tables presented above with the only exception

being that Codeunits only contain the components OBJECT-PROPERTIES, PROPERTIES and CODE.

Chapter 3

3 Related Work

The following section describes prior work and accomplishments relevant to our project. The section is divided into two main parts. The first part covers related work done within Microsoft, and the Microsoft Dynamics NAV organization in particular. In the second part, we widen our scope and look at what has been accomplished elsewhere.

3.1 What has been accomplished inside Microsoft

The following section describes the achievements from former student projects and projects carried out by

Microsoft internally, that we know of. Elements of the previous work described later in this section have

worked as a great starting point for this project.

3.1.1 Partial C/AL parser

The core theme of this project relies on the ability to work with the application code in an abstract

queryable representation, see section 1.

A parser (CALParser) was developed in a former student project carried out by T. Hvitved in 2008 (1). The parser was developed to analyze the application and examine whether the code could be modularized to reduce the number of dependencies in the code. The complete code for this project can be found on the appendix DVD in the folder \Code\Work From T. Hvitved\oo parser\.

CALParser is implemented in F# and uses the F# variant of the tools LEX (23) and YACC (24) to define the

parsing rules. The mentioned technologies are described in section 7.3.

The parser is of high quality, but unfortunately it is a complex piece of software that requires more work to

be complete.

From working with the parser we have found the following issues that we need to solve:

The parser is not able to parse the actual application code. Preprocessing that removes multiline comments is necessary for the parser to work with the actual application code.

The parser does not parse to the level of detail we need. Table keys, table fields, and field properties such as OptionString, TableRelationString and other options are handled as strings and require subparsing.

The parser is implemented with F#'s immutable data types. This is a problem because we cannot modify the data that needs subparsing.
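The kind of subparsing mentioned above can be illustrated on a field entry like those in Code 2-1. The following Python sketch is not part of CALParser. The function name and the dictionary layout are assumptions, the second slot of the entry is empty in the sample and is simply kept as `flag`, and the sketch assumes that no property value contains a semicolon.

```python
def parse_field_entry(entry):
    """Parse one FIELDS entry such as `{ 1;;Code;Code10;Prop=Value }` into a dict.

    Naive sketch: splits on every semicolon, so property values that
    themselves contain semicolons are not supported.
    """
    inner = entry.strip()
    if not (inner.startswith("{") and inner.endswith("}")):
        raise ValueError("expected an entry wrapped in braces")
    parts = [part.strip() for part in inner[1:-1].split(";")]
    field_id, flag, name, data_type = parts[:4]
    properties = {}
    for prop in parts[4:]:
        if not prop:
            continue
        key, _, value = prop.partition("=")  # split on the first '=' only
        properties[key.strip()] = value.strip()
    return {
        "id": int(field_id),
        "flag": flag,
        "name": name,
        "type": data_type,
        "properties": properties,
    }


field = parse_field_entry(
    "{ 4;;Discount %;Decimal;CaptionML=ENU=Discount %; "
    "DecimalPlaces=0:5; MinValue=0; MaxValue=100 }"
)
print(field["name"], field["type"], field["properties"])
```

Splitting on the first `=` only matters for properties such as CaptionML, whose value (`ENU=Discount %`) contains an equals sign of its own.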

We had a meeting with Tom Hvitved (PhD student at DIKU), who wrote the parser. From his introduction we learned that the intention with the parser was to implement enough detail to support his thesis, not to develop a complete C/AL parser. Taking this information into consideration, and from our initial work with the parser, we know that we will be able to use the CALParser, but not as-is.

3.1.2 Codedub

Codedub is a project carried out in collaboration between J. Kiehn (MDCC) and a Master's thesis student, Till Blume (IT University of Copenhagen). The focus of that thesis is to find candidates for refactoring. In short, due to the lack of support for inheritance in C/AL, many code blocks have been copied from one place to another. Code duplicates can be identified by comparing hash values of code blocks. The project deadline is June 2009.
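The general idea of finding duplicates by comparing hash values can be sketched as follows. This Python example is not the Codedub implementation, whose details are not described here. The window size, the normalization (collapsing whitespace and ignoring case), the use of SHA-1, and the second source named "Table X (hypothetical)" are all assumptions made for illustration.

```python
import hashlib
from collections import defaultdict


def normalize(line):
    """Collapse whitespace and case so trivially different copies hash alike."""
    return " ".join(line.split()).upper()


def find_duplicate_blocks(sources, window=3):
    """Map each repeated block hash to the (source name, start line) pairs where it occurs.

    `sources` maps a name to a list of code lines. Every run of `window`
    consecutive non-empty lines counts as one block.
    """
    seen = defaultdict(list)
    for name, lines in sources.items():
        cleaned = [
            (number, normalize(text))
            for number, text in enumerate(lines, start=1)
            if text.strip()
        ]
        for index in range(len(cleaned) - window + 1):
            block = cleaned[index:index + window]
            joined = "\n".join(text for _, text in block)
            digest = hashlib.sha1(joined.encode("utf-8")).hexdigest()
            seen[digest].append((name, block[0][0]))
    return {digest: places for digest, places in seen.items() if len(places) > 1}


sources = {
    "Table 3": [
        "WITH PaymentTermsTranslation DO BEGIN",
        '  SETRANGE("Payment Term",Code);',
        "  DELETEALL",
        "END;",
    ],
    "Table X (hypothetical)": [
        "IF Confirmed THEN",
        "WITH PaymentTermsTranslation DO BEGIN",
        'SETRANGE("Payment Term",Code);',
        "DELETEALL",
        "END;",
    ],
}

duplicates = find_duplicate_blocks(sources)
for places in duplicates.values():
    print(places)
```

Hashing fixed-size windows finds exact copies after normalization. Copies with renamed variables or reordered statements would need a more tolerant comparison.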

3.1.3 Navision Developer Toolkit (25)

The Navision Developer Toolkit (NDT) is a legacy tool for partners and internal users. The tool can assist in upgrade operations and can display a number of details about the code. The NDT is not meant as a development environment in the same sense as Visual Studio or Eclipse, but rather as an analysis tool. According to J. Kiehn, the tool has had limited success and is probably going to be discontinued. Partners and developers prefer to use text comparison and search tools, such as Beyond Compare (26), in their analysis of AL code.

3.1.4 Object Map

Object Map is an internal tool for displaying identified relations. The tool is a generic viewer of relations,

which are identified from an XML file. The XML file is produced with the Navision Developer Toolkit (NDT)

and it contains relations between Tables, Forms, Codeunits and Pages. The Navision Developer Toolkit

does, however, not support methods to determine the nature of a relation.

3.2 What has not been accomplished inside Microsoft

In relation to our project, the parser is still not complete, and needs further work to fulfill our

requirements.

Tools exist to display relations, but the view is static and based on a dump from the NDT. The

available tools do not support any kind of relation classification, i.e. it is possible to map a relation

but there is no way to know the deeper meaning of the relation (association, dependency, etc.).

The overall goal for Object Map is to display an interactive graph which allows us to obtain

knowledge about the structure of the application. The lack of relation classification reduces the

overall information we are able to read from a diagram displaying relations. Furthermore, if we

were able to describe the relations in the code in general terms, people with general

computer science knowledge would be able to gain a deep insight into the organization of the application code

more quickly.

Suggestions for refactoring have in many ways been unsuccessful.

A good way to move from C/AL to C# has not been found.

3.3 What has been accomplished outside Microsoft

3.3.1 Refactoring from Code to UML

There are many projects offering code generation from an abstract representation. A Google search

suggests that most of these products generate C++ and Java code.

A few products offer both refactoring from code to UML and vice versa. We have looked at two of the

larger products: IBM’s Rational Rose (27) and No Magic’s MagicDraw (28). We have previously worked with

Rational Rose without being completely satisfied with the UML generation. We therefore chose to look at

MagicDraw to see what the product offers when it comes to generating UML from code. The Enterprise

edition of MagicDraw comes with refactoring capabilities for Java, C++ and C# among other languages. We

installed a demo of MagicDraw 16.5 Enterprise and generated a diagram based on two files with a

generalization relation between them.

[Class diagram content: class Table with attributes -number : string, -id : string, +Number : string, +ID : string and operations +Table(in number : string, in id : string), +Table(in info, in ctxt), +GetObjectData(in info, in txt) : Table; class REATable with operations +REATable(in table : REATable, in tablewith : Table), +REATable(in info, in ctxt), +GetObjectData(in info, in ctxt) : REATable]

Figure 3-1 UML diagram manually created with Visio

Figure 3-2 UML diagram generated from code with MagicDraw

Figure 3-1 shows the relation we would like to map between Table and REATable. The analyzed classes can

be seen in the folder “Code\MatchingInLinq\MatchingInLinq\” on the attached DVD. The generalization

relation is described in depth in section 4.1. Figure 3-2 shows the generated model from MagicDraw. The

diagram does show a directed relationship from REATable to Table, but it is not clear that REATable is a

child/subclass of Table. We believe there are two legitimate reasons for this.

We have not spent hours on fully understanding how all the options of the tool work. MagicDraw

is a complex tool and we only generated a standard UML class diagram; expert users are probably

able to produce more useful diagrams.

The tool is directed towards creating code from a model and thus the model has to be very

detailed.

We do, however, believe that the model created manually in Visio provides a cleaner view of the

relationship between the two classes.

We believe MagicDraw is a fair representative for products offering UML diagrams generated from code.

Furthermore, we find that the generated diagram has low readability and as such does not offer the

overview we wish to provide. Many of the tools offer some kind of refactoring in a simple drag-and-drop

fashion, but we have chosen not to look further into how they perform. None of the refactoring tools we found

supports C/AL.

3.3.2 UML designers

Many good tools exist for drawing UML. Most tools offer good design options where the mouse can be

used to arrange the diagrams in any preferred order. We have primarily used Visio 2007 for this report, but

many other good tools exist. A list of UML tools can be found at the homepage of Associate Professor M.

W. Godfrey from the University of Waterloo (29). We have not found a UML designer that can generate large

diagrams as a batch process.

3.3.3 Refactoring to Resource, Events and Agents (REA) (30)

Since the beginning of accounting software, all systems have relied on the double-entry accounting system,

introduced in section 6.3.2. Other systems have, however, emerged that try to address the drawbacks of the

double-entry system. One of the most successful is the Resource, Events and Agents (REA)

ontology. It was developed by William E. McCarthy, who published his first paper presenting REA in

1982 (31). Since then, he and others have contributed to developing REA into an elaborate bookkeeping

ontology.

The scope of this project was, as described in the introduction (see section 1), extended to cover initial REA

identification (see section 6.3.1). We therefore looked into prior work in this field to learn from former

achievements. We found that not much work has been done in refactoring accounting systems into REA. As

previously stated, most accounting systems are based on the double-entry system. We have looked into a

paper analyzing the relationship between REA and the Enterprise Resource Planning system SAP (32). The

paper focuses on the similarities in the data models of SAP and REA. Evidence is found that proves the

existence of duality within SAP, but it is also found that SAP has implementation compromises that will not

fit into a REA model, and that a REA model cannot fully describe the SAP system. The paper concludes that

REA terminology is able to present SAP data models and that the REA presentation is able to provide

valuable information to the data model.

We have not found evidence of any work identifying REA concepts from automated code analysis.

3.4 What has not been accomplished outside Microsoft

Many products for refactoring between code and UML exist. They are complicated, and it requires

a larger study to benefit from their capabilities. No refactoring tools for C/AL exist.

Prior work mapping REA to an ERP product has not focused on analyzing the actual code.

3.5 Related work summary

The most important lessons from the related work study are that we need to extend the

parser to support the relation identification this project aims to achieve and that we need to build

a custom viewer tool for presenting the identified relations in terms of UML.

Chapter 4

4

Foundational Relations (33)

The following section explains the conceptual meaning of the UML relations containment and

generalization, which are described in section 5. As previously described, the primary task of this project is to

identify these two concepts in the NAV application code. We therefore introduce the formal

theory behind them. The formal theory uses the names part_of for containment and is_a

for generalization. In this section, we primarily use the names part_of and is_a, but in the later sections

we use only the names containment and generalization, to follow the UML standard described in

section 5.

We present the definitions of part_of (containment) and is_a (generalization) in terms of standard first-order logic.

The following two primitive relations are used in our formulas.

inst(x, A) (short for instance) relates an instance x to its class A.

A statement using inst is: Jane is an instance of a human being.

part(x, y) defines parthood between two instances x and y.

A statement using part is: Jane’s heart is part of Jane’s body.

4.1 Definition of the Is_a relation

The is_a (generalization) relation is the simpler of the two relations we describe. The formula below

expresses that A is_a B. The right-hand side of the definition states that, for all x, if x is an instance of class

A then x is also an instance of class B. A statement fulfilling this formula is: A human

male is a human.

$$A\ \mathit{is\_a}\ B \;=_{\mathrm{def}}\; \forall x\,\bigl(\mathit{inst}(x, A) \rightarrow \mathit{inst}(x, B)\bigr)$$

Formula 4-1 is_a
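
To make the definition concrete, the is_a formula can be evaluated over a small finite set of instances. The sketch below is only an illustration under stated assumptions: the universe, the class names and the function signature are invented for the example, and the inst relation is represented as a set of (instance, class) pairs.

```python
def is_a(a, b, inst, universe):
    """A is_a B: every x that is an instance of A is also an instance of B."""
    return all((x, b) in inst for x in universe if (x, a) in inst)


# Hypothetical instances and classes, invented for illustration.
universe = {"jane", "john", "rex"}
inst = {
    ("jane", "Human"),
    ("john", "Human"),
    ("john", "HumanMale"),
    ("rex", "Dog"),
}

print(is_a("HumanMale", "Human", inst, universe))  # True
print(is_a("Human", "HumanMale", inst, universe))  # False, Jane is not a human male
```

Note that, as in the first-order formula, the relation holds vacuously when A has no instances in the universe.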

4.2 Definition of the Part_of relation

The part_of (containment) relation is a bit more complex. To provide a better understanding, the formula

is divided into two parts, which are joined in a third formula.

The part_for formula below expresses that, for all x, if x is an instance of A then there exists a y such that y is

an instance of B and x is part of y. With the part_for formula we can state that

human testis part_for human being. But we cannot state that human being has_part human testis, because

only males have testes.

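$$A\ \mathit{part\_for}\ B \;=_{\mathrm{def}}\; \forall x\,\bigl(\mathit{inst}(x, A) \rightarrow \exists y\,(\mathit{inst}(y, B)\ \&\ \mathit{part}(x, y))\bigr)$$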

Formula 4-2 part_for

The has_part formula below expresses that, for all y, if y is an instance of B then there exists an x such that

x is an instance of A and x is part of y. With has_part we can state that human being has_part heart,

but not that heart part_for human being, because many animals have a heart.

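$$B\ \mathit{has\_part}\ A \;=_{\mathrm{def}}\; \forall y\,\bigl(\mathit{inst}(y, B) \rightarrow \exists x\,(\mathit{inst}(x, A)\ \&\ \mathit{part}(x, y))\bigr)$$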

Formula 4-3 has_part

The part_of formula is a combination of the part_for and has_part formulas. It expresses that A is

part_of B if A is part_for B and B has_part A. With the part_of formula we are able to state: human

heart part_for human being and human being has_part human heart, which together satisfy the definition of the

part_of relation.

$$A\ \mathit{part\_of}\ B \;=_{\mathrm{def}}\; A\ \mathit{part\_for}\ B\ \ \&\ \ B\ \mathit{has\_part}\ A$$

Formula 4-4 part_of
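
The three formulas can be checked against the examples in the text by evaluating them over a small finite model. The sketch below is an illustration only: the universe, the class names and the function signatures are assumptions made for the example, and inst and part are represented as sets of pairs.

```python
def part_for(a, b, inst, part, universe):
    """A part_for B: every instance of A is part of some instance of B."""
    return all(
        any((y, b) in inst and (x, y) in part for y in universe)
        for x in universe
        if (x, a) in inst
    )


def has_part(b, a, inst, part, universe):
    """B has_part A: every instance of B has some instance of A as a part."""
    return all(
        any((x, a) in inst and (x, y) in part for x in universe)
        for y in universe
        if (y, b) in inst
    )


def part_of(a, b, inst, part, universe):
    """A part_of B: A part_for B and B has_part A."""
    return part_for(a, b, inst, part, universe) and has_part(b, a, inst, part, universe)


# Hypothetical model, invented for illustration.
universe = {"jane", "john", "rex",
            "jane_heart", "john_heart", "rex_heart", "john_testis"}
inst = {
    ("jane", "Human"), ("john", "Human"), ("rex", "Dog"),
    ("jane_heart", "Heart"), ("john_heart", "Heart"), ("rex_heart", "Heart"),
    ("jane_heart", "HumanHeart"), ("john_heart", "HumanHeart"),
    ("john_testis", "HumanTestis"),
}
part = {
    ("jane_heart", "jane"), ("john_heart", "john"),
    ("rex_heart", "rex"), ("john_testis", "john"),
}

print(part_for("HumanTestis", "Human", inst, part, universe))  # True
print(has_part("Human", "HumanTestis", inst, part, universe))  # False
print(part_of("HumanHeart", "Human", inst, part, universe))    # True
```

In this model, heart part_for human being fails because the dog's heart is not part of any human, which mirrors the argument given for Formula 4-3.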

The first-order logic definitions of is_a and part_of (generalization and containment, respectively) state the

requirements for the patterns this thesis aims to identify. The formal definitions have not been in the

foreground when developing the identification algorithms. The formulas are presented to provide a link

between the UML concepts generalization and containment, introduced in section 5, and their formal

mathematical definitions.

Chapter 5

5

Unified Modeling Language (UML) (34)

As mentioned in section 1, we have selected UML as the output standard for our findings. The

reason is that we aim to identify common computer science concepts, described in section 4, and

to present them in general, abstract terms. In the following section, we give an

introduction to the part of UML we use to present our findings.

5.1 UML Terminology Overview

The Unified Modeling Language (hereafter UML) has become the de facto standard for describing object-oriented

software by means of models and diagrams. As object-oriented languages started gaining

acceptance in the late 1970s, the available modeling techniques proved inadequate for describing objects and

their relationships. This was the driving force behind the development of a new modeling technique, and

Grady Booch, James Rumbaugh and Ivar Jacobson started developing and documenting UML in 1994. They

founded a UML consortium with several organizations willing to allocate resources to developing UML.