Automated method for identifying and prioritizing project risk factors

advertisement

Automated SoftwareEngineering, 2, 231-248 (1995)

© 1995 Kluwer AcademicPublishers, Boston. Manufactured in The Netherlands.

Automated Method for Identifying and Prioritizing Project

Risk Factors

GREGORY A. TOTH

g.toth@ieee.org

USC CenterJbr Software Engineering, Universityof Southern Califbrnia, Los Angeles, CA 90089

Abstract. Thispaper describes an approachfor automaticallydeterminingprojectrisk factorsbased on answers

to an interactive questionnaire. Stored knowledge of complex interrelationships is used to transform qualitative

inputs into quantitative factors used to rank and compareidentified risks. A tool called the Software Technology

Risk Advisor is described which implements these techniques and provides a testbed for further refinement.

Keywords: knowledge-basedsoftware engineering, risk management, advisorysystems.

1.

Introduction

Risk management techniques, when correctly applied, can help ensure the successful outcome of software projects. Risks are potential issues that, if not identified and managed,

could unexpectedly surface and cause substantial trouble when least expected. There are

many philosophies and approaches for managing risks, including those discussed by Boehm

(1989) and Charette (1989).

The first step in risk management is to identify and prioritize the risk areas relevant to a

project. Each project has different risks due to the unique characteristics that differ from

project to project. Effective risk management generally requires substantial insight into

technical, schedule and cost issues and their complex interactions. Such insight is usually

found in persons having extensive experience in a given application domain coupled with a

broad understanding of software at large. These experts are always in short supply, making

it difficult to repeatedly apply their expertise across many projects. One method of capturing

their expert knowledge and making it more widely available is through knowledge-based

tools such as expert systems. Since risk management requires expert knowledge, it is a

natural application for knowledge-based approaches.

The value of automated risk management tools is to make expert knowledge available to

less-expert participants in the software development process. Software technology experts

are often needed to assess new ideas and proposals. Automated knowledge-based tools

may allow more frequent assessment by non-expert personnel, especially during concept

formation stages. Such assistance could improve the software development process by

providing earlier identification of high-risk approaches and thus allow more time to develop

appropriate risk management strategies.

1.1.

Assisting Risk Management

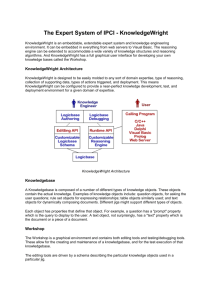

A knowledge-based tool known as the Software Technology Risk Advisor (STRA) was developed to provide automated expert assistance during risk management activities. Figure 1

illustrates the tool's overall topology. The STRA acquires information about a project from

the user, correlates the information to stored knowledge of software technical issues, and

232

TOTH

Project Type

Inferred & Prioritized Risk;Areas

•

b Risk Descriptions

Project Size

Importance of Product Needs

Rationale

Dp

User interface

tmportanom of Process Needs

{mportance of Capabilities

IP

Risk Reduction Advice

• Detected Conflicts

I

p Explanations

User Controls

• Knowledge Crou-Refemnc~s

Risk Engine

• Comtoiter

Figure 1.

1

, ,,

Overview of the STRA tool.

identifies disparities between project needs and current software capabilities. Such disparities are considered a source of risk, primarily due to the need for software technologies

that are immature or unavailable. Using previously developed or time-proven software technology generally implies lower risk, while needing non-existent or unproven technology

generally implies higher risk. Software technology risk was selected as a subset of the more

general technical/schedule/cost risk areas associated with modern software development.

A key step in the approach is to transform qualitative user inputs into quantitative factors

used to prioritize identified risk areas. Risk areas are determined and ranked by analyzing

multiple project factors using interrelationships stored in the knowledgebase. A prioritized

list of risk areas is automatically produced along with absolute and relative weighting factors

for comparison purposes. Explanation of each risk area includes a description of the risk

and rationale for each inference; this allows fine tuning and provides insight into how results

were achieved.

Several additional functions are provided as a by-product of basic risk identification.

The knowledge sources used as a basis contain much useful information about the current

state of software technology, including both products and the process by which they are

created. This knowledge can be useful to users who are not familiar with current software

issues. Explanation facilities provide organized access to this knowledge within a structured framework. A broad taxonomy of large-scale software development issues is thereby

covered.

The knowledgebase is quantified using opinions of software experts. Less-experienced

users can benefit from access to this expertise. Additional benefit is provided by interrelationships and consistency rules derived from experts and stored in the knowledgebase.

The STRA also provides a framework for maintaining notes related to individual facets

of software technology and engineering. These notes provide insight into various issues

and are provided upon user request. As such, the STRA serves as a unified repository

for knowledge, relationships, notes, cross-references, and other information pertinent to

software technical issues and risk areas.

AUTOMATEDMETHODFOR IDENTIFYINGAND PRIORITIZINGPROJECTRISKFACTORS

233

1.2. Outline

This paper is organized in sections describing various aspects of the STRA and its underlying

organization. Section 2 describes the risk knowledgebase and how the necessary knowledge

was gathered and represented. Section 3 describes implementation details and user interaction. Section 4 discusses results from experimental use. Section 5 compares the STRA to

related work. Section 6 discusses the status of STRA development and future work. These

sections are followed by conclusions which summarize important contributions made by

the STRA.

2.

2.1.

Risk Representation

Software Technology Taxonomy

Techniques used in the STRA are not dependent on a specific source of knowledge. However, the U.S. Department of Defense Software Technology Strategy (SWTS) (United States

Department of Defense, 1991) provides a reasonable taxonomy of current software technologies and their relative maturity. This was considered a good starting point for STRA development, and was used as the basis for an initial knowledgebase. Other written knowledge

sources, such as briefings, conference proceedings and textbooks, were used as supportive

and clarifying material.

The main objective of the SWTS was to define a plan for pursuing specific software technology areas. In order to determine which technologies are most important, it was necessary

to identify capabilities needed to support future military systems. The DoD evaluated several long-range military plans to identify system/software needs and software capabilities

that satisfy those needs. By qualitatively evaluating the maturity of each required capability,

the SWTS identifies technologies needing further research and development work.

The SWTS document classifies software needs into two main groups: Products and

Process. Products refer to the end-item software systems being built, while process refers

to the techniques used to build them. Each of these groups is further divided into functions

and attributes.

Five categories of software products are identified for DoD military systems: Command,

Control, Communications and Intelligence (C3I) systems; Combat Unit systems; Corporate

Information Management (CIM) systems; Manufacturing, Science and Engineering applications; and Simulation and Training systems. Each of these areas has different technology

needs.

Product functions describe specific capabilities provided by software, such as "Fuse

information from changing, imperfect multiple sources." Functions are usually observable at the system level, and are typically elaborated in system or software requirements

specifications. Product functions are often domain-specific in nature.

Product attributes are desired qualities of a software system, such as "Interoperability and

portability of DoD software applications and reusable software components." In general

they are less domain-specific than product functions, and while being less tangible, they

are often equally as important. Developing software systems possessing desired attributes

requires software technologies in much the same way as product functions.

Process functions and attributes, on the other hand, cover technologies used when building or maintaining software. While these may not be as technologically intense as product

234

TOTH

technologies, they are nevertheless important to successful software development. As software systems continue to grow in size, process technologies become even more important

to assuring software quality, timeliness, and cost effectiveness.

Each need is satisfied by one or more capabilities, which are also identified in the SWTS.

Capabilities are specific applications of technology, such as "Smart Sensors" The SWTS

attempts to describe each capability's maturity using simple qualitative statements such as

"Simple aids (adaptive beamforming)"

Heuristics were derived from expert interviews and from the author's own software

development experience. Heuristics are used to generate three additional sets of knowledge

in the STRA: 1) Consistency rules used to check user responses to questions; 2) Size-effect

scale factors used to scale certain risk areas as a function of project size; and 3) Risk

reduction advice.

The knowledgebase also contains supporting information for each need, capability, and

risk area. This data consists of elaborated descriptions, notes, and cross-references to the

knowledge sources. Supporting information is available to the user by selecting appropriate

STRA menu commands.

2.2. Acquiring Risk Information

Domain experts were interviewed to supplement written knowledge sources. Kelly (1991)

provides many practical guidelines for knowledge engineering. The main thrust of expert

interviews is to: 1) Provide quantitative values for needs and capabilities; 2) Determine

areas of highest payoff; 3) Identify heuristics that relate various facets of needs, capabilities,

and technologies; and 4) Provide feedback on working versions of the STRA. The first part

of each expert interview is a discussion of the expert's background in order to establish

proper context for questions and answers. A major challenge of STRA development was

to identify and mechanize quantitative relationships between knowledge elements; much

of this quantification comes from the experts.

Interviewing domain experts is an ongoing activity used to expand and improve the

knowledgebase. Two experts were interviewed during initial knowledgebase development.

These initial interviews were conducted through a series of meetings which followed a

pre-planned agenda. Since the interviewees had busy schedules, establishing and following a detailed agenda was critical to achieving interview objectives. A concerted effort

was made to keep each interview on-track to avoid spending time on low-payoff topics.

The first expert had considerable expertise in software processes and the C3I product

domain. The second expert had product experience in the simulation/test and corporate

information management domains, including non-DoD and commercial applications. At

the end of each interview working STRA prototypes were demonstrated. Results obtained

from these interviews included:

• Product and process observations

i) Each person, depending on their perspective, has a different notion of product function

importance. For example, someone in the CIM domain may consider CIM systems

critically important, while someone in the C3I domain may consider it less important.

ii) Product functions and attributes tend to vary from project-to-project, while process

functions and attributes are more universal.

AUTOMATED METHOD FOR IDENTIFYING AND PRIORITIZING PROJECT RISK FACTORS

235

• Heuristics

i) Requirements engineering is still generally weak.

ii) Many laboratory/prototype algorithms and approaches do not scale well to operational

systems, particularly in the areas of AI, robustness, and human-computer interfaces.

iii) Distributed and parallel systems are increasingly needed but are very immature.

iv) Several software technologies frequently deliver less than what is claimed for them.

For example: CASE tools, reusability, COTS software, and Ada.

v) Many observations from past experience were discussed; several of these were converted into conflict checking rules.

• Quantitative values

i) Nominal values for the relative importance of product and process functions and

attributes were obtained; these were used to help formulate default values in the

knowledgebase.

• Comments on STRA prototypes

i) The general STRA implementation approach, as demonstrated during working prototypes, seemed effective.

3.

Implementation Details

Use of the STRA follows a template-based approach. The user answers questions which are

tailored to the project being analyzed. Answers are used to infer risk areas by correlating

them to relationships stored in the knowledgebase. Correlation and inferencing is done

using a weighted connection network. Final outputs of the network correspond to risks

areas which are sorted and displayed in priority order. The process is interactively repeated

as many times as desired. Sample screen shots are shown in Figs. 5 through 7.

3.1.

User Inputs

Figure 2 depicts the flow of user interaction with the STRA tool. First the project type

and size are selected from pick lists. The type of project determines which set of detailed

StellSTRA

pSele~~Typeof

Pro~,ctSure

; RJt~OsIo :

Figure 2.

Flow of user interaction with the STRA tool.

236

Table 1.

TOTH

Project size criteria.

Project size

Size criteria, KDSI

Small

Medium

Large

Very large

< 10

I0 to 100

100 to 500

> 500

questions will be asked. Next a series of questions are answered by clicking check boxes

to rate the importance of various "needs" to the project. At any time during this process

the user can ask for a risk assessment. When a risk assessment is generated, the results

are displayed along with weighting factors and rationale. By viewing the rationale and

contributing factors, the rating factors can be refined to improve risk assessment accuracy.

Much of the fine tuning takes place by optimizing the ratings for lower-level capabilities

that satisfy higher-level needs. All ratings have default values which are stored in the

knowledgebase; these provide a point of departure and help guide less-knowledgeable

users.

3.1.1. Type of Project.

The user selects a type of project that most closely matches

his or her own project. The current list of project types includes C3I systems, Combat

Unit systems, Corporate Information Management systems, Manufacturing, Science and

Engineering applications, and Simulation and Training systems. Once the type is selected,

the STRA searches the knowledgebase to locate relevant needs. The relevant needs are

displayed 0n-screen so that importance ratings can be set by the user. A "catchall" project

type is included which contains all possible needs and thus supports projects which do not

conveniently fit a pre-defined type.

3.1.2. Project Size. The maturity of certain software technologies is sensitive to project

size. For example, a technology that works well for small projects may not scale well on

large projects. Size-effect scale factors are carried in the knowledgebase to handle these

conditions. The user specifies project size according to the number of Delivered Source

Instructions (defined by Boehm (1981)) as shown in Table 1. Project size selection based on

factors other than KDSI (e.g. function points) could be easily accommodated. In general,

technologies that are sensitive to project size become more risky as the size increases.

3.1.3. Importance of Product~Process Needs.

The importance of relevant product and

process needs is initially set to default values derived from experts and stored in the knowledgebase. These defaults correspond to "typical" projects. Since each user's project may

not be completely typical, the default importance ratings can be interactively changed to

improve risk assessment accuracy. The importance of each need is selected from the set

{None, Very-Low, Low, Medium, High, Very-High, Extra-High}.

Each time a rating is set by the user, it is checked against all other ratings by applying

consistency rules stored in the knowledgebase. If a conflict or inconsistency is detected,

conflicting needs are displayed and the user may re-answer the question or disregard the

conflict. Consistency checking provides extra assistance to novice users by immediately

pointing out potential problems. It also helps experienced users avoid simple oversights.

AUTOMATEDMETHODFOR IDENTIFYINGAND PRIORITIZlNGPROJECTRISK FACTORS

237

3.1.4.

Importance of Next-Level Capabilities. Each product or process need is satisfied

by one or more capabilities. The importance of each satisfying capability is set to a value in

the range {None.. Extra-High}. If the importance of a capability is high, it may have a strong

influence on risk results. If the importance is low or none, it may have a weak influence.

Capability importance ratings are initially set to defaults stored in the knowledgebase. The

user may wish to change these defaults to better match specific characteristics of the project.

For each risk identified by the STRA, detailed rationale shows the quantitative influence

of each needed capability. This is useful for iteratively fine-tuning capability importance

ratings to optimize the risk assessment.

3.1.5.

Risk Description, Advice and Rationale. After all questions have been answered,

the STRA correlates answers and computes numerical risk values for each possible risk.

These are stored in descending order and the top ten risk items are displayed. At this point

the user may request detailed information about each risk or repeat the rating/assessment

cycle. Detailed risk information includes a risk description, advice on risk reduction, a

list of all related technical capabilities and their shortcomings, and all user answers that

generated the risk.

An important aspect of the STRA is its explanation capability. The user can select

"explain" to access supplemental information, notes and cross-references to the underlying

knowledge sources.

3.2.

Inferring and Ranking Risks

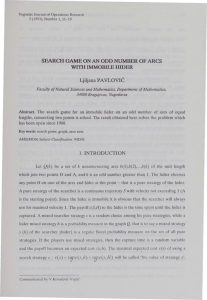

The STRA inferencing mechanism is a weighted connection network having four levels

as shown in Fig. 3. Figure 3 is a simplified representation of the topology; there are actually hundreds of connections. Three of the four levels have weighting factors on each

interconnect. A weighted connection scheme allows all factors to be considered simultaneously while retaining a computational (numerical) approach to identifying risks. Key

quantitative factors and their use at each connection level is further described below. Each

node in the network is implemented by an object-oriented data structure containing all

pertinent information along with connections to other nodes. The current number of

interconnections at each level of the knowledgebase is summarized in Tables 2 and 3.

Table2. Numberof connections at each level.

Project or

product t y p e

C31

Combat Unit

CIM

Mfg, Science

& Engineering

Simulation

& Training

User-Defined

Level 2

Level 3

Level 4

connections connections connections

28

30

22

24

135

129

125

118

28

29

26

27

23

122

26

43

185

33

23 8

TOTH

Table 3. Other knowledgebase statistics.

Project or

producttype

C3I

Combat Unit

CIM

Mfg, Science

& Engrg

Simulation

& Training

User-Defined

Connection

I

Product Product Process Process

function at~ibute function attribute Software Consistency

needs

needs

needs

needs capabilities

rules

7

9

1

3

10

10

10

10

7

7

7

7

4

4

4

4

113

110

107

99

61

44

33

30

2

10

7

4

98

30

22

10

7

4

153

78

hE

/evei I

Caernect¢on

Levet 2

Connection

Level3

t

net

[ irnpor

nee,

( impot

I

Conn~cIlonsign~

Level 4

[

i~lstal

[ Risk Area ]

Sea,or Fnsi~

[ Risk Area 1

[ RiskAreaI

Figure 3. Representative knowledgebase object interconnections.

Four primary numerical values, k n o w n as size, importance, maturity, and significance,

were devised to quantify and correlate knowledge. Size represents the project size (Table 1)

and can take on values from the set {Small, M e d i u m , Large, Very-large} corresponding to

n u m e r i c a l values {3, 2, 1, 0}.

3.2.1.

Importance Factor. Importance describes the relative importance of a lower-level

need or capability to a higher-level need. Importance can take on values from the set {None,

Very-Low, Low, M e d i u m , High, Very-High, Extra-High}, corresponding to numeric values

{0, 1, 2, 3, 4, 5, 6}. Using Fig. 3 as an example, Product Function Needs has constituent

AUTOMATEDMETHODFOR IDENTIFYINGAND PRIORITIZINGPROJECTRISKFACTORS

239

needs Detect Threats Amid Clutter and Jamming and need-48, where Detect Threats...

might be of medium importance and need-48 might be of very-high importance. Detect

Threats... requires capabilities Smart Sensors and cap-98, where Smart Sensors might be

of high importance and cap-98 might be of extra-high importance. Importance values are

used in connection levels 2 and 3 and are set by the user. Default importance values were

obtained from domain experts and are stored in the knowledgebase. Each level 3 connection

is also scaled by the size factor as described later.

3.2.2. Maturity Factor. Maturity factors are used solely for capabilities. They identify

the relative maturity of a software technology in today's time frame. Maturity can take

on values from the same value set as importance, except that {None} is not used. Commonality between values of importance and maturity provides symmetry and consistency

in the knowledge representation. It also allows reuse of data manipulation functions in the

implementation. All maturity factors have constant values obtained from domain experts.

3.2.3. Significance Factor. Significancerepresents the relative significance of each mismatch between needs and capabilities.It is computed as the difference between a level 3

importance and a capability's maturity factor, multiplied by the corresponding level 2 importance; the result is then scaled by the size factor. A size-effect scale factor is associated

with certain capabilities (those which are sensitive to size) to represent the net scale factor

for a small project. Linear interpolation is used to compute scale factors for other sizes.

Using Fig. 3 as an example, a sample significance calculation is shown below:

Assume:

Project size is large {1}

Detect Threats Amid Clutter and Jamming importance to Product Function Needs is

very-high {5}

Smart Sensors importance to Detect Threats Amid Clutter and Jamming is high {4}

Smart Sensor maturity is low {2}

Smart Sensors size-effect scale factor is 0.4 (net scale factor for project size = small)

Then:

Smart Sensors significance = (4 - 2) • 5 = 10

(1)

Applying the size-effect scale factor yields:

Smart Sensors significance = 10 * [1.0 - (1 • ((1.0 - 0.4)/3)] = 8

(2)

Significance is computed with a floor of zero (0) in order to prevent negative values.

The size-effect scale factor accounts for changes in a capability's significance as a function

of product size. If the size-effect scale factor is one (1) then no scaling takes place and

significance is considered independent of size.

After computing each capability's significance, all capabilities are coalesced according to

their connections to capability families. A capabilityfamily is a general technology area (e.g.

real-time systems). All capabilities belong to at least one family. The significance values

of all capabilities in a family are averaged and the result becomes that family's absolute

risk weight. Families are sorted in order of decreasing risk weight and the top ten items are

240

TOTH

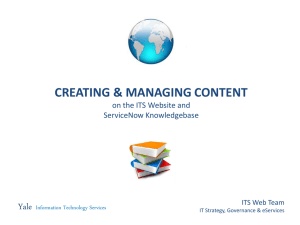

Prolect-type

name

v Need

name

instance

ShOtt-de$,C

C.......

/

I needs-product-func,,ton

need """

Ionfl~esc

notes

. capaMi ~

;: l im,l~rtan~

crosser

i l~ z''~te'act°r

needs.a

/

[cross4ef

.,. .................................................................

Capabillt~ 4,,

name

iS4

inst#nce . . . . . . . . . . . . . .

,

....... p, Caplbiiil~4mmll~ name

.

"'"

Confllct-oale lelf-hand

is*8

[ tnre~t

instance

shoR-des.~c

shoR-desc

lon~-detc

}on~.desc

r.eru.~

nsk.csduc'don

cun'ent-cat."-de$c

note~

notes

CtOSl.mf

Kbdnfp,

I ve~ion.id

ctoss..tl~f

Figure4. Knowledgerepresentation.

presented as potential risk areas. The weightings of each family are also normalized to the

highest risk weighting; this makes it easier to see relative risk positioning.

3.2.4. Knowledge Structure and Organization. The STRA architecture consists of schemata, rules, and utility functions. Schemata, also known as frames, store basic knowledge

about needs and capabilities. Schemata are interrelated through inheritance and through

relations such as has, needs, needs-a, and instance-of Importance and size-effect factors

are carried on non-inheritance relations to quantify each relationship. Attached to each

schema are textual descriptions taken from the SWTS document, an elaborated description,

applicable notes, cross-reference information, and advice. Figure 4 illustrates the schema

organization. All user input, data manipulation, risk computations, consistency checks, and

resulting printouts are performed by supporting functions.

3.3.

Consistency Rules

Consistency rules from the knowledgebase are used to detect potential conflicts between

level 2 importance ratings. Each rule consists of three ordered elements in the form (left,

right, threshold). When a need corresponding to right is rated by the user, it is checked

against all active rules to determine which conflicts may exist• Active rules are those which

are not being disregarded or ignored. A conflict is asserted by the difference between the

new rating for right and the existing rating for left is greater than threshold, either positive or

negative• Any, rule involving a left need still having a default rating is temporarily ignored

in order to prevent needless conflict assertions during initial ratings. Detected conflicts are

displayed along with related left ratings as illustrated in Fig. 6. Table 3 shows the number

of consistency rules in the current knowledgebase.

AUTOMATED METHOD FOR IDENTIFYING AND PRIORITIZING PROJECT RISK FACTORS

4.

241

Experimental Results

4.1. Sample Screens

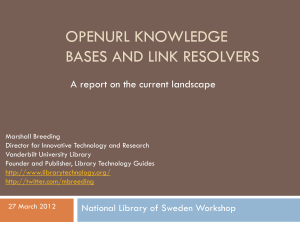

Sample STRA screens are shown in Fig. 5 through 7. Figure 5 shows the initial screen

after startup, with a Very-large C~I project as the default. From here the user would select

the appropriate project type/size and then rate importance factors for relevant needs. The

set of needs changes, based on the knowledgebase taxonomy, whenew;r the project type is

changed. A scrollbar is used to expose needs that are not currently visible due to screen

limitations.

All importance ratings have default values; these can be changed by making corresponding checkbox selections. A special symbol next to each need shows whether it has been

rated or is still at the default. A button is provided to reset all importance ratings to default

values; this is useful for returning to a known state.

Each time an importance rating is set, consistency rules are consulted to check for conflicts

with other ratings. If a conflict is detected, a message is displayed such as shown in Fig. 6.

At this point the user has several options for dispositioning the conflict. If a conflict

is disregarded, the associated consistency rules will never again be checked. The total

number of disregarded conflicts is shown by a gauge in the upper right corner. Controls are

provided to turn conflict checking on or off and to reinstate any disregarded conflicts.

.~

Softw'arl Technology Risk Advisor - Version 2.1.3 - Co~yricjht ( ~ t393 bY CeIgOPY 4. Toth. A l l l i g h t s i s s i r ~ e d .

Exit Risk Advise,- I

P~ojectType:

~

Project $1zo: ~

N

N

VL

L

VL

~

H

H

VH

~

-

Figure 5.

M

H

£H

Importance of Product Function Needs:

Exl=latn... ] > ~ete~ threa~ amid clutter and Jammlnq

~1~

_J

}late Next Llvel_)

Exollin.. ! :~ FUSe information f1"om mul~ll~le sour'¢8~

~,

.~

Rata Next L~vel_ '; Explain.,. I > Evaiuate '.hrea~ and ootions under dlff~cult ¢0ndltlons

_j

R I t l Next t.avii.. ~ Explain.. ~ :~ Timely and =ffictlve m~;tion plannin9

/

Ra_~.;~x~v_ll_.~

Ex2ttin.. i > Rill--time. ¢otn=lex b;t~ll m~t in the "~oq of w;¢'

._j

Ra~e Next LeveL, ]

£xolaJr~.. ~ > Tl~nsmlt C31 ~nfcrmtltlon tO ctynamically changint re¢ioienl~

3~

-J

R;l~e Next LeveL. ) , £ x 0 1 a i L )

• Oeny adversa.ry attest to C31 infocm~tion

VH

EH

~

l i ~ i Next Revel-- ~ , E;~glain.- )

• Rioid r i c o n f l t u r l ~ l l i ~

~

Re! Hilt LlvlL )

~1~

A

L

I1~. Vet 2.0.1

(:* 500 1<]3$0

Ra_t±~ext L e v e L )

I m g o r t a n c l of Product Atl~ibute Needs:

E~oIillL ] > JnilroDIKl~illty

> Easy--to--USe ~ut katd-~o-mlsuse ¢iurlrl9 ~'lii$ situations

~J

ll~e Next LllVel--j

Exolain._)

~

ReEl NIxt L i t I L )

£xllliin.., ) • Oill~iblgld Illll$ with herlro<]loul lIfoCiSSOpl arid $ O ~ i J ~

_J

Rgll N l x t L I v l l L /

CXOlii~L j

_J

RI~I Next CIvll--'l

Exolaln- ) • G u a r l n t . d riled-tirol OlllPitl~In in COmpleX e l b ~ J d d i d lysI:el$

> /al'alltl ~¢o~ll~ll~lg

_J"

Rail Next L I v e L ~

~xi:iliiP,. ~; • ROl:uit l e d Piult-toler~nt distributed ~y*;t~ms

..J

Rat8 Next I.iVII_ ]

£ x l ) l i i l ~ ) • InfOtma~on secui'tt?

J

_.1

Rail N l x t b i l l

VH

EH

1

VL

il

-Jr

_j

N

To O d l u l t ~aluls "= C I l l " ~11 Con fl~cI O l i r l l i r d l ~

~1~

~1~

~

R l i l t All ImOot~lncl l a t l r ~

Command, Con~,ol, CornmUnlCationsandlntelllc,~ence(C30~y~ims

Vlry-k~,¢~l

q

L

Rtcik-~llte Rll kil ;

) , Ex~lll~-. )

• $ o f t w i ~ a~d ~ystim ~ i C Y i i l U f l ~ ¢ l l

Imlortinc~

o;= P r o c l l s FuncCI~R N e l d I :

Initial STRA screen showing Level I and Level 2 needs,

242

TOTH

:J

$ofT~v~'e Technology RIsk Advisor - version 2.1-1 - CooYl'ight {c) 1993 by C r e g o w A. Toth. All R[ghts Alser~ed.

~eQlcuiato RIsks! ~

Exit Risk Ad'~lsc¢ ]

Reset AH ~rn~)ortance RatteRs TO Default ualuls ~

CREW"All Conflict Disregards )

KB ID: Vet 2.0.;

i

Conflict Checking; 0 ~ 0 ~

PtojectTypo:

VL

Num Disregards: "~ •

,

,

,

,

,

.

,

;

;

. . . . . . . .

~'~ Comm;nd, ConIl'ot. Commun~Gltion~s~dlntel;igence(C3~$ystem$

~'~JlC~ $t11~: ~

N

Auto-Rec;tlculatll &lsks: ~

vlry-~;~Qe () 500 KD$0

L

M

_J

~

H

~

VH

EH

~

_j

~mooKance of PPoduc'¢ F u n c t l o . Needs:

R&(~ Naxt Level

Exl~aln

1

Oe~lct threets amid clutter and Jamming

FUS~ infccm~ion ~rorn multiple sources

_2

_J

.-i

-2"

~v~luace ~hre|~ ~nd o0tlons under d[ffTcult condition

_J

_J

_J

.2

A¢¢Ordlnq to heuris:ics, your ~ting of:

fien~

Informa¢i~o sect,city [ LOW ]

N

VL

L

may oi In¢onsistln~ with your e~ller r~ting$ for~

_J

_J

.J

Transmit C3I information to O/namically changin 9 re¢ipiolltS [ VeP/-HkJh ]

Deny advers~/ access to C31 ~nformation [ Ve~-HIRh ]

-J~ii!iiiil

S

r ~

~, elg awing nywayJ

Abarldon~,,R~tt[ng&TryAqain)

Kello&lgnccltTh~sConfl~C[FromNowOn~

systarns

_J

N

_J

VL

.J

:d

.J

~

.J

k

M

H

VH

EH

RatQ NeXt LOVO~., i

EXl~llict.. '; • So~va/~ and system S&(~ty ~ S S U r l ~

[ m p o r t a n o l of Pr~olss Function Noeds~

Figure 6. Conflict detected after rating importance of Information Security.

Explanations are available by pressing Explain buttons. Lower-level capability ratings

are accessed by pressing Rate Next Level... buttons. At any time a risk assessment can

be performed by pressing the Recalculate Risks! button. This causes a list of risks to be

displayed as shown in Fig. 7. A scrollbar can be used to assess additional risks not currently

visible. When automatic risk recalculation is turned on, risks are automatically recalculated

and displayed each time an importance rating is changed. The entire risk inferencing process

is fast. On a Sun SPARCstation 10 it taken around 0.5 seconds to infer, calculate, sort and

display all risks.

Risks are shown in priority order along with their absolute (second column) and normalized (third column) weighting factors. Explanation, advice and rationale for each risk

are accessed by selecting the risk as shown in Fig. 7. The rationale section shows all

capabilities contributing to the risk, along with their significance and maturity factors, maturity descriptions, and originating needs. Pop-up windows can be "pinned" so they can be

viewed while fine-tuning importance ratings.

4.2.

Usage Results

The STRA has been used under uncontrolled conditions and during demonstrations. It has

been applied to several sample projects, primarily in the C3I and Combat Unit domains.

During experimental use, an initial risk assessment was usually generated after spending

five to ten minutes rating level 2 needs. In most cases the initial risk assessment was close to

AUTOMATED METHOD FOR IDENTIFYING AND PRIORITIZINGPROJECTRISK FACTORS

")

243

SoP~v'~-~ TlchnolocjV Risk Ad~isor - Verlion 2.1,3 - Cooyright (d 1993 by Cr~gorv A. Toth. All lie]has ReserVed.

~ x l t l l s k Advisor }

Recalculate llsksl )

Conflict Checkln~J:

Off~ ~ - n

A u t o - R e c a I c a l a t l Risks: ~

Project Type:

~

ProJeclc SIze:

~1 Ve~-~a.~e (:~ .~00 ~ S 0

N

~

VL

L

H

Nut~

Risk ~hat real-time trn~lern~n~atLon doe~ ~ot meat tfvouqhouc

memc¢% ~ I / 0 bandwidth re~mremant~ within r~;ourQi

limitations lm0osed by physical, technological, o¢ cost

constrain c~.

EH

VH

Im~o~mn,

Rate ~lext ~vel_ ~ Ex~lain.J

~etlct t

RISk ieduCtion Advicl:

Ra~ Next I.evel_ ) Ex~:lain... i

Fuse inf

'- in.., )

.~valuit~

ESter(aSh ~hroug~d, memoq, and I/O bandwidth buctds . f l y in

project ~n~ oe~iodlc~lly compare against actual me~rsurtmen~ in

OrdEr re =rO~id¢ mlximum t i r o l to address 0ro~)lem¢. senehmatk

¢TItlQI ~g¢~'ithms to rlitO determine needed oroo~ssinq r~sources,

£v~luam compilers and run-~irne environments to ide~tifi/week~esse$

a~d ~o ¢¢sess o0rtccman~l asoo¢'~3, S~Io~ ~ro~rammlnq lan~uaqes

that have r ~ o n a b l y c~ood codl ped~rmanc~ on your ~ , r ~ t oto~ssor

i

~'ioritlzed Risk

A.____re~u;

F~t~

(.U..7) (lOOl ~

tlO.o) ( t 1 I t ~

t~tr,~l

( 7 . 7 ) ( ~T;] N't~'lci41 t n t e t l l ~ m e ~

< 7,~) ( 6x} F~Jh role~,c~

, -,

~_ ':

Trans~l

(I.q,. Adt C},

Needed Caeabilitles That Substantiate T h i s Risk:

6. ( 7 . 0 ) (60) ? ~ * l l * t ~ 3

hoid it

l~orcan

Cao; Real-£ime sensor ~usion [ 1~10 ]

Cug Needs k ~ r i p i d bullet end fusion algc~tthms.

Mac Uiry-Low

F~t= Fuse In f~matton fTom muitlp¢e souf~s [ Vet~/-Hlgh ]

($.5) ( ~tl} l~w,S,~d Lif~c,,,jele ~lop*a.~r. T~<,ts

8.

Pe,*tabll

hsy..;

-;

-J

-i

-:

¢

-.I

aatl ~0xt Lavo~..;

I

~x~iaie~. }

Ois~ridu |

t

"a'

t

Fuse information from m u K l p [ e s o u ~ $

N

VL

!

_~

--]

_J

~

L

M

H

VH

EH

..J

~

~

~

_]

--J

~1~ ..J

..J

.J

~a~ Next Livel_ ) Exolzin--~ • ~:cura

-- ~

Ra~ ~axt Live#- } ~xDl;irt.. ! • ~,daptl

/

~

_t

R .....

Ratl ~ l x t level neld5

~AK

j~

R e a l - T i m e Systems

Risk Oesc~iltion:

Command. Con~-ol, Communication; an~t Intelligence (C30 Syst

Ris k A s s e s s m e n t

L

2.

3.

/*.

_~

Reset ALl l i n e . t i n c t latings TO Defaull

Needed

tLevel_)Ex,fairt-),R.d-~im

Cap: R e a l - t i m e hemroqeneous dIstribumd P , ~ t e m s [ IOD ]

Cur:. Real-time ~l<hnoiogy Ilmi~d in ho~rc<Jeneou~-dis~ibu~b

at~¢

Ha~ Hedlum

Fcc R.l-time. com~l|x h a i t i mqt in ~h¢ "fo~ d war" [ Vl~,-HIc~h ]

Ca~ ~eaF-time pcra,liet ~'YStems $o{C"~q tO~lS [ 10,O ]

......

f....

"I

1|

n Needs:

~;~r ;~m¢ifTr a n n l i ~ f i ~

i

FigureZ Example risk asessment, Level 3 needs, advice and rationale for Real-TimeSystems.

expectations but with perhaps one or two risks which did not seem intuitive. Examination

of rationale showed that certain capabilities assumed to be needed (by virtue of their default

level 3 importance ratings) were really not applicable for the given project. Once those

ratings were optimized through several iterative passes, the assessed risks were usually

quite intuitive. In several cases the STRA also identified risks previously unknown to the

user; examination of rationale confirmed that they were indeed concerns to the project.

Some of the most useful assistance came from consistency checking rules. On many

occasions users realized importance but subtle relationships after the STRA identified rating

conflicts. For example, if Transmit C3I Information To Dynamically Changing Recipients

is highly important, Information Security and Process Support For Defect-Free Software

Development and Modification should also be highly important due to the need to prevent

compromise of information. Such assistance is invaluable to less-expert users, even if only

to stimulate deeper thought.

Several shortcomings were identified as experimental usage progressed; most are discussed in section 6.1. Two additional shortcomings were also noticed:

• The SWTS taxonomy and assessments are geared for large-scale software projects. STRA

results for small projects are sometimes erroneous due to insufficient knowledge about

scaling effects. This matter will be corrected through further knowledge acquisition.

• Under certain conditions the averaging function used to compute capability family significance is inadequate. A family having a large number of low-significance capabilities

can mask a few high-significance capabilities and produce a lower absolute weighting.

244

TOTH

An alternative is to discard zero-significance capabilities, but this causes a problem for

importance values of {None}. Other statistical methods are being investigated.

5.

5.1.

Related Work

Taxonomy-Based Risk Identification

The Software Engineering Institute (SEI) has been studying software risk management for

some time. They have developed and documented a Taxonomy-Based Risk Identification

method (Carr et al., 1993) which is the first phase of a comprehensive effort to address risk

identification, analysis, planning, tracking, control, and communication.

SEI's risk identification method consists of a taxonomy-based questionnaire (TBQ) and

a process for its application. The TBQ is hierarchical and is structured into three levels known as class, element and attribute. Three classes are defined: Product Engineering, Development Environment, and Program Constraints. Product Engineering is

similar in concept to Product needs in the SWTS/STRA, while Development Environment is similar to Process needs. The third class, Program Constraints, deals with programmatic issues such as resources, type of contract, and customer/subcontractor interfaces.

Elements comprise the second level of the TBQ, and while they correspond to Level

2 needs in the STRA, they bear little further resemblance. Some random examples of

elements include Requirements, Integration and Test, Development Process, Management

Process, Resources, and Program Interfaces.

Attributes make up the third level; they are similar in concept to STRA Product and

Process attributes. Random examples include Stability, Testability, Security, Personnel

Management, Schedule, and Budget. The TBQ included in (Carr et al., 1993) contains a

total of 13 classes and 64 attributes.

Risks are identified by interviewing key project personnel. Each interview consists of

a question and answer session followed by issue clarification. Interviews are typically 2.5

hours or less in duration and are guided by questions from the TBQ. Each series of questions

begins with a "starter" question, and depending on the answer, may be followed by one or

more detailed "probe" questions. This approach helps avoid spending time on irrelevant or

unimportant topics. TBQ questions are answered with "Yes" or "No" responses; additional

discussion may be needed to clarify issues or concerns.

Risk interviews are conducted by a trained risk identification team. During SEI field

testing of the TBQ, teams consisted of SEI personnel and client personnel. Including

client personnel helps transition the methodology to software development organizations.

Although risk identification team members need not be experts in the project domain,

they should have at least some domain knowledge and an understanding of software at

large.

Specific risk areas are identified by manually correlating interview results and identifying

taxonomy areas having issues or concerns. The list of issues and concerns constitutes

identified risks.

TBQ development was evolutionary; preliminary versions were field tested and results

were incorporated into the next version. The TBQ is said to have evolved over the course

of 15 field tests and will continue to be improved based on additional feedback. In general,

AUTOMATEDMETHOD FOR IDENTIFYINGAND PRIORITIZINGPROJECTRISK FACTORS

245

the TBQ method of risk identification is considered effective and efficient by developers

and field test participants.

5. I. 1.

Comparison to STRA Techniques

Similarities

•

•

•

•

•

Taxonomy-based

Identifies risk areas

Repeatable risk identification

Addresses products and processes

Useful for software risk management

Differences

• No risk quantification or prioritization

• Includes non-technical factors such as programmatic issues

• Different risk identification philosophy; addresses poorly communicated known risks

rather than unknown risks

• No risk reduction advice

• Qualitative rather than quantitative approach

• Interview mechanisms have an associated cost in time, effort and facilities

• Manual method; not yet computer-automated

• Validated through a series of field tests

5.2. Expert COCOMO

Madachy (1994) has extended Mitre's work on ESCOMO (Day, 1987) by developing a

knowledge-based assistant for software cost estimation and project risk assessment. In

addition to computing intermediate COCOMO results from user inputs, Madachy's tool

identifies risks, detects user input anomalies, and provides advice.

Risks are identified by detecting combinations of upper extreme cost drivers such as tight

schedule and a highly complex system. Cost driver combinations are evaluated using results

from a knowledgebase. Each risk combination has an associated rating such as moderate,

high, or very-high. Identified risks are tabulated according to their membership in five

different categories. Risk weights are computed as a function of risk probability and risk

consequence.

Consistency rules are used to detect potential user input anomalies such as embedded

mode and very-low complexity. Advice is provided by displaying information about each

risk from the knowledgebase.

5.2.1. Comparison to STRA Techniques

Similarities

•

•

•

•

•

Provides useful project assistance

Uses Knowledge-based approach

Quantitatively identifies potential risks

Checks consistency of user inputs using rules

Provides rationale and advice

246

TOTH

Differences

•

•

•

•

•

•

6.

Smaller knowledgebase

Simpler inferencing

Also computes cost and schedule using intermediate COCOMO

Different method of identifying and quantifying risks

Includes schedule effects on risk

Provides only simple advice

Status and Future Work

6.1. Implementation Versions

STRA development has progressed through several implementation versions, each building on the previous. Version 1 was implemented in Lisp using the KnowledgeCraft expert

system shell (Carnegie Group, Inc., 1990 a-c), which provided a schema-based knowledge

representation framework running on a Sun SPARC platform. The Version 1 user interface

used simple TTY-oriented menus and queries and provided simple risk information displays. Version 1 successfully demonstrated the risk inference algorithms, but had several

shortcomings:

• User interface was cumbersome and forced a sequential question/answer dialogue; this

did not allow easy back and forth cycling between user inputs and inferred results.

• Development and execution required the KnowledgeCraft expert system shell to be installed and licensed on the host platform; this limited the number of machines that could

support the STRA.

• Knowledgebase was implemented in the Lisp code, making knowledge maintenance

difficult for non-Lisp programmers.

• Simple TTY interface did not readily permit user modification of Level 3 capability

importance values; this is needed to allow fine-tuning of the project profile.

Version 2 was written entirely in C and C++ using the Xview toolkit (Heller, 1991)

running under Sun OpenWindows TM. It provides a graphical user interface (shown in Figs. 5

through 7) which is much easier to use and allows random user inputs. All knowledge

representations and manipulations as well as rule evaluations are implemented in C/C++.

Since Lisp is not used, the KnowledgeCraft environment is not required and the STRA can

operate on any Sun SPARC platform equipped with a standard windowing environment.

This makes it much more accessible to the software community at large.

The Version 2 design decouples the user interface and risk computation engine from the

knowledgebase, allowing each to be maintained separately. A knowledgebase compiler

was written to transform textual knowledgebase files into binary representations used at

runtime; this allows end users to modify supplied knowledgebases or develop their own.

Version 1 shortcomings were eliminated, however several desired features were identified

during experimental use. These included printing hardcopy results, saving and restoring

importance ratings between sessions, and running on Personal Computers in addition to

Sun workstations.

Version 3 is being written in C++ under Microsoft@ Windows TM. It addresses the

desired featues and shortcomings of Version 2 and is structured as a toolkit containing the

AUTOMATEDMETHODFOR IDENTIFYINGAND PRIORITIZINGPROJECTRISKFACTORS

247

Risk Advisor and a Knowledgebase Compiler. The widespread availability of Personal

Computers running Microsoft Windows should lead to increased use of the STRA toolset.

6.2.

Future Work

There are seveal areas in which the STRA is being enhanced to provide greater utility:

• Expanding the knowledgebase through additional knowledge acquisition and expert interviews.

• Enhancing knowledge about small project and commercial systems domains.

• Incorporating results of field use and demonstrations.

• Providing the STRA on multiple host platforms to allow more widespread use.

Other areas are being researched to see how the STRA can be extended and integrated

into the software engineering process:

• Adding cost and schedule effects to risk identification and prioritization.

• Providing knowledge-based assistance for deciding which risks to accept, perhaps by

looking at risk exposure or economic impact.

• Determining how the STRA best fits into risk management practices and software engineering environemnts.

• Looking for other applications of the STRA such as assessing technical risks during

software design, implementation, and integration/test.

7.

Conclusion

This paper has described the Software Technology Risk Advisor, including its internal

architecture and knowledgebase structure. The STRA has provided useful technical risk

advice on almost all of the projects it has been applied to. It provides low-cost, quickturnaround assistance for risk identification, risk prioritization and risk reduction.

The DoD SWTS taxonomy and technology assessments have provided a viable starting

point for the STRA knowledgebase. The taxonomy-based approach supports orderly growth

as the knowledgebase is expanded, and provides a framework for notes, explanations and

advice.

Usage feedback has improved the initial STRA in several key areas, including user

interface, ability to modify lower-level factors, explanations, and support for multiple host

platforms/environments. Additional usage and testing will lead to further refinements.

Ongoing research may identify new ways of expanding STRA capabilities and tying into

other related work.

tn summary, knowledge-based tools such as the STRA are feasible, practical, and useful

for software risk management. Applying knowledge-based approaches to provide expert

assistance is an effective way to leverage risk management techniques in software engineering.

Acknowledgments

I am grateful to Barry Boehm for suggesting and supporting this research, for providing useful comments on my work, and for participating as an expert during knowledge acquisition

248

TOTH

activities. Prasanta B o s e p r o v i d e d helpful c o m m e n t s during various stages of this w o r k and

during preparation o f this paper.

References

Boehm, B.W. 1989. Software Risk Management. IEEE Computer Society Press, Washington, D.C.

Boehm, B.W. 1981. Software Engineering Economics. Prentice-Hall, Englewood Cliffs, NJ.

Charette, R.N. 1989. Software Engineering Risk Analysis and Management. Intertext Publications/Multiscience

Press and McGraw-Hill, New York, NY.

Carnegie Group, Inc., 1990a. CRL-OPS.

Carnegie Group, Inc., 1990b. CRL Technical Manual.

Carnegie Group, Inc., 1990c. Knowledge Craft-Technical Overview.

Carr, M.J., Konda, S.L, Monarch, I., Ulrich, EC., and Walker, C.E 1993. Taxonomy-Based Risk Identification,

Technical Report CMU/SEI-93-TR-06, Software Engineering Institute.

Day, V.C. 1987. Expert System Cost Model (ESCOMO) Prototype. Proceedings, ThirdAnnual COCOMO User's

Group Meeting. Software Engineering Institute.

Heller, D. 1991. XViewProgrammingManual. O'Reilly & Associates, Sebastopol, CA.

Kelly, R. 1991. Practical Knowledge Engineering. Digital Press.

Madachy, R. 1994. Knowledge-Base Risk Assessment and Cost Estimation. Proceedings, The Ninth KnowledgeBased Software Engineering Conference. IEEE Computer Society Press.

United States Department of Defense, 1991. Draft Department of Defense Software Technology Strategy.