
Notes on CSE 540 Homework 2

Problem 2.20

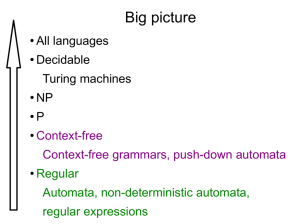

Let A/B = {w | wx ∈ A for some x ∈ B}. Show that if A is context-free and B is regular, then A/B is context-free.
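The definition of A/B can be read directly as a search for a witness x. The Python sketch below does that by brute force for one concrete, hypothetical choice of languages, A = {0ⁿ1ⁿ : n ≥ 0} and B = 1*, for which A/B = {0ⁿ1ᵐ : m ≤ n}. The helper names (`strings_over`, `in_quotient`, `in_A`, `in_B`) are illustrative only. Because the search bounds |x|, a False answer only means that no witness of that length was found, so this is a sanity check rather than a decision procedure.

```python
from itertools import product


def strings_over(alphabet, max_len):
    """Yield every string over `alphabet` of length 0..max_len."""
    for n in range(max_len + 1):
        for chars in product(alphabet, repeat=n):
            yield "".join(chars)


def in_quotient(w, in_A, in_B, alphabet, max_x_len):
    """Bounded test of w ∈ A/B: look for x ∈ B with |x| <= max_x_len and wx ∈ A.
    True is definitive; False only means no witness x exists within the bound."""
    return any(in_B(x) and in_A(w + x) for x in strings_over(alphabet, max_x_len))


# Hypothetical example languages: A = {0^n 1^n : n >= 0} and B = 1*.
def in_A(s):
    n = len(s) // 2
    return len(s) % 2 == 0 and s == "0" * n + "1" * n


def in_B(s):
    return set(s) <= {"1"}
```

For example, `in_quotient("001", in_A, in_B, "01", 4)` is true with witness x = 1, while no x ∈ 1* makes 011x a member of A.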

Suppose A is context-free and B is regular. Let

$$P = (Q_P, \Sigma, \Gamma_P, \delta_P, q_{0,P}, F_P)$$

be a PDA that recognizes A, and let

$$M = (Q_M, \Sigma, \delta_M, q_{0,M}, F_M)$$

be an NFA without ε-transitions that recognizes B (one exists, because every regular language is recognized by a DFA). We will show that A/B is context-free by constructing a PDA $P' = (Q', \Sigma, \Gamma', \delta', q'_0, F')$ that recognizes A/B.

The intuitive idea of the construction is as follows. P′ starts out behaving like P while reading a prefix w of the input string. At a nondeterministically chosen point, P′ “guesses” that it has reached the end of w. From then on, it behaves like P and M running simultaneously, except that it “guesses” the symbols of the string x rather than actually reading them as input. If in this way it can reach an accepting state of P and an accepting state of M at the same time, then P′ accepts. Note that there is no reason why the stack would have to be empty at the point where P′ begins the guessing phase. So it is necessary for P′ to continue simulating P, stack included, in order to account properly for the stack contents.

Formally, we define P′ as follows:

• $Q' = Q_P \cup (Q_P \times Q_M)$

• $\Gamma' = \Gamma_P$

• $q'_0 = q_{0,P}$

• $F' = F_P \times F_M$

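• δ′ is defined as follows, for $a \in \Sigma_\varepsilon = \Sigma \cup \{\varepsilon\}$ and $u \in \Gamma_\varepsilon = \Gamma_P \cup \{\varepsilon\}$. For $q_P \in Q_P$ (i.e. if P′ is in the initial phase):

$$
\delta'(q_P, a, u) =
\begin{cases}
\delta_P(q_P, a, u), & \text{if } a \in \Sigma \text{ or } u \in \Gamma_P,\\
\delta_P(q_P, \varepsilon, \varepsilon) \cup \{((q_P, q_{0,M}), \varepsilon)\}, & \text{if } a = \varepsilon \text{ and } u = \varepsilon.
\end{cases}
$$

The added ε-move to $(q_P, q_{0,M})$ starts the guessing phase without reading input or changing the stack. For $(q_P, q_M) \in Q_P \times Q_M$ (i.e. if P′ is in the guessing phase):

$$
\delta'((q_P, q_M), a, u) =
\begin{cases}
\emptyset, & \text{if } a \in \Sigma,\\
\displaystyle\bigcup_{b \in \Sigma} \{((r_P, r_M), v) : (r_P, v) \in \delta_P(q_P, b, u) \text{ and } r_M \in \delta_M(q_M, b)\} \\
\qquad \cup\ \{((r_P, q_M), v) : (r_P, v) \in \delta_P(q_P, \varepsilon, u)\}, & \text{if } a = \varepsilon.
\end{cases}
$$

The first set lets a guessed symbol b drive P and M together; the second lets P make its own ε-moves (for example, to pop its stack) while M stays where it is.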
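To make the construction concrete, here is a small Python sketch of P′. Everything in it is an assumed encoding rather than anything from the notes: a transition table maps (state, input symbol, popped symbol) to a set of (next state, pushed symbol) pairs, the empty string plays the role of ε, a stack is a tuple whose top is its last element, and M is an ε-free NFA given as a map from (state, symbol) to a set of states. The names `accepts`, `quotient_pda`, and `max_stack` are hypothetical. The acceptance test is a breadth-first search over configurations with a cap on stack height, which is enough for the small example but is not a general decision procedure. In this sketch the guessing phase keeps P's own ε-moves alongside the guessed symbols, and the phase switch neither reads input nor touches the stack.

```python
from collections import deque

EPS = ""  # the empty string stands for ε (nothing read, popped, or pushed)


def accepts(delta, start, finals, w, max_stack=32):
    """Breadth-first search over configurations (state, input position, stack).
    The stack is a tuple whose top is its last element; configurations whose
    stack would exceed max_stack are dropped, so the search always terminates."""
    seen = set()
    queue = deque([(start, 0, ())])
    while queue:
        config = queue.popleft()
        if config in seen:
            continue
        seen.add(config)
        q, i, stack = config
        if i == len(w) and q in finals:
            return True
        reads = [(EPS, i)] + ([(w[i], i + 1)] if i < len(w) else [])
        pops = [EPS] + ([stack[-1]] if stack else [])
        for a, j in reads:
            for u in pops:
                for r, v in delta.get((q, a, u), ()):
                    rest = stack[:-1] if u else stack
                    new_stack = rest + (v,) if v else rest
                    if len(new_stack) <= max_stack:
                        queue.append((r, j, new_stack))
    return False


def quotient_pda(delta_P, start_P, finals_P, delta_M, start_M, finals_M):
    """Build (delta', start', finals') for P' from P (recognizing A) and an
    ε-free NFA M (recognizing B). Phase-1 states are P's own states; the
    guessing-phase states are pairs (q_P, q_M)."""
    delta = {}

    def add(key, move):
        delta.setdefault(key, set()).add(move)

    p_states = ({start_P, *finals_P} | {q for q, _, _ in delta_P}
                | {r for moves in delta_P.values() for r, _ in moves})
    m_states = ({start_M, *finals_M} | {q for q, _ in delta_M}
                | {r for targets in delta_M.values() for r in targets})

    # Phase 1: behave exactly like P while actually reading w.
    for key, moves in delta_P.items():
        for move in moves:
            add(key, move)
    # Phase switch: an ε-move that reads nothing and leaves the stack alone.
    for q in p_states:
        add((q, EPS, EPS), ((q, start_M), EPS))
    # Guessing phase: never read input. A guessed symbol b moves P and M
    # together; an ε-move of P leaves M's component unchanged.
    for (q, b, u), moves in delta_P.items():
        for qm in m_states:
            next_m = [qm] if b == EPS else delta_M.get((qm, b), ())
            for r, v in moves:
                for rm in next_m:
                    add(((q, qm), EPS, u), ((r, rm), v))
    finals = {(p, m) for p in finals_P for m in finals_M}
    return delta, start_P, finals


# Hypothetical example: P recognizes A = {0^n 1^n : n >= 0}, M recognizes B = 1*.
delta_P = {
    ("s", EPS, EPS): {("p", "$")},  # push a bottom-of-stack marker
    ("p", "0", EPS): {("p", "0")},  # push one 0 for each 0 read
    ("p", EPS, EPS): {("q", EPS)},  # guess that the 0s are finished
    ("q", "1", "0"): {("q", EPS)},  # pop one 0 for each 1 read
    ("q", EPS, "$"): {("f", EPS)},  # marker on top: every 0 was matched
}
delta_M = {("m", "1"): {"m"}}
delta_Q, start_Q, finals_Q = quotient_pda(delta_P, "s", {"f"}, delta_M, "m", {"m"})
```

With these inputs, P′ should accept exactly the strings 0ⁿ1ᵐ with m ≤ n. For instance, w = 0 is accepted only because P may pop its marker $ with an ε-move after x = 1 has been guessed, which is why the guessing phase keeps P's ε-moves.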

We claim that P′ accepts w if and only if there exists a string x such that P accepts wx and M accepts x. Indeed, in an accepting computation of P′ on input w, all of w must be read during the first phase (since nothing is read during the guessing phase), and the input symbols b that are guessed during the second phase determine a string x that is accepted by M and is such that wx is accepted by P. Conversely, if w is a string with the property that wx ∈ A for some x ∈ B, then there is an accepting computation of P′ in which w is read during the first phase and x is guessed during the second phase. In this case, the P-components of the states determine an accepting computation of P on input wx, and the M-components of the states determine an accepting computation of M on input x.

Problem 2.26

Show that if G is a CFG in Chomsky normal form, then for any string w ∈ L(G) of length n ≥ 1, exactly 2n − 1 steps are required for any derivation of w.

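Lemma: For all n ≥ 1, if G derives a nonempty sentential form w in n steps, then n = 2·T(w) + NT(w) − 1, where NT(w) denotes the number of nonterminal symbols in w and T(w) denotes the number of terminal symbols in w. (The word nonempty excludes the one-step derivation S ⇒ ε, which is possible when G has the rule S → ε.)

Note that the desired result follows immediately from the Lemma, as the special case in which w contains no nonterminal symbols: if w ∈ L(G) has length n ≥ 1, then T(w) = n and NT(w) = 0, so every derivation of w has exactly 2n + 0 − 1 = 2n − 1 steps.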

We prove the Lemma by induction on n.

In the basis case, n = 1, and the derivation of w must have one of the following two forms:

1. S ⇒ a, where S → a is a rule of G and w = a.

2. S ⇒ AB, where S → AB is a rule of G and w = AB.

In the first case, 2·T(w) + NT(w) − 1 = 2 + 0 − 1 = 1 = n, and in the second case, 2·T(w) + NT(w) − 1 = 0 + 2 − 1 = 1 = n.

For the induction step, suppose as the induction hypothesis that for some n ≥ 1 we have shown that for all nonempty sentential forms w, if G derives w in n steps, then n = 2·T(w) + NT(w) − 1. Suppose now that w is a nonempty sentential form that is derived by G in n + 1 steps. The derivation then has the form:

$$S \Rightarrow^{n} x \Rightarrow w.$$

Applying the induction hypothesis to the first portion of the derivation (i.e. all but the last step), we obtain n = 2·T(x) + NT(x) − 1.

In the last step of the derivation, either a rule of the form A → a is applied, or else a rule of the form A → BC is applied. (A rule S → ε cannot be used here: the start variable does not appear on the right-hand side of any rule, so it does not occur in x.) In the first case, NT(w) = NT(x) − 1 and T(w) = T(x) + 1, so 2·T(w) + NT(w) − 1 = 2·(T(x) + 1) + (NT(x) − 1) − 1 = 2·T(x) + NT(x) = n + 1. In the second case, NT(w) = NT(x) + 1 and T(w) = T(x), so 2·T(w) + NT(w) − 1 = 2·T(x) + (NT(x) + 1) − 1 = 2·T(x) + NT(x) = n + 1. In either case, we have 2·T(w) + NT(w) − 1 = n + 1, completing the induction step and the proof.
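The Lemma's invariant is easy to watch in action. The Python sketch below uses a small hypothetical CNF grammar (S → AB | a, A → AB | a, B → BA | b, not taken from the problem), performs random leftmost derivations, and asserts after every step that the number of steps so far equals 2·T + NT − 1 for the current sentential form. The names `GRAMMAR`, `measure`, and `random_derivation`, the random rule choice, and the length cap that forces terminal rules are all assumptions made only so that every run terminates.

```python
import random

# Hypothetical CNF grammar; the start variable S never appears on a right-hand side.
GRAMMAR = {
    "S": [("A", "B"), ("a",)],
    "A": [("A", "B"), ("a",)],
    "B": [("B", "A"), ("b",)],
}


def measure(form):
    """Return 2*T(form) + NT(form) - 1 for a sentential form (a tuple of symbols)."""
    t = sum(1 for s in form if s not in GRAMMAR)
    nt = len(form) - t
    return 2 * t + nt - 1


def random_derivation(seed, max_len=8):
    """Random leftmost derivation from S. Once the form has max_len symbols,
    only rules A -> a are used, so the derivation always finishes."""
    rng = random.Random(seed)
    form, steps = ("S",), 0
    while any(s in GRAMMAR for s in form):
        i = next(k for k, s in enumerate(form) if s in GRAMMAR)  # leftmost variable
        rules = GRAMMAR[form[i]]
        if len(form) >= max_len:
            rules = [r for r in rules if len(r) == 1]
        form = form[:i] + rng.choice(rules) + form[i + 1:]
        steps += 1
        assert steps == measure(form)  # the Lemma's invariant after every step
    return "".join(form), steps
```

Every completed run returns a terminal string w together with a step count equal to 2|w| − 1, as the Lemma predicts.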

Problem 2.22

Let C = {x#y | x, y ∈ {0, 1}* and x ≠ y}. Show that C is a context-free language.

First note that a string x#y is in C if and only if either |x| ≠ |y| or else the strings x and y differ at some particular position; that is, x = tau and y = vbw (concatenations of the strings t, u, v, w and the symbols a, b) with |t| = |v|, a, b ∈ {0, 1}, and a ≠ b.
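This case split can be checked exhaustively on short strings. In the Python sketch below, the hypothetical helper `differs_somewhere` tests whether x = tau and y = vbw with |t| = |v| and a ≠ b, which amounts to x and y disagreeing at some position that both strings have; `in_C_by_cases` combines it with the length test. Comparing the result with x ≠ y for all binary strings up to a small length is evidence for the characterization, not a proof.

```python
from itertools import product


def differs_somewhere(x, y):
    """True iff x = t a u and y = v b w with |t| = |v| and a != b,
    i.e. x and y disagree at some position that both strings have."""
    return any(a != b for a, b in zip(x, y))


def in_C_by_cases(x, y):
    """The two cases from the notes: different lengths, or a mismatch."""
    return len(x) != len(y) or differs_somewhere(x, y)


def binary_strings(max_len):
    """Yield every string over {0, 1} of length 0..max_len."""
    for n in range(max_len + 1):
        for bits in product("01", repeat=n):
            yield "".join(bits)
```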

It is a simple matter to construct a CFG that derives all strings of the form x#y where |x| ≠ |y|:

D → ADA | AB# | #AB

A → 0 | 1

B → ε | AB

The productions for D generate an equal-length, but otherwise arbitrary, prefix and suffix, and then, at some arbitrary point, use AB# or #AB to decide which side of the # will have the larger number of symbols (AB generates one or more extra symbols on that side).

It is slightly trickier to construct a CFG that derives all strings of the form tau#vbw with |t| = |v|, a, b ∈ {0, 1}, and a ≠ b.

E → C₀1B | C₁0B

C₀ → AC₀A | 0B#

C₁ → AC₁A | 1B#

In the first step of a derivation from E, it is decided whether the “mismatch pair” (a, b) is going to be (0, 1) or (1, 0), and the symbol b is put in position, followed by a B that generates the arbitrary string w. The expansion of C₀ or C₁ then generates the equal-length but otherwise arbitrary strings t and v. This expansion terminates with the placement of a, followed by the generation (by B) of an arbitrary string u before the #.

Finally, the complete grammar, with start variable S, is obtained by adding initial rules that choose between generating x and y of different lengths, or generating x and y with a mismatch at a specific location:

S → D | E
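As a sanity check on the complete grammar, the Python sketch below enumerates, by breadth-first search over leftmost derivations, every terminal string of length at most N derivable from S, and compares that set with the strings x#y in C of the same lengths. To keep every symbol a single character, C₀ and C₁ are renamed X and Y (a hypothetical renaming), and the empty string stands for ε. Sentential forms are pruned when even their shortest possible yield exceeds N, using minimum yield lengths worked out by hand for this grammar; the names `RULES`, `MIN_LEN`, `generated`, and `in_C_up_to` are illustrative.

```python
from collections import deque
from itertools import product

# The grammar for C, with C0 renamed X and C1 renamed Y; "" is ε.
RULES = {
    "S": ["D", "E"],
    "D": ["ADA", "AB#", "#AB"],
    "A": ["0", "1"],
    "B": ["", "AB"],
    "E": ["X1B", "Y0B"],
    "X": ["AXA", "0B#"],
    "Y": ["AYA", "1B#"],
}
# Length of the shortest terminal string each nonterminal can derive.
MIN_LEN = {"S": 2, "D": 2, "A": 1, "B": 0, "E": 3, "X": 2, "Y": 2}


def min_yield(form):
    """Shortest possible length of a terminal string derived from `form`."""
    return sum(MIN_LEN.get(c, 1) for c in form)


def generated(max_len):
    """Every terminal string of length <= max_len derivable from S."""
    seen, result = {"S"}, set()
    queue = deque(["S"])
    while queue:
        form = queue.popleft()
        i = next((k for k, c in enumerate(form) if c in RULES), None)
        if i is None:  # no nonterminals left: a terminal string
            result.add(form)
            continue
        for rhs in RULES[form[i]]:  # expand the leftmost nonterminal
            new = form[:i] + rhs + form[i + 1:]
            if min_yield(new) <= max_len and new not in seen:
                seen.add(new)
                queue.append(new)
    return result


def in_C_up_to(max_len):
    """The strings x#y in C with |x#y| <= max_len, listed directly."""
    words = ["".join(p) for n in range(max_len) for p in product("01", repeat=n)]
    return {x + "#" + y for x in words for y in words
            if x != y and len(x) + len(y) + 1 <= max_len}
```

Pruning by minimum yield is safe because no derivation can shorten a sentential form below that bound, so every string of length at most N is still found.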
