Rating children's enjoyment of toys, games and media


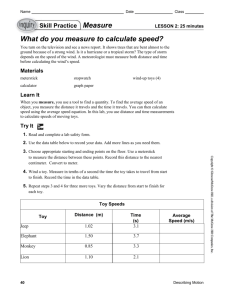

Presented at the 3rd World Congress of the International Toy Research Association on Toys, Games and Media, London, August 2002

Sharon Airey, Lydia Plowman, Daniel Connolly and Rosemary Luckin

Overview

This work evolved as part of an ESRC/EPSRC-funded research project investigating children’s use of electronic toys and related software. Four- to six-year-old children were studied over varying periods of time in formal and informal learning environments (schools, homes and out-of-school centres). Various data were collected regarding the children’s interaction styles, attitudes towards the games and toys, and patterns of use. Some form of rating scale was needed to assess the children’s preferences among the software games, and to compare the novel toy and software with their usual toys and games. First it had to be decided what the children would rate the items on. It was decided that this would mostly be enjoyment of, or ‘liking’ for, the toy or game, and that the scale could possibly also be used to rate expectation, fun, endurance, difficulty and cleverness. Previous research in this area was evaluated and a suitable rating scale was developed.

Previous research

Rating scales traditionally used with adults may not always be suitable for research with child subjects. Unfamiliar words and scales based on numbers can be too difficult for children to understand well enough to give a reliable response. If unsure about an appropriate answer, children often give what they think is the ‘correct’ response rather than their own opinion. One method that has been widely used is the ‘smiley-face’ scale, where subjects are presented with a series of simple faces ranging from sad to happy. Even young children tend to be familiar with cartoons and have a basic understanding of human emotions, so they can comprehend this method well. With this in mind Wong and Baker (1988) attempted to design a child-friendly rating scale.
The scale was intended for children undergoing medical treatment, to enable them to express the severity of the pain they experienced. However, it was thought that it might be possible to adapt the scale to measure more positive aspects such as enjoyment.

Originally Wong and Baker explored existing rating scales for children “such as those using colour, chips and one unpublished paper that has used 4 different faces”. However, the shortcomings of these scales became apparent when the researchers found that “young children had considerable difficulty using any scale with a number concept, a ranking concept or unfamiliar words. Many young children did not know colours sufficiently well to create their own colour scale”. Having regularly used smiley face stickers during the care of child patients in hospital, the researchers decided to develop the idea into a rating scale. Initially children were given six empty circles in which to draw a range of faces from “a very smiling face to a very sad face”, and the faces were then assigned a rating from 0 to 5, where 0 is no pain and 5 is intense pain. Six choices were considered appropriate as a compromise between offering enough choice to give a sensitive rating scale and few enough not to confuse the children. Although most rating scales consist of an odd number of choices (3, 5, 7) to allow a totally neutral choice, the team reports that “six choices were also consistent with the other scales we were using and facilitated statistical comparison among the scales”. It is interesting to note that whilst most children drew the sequence of faces from no pain to intense pain increasing from left to right, several drew the scale of pain descending from left to right (see Figure 1).

Figure 1: Sample of child-drawn rating scale in Wong-Baker study

Copyright Institute of Education, University of Stirling 2002.

As most other scales increase from left to right it was decided to continue this method with the new scale.
A professional artist was asked to draw the final version of the scale, basing it upon the child-drawn faces but with more consistency and differentiation. The final scale is presented in Figure 2 below. After further investigation, including a comparison of vertical and circular layouts of the scale, it was found that the scale was a reliable and suitable assessment tool for a wide range of ages, from young children through adolescence and even into old age. The scale should indeed be universal as “the advantages of the cartoon type face scale is that it avoids gender, age and racial biases”.

Figure 2: Wong-Baker pain rating scale

Whilst the Wong-Baker scale is appropriate for use in childhood pain assessment, studies in other areas would benefit from a more versatile scale that can be used to measure the usability of novel toys or computer software.

It has been argued that the smiley face scale is not accurate enough. MWResearch, a marketing research company, presents the view that “small children in particular are often unable to differentiate the fine nuances of an ‘especially happy’ smiley and a merely ‘slightly happy’ smiley”. MWResearch also claim that children have difficulty equating a sad face with their own views about not liking something, and that most children will instinctively prefer happy smileys to sad ones. Based on this view MWResearch developed a novel tool for gathering children’s opinions. This object is described as a “swivelling thumb-scale”, a solid ‘thumb’ which can be placed in the position a child wishes in order to represent their opinion of a particular product or activity. The thumb-scale has graded markings on the reverse side, allowing the interviewer to assign a numerical figure to the child’s response. The idea of a tangible object that children can physically manipulate to express their views has potential as a research tool.
One of the fundamental factors in designing or selecting a scale for use with children and toys or ICT is to identify exactly what you are asking children to rate. Assessment of adults’ opinions often uses the concept of ‘satisfaction’, but this can be difficult for children to understand. A child will rarely find a game or toy that makes them sad, or dissatisfied, so the language needs to be defined.

Read and MacFarlane (2001) measured the responses of children aged 6-10 years to a range of novel computer interfaces. The main focus of this study was to measure the ‘fun’ aspect of the task, as opposed to the concept of ‘satisfaction’ that is more commonly used when assessing adult software interaction. Three key ‘fun attributes’ were defined as “expectations, engagement and endurability”. Expectations of the task and subsequent perceptions were measured using a repertory grid test (Fransella & Bannister, 1977), which established the subjects’ opinions of the task at various stages during the trials. Children were then videotaped whilst on task and the tapes were later analysed for general engagement with the task. This was assessed by observation of facial expression (giggling, smiling), utterances and body language. The children were asked to rate the task by means of a ‘funometer’, a Likert-type scale using a vertical smiley face scale (Risden et al., 1997). Finally, endurability was assessed by the children’s ability to recall the activity. If the task was “memorable” it was awarded a higher endurability score.

The concept of using a vertical smiley face scale appears to be original yet logical. It could make sense to a child that the ‘smiley-ness’ of the faces is reinforced by the scale increasing upwards. This research used observation of the child on task to support the child’s subjective judgement after taking part in the task.
There are also other measurement techniques available to assess fun that do not rely on subjective responses to some kind of rating scale. The potential to assess fun during computer interaction using human psychophysiology is briefly explored by Cahill et al. (2001). Theoretically it might be possible to evaluate software usability by measuring such factors as “skin conductivity, heart activity, blood pressure, respiration rate, eye movements and electrical activities in muscles and brain”, which may vary in response to mental events. However, it is accepted that it is difficult to distinguish between physical responses to such experiences as negative and positive emotion, frustration, or fun. For example, some computer games may involve a heavy workload, mental commitment and frustration, but could still be regarded as fun by the user. The authors admit there are still many problems with this theory and do not discuss its effectiveness with child subjects, so more investigation is needed.

Recently, Frantom (2002) reviewed methods of assessing children’s attitudes towards technology. She found that there are many scales available to assess attitudes towards computer usage, rather than attitudes towards technology in general. However, these available scales do not consider children as young as those in our present study. The Children’s Attitude Towards Technology Scale (CATS) was tested on children aged 8 to 19 years and consisted of a survey defining “perceptions of the difficulty of use, and drawbacks of technology, where technology included multimedia, programmable VCRs, digital cameras, and other consumable goods as well as computers”.
Whilst the Children’s Attitude Towards Technology Scale was found to be reliable when measuring children’s attitudes towards technology in general “when attitude toward technology is defined as perceptions of the difficulty, uses and drawbacks of technology”, its use is limited regarding younger children’s perceptions of specific toys and games.

Development of the ‘sticky ladder’ rating scale

Owing to a lack of suitable rating scales for use with children, ICT and toys, a novel rating scale has been devised. This scale is based on the idea of a tangible object that children can physically manipulate to express their views. Rather than pointing to pictures on a scale, children respond by sticking a range of items onto a small fabric ladder with Velcro. For the purposes of this study the response items consist of images of typical children’s electronic toys and games. The toy and game pictures were gathered from a catalogue, and the appropriateness of the items was tested by asking children in the target age range whether they have such toys or know of them. Some blank cards are also available for children to draw any other toys they regularly play with, and if these toys or games appear regularly they are made up into standard pictures. Another set of pictures represents the various games available on the computer software used in the CACHET study: each game is led by one animal character and children are familiar with which animal represents each game.

Children are asked to place these in order on the ladder according to a range of factors, including enjoyment, expectation, fun, endurance, difficulty and cleverness. Results are then compared with views expressed by the parent and child in interviews and diaries, as well as between children to see if there are any trends related to age, gender or ability scores.
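As a rough illustration of how ladder placements might be turned into numerical scores for comparison between children, the following Python sketch gives each picture a score equal to the rung on which it was stuck and then averages the scores across children. The rung numbering (0 at the bottom of the ladder), the function names and the example placements are all assumptions made for illustration; they are not data or procedures taken from the study.

```python
from collections import defaultdict
from statistics import mean


def ladder_to_scores(placements):
    """Map each item to the rung it was stuck on.

    Hypothetical convention: rung 0 is the bottom of the ladder and
    higher rungs mean 'more fun'. Items sharing a rung get equal scores.
    """
    scores = {}
    for rung, items in placements.items():
        for item in items:
            scores[item] = rung
    return scores


def mean_scores(children):
    """Average each item's score over the children who rated it."""
    collected = defaultdict(list)
    for scores in children:
        for item, score in scores.items():
            collected[item].append(score)
    return {item: mean(values) for item, values in collected.items()}


# Invented example placements, not data from the study.
child_a = ladder_to_scores({3: ["dance game"], 1: ["music game", "bike"]})
child_b = ladder_to_scores({2: ["dance game", "bike"], 0: ["music game"]})
print(mean_scores([child_a, child_b]))
```

Allowing several items on one rung reflects the possibility that a child places games equal on the scale; a stricter ranking would need a different representation.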
The software games can be separated into two distinct groups: those which have an obvious aim, or are structured or closed (for example the Muffy game, where the child has to guide a mummy out of an Egyptian pyramid in order to complete the task), and those that are more open or creative (for example a simple colouring and pattern making game).

Figure 3: ‘Sticky ladder’ rating scale, completed with software game choices.

Ten children aged four to six years have been presented with the rating scale in both domestic and informal learning environments. The benefit of such a scale is that it does not require complex language skills or instructions. So far children as young as four have been able to comprehend the task and complete it easily. The results of the scale tend to reflect the opinions of the parents regarding their children’s preferred games and toys, and the children’s own views, as gathered from interviews and diaries, but this needs further work to be substantiated.

Sample of results

Participant GM - GM is a boy aged 6 years, just below the average age of children in this study. His verbal score was 26, one of the highest, and his non-verbal score was 18, the lowest of the boys in this group. (Ability was measured using the Wechsler Preschool and Primary Scale of Intelligence - Revised, WPPSI-R.) GM already has a Gameboy and an electronic Battleships game. He is fairly familiar with the computer for his age and his mother sees the PC as being for learning and educational games, whilst the Gameboy is for playing. GM’s favourite toy is his Gameboy and he enjoys playing games on the PC. GM was very keen on the software and immediately began to interact well with it, understanding the controls and aims of the games. He didn’t like the music (Prunella) game at all. GM liked the Arthur toy very much when he was first shown it.
Over the following week GM played with the toy alone as well as at the PC. For the first few days he played with the toy, and put it to bed in his room with a blanket. He appeared to really like the gross sayings and laughed out loud at the toy. He also liked having the toy with him at the PC for the first few days, enjoying the dance game that made him laugh out loud. However, in the last few days GM’s use of both the toy and the computer game tailed off, as if the novelty had worn off. GM said he liked the software, particularly the dance game (Arthur) and the mummy game (Muffy).

GM’s views gathered through interview and diaries, as described above, are fairly consistent with the preferences he expressed on the rating scale (Figure 4). His active dislike of the Prunella game (which involves making music) can be seen. He does not express a definite preference for any other software game, placing them all equal on the scale even though he earlier expressed a preference for the Arthur (dancing and colouring) game.

[Chart "Fun rating - GM": score (0 to 8) by game/toy]

Figure 4: Rating scale results for child GM

[Chart "Fun rating - SV": score (0 to 8) by game/toy]

Figure 5: Rating scale results for child SV

Participant SV - SV is a girl aged 6 years and 9 months, the oldest girl in our at-home study. Her verbal score was 23, slightly below average, and her non-verbal score was 30, well above average. She is the middle child, with an older brother and a younger sister, CV. The sisters have use of the family Playstation and Gameboy, but do not play on them very often.
SV in particular asks for electronic toys, which the girls hear about through friends as they have no family TV. Both girls are familiar with computers and use their father’s laptop at weekends for the Internet and painting. Their mother is not particularly keen on electronic toys, and thinks they stop imaginative play, give immediate feedback and can be addictive.

SV and CV were captivated by DW when first presented with her. They used her efficiently and explored many of the games. During the first week, the girls played with DW every day, but only in short bursts. At the start of the week they played with her interactively, but this wore off and by the end of the week they were not switching her on, just playing with her as a doll with their other toys. During the second week, the girls stopped playing with the toy, except to control the dance game on the computer. The girls played on the computer every day to begin with, but on the last two days they chose not to, as the novelty appeared to have worn off. Their favourite game was the dancing and painting game and they disliked the dragon and mummy games.

The results from SV’s rating scale confirm the preferences she expressed in the interview and diary data. The results showed that although she enjoyed the software, it was not her favourite toy or game, and her favourite game on the software was the dancing and colouring game (DW).

In general the children’s responses on the rating scale supported the views expressed by the parent and child in the interviews and diaries. However, the views of the child can and do change regularly, so the results of the rating scales must only be interpreted as a sample of the child’s preferences at that particular point. To assess whether the preferences are more than just a fleeting opinion, the rating scale would need to be applied again at a later date to assess the stability of the view.
Whilst analysis of the findings is still ongoing, emergent findings already show that:

• Males tended to prefer the more structured games, whilst females preferred more creative games.
• Average non-verbal ability score was much higher for those children who preferred creative games.
• Average verbal score was only slightly higher for those children who preferred creative games.
• Average age was only slightly higher for children who preferred creative games.
• Children with more experience of computers tended to prefer the structured games.

Conclusions

So far results indicate that the ‘Sticky Ladder’ rating scale has the potential to be developed into a useful tool to assess children’s preferences during research or evaluation. Even very young children are able to present their opinions of the amount of fun or enjoyment a toy or game gives them. Some children were also asked to rate the toys and games according to other factors, such as difficulty. Whilst these concepts were harder for the children to grasp, some managed to present their opinions using the scale, indicating that it has potential to assess other factors, perhaps with slightly older children. The subjective quality of a toy or game can be measured by its ‘endurance’, and this scale could be used at a later period to see how enjoyable the children remember a toy or game to have been.

Further work needs to be done to assess the rating scale’s reliability, by comparing the results with those gathered using more traditional rating methods, and by using a larger number of participants. Further investigation is also needed to identify the target users of such a tool. Similar tools have been devised by Joan Murphy (2000) for use with patients with communication difficulties.
Murphy’s ‘Talking Mats’ use a wide range of picture symbols that are then placed along a range of emotion symbols on a ‘mat’ to reflect an individual’s opinion about a range of situations. For example, a resident in a nursing home for stroke victims could comment on their preferences for the week’s activities, without using any language. ‘Talking Mats’ has been widely researched and is now available for use by practitioners.

Figure 6: ‘Talking Mats’ example showing a patient’s opinion of using the telephone.

It is thought that with further development, the ‘Sticky ladder’ rating scale could be used in similar situations with children, by researchers, toy and game manufacturers and other interested groups. The symbols could be adapted according to the context and, if it proves to be a reliable tool, the ladder could be useful for assessing the enjoyment value of an unlimited range of toys, games and media.

References

Cahill, B., Ward, R. D., Marsden, P. H. & Johnson, C. (2001) Fun, Work and Affective Computing: can Psychophysiology Measure Fun? Interfaces 46, Spring 2001, p11.

Fransella, F. & Bannister, D. (1977) A manual for repertory grid technique, Academic Press.

Frantom, C. G., Green, K. E. & Hoffman, E. R. (2002) Measure development: the children’s attitude towards technology scale (CATS). J. Educational Computing Research, Vol. 26 (3), 249-263.

Murphy, J. (2000) Enabling people with aphasia to discuss quality of life. British Journal of Therapy and Rehabilitation, November 2000, Vol. 7, no 11.

MWResearch http://www.iris-net.org/whatsnew/default.asp?aID=889 Accessed on 12/8/02

Read, J. & MacFarlane, S. (2001) Measuring fun - usability testing for children. Interfaces 46, Spring 2001.

Risden, K., Hanna, E. & Kanerva, A. (1997) Dimensions of intrinsic motivation in children’s favourite computer activities, Poster Session at the meeting of the Society for Research in Child Development, Washington DC, USA.

Wong, D. & Baker, C. (1988) Pain in children: comparison of assessment scales, Pediatr. Nurs. 14(1):9-17.