Hadoop - Installation Manual for Virtual Machines

Hannes Gamper and Tomi Pieviläinen

December 3, 2009, Espoo

Contents

1 Introduction

2 Requirements

3 Installation procedure

    3.1 Installing packages

    3.2 Copy configuration and .jar files to your home directory

    3.3 Configuring hadoop-env.sh

    3.4 Configuring hadoop-site.xml

    3.5 Specify masters and slaves

    3.6 Set environment variables in current shell

    3.7 Format the namenode

4 Running the cluster

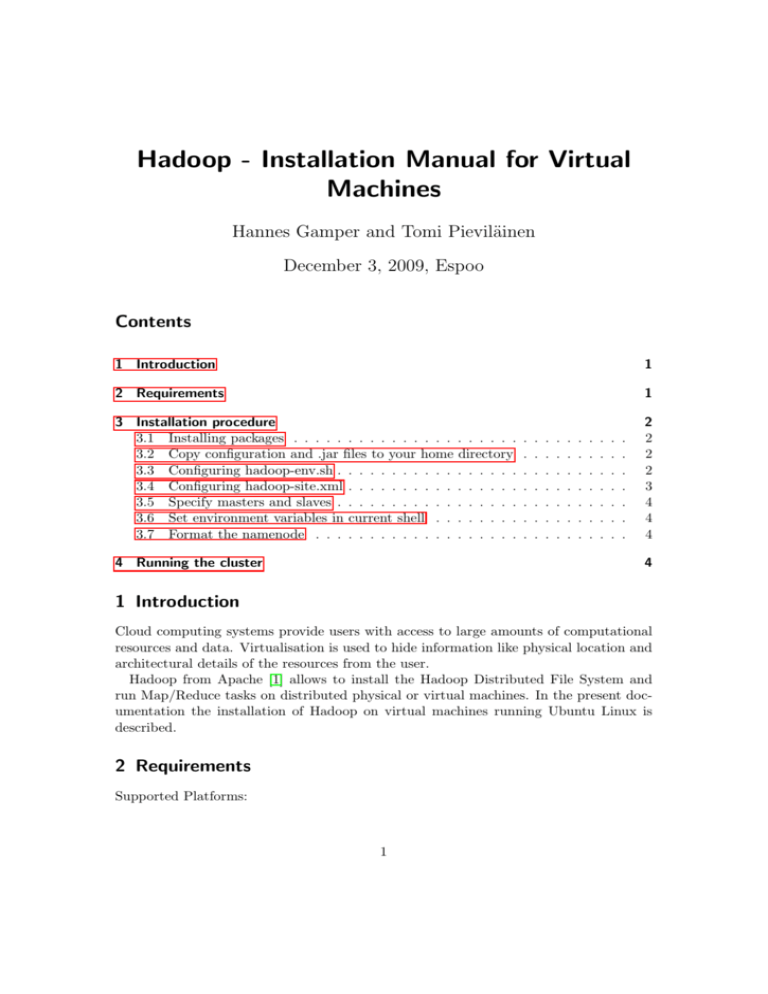

1 Introduction

Cloud computing systems provide users with access to large amounts of computational resources and data. Virtualisation is used to hide information such as the physical location and architectural details of the resources from the user.

Apache Hadoop [1] makes it possible to install the Hadoop Distributed File System (HDFS) and run Map/Reduce tasks on distributed physical or virtual machines. This document describes the installation of Hadoop on virtual machines running Ubuntu Linux.

2 Requirements

Supported Platforms:

• GNU/Linux for development and production

• Win32 for development only

Hardware requirements:

• In the tested setup, only 256 MB of RAM was available per virtual machine. This is NOT enough to run any MapReduce tasks.

Required software:

• Java 1.6.x. The Sun JDK is usually preferred, but because of security issues with the Sun JDK, OpenJDK should be installed instead (contrary to what Michael Noll’s tutorial [2] states).

• ssh must be installed, and sshd running

3 Installation procedure

3.1 Installing packages

Make sure SSH is set up (see Michael Noll’s tutorial [2]). If you have root access to the virtual machines, install the required packages. After successful installation, Hadoop has to be configured. The following steps do NOT require root access.
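Before configuring Hadoop, it can help to confirm that the prerequisites from section 2 are actually present. The following is a minimal sketch, not part of the original setup: the function names check_cmd and check_prereqs are hypothetical, and it assumes that pgrep (from the procps package) is available to look for a running sshd.

```shell
#!/bin/sh
# Report whether a command is available on PATH.
# Returns 0 if found, 1 otherwise.
check_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "ok: $1"
    else
        echo "MISSING: $1"
        return 1
    fi
}

# Check the tools named in the requirements: java, ssh and a running sshd.
check_prereqs() {
    rc=0
    for cmd in java ssh; do
        check_cmd "$cmd" || rc=1
    done
    # Assumption: pgrep is installed; -x matches the exact process name.
    if pgrep -x sshd >/dev/null 2>&1; then
        echo "ok: sshd is running"
    else
        echo "MISSING: sshd does not appear to be running"
        rc=1
    fi
    return $rc
}
```

Calling check_prereqs on each virtual machine prints one line per requirement and returns a non-zero status if anything is missing.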

3.2 Copy configuration and .jar files to your home directory

To change any configuration settings, the configuration files have to be copied to your home directory. Create the target directories first, because cp does not create them:

$ mkdir -p ~/hadoop/lib

$ cp -r /etc/hadoop/conf/ ~/hadoop/

Next, copy the .jar files:

$ cp -r /usr/lib/hadoop/*.jar /usr/lib/hadoop/lib/* ~/hadoop/lib/

3.3 Configuring hadoop-env.sh

The first configuration step is to set some environment variables in ~/hadoop/conf/hadoop-env.sh. The settings used in the tested setup are given below; adjust HADOOP_HOME to your own home directory:

# remote nodes.

export HADOOP_HOME=/home/tpievila/hadoop

# The java implementation to use. Required.

export JAVA_HOME=/usr/lib/jvm/java-6-openjdk

# Extra Java CLASSPATH elements. Optional.

export HADOOP_CLASSPATH=/usr/lib/hadoop/lib

# The maximum amount of heap to use, in MB. Default is 1000.

export HADOOP_HEAPSIZE=200

# Extra Java runtime options. Empty by default.

# export HADOOP_OPTS=-server

export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true

# Command specific options appended to HADOOP_OPTS when specified

export HADOOP_NAMENODE_OPTS="-Dcom.sun.management.jmxremote $HADOOP_NAMENODE_OPTS"

export HADOOP_SECONDARYNAMENODE_OPTS="-Dcom.sun.management.jmxremote $HADOOP_SECONDARYNAMENODE_OPTS"

export HADOOP_DATANODE_OPTS="-Dcom.sun.management.jmxremote $HADOOP_DATANODE_OPTS"

export HADOOP_BALANCER_OPTS="-Dcom.sun.management.jmxremote $HADOOP_BALANCER_OPTS"

export HADOOP_JOBTRACKER_OPTS="-Dcom.sun.management.jmxremote $HADOOP_JOBTRACKER_OPTS"

# Where log files are stored. $HADOOP_HOME/logs by default.

export HADOOP_LOG_DIR=${HADOOP_HOME}/logs

export HADOOP_CONF_DIR=${HADOOP_HOME}/conf

# File naming remote slave hosts. $HADOOP_HOME/conf/slaves by default.

export HADOOP_SLAVES=${HADOOP_HOME}/conf/slaves

3.4 Configuring hadoop-site.xml

The example hadoop-site.xml file from Michael Noll’s tutorial [2] did not work properly. Change the ~/hadoop/conf/hadoop-site.xml file according to your installation settings (e.g. change cloud1.tml.hut.fi to the IP address of the master node you are using):

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>tmp/hadoop-${user.name}</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://cloud1.tml.hut.fi:54310</value>

<description>Name of the default file system</description>

</property>

<property>

<name>mapred.job.tracker</name>

<value>cloud1.tml.hut.fi:54311</value>

<description>Host and port of MapReduce job tracker</description>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

<description>Default block replication.</description>

</property>

<property>

<name>dfs.name.dir</name>

<value>dfs-name-dir</value>

</property>

<property>

<name>mapred.local.dir</name>

<value>mapred-local-dir</value>

</property>

</configuration>
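Replacing the master host name by hand in two places is easy to get wrong. As a minimal sketch, assuming the file still contains the example name cloud1.tml.hut.fi, the substitution could be scripted with sed. The function name set_master_host is hypothetical, and the new host must not contain a slash, since the slash is used as the sed delimiter.

```shell
#!/bin/sh
# Replace the example master host name in a hadoop-site.xml file.
# Usage: set_master_host FILE NEW_HOST
set_master_host() {
    file=$1
    new_host=$2
    # The dots are escaped so that they match literally.
    # Writing to a temporary file avoids relying on a non-portable "sed -i".
    sed "s/cloud1\.tml\.hut\.fi/$new_host/g" "$file" > "$file.tmp" &&
        mv "$file.tmp" "$file"
}
```

For example, set_master_host ~/hadoop/conf/hadoop-site.xml 10.0.0.1 would update both fs.default.name and mapred.job.tracker; the address 10.0.0.1 is only a placeholder.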

3.5 Specify masters and slaves

On the master node, you need to specify the master in the file ~/hadoop/conf/masters and the slaves in ~/hadoop/conf/slaves. For example, assuming you have one master (cloud1) and eight slaves (cloud1 to cloud8, so the master also acts as a slave), the files would look like this:

• content of ~/hadoop/conf/masters

cloud1

• content of ~/hadoop/conf/slaves

cloud1

cloud2

cloud3

cloud4

cloud5

cloud6

cloud7

cloud8
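For a larger number of nodes, the two files can be generated instead of typed. The following sketch assumes the naming scheme of the example above (cloud1 is the master, and the slaves are numbered consecutively from cloud1); the function name write_cluster_files is hypothetical.

```shell
#!/bin/sh
# Write the masters and slaves files for one master and N slaves.
# Usage: write_cluster_files CONF_DIR SLAVE_COUNT
write_cluster_files() {
    conf_dir=$1
    count=$2
    # The master is always cloud1 in this naming scheme.
    echo "cloud1" > "$conf_dir/masters"
    # Start with an empty slaves file, then append one host name per line.
    : > "$conf_dir/slaves"
    i=1
    while [ "$i" -le "$count" ]; do
        echo "cloud$i" >> "$conf_dir/slaves"
        i=$((i + 1))
    done
}
```

Running write_cluster_files ~/hadoop/conf 8 on the master node reproduces the two files listed above.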

3.6 Set environment variables in current shell

To set the environment variables defined in ~/hadoop/conf/hadoop-env.sh, execute the following command (note the DOT in front of the filename):

$ . ~/hadoop/conf/hadoop-env.sh

This command has to be executed in every new shell before running Hadoop, in order to set the environment variables of the shell invoking Hadoop.
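Because a forgotten source step is easy to miss, a small guard can confirm that the variables are set in the current shell. This is a sketch only: the function name require_vars is hypothetical, and the variable names passed to it are taken from the hadoop-env.sh listing in section 3.3.

```shell
#!/bin/sh
# Return 0 if every named variable is set to a non-empty value.
# Prints one line for each variable that is not set.
# Usage: require_vars NAME...
require_vars() {
    missing=0
    for name in "$@"; do
        # Indirect lookup: read the value of the variable called $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "not set: $name"
            missing=1
        fi
    done
    return $missing
}
```

For example, require_vars HADOOP_HOME JAVA_HOME HADOOP_CONF_DIR returns a non-zero status in a shell where hadoop-env.sh has not been sourced yet.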

3.7 Format the namenode

To initialise the Hadoop Distributed File System, format the namenode by executing the following command:

$ hadoop namenode -format

4 Running the cluster

Ensure the environment variables of the current shell are set (see section 3.6). To start Hadoop, execute:

$ /usr/lib/hadoop/bin/start-all.sh

This starts Hadoop on all nodes. You can now execute MapReduce tasks on the cloud.
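One way to check that the daemons actually came up is to inspect the Java process list on a node. The sketch below assumes that the JDK tool jps is on the PATH and that the node is both master and slave, as cloud1 is in the example of section 3.5; the function name missing_daemons is hypothetical.

```shell
#!/bin/sh
# Daemons expected on a node that acts as both master and slave.
EXPECTED_DAEMONS="NameNode SecondaryNameNode JobTracker DataNode TaskTracker"

# Read jps-style output on stdin and print every expected daemon
# that does not appear in it (one name per line).
missing_daemons() {
    listing=$(cat)
    for d in $EXPECTED_DAEMONS; do
        # -w matches whole words only, so "NameNode" does not
        # match inside "SecondaryNameNode".
        echo "$listing" | grep -qw "$d" || echo "$d"
    done
}
```

Running jps | missing_daemons on the master prints nothing when all five daemons are running. On a pure slave node, only DataNode and TaskTracker are expected, so the other names would be listed there.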

References

[1] Apache. Welcome to Apache Hadoop! http://hadoop.apache.org/, 2009.

[2] Michael G. Noll. Running Hadoop on Ubuntu Linux (single-node cluster). http://www.michael-noll.com/wiki/Running_Hadoop_On_Ubuntu_Linux_(Single-Node_Cluster), 2009.
