Robust Virtual Implementation under Common Strong Belief in Rationality∗

Christoph Müller†

Carnegie Mellon University

October 18, 2013

Abstract

Bergemann and Morris (2009b) show that static mechanisms cannot robustly virtually

implement any non-constant social choice function if preferences are sufficiently interdependent. Without any knowledge of how agents revise their beliefs, this impossibility

result extends to dynamic mechanisms. In contrast, we show that if the agents revise

their beliefs according to the forward induction logic embedded in strong rationalizability,

admitting dynamic mechanisms leads to considerable gains. We show that all ex-post incentive compatible social choice functions are robustly virtually implementable in private

consumption environments satisfying a weak sufficient condition, including in all auction

environments with generic valuation functions, regardless of the level of preference interdependence. This result derives from the key insight that in such environments, in

any belief-complete type space under common strong belief in rationality (Battigalli and

Siniscalchi, 2002), dynamic mechanisms can distinguish all payoff type profiles by their

strategic choices. Notably, dynamic mechanisms can robustly virtually implement the

efficient allocation of an object even if static mechanisms cannot.

Keywords: Strategic distinguishability, common strong belief in rationality, extensive form

rationalizability, strong rationalizability, robust implementation, virtual implementation.

∗ I thank my advisor Kim-Sau Chung for his dedication and encouragement and for his invaluable guidance

throughout this project, and David Rahman, Itai Sher and Jan Werner for their continuous support. I also

thank an anonymous associate editor and two referees for their excellent comments and suggestions, Pierpaolo Battigalli, Dirk Bergemann, Narayana Kocherlakota, Eric Maskin, Konrad Mierendorff, Stephen Morris,

Guillermo Ordoñez, Alessandro Pavan, Andrés Perea and Christopher Phelan for helpful discussions and suggestions, and the participants of the Workshop on Information and Dynamic Mechanism Design (HIM, Bonn),

the SED Annual Meetings (Istanbul), the ESEM (Barcelona), the 11th SAET Conference (Ancao) and seminar

audiences at ANU, Bocconi, Bonn, CMU, Maastricht, Minnesota, Oxford, Penn State, Pitt, Royal Holloway,

Sabanci and Western Ontario. Financial support through a Doctoral Dissertation Fellowship of the Graduate

School of the University of Minnesota is gratefully acknowledged. All errors are my own.

† Contact: cmueller@cmu.edu

1 Introduction

Through their stringent necessary conditions for implementation, many results from a growing

literature on robust implementation reveal an unwelcome trade-off for mechanism designers:

Either choose one of the comparatively few social choice functions that are robustly implementable, or give up robustness. The former choice is severely limiting in many environments

and can even confine the designer to choose among constant social choice functions. The

latter choice entails reverting to non-robust mechanisms which depend on fine details about

the agents’ assumed beliefs and higher order beliefs about private information. Results by

Bergemann and Morris (2009b) (henceforth BM) establish this trade-off for robust virtual implementation by static mechanisms (normal game forms). In this paper, we show that using

dynamic mechanisms (roughly, extensive game forms) and adopting a natural generalization

of BM’s notion of robustness allows designers to escape this trade-off in important cases by

enlarging the set of robustly virtually implementable social choice functions.

Notion of Robustness. Assumptions about the agents’ beliefs and higher order beliefs

about payoff types are usually described by a type space, often implicitly via the assumption

of a common prior over a payoff type space. Motivated by the desire to avoid such assumptions,

BM define robust virtual implementation (rv-implementation) as (full) rationalizable virtual

implementation. This is justified by the well-known equality between the union of all Bayesian

Nash equilibrium strategies across all type spaces on the one hand and the set of rationalizable strategies on the other hand (Brandenburger and Dekel, 1987; Battigalli and Siniscalchi,

2003). If a social choice function is rationalizably implementable, it is (fully) implementable

in Bayesian Nash equilibrium for all type spaces, freeing the designer from the task of guessing

the “correct” type space.1

Instead of anchoring the concept of robust implementation in an equilibrium concept, a

second justification for BM’s definition formulates the notion of implementation directly in

terms of explicit assumptions about the agents’ strategic reasoning. This justification derives

from the characterization of rationalizability by the epistemic condition of rationality and

common belief in rationality (RCBR). Just like the equilibrium-based approach, implementation

with respect to RCBR frees the designer from guessing the correct type space, as for any type

space, any strategy that is consistent with “RCBR in the type space” is rationalizable.2 And

1 We are interested in robustness with respect to type spaces describing beliefs about payoff types. Epistemic

results are often stated in terms of “epistemic” type spaces describing interactive beliefs about payoff types and

strategies (see e.g. Battigalli and Siniscalchi, 1999). In what follows, we do not distinguish between these two

notions; rather, it is understood that we are only interested in “epistemic” type spaces that correspond to type

spaces about (initial) beliefs about payoff types and that we tacitly rule out all other “epistemic” type spaces.

2 BM adopt a special case of ∆-rationalizability (Battigalli, 1999, 2003; Battigalli and Siniscalchi, 2003)

called belief-free rationalizability. A strategy is consistent with RCBR precisely if it is belief-free rationalizable

since RCBR itself does not impose any restrictions on belief hierarchies about payoff types,

implementation with respect to RCBR can justly be called robust.

The approach of examining implementation with respect to an epistemic condition lends

itself to generalization to dynamic mechanisms.3 Generalizing from above, it is natural to

assume that agents are (sequentially) rational and that there is common belief in (sequential)

rationality at the beginning of the mechanism. But with dynamic mechanisms, one also

needs to take a stand on how agents revise their initial beliefs while playing mechanisms,

a decision that did not arise with static mechanisms. We assume that agents revise their

beliefs via Bayesian updating whenever possible. This still leaves open how an agent revises

his beliefs at information sets at which Bayesian updating cannot be applied because they

surprise him, that is, at information sets that should have been reached with probability zero

according to the agent’s earlier beliefs. One can make no assumption about this belief revision,

which corresponds to adopting the epistemic assumption of rationality and common initial

belief in rationality (RCIBR). Alternatively, in the spirit of the logic of backward induction,

one can assume rationality and common belief in future rationality (RCBFR) (Penta, 2013;

Perea, 2013). However, in Müller (2012) we prove that under RCIBR, dynamic mechanisms

cannot rv-implement any more social choice functions than static mechanisms. A similar

result can be proved for RCBFR. Therefore, under these assumptions, designers interested in

rv-implementation gain nothing by admitting dynamic mechanisms.

In this paper, we assume that the agents revise their beliefs according to the epistemic

condition of rationality and common strong belief in rationality (RCSBR) (Battigalli and

Siniscalchi, 2002), where we restrict attention to belief-complete type spaces. An agent strongly

believes in an event if he initially believes in the event, and continues to believe in the event “as

long as possible.” For example, if i strongly believes in j’s rationality then i believes that j is

rational at all information sets, including those that he did not expect to occur, save for those

that can have resulted only from irrational play of j. By revising their beliefs according to

RCSBR, agents engage in forward induction reasoning. We show that in contrast to the results

for RCIBR and RCBFR, this leads to considerable gains from admitting dynamic mechanisms.

(see Battigalli, Di Tillio, Grillo, and Penta, 2011, and references therein). Moreover, a strategy is consistent

with the restrictions on belief hierarchies about payoff types expressed by a given type space and RCBR if

and only if it is interim correlated rationalizable for that type space (Dekel, Fudenberg, and Morris, 2007;

Battigalli, Di Tillio, Grillo, and Penta, 2011). Any strategy that is interim correlated rationalizable for some

type space is also belief-free rationalizable.

3 Another approach to robust implementation in dynamic mechanisms is to generalize the equilibrium based

justification of BM’s definition. Viable approaches emerge from the equivalence of the set of weak perfect

Bayesian equilibrium strategies to the set of weakly rationalizable strategies (Battigalli, 1999, 2003), and the

equivalence of the set of interim perfect equilibrium strategies to the set of backwards rationalizable strategies

(Penta, 2013). The resulting implementation concepts correspond to the notions of implementation under

RCIBR and RCBFR described below.

Belief-Completeness. A type space is belief-complete if it admits all belief systems. Restricting attention to belief-complete type spaces allows us to use a result by Battigalli and

Siniscalchi (2002) which shows that in such type spaces, RCSBR is characterized by strong

rationalizability 4 (Battigalli, 1999, 2003). Using their result, we circumvent the formalism of

type spaces. While we implicitly analyze virtual implementation under RCSBR that is robust

with respect to all belief-complete type spaces, we define rv-implementation directly as virtual

implementation under strong rationalizability.

Although without loss of generality in static mechanisms,5 assuming belief-completeness

may be with loss of generality in dynamic mechanisms under RCSBR. In general games,

the set of strategies consistent with RCSBR can be larger in a “small” type space than in a

belief-complete type space. This is a consequence of the non-monotonicity of the strong belief

operator (Battigalli and Siniscalchi, 2002; Battigalli and Friedenberg, 2012). Suppose player

1 moves first and chooses action a, and that a is rational only for his payoff type θ1 . Upon

observing a, in a belief-complete type space, a player 2 that strongly believes in 1’s rationality

infers that 1’s payoff type is θ1 . In a “small” type space, player 2 might simply never be

“allowed” to believe in θ1 . In this case, upon observing a, 2 concludes that 1 is irrational and

forms any new belief about 1’s payoff type, potentially leading to a larger set of strategies

consistent with RCSBR than in the belief-complete type space. Under RCSBR, a “small” type

space can thus affect how agents revise their beliefs.

Focusing on belief-complete type spaces can be interpreted as focusing on cases in which

the mechanism designer and the agents have symmetric information about which payoff type

profiles are conceivable. The notion of robustness corresponding to strong rationalizability

presupposes this symmetry. It is important to note that this notion does not, however, rule

out any beliefs that the agents might initially hold about payoff types (including those that

place probability zero on some of the opponents’ payoff types).

Robust Virtual Implementation and Strategic Distinguishability. BM show how to

rv-implement social choice functions in environments with little preference interdependence.

But BM also point out that if an environment exhibits sufficient preference interdependence,

it is impossible to find a static mechanism that rv-implements any non-constant social choice

function. In such environments, mechanism designers using static mechanisms face the stark

trade-off between robustness and implementability described in the introductory paragraph.

4 Strong rationalizability is a version of Pearce’s (1984) extensive-form rationalizability for incomplete information environments with correlated beliefs such as ours.

5 The “larger” a type space, the larger the number of possible belief hierarchies that it permits, the larger the

set of strategies that are consistent with RCBR and the type space, and the more difficult is implementation

with respect to the type space. Implementation with respect to the “large” belief-complete type spaces (such

as the universal type space) thus implies implementation with respect to all “small” type spaces.

BM show that this trade-off in fact transcends the implementation question. A mechanism

implements a social choice function if any play of the mechanism results in the outcome that

the social choice function prescribes for the “true” (but unknown) payoff type profile. Suppose

for a moment that a mechanism implements a social choice function that assigns a different

outcome to each payoff type profile. Then an observer of any play of the mechanism can

infer the “true” payoff type profile from the realized outcome. In other words, it is possible to

tell apart any two payoff type profiles by their strategic choices, or to strategically distinguish

(BM) all payoff type profiles. More generally, if the social choice function can assign the same

outcome to several payoff type profiles, it is necessary (but not sufficient) for implementation

that there exists a mechanism that strategically distinguishes enough payoff type profiles.

BM show that if preferences are sufficiently interdependent it is impossible to find a static

mechanism that strategically distinguishes at least some payoff types of some agent.

Strategic distinguishability captures how much of the payoff-relevant private information

of a group of agents can be robustly revealed, and can be viewed as a multi-person analogue

to the single-person revealed preference theory. We generalize strategic distinguishability to

dynamic mechanisms and show that under our epistemic assumptions there are substantial

gains from considering dynamic mechanisms. We first illustrate this in the context of auction environments or, more precisely, environments with quasilinear preferences and private

consumption that describe the assignment of an object to one of finitely many agents (QPCA

environments). In contrast to the result for static mechanisms, we show that all payoff types of

all agents can be strategically distinguished by dynamic mechanisms in QPCA environments

with generic valuation functions, and therefore (essentially) regardless of the degree of preference interdependence (Proposition 4). We thus show how to successfully harness the forward

induction logic embedded in strong rationalizability to induce agents to robustly reveal their

private payoff-relevant information.

We then use this result to characterize rv-implementation. BM prove that ex-post incentive compatibility (epIC) and robust measurability are necessary and, under an economic

assumption, sufficient for rv-implementation in static mechanisms. A social choice function is

robustly measurable if it assigns the same outcome to any payoff types that are strategically

indistinguishable by static mechanisms. We show that if we admit dynamic mechanisms and

appropriately generalize robust measurability, analogous necessary (Proposition 1) and, with

a minor qualification, analogous sufficient conditions (Proposition 3) emerge. Consequently,

the fact that dynamic mechanisms can strategically distinguish more payoff types immediately implies that they can also rv-implement more social choice functions. In particular, in

QPCA environments, non-constant epIC social choice functions can be rv-implemented by

static mechanisms only if the agents’ preferences exhibit sufficiently little interdependence, but can be rv-implemented by dynamic mechanisms (essentially) regardless of the degree of preference interdependence.

Obtaining general results when using strong rationalizability can be challenging due to

the non-monotonicity of the strong belief operator. Nonetheless, we show that strategic distinguishability is well-behaved in an important sense in general environments (Proposition

2). Moreover, we generalize the results described in the previous two paragraphs to private

consumption environments (Corollary 2). In all of our analysis, we rule out badly behaved

mechanisms by restricting attention to finite dynamic mechanisms, and focus on finite payoff

type spaces.6

QPCA Environments. Example 1.1 previews some results in the context of a QPCA

environment with specific valuation functions. The example highlights that under RCSBR,

dynamic mechanisms can robustly virtually allocate a single object in an efficient manner in

many cases in which the degree of preference interdependence prevents static mechanisms from
achieving the same. We tacitly assume belief-completeness.

Example 1.1 (compare BM, Section 3) An object is to be allocated among $I < \infty$ agents. Each agent $i$ has a finite payoff type space $\Theta_i$, $\{0, 1\} \subseteq \Theta_i \subseteq [0, 1]$, and receives utility $v_i(\theta_i, \theta_{-i}) q_i + t_i$ if the payoff type profile is $(\theta_i, \theta_{-i})$, where $q_i$ is the probability that $i$ will receive the good, $t_i$ a monetary transfer and $v_i(\theta_i, \theta_{-i}) = \theta_i + \gamma \sum_{j \neq i} \theta_j$ the value of the object to $i$. The parameter $\gamma \geq 0$ measures the degree of preference interdependence in the environment. If $\gamma < \frac{1}{I-1}$, static mechanisms can strategically distinguish all payoff type profiles (see BM, Section 3). But if $\gamma \geq \frac{1}{I-1}$, neither static mechanisms nor dynamic mechanisms under RCIBR can strategically distinguish any payoff type profiles. In contrast, Proposition 4 implies that for all $\gamma < \frac{1}{I-1}$ and for almost all $\gamma \geq \frac{1}{I-1}$, all payoff type profiles are strategically distinguishable by dynamic mechanisms if there is RCSBR.

If $\gamma \geq \frac{1}{I-1}$, only constant social choice functions are rv-implementable by static mechanisms or by dynamic mechanisms under RCIBR. This is because only constant social choice functions are robustly measurable (BM): only constant social choice functions assign the same outcome to payoff type profiles that are strategically indistinguishable by static mechanisms, or, equivalently, strategically indistinguishable by dynamic mechanisms under RCIBR. In contrast, under RCSBR, all epIC social choice functions can be rv-implemented in dynamic mechanisms for almost all $\gamma$ by Proposition 3. This includes in particular the efficient allocation of the object (a non-constant social choice function), which is epIC if the single-crossing condition $\gamma < 1$ holds.7
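The epIC claim for the efficient allocation can be checked by brute force on a small grid. The following Python sketch is only an illustration under stated assumptions: it uses the valuation from Example 1.1 and the generalized VCG transfers described in footnote 7, it splits the object equally among tied agents (one efficient rule among several), and the function names, the grid {0, 0.5, 1} and the choice I = 3 are hypothetical and not taken from the paper.

```python
from itertools import product

TOL = 1e-12  # numerical tolerance for comparing floats


def valuation(i, theta, gamma):
    """v_i(theta) = theta_i + gamma * (sum of the other agents' payoff types)."""
    others = theta[:i] + theta[i + 1:]
    return theta[i] + gamma * sum(others)


def efficient_allocation(theta, gamma):
    """Give the object to the agents with the highest valuation, splitting ties equally
    (the equal split is an assumption made for this sketch)."""
    vals = [valuation(i, theta, gamma) for i in range(len(theta))]
    best = max(vals)
    winners = [i for i, v in enumerate(vals) if best - v <= TOL]
    return [1.0 / len(winners) if i in winners else 0.0 for i in range(len(theta))]


def transfer(i, theta, gamma, q):
    """Generalized VCG transfer: t_i = -q_i * (gamma * sum_{j != i} theta_j + max_{j != i} theta_j)."""
    others = theta[:i] + theta[i + 1:]
    return -q[i] * (gamma * sum(others) + max(others))


def is_ex_post_ic(type_space, n_agents, gamma):
    """True if no payoff type gains from misreporting at any payoff type profile."""
    for theta in product(type_space, repeat=n_agents):
        for i in range(n_agents):
            q_true = efficient_allocation(theta, gamma)
            u_true = valuation(i, theta, gamma) * q_true[i] + transfer(i, theta, gamma, q_true)
            for report in type_space:
                reported = theta[:i] + (report,) + theta[i + 1:]
                q_dev = efficient_allocation(reported, gamma)
                # The true valuation enters the payoff; the report only moves q and t.
                u_dev = valuation(i, theta, gamma) * q_dev[i] + transfer(i, reported, gamma, q_dev)
                if u_dev > u_true + TOL:
                    return False
    return True


print(is_ex_post_ic((0.0, 0.5, 1.0), 3, 0.5))  # single crossing holds (gamma < 1)
print(is_ex_post_ic((0.0, 0.5, 1.0), 3, 1.5))  # single crossing fails (gamma > 1)
```

On this grid the check passes for γ = 0.5 and fails for γ = 1.5, in line with the single-crossing condition; it says nothing about strategic distinguishability, which is a separate requirement.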

6 Finiteness of the payoff type spaces is a standard assumption in the virtual implementation literature,

compare BM, Abreu and Matsushima (1992a,b), Artemov, Kunimoto, and Serrano (2013). BM provide an

example of strategic distinguishability with continuous payoff type spaces.

7 More precisely, an allocation rule is a profile $(q_i)$ of functions $q_i : \Theta = \prod_i \Theta_i \to [0, 1]$ such that $\sum_{i=1}^{I} q_i(\theta) =$

1.1 Detailed Preview of Results

1.1.1 Strategic Distinguishability

Two payoff types of an agent are strategically indistinguishable by a static mechanism if they

have a rationalizable strategy in common. If the common rationalizable strategy is played, the

mechanism designer is unable to draw an inference about the agent’s payoff type. Since the

designer can observe individual strategies, strategic distinguishability can be defined agent by

agent. In a dynamic mechanism the designer observes terminal histories instead of strategies,

and whether or not the designer can infer agent i’s payoff type may depend on which strategies the agents −i follow, and thus indirectly on −i’s payoff types. In particular, if θ−i and θ′−i are two profiles of −i’s payoff types then it is possible that two of i’s payoff types lead to the same terminal history for some strongly rationalizable strategy profile of θ′−i, but to different terminal histories for all strongly rationalizable strategy profiles of θ−i (see Example 4.1).

This is why we will sometimes need to talk about the strategic distinguishability of payoff

type profiles (θi , θ−i ) rather than payoff types θi .

Generalizing BM’s notion, we call two payoff type profiles strategically indistinguishable if

in any mechanism some terminal history is reached by strongly rationalizable strategy profiles

of both payoff type profiles (and strategically distinguishable otherwise). If such a terminal

history occurs, it is impossible to tell which (if any) of the two payoff type profiles has played the

mechanism. Analogously, we call two payoff types of an agent strategically indistinguishable

if in any mechanism some terminal history is reached by strongly rationalizable strategies of

both payoff types and if in addition, this terminal history is admitted by strongly rationalizable

strategies of some payoff types of the other agents.8
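As a small illustration of the profile-level definition, the Python sketch below lists the pairs of payoff type profiles that a single, fixed mechanism fails to separate. It is a sketch under assumptions: the sets of reachable terminal histories are taken as given inputs (computing them requires solving the mechanism for strong rationalizability), the labels and data are hypothetical, and the definition in the text additionally quantifies over all mechanisms, which this check does not do.

```python
from itertools import combinations


def not_separated(reachable):
    """Pairs of payoff type profiles that share a reachable terminal history.

    `reachable` maps each payoff type profile to the set of terminal histories
    reached by its strongly rationalizable strategy profiles in ONE mechanism.
    """
    return {
        frozenset((a, b))
        for a, b in combinations(reachable, 2)
        if reachable[a] & reachable[b]  # non-empty intersection: cannot tell a from b
    }


# Hypothetical data for a mechanism with terminal histories h1, h2, h3.
example = {
    ("low", "low"): {"h1"},
    ("low", "high"): {"h1", "h2"},
    ("high", "high"): {"h3"},
}
print(not_separated(example))
```

Two profiles are strategically indistinguishable in the sense above only if they appear in this set for every mechanism; a single mechanism in which the pair is absent is enough to distinguish them.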

We show in Proposition 2 that strategic distinguishability is well-behaved in the following

sense. We never need to worry about being able to strategically distinguish some payoff type

profiles by a first, but some other payoff type profiles only by a second, different mechanism.

There always exists a maximally revealing mechanism that strategically distinguishes all payoff

type profiles that can be strategically distinguished.

Next, we address in Section 5 which payoff type profiles maximally revealing mechanisms

actually do strategically distinguish. To begin, we focus on QPCA environments. Lotteries

over allocations are available. Preferences are interdependent, meaning that an agent’s valuation for the object can depend on his own and on the other agents’ payoff types. Proposition

4 shows that under a weak sufficient condition, there is a fully revealing mechanism, that is,

1 for all $\theta \in \Theta$. It is efficient if for each payoff type profile $\theta$ and each agent $i$, $q_i(\theta) > 0$ implies $v_i(\theta) = \max_{j=1,\dots,I} v_j(\theta)$. Any efficient allocation rule is epIC for $\gamma < 1$ if combined with generalized VCG transfers, that is, if the transfer to $i$ is $t_i(\theta) = -q_i(\theta)\bigl(\gamma \sum_{j \neq i} \theta_j + \max_{j \neq i} \theta_j\bigr)$ (Dasgupta and Maskin, 2000).

8 If in these definitions we replace “strongly rationalizable” with “weakly rationalizable,” we obtain the notion

of strategic indistinguishability we use in Müller (2012).

a mechanism which strategically distinguishes all payoff type profiles. This mechanism has

a simple structure: One after another, agents announce a possible ex-post valuation. Each

agent only moves once.

The sufficient condition of Proposition 4 is that for every agent, the sets of ex-post valuations are disjoint for any two distinct payoff types. In QPCA environments, an ex-post

valuation of a payoff type is any valuation the payoff type can have if he holds a degenerate

belief about the other agents’ payoff types. The sufficient condition holds for almost all valuation function profiles, and merely requires that the set of ex-post valuations be disjoint across

an agent’s payoff types — distinct payoff types can have the same valuation for non-degenerate

beliefs. On top of that, the sufficient condition is not necessary. While there is a measure zero

set of valuation function profiles for which no fully revealing mechanism exists — if a valuation

is an ex-post valuation of two payoff types for the same degenerate belief about the others’

payoff types, then the two payoff types cannot be strategically distinguished (Proposition 5)

— a fully revealing mechanism can exist even if the sets of ex-post valuations of all payoff

types of all agents intersect (Example 5.1).
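The disjointness condition is easy to check mechanically for the valuation functions of Example 1.1. The Python sketch below is an illustration under assumptions: it takes v_i = θ_i + γ Σ_{j≠i} θ_j from Example 1.1, exploits the symmetry of that example to check a single agent, and uses hypothetical function names and a hypothetical binary type space {0, 1} with three agents. Exact fractions avoid spurious floating-point ties.

```python
from fractions import Fraction
from itertools import product


def ex_post_valuations(theta_i, type_space, n_agents, gamma):
    """All valuations of payoff type theta_i under degenerate beliefs about the others."""
    return {
        theta_i + gamma * sum(others)
        for others in product(type_space, repeat=n_agents - 1)
    }


def sets_are_disjoint(type_space, n_agents, gamma):
    """True if the ex-post valuation sets of any two distinct payoff types are disjoint."""
    sets = {t: ex_post_valuations(t, type_space, n_agents, gamma) for t in type_space}
    return all(
        sets[s].isdisjoint(sets[t])
        for s in type_space
        for t in type_space
        if s != t
    )


types = (Fraction(0), Fraction(1))
print(sets_are_disjoint(types, 3, Fraction(7, 10)))  # generic gamma
print(sets_are_disjoint(types, 3, Fraction(1, 2)))   # 0 + 2*gamma equals 1 + 0*gamma
```

With these inputs the condition fails only at isolated values of γ (here γ = 1/2 and γ = 1), which is the sense in which it holds for almost all valuation function profiles. A failure of the check does not by itself rule out a fully revealing mechanism, since the condition is sufficient but not necessary.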

Finally, we generalize the sufficient condition for the existence of a fully revealing mechanism to private consumption environments that satisfy the economic property (Corollary 2).

It is interesting to note that the mechanism from Corollary 2 would also be fully revealing if

we were to use iterated admissibility instead of strong rationalizability as the solution concept.

1.1.2 Robust Virtual Implementation

We say that a social choice function is robustly implementable if it is fully implementable

under strong rationalizability. A social choice function is robustly virtually implementable

(rv-implementable) roughly if arbitrarily close-by social choice functions are robustly implementable.9

Section 4 examines rv-implementation in general environments in which outcomes are lotteries over a finite set of pure outcomes and agents have expected utility preferences. The necessary (Proposition 1) and sufficient (Proposition 3) conditions reveal a simple relation between

rv-implementability and strategic distinguishability that originates in Abreu and Matsushima’s (1992b) characterization of (non-robust) virtual implementation in static mechanisms.

Our sufficiency proof builds on the proofs by Abreu and Matsushima (1992a,b).

There are two necessary conditions. First, only social choice functions which assign the

same outcome to strategically indistinguishable payoff type profiles can be rv-implementable.

We call such social choice functions dynamically robustly measurable, or briefly dr-measurable.

9 Replacing “strongly rationalizable”/“strong rationalizability” with “weakly rationalizable”/“weak rationalizability” in the previous sentences yields the implementation concept we study in Müller (2012), and replacing

“dynamic mechanism” with “static mechanism” yields the implementation concept studied by BM.

A second necessary condition is ex-post incentive compatibility (epIC), which requires that in

the direct mechanism, truth-telling is a best response for every payoff type of an agent that expects his opponents to tell the truth, regardless of his belief about his opponents’ payoff types.

Ex-post incentive compatibility has been shown to be necessary for robust implementation in

static mechanisms by Bergemann and Morris (2005), and is a strong incentive compatibility

condition.10 Conversely, under an economic assumption, any epIC social choice function that

is strongly dr-measurable is rv-implementable. Strong dr-measurability is a somewhat stronger

version of dr-measurability and requires that for any agent, the social choice function treats

any two strategically indistinguishable payoff types the same.
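The dr-measurability requirement can be phrased as a one-line check once the strategically indistinguishable pairs are known. The Python sketch below is a hypothetical illustration: the function name, the labels of the payoff type profiles and the outcomes are made up, and the list of indistinguishable pairs is assumed to be given rather than derived.

```python
def is_dr_measurable(scf, indistinguishable_pairs):
    """True if the social choice function assigns the same outcome to both
    members of every strategically indistinguishable pair of profiles."""
    return all(scf[a] == scf[b] for a, b in indistinguishable_pairs)


# Hypothetical example: profiles A and B cannot be told apart, C can.
pairs = [("A", "B")]
print(is_dr_measurable({"A": "x", "B": "x", "C": "y"}, pairs))
print(is_dr_measurable({"A": "x", "B": "y", "C": "y"}, pairs))
```

When no two distinct profiles are strategically indistinguishable, the list of pairs is empty and every social choice function passes the check, which is why epIC becomes the only binding condition in that case.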

From our results on strategic distinguishability (Proposition 4 and Corollary 2) we know

that in private consumption environments satisfying the economic property, dr-measurability

and strong dr-measurability are very weak conditions. Indeed, under a sufficient condition

which is for example satisfied by any QPCA environment with generic valuation functions,

all social choice functions are both dr-measurable and strongly dr-measurable, and dynamic

mechanisms can rv-implement a social choice function if and only if it is epIC (Corollaries 1

and 2).

1.2 Related Literature

Robust Virtual Implementation. Carrying out the Wilson doctrine (Wilson, 1987) in

the context of mechanism design, Bergemann and Morris (2005, 2009a,b, 2011), Chung and

Ely (2007) and others examine which social choice functions are implementable if the beliefs

about others’ private information are not common beliefs. Within this literature on robust

mechanism design, Artemov, Kunimoto, and Serrano (2013), BM and the present paper all

require full (and not just partial) implementation but slightly weaken the implementation

concept to virtual, that is, approximate implementation. Our analysis is less general than

that of BM and Artemov, Kunimoto, and Serrano (2013) inasmuch as we restrict attention

to private consumption environments, at least for the purpose of determining which payoff

type profiles are strategically distinguishable. At the same time, our analysis is more general

inasmuch as we admit dynamic mechanisms and not only static ones.

As discussed, BM impose no assumptions on the belief hierarchies over payoff type profiles.

This makes their mechanisms “totally” robust but permits virtual implementation only in

environments with little preference interdependence. Artemov, Kunimoto, and Serrano (2013)

present more permissive sufficient conditions for virtual implementation which do not impose

a limit on the preference interdependence. They achieve this by giving up “total” robustness

and adopting an “intermediately” robust approach. Specifically, they assume that a (finite)

10 On the strength of epIC, see e.g. Jehiel, Meyer-Ter-Vehn, Moldovanu, and Zame (2006), but also Bikhchandani (2006) and Dasgupta and Maskin (2000).

subset of first-order beliefs about payoff type profiles is common knowledge. In the present

paper we leave the initial belief hierarchies over payoff type profiles unrestricted and thus

adopt a generalization of BM’s “total” robustness approach to dynamic mechanisms. Despite

maintaining “total” robustness, we still offer sufficient conditions that do not impose a limit

on the preference interdependence.

Robust Dynamic Mechanisms. Bergemann and Morris (2007) point out the potential of

dynamic mechanisms to improve on static mechanisms in complete information environments

(in which bidders commonly know the payoff type profile). They present an ascending price

version of the generalized VCG mechanism and show that it robustly virtually allocates an

object in an efficient manner even if there is so much preference interdependence that a static

mechanism cannot. Bergemann and Morris (2007) focus on robustness solely in terms of

the uncertainty about others’ strategies. Both because they use backward induction as their

solution concept and because they assume complete information, inferences about others’

payoff types, while central to our analysis, are absent from theirs.

Penta (2013) explores robust implementation in incomplete information environments with

multiple stages, but under different epistemic assumptions than those used in the present

paper. He introduces backwards rationalizability, a solution concept that incorporates a belief

revision assumption as expressed by common belief in future rationality at each decision node.

In each stage of his model, an agent learns part of his payoff type and participates in a static

mechanism. As a special case that is similar to our set-up, an agent may learn everything

about his payoff type in the first stage. In this case, the agent subsequently participates in

a sequence of static mechanisms and so de facto in a dynamic mechanism with observable

actions. But due to Penta’s (2013) use of backwards rationalizability, in his model, dynamic

mechanisms cannot strategically distinguish more payoff type profiles than static mechanisms.

Implementation under RCSBR. Our paper is not the first to consider implementation

under RCSBR. One mechanism design problem for which a solution under RCSBR has already

been suggested is King Solomon’s dilemma (see e.g. Perry and Reny, 1999). The dilemma is

to give an object to the one of two agents who values it most, at zero cost. Who values

the object most is assumed to be common knowledge among the agents, an informational

assumption that sets the dilemma apart from the paradigm of “total” robustness. Battigalli

and Siniscalchi (2003, Section 3.2.2) show that a first-price auction with an entry fee and a

preceding opt-in stage solves a specific parametrization of the dilemma if the agents engage in

forward induction reasoning.

2 Example

Example 2.1 argues in a simple environment that static mechanisms and, equivalently, dynamic

mechanisms under RCIBR can strategically distinguish fewer payoff type profiles than dynamic

mechanisms under RCSBR. Again, we tacitly assume belief-completeness.

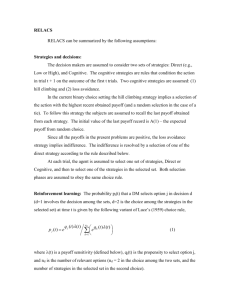

Example 2.1 There are two agents i ∈ {1, 2} with two conceivable payoff types each, θ̂i ∈ {θi , θi′ }, and four outcomes, w, x, y, z. The utility functions are given in Figure 1.

                       w   x   y   z
u1 (·, θ1 , θ2 )       5   1   0   2
u1 (·, θ1 , θ2′ )      1   1   0   0
u1 (·, θ1′ , θ2 )      1   0   2   0
u1 (·, θ1′ , θ2′ )     5   1   0   2

                       w   x   y   z
u2 (·, θ1 , θ2 )       1   0   3   2
u2 (·, θ1 , θ2′ )      0   1   3   2
u2 (·, θ1′ , θ2 )      0   1   3   2
u2 (·, θ1′ , θ2′ )     0   1   0   1

Figure 1: Utility functions

We only say that some information is revealed if it is revealed for all strategy profiles that are consistent

with the epistemic assumption under consideration. For this demanding notion of information

revelation, we claim that neither a) static mechanisms nor b) dynamic mechanisms under

RCIBR can reveal anything about the state of nature (θ̂1 , θ̂2 ), but that c) the dynamic

mechanism shown in Figure 2 fully reveals the state of nature if there is RCSBR.

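[Game tree: agent 1 announces ϑ1 or ϑ′1 ; agent 2 observes the announcement and then announces ϑ2 or ϑ′2 . Terminal histories and outcomes: (ϑ1 , ϑ2 ) → w, (ϑ1 , ϑ′2 ) → x, (ϑ′1 , ϑ2 ) → y, (ϑ′1 , ϑ′2 ) → z.]

Figure 2: Mechanism that strategically distinguishes all payoff type profiles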

a) To see that static mechanisms cannot reveal anything about the state of nature we show

that all payoff type profiles are strategically indistinguishable by static mechanisms (see BM,

Proposition 1). This is the case if in any static mechanism there is a strategy profile which

can be played by every payoff type profile. In accordance with the robust approach we assume

that a strategy profile can be played if it is consistent with RCBR, where a strategy is rational

for θ̂i if it is a best response to some, arbitrary belief about θ̂−i (and −i’s strategy).

Let S1 and S2 be finite strategy sets for agents 1 and 2, respectively, and Γ : S1 × S2 → {w, x, y, z} be a static mechanism. In what follows, s_i^k denotes a strategy of agent i constructed in round k. To show that some strategy profile can be played by all payoff type profiles, we first construct a strategy profile (s_1^1, s_2^1) which is rational for all payoff type profiles, or, equivalently, which survives one round of iterated elimination of never-best responses for all payoff type profiles. Let s_1^1 ∈ S1 be a best response for a payoff type θ1 who is certain that agent 2’s payoff type is θ2 and that agent 2 will play some arbitrarily chosen s_2^0 ∈ S2 . Since u1 (·, θ1 , θ2 ) = u1 (·, θ1′ , θ2′ ), s_1^1 must then also be a best response for a payoff type θ1′ who is certain that agent 2’s payoff type is θ2′ and that agent 2 will play s_2^0 ∈ S2 . Therefore, s_1^1 is rational for both payoff types of agent 1. Similarly, since u2 (·, θ1 , θ2′ ) = u2 (·, θ1′ , θ2 ) there is an s_2^1 ∈ S2 that is rational for both payoff types of agent 2.

Next, we construct a profile (s_1^2, s_2^2) which is consistent with rationality and mutual belief in rationality for all (θ̂1 , θ̂2 ), or, equivalently, survives two rounds of iterated elimination of never-best responses for all payoff type profiles. To that end, simply let s_1^2 be a best response for θ1 against the degenerate belief in (s_2^1, θ2 ). Then s_1^2 is also a best response for θ1′ , to the degenerate belief in (s_2^1, θ2′ ), and thus rational for both payoff types of agent 1. Moreover, if agent 1 is certain of (s_2^1, θ2 ) or of (s_2^1, θ2′ ), then agent 1 believes that agent 2 is rational. We can derive s_2^2 ∈ S2 analogously.

Finally, we can iterate this argument. The iterated elimination procedure stops in some round k < ∞. The corresponding strategy profile (s_1^k, s_2^k) is consistent with RCBR for every payoff type profile, or, equivalently, rationalizable for every payoff type profile. Since strong rationalizability equals rationalizability in any static mechanism, formally, (s_1^k, s_2^k) is a terminal history of Γ that is strongly rationalizably reached by all payoff type profiles. This does not change if we admit lotteries over {w, x, y, z} as outcomes of mechanisms.
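The construction in a) can be replayed numerically. The following Python sketch is an illustration under stated assumptions, not part of the formal argument: the utility tables are transcribed from Figure 1 (payoff types coded as 0 for unprimed and 1 for primed, outcomes indexed in the order w, x, y, z), the static mechanism is an arbitrary randomly drawn outcome matrix, and the names `best_replies` and `common_profile` are hypothetical. In every round the sketch asserts that the two payoff types of an agent, holding the paired degenerate beliefs used in the text, have the same set of best replies.

```python
import random

# Utility vectors over (w, x, y, z), keyed by (t1, t2); 0 = unprimed, 1 = primed.
U1 = {(0, 0): (5, 1, 0, 2), (0, 1): (1, 1, 0, 0), (1, 0): (1, 0, 2, 0), (1, 1): (5, 1, 0, 2)}
U2 = {(0, 0): (1, 0, 3, 2), (0, 1): (0, 1, 3, 2), (1, 0): (0, 1, 3, 2), (1, 1): (0, 1, 0, 1)}


def best_replies(u_row, options):
    """Positions in `options` (a list of outcome indices) that maximise u_row."""
    best = max(u_row[o] for o in options)
    return {k for k, o in enumerate(options) if u_row[o] == best}


def common_profile(mech, rounds=5):
    """mech[s1][s2] is an outcome index; returns (s1, s2) built as in part a)."""
    n1, n2 = len(mech), len(mech[0])
    s1, s2 = 0, 0  # arbitrary starting strategies
    for _ in range(rounds):
        col = [mech[r][s2] for r in range(n1)]  # outcomes agent 1 can induce
        row = [mech[s1][c] for c in range(n2)]  # outcomes agent 2 can induce
        br1 = best_replies(U1[(0, 0)], col)           # theta_1 certain of theta_2
        assert br1 == best_replies(U1[(1, 1)], col)   # theta_1' certain of theta_2'
        br2 = best_replies(U2[(0, 1)], row)           # theta_2' certain of theta_1
        assert br2 == best_replies(U2[(1, 0)], row)   # theta_2 certain of theta_1'
        s1, s2 = min(br1), min(br2)
    return s1, s2


random.seed(0)
mech = [[random.randrange(4) for _ in range(3)] for _ in range(4)]
profile = common_profile(mech)
```

The assertions never fire because the relevant rows of the utility tables coincide, which is exactly the property the argument in a) exploits.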

b) To see that dynamic mechanisms under RCIBR cannot reveal anything about the state of nature we show that all payoff type profiles are strategically indistinguishable by dynamic mechanisms if there is only RCIBR (see Müller, 2012). The argument is essentially as in a): Take any dynamic mechanism, and let s_1^1 be a sequential best response for a payoff type θ1 whose beliefs are as follows: initially, θ1 is certain that agent 2’s payoff type is θ2 and that agent 2 will play some arbitrarily chosen s_2^0. If surprised, θ1 continues to be certain that agent 2’s payoff type is θ2 and believes that agent 2 plays some arbitrarily chosen strategy that admits the current information set. Then, s_1^1 must also be a sequential best response for a payoff type θ1′ who at each information set is certain that agent 2’s payoff type is θ2′ and that agent 2 plays the strategy that θ1 believes in. Since there is also an s_2^1 that is a sequential best response for both θ2 and θ2′ , we can again iterate the argument to find a strategy profile (s_1^k, s_2^k) that is consistent with RCIBR for every payoff type profile.

c) To show that the dynamic mechanism presented in Figure 2 is fully revealing under

RCSBR we prove that it strategically distinguishes all payoff type profiles. To that end, we

iteratively delete the strategies which are not sequentially rational, not consistent with sequential rationality and mutual strong belief in sequential rationality and so on. This corresponds

to determining the strongly 1-rational, strongly 2-rational, ... strategies as formally defined

later in Definition 3. For ease of exposition, instead of saying that any strategy that prescribes

ϑ′2 at history (ϑ′1 ) is a never-best sequential response (or conditionally dominated) for θ2 we

say that action ϑ′2 is never rational (or conditionally dominated) for θ2 at (ϑ′1 ), and so on.11

• It is never rational for θ2 to play ϑ′2 if agent 1 announced ϑ′1 and

it is never rational for θ2′ to play ϑ2 if agent 1 announced ϑ1 .

[Figure: game tree of Γ with agent 2’s payoffs at the terminal histories]

Agent 2          u2 (θ1 , θ2 )   u2 (θ1′ , θ2 )   u2 (θ1 , θ2′ )   u2 (θ1′ , θ2′ )
(ϑ1 , ϑ2 )            1               0                0                0
(ϑ1 , ϑ′2 )           0               1                1                1
(ϑ′1 , ϑ2 )           3               3                3                0
(ϑ′1 , ϑ′2 )          2               2                2                1

In the figure above, we replaced the outcomes assigned to the terminal histories of Γ

with agent 2’s payoffs, with θ2 ’s payoffs filling the left and θ2′ ’s payoffs the right columns.

Conditional on reaching the history (ϑ′1 ) playing ϑ′2 is dominated for payoff type θ2 , as

independent of θ2 ’s belief about 1’s payoff type the action ϑ′2 leads to a payoff of 2 while

the action ϑ2 leads to a payoff of 3. Similarly, ϑ2 is dominated for θ2′ conditional on

reaching (ϑ1 ).

No strategies are eliminated for agent 1, so we move on to the next step.

• If agent 1 (strongly) believes in agent 2’s rationality, ϑ′1 is never rational for θ1 .

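[Figure: game tree of Γ with agent 1’s payoffs at the terminal histories; entries in brackets are grayed out]

Agent 1          u1 (θ1 , θ2 )   u1 (θ1′ , θ2 )   u1 (θ1 , θ2′ )   u1 (θ1′ , θ2′ )
(ϑ1 , ϑ2 )            5               1               [1]              [5]
(ϑ1 , ϑ′2 )           1               0                1                1
(ϑ′1 , ϑ2 )           0               2                0                0
(ϑ′1 , ϑ′2 )         [2]             [0]               0                2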

We now display agent 1’s payoffs, graying out payoffs to which agent 1 assigns probability

zero because he is certain that agent 2 does not follow any strategy which was deleted

in the previous step (any sequentially irrational strategy). If we ignore the grayed out

payoffs, then strategy ϑ′1 leads to a payoff of 0 for θ1 and is thus dominated by ϑ1 , which

leads to a payoff of at least 1.

11 For a formal definition of conditional dominance see Shimoji and Watson (1998) or the proof of Proposition 2 in Appendix B.

• If agent 2 strongly believes in agent 1’s rationality and 1’s (strong) belief in 2’s rationality,

and if agent 1 announced ϑ′1 , then ϑ2 is never rational for θ2′ .

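[Figure: game tree of Γ with agent 2’s payoffs at the terminal histories; entries in brackets are grayed out]

Agent 2          u2 (θ1 , θ2 )   u2 (θ1′ , θ2 )   u2 (θ1 , θ2′ )   u2 (θ1′ , θ2′ )
(ϑ1 , ϑ2 )            1               0                0                0
(ϑ1 , ϑ′2 )           0               1                1                1
(ϑ′1 , ϑ2 )          [3]              3               [3]               0
(ϑ′1 , ϑ′2 )         [2]              2               [2]               1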

We switch back to agent 2 and gray out payoffs that correspond to strategies of agent

1 that already were deleted. We see that at this point, independent of his initial belief

about θ̂1 , agent 2 concludes that 1’s payoff type is θ1′ if (ϑ′1 ) is reached. In essence,

RCSBR allows us to predict agent 2’s belief about agent 1’s payoff type at this history.

Therefore, ϑ2 is dominated for θ2′ conditional on reaching (ϑ′1 ). This is the first time

we crucially rely on the forward induction logic embedded in RCSBR. If nothing were

known about how agent 2 revises his beliefs, we could not exclude the case that θ2′ , once

surprised by the action ϑ′1 , believes that he faces payoff type θ1 (rationalizing ϑ2 at (ϑ′1 )).

• If agent 1 (strongly) believes in ..., ϑ1 is never rational for θ1′ .

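[Figure: game tree of Γ with agent 1’s payoffs at the terminal histories; entries in brackets are grayed out]

Agent 1          u1 (θ1 , θ2 )   u1 (θ1′ , θ2 )   u1 (θ1 , θ2′ )   u1 (θ1′ , θ2′ )
(ϑ1 , ϑ2 )            5               1               [1]              [5]
(ϑ1 , ϑ′2 )           1               0                1                1
(ϑ′1 , ϑ2 )           0               2               [0]              [0]
(ϑ′1 , ϑ′2 )         [2]             [0]               0                2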

Given 1’s beliefs at this stage, telling the truth and announcing ϑ′1 leads to a payoff of

2 for θ1′ and thus dominates ϑ1 , which leads to a payoff of at most 1.

• If agent 2 strongly believes in ..., and if agent 1 announced ϑ1 , then ϑ′2 is never rational

for θ2 .

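[Figure: game tree of Γ with agent 2’s payoffs at the terminal histories; entries in brackets are grayed out]

Agent 2          u2 (θ1 , θ2 )   u2 (θ1′ , θ2 )   u2 (θ1 , θ2′ )   u2 (θ1′ , θ2′ )
(ϑ1 , ϑ2 )            1              [0]               0               [0]
(ϑ1 , ϑ′2 )           0              [1]               1               [1]
(ϑ′1 , ϑ2 )          [3]              3               [3]               0
(ϑ′1 , ϑ′2 )         [2]              2               [2]               1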

Again, RCSBR allows us to predict agent 2’s belief about agent 1’s payoff type, this

time at the history (ϑ1 ). Agent 2 concludes at (ϑ1 ) that 1’s payoff type is θ1 . Given this

restriction on beliefs, ϑ′2 is dominated for θ2 conditional on reaching (ϑ1 ).

We see that for both payoff types of both agents only truth-telling survives the iterated elimination procedure — only truth-telling is strongly rationalizable. Therefore one can infer

(θ̂1 , θ̂2 ) from observing (ϑ̂1 , ϑ̂2 ), and Γ strategically distinguishes all payoff type profiles. Full

revelation is possible because, by engaging in forward induction, the agents “learn” each other’s

payoff types during the course of the mechanism, independently of their initial belief hierarchies about each other’s payoff types. In particular, by the time agent 2 moves, his payoff

types must have different (expected) preferences, as by then agent 2 already “learned” agent

1’s payoff type and has a fixed, degenerate belief about agent 1’s payoff type.
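The elimination steps of c) can be reproduced mechanically. The Python sketch below is a minimal illustration for the mechanism of Figure 2 only, under the following assumptions: utilities are transcribed from Figure 1, payoff types and announcements are coded as 0 (unprimed) and 1 (primed), and all function names are hypothetical. Because every decision node has exactly two actions, an action is a best reply to some belief if and only if it is a best reply to some degenerate belief, so the sketch only checks degenerate beliefs. Agent 2’s beliefs at a history follow the best-rationalization principle: he conditions on the most recent surviving set of agent 1’s strategy-type pairs that is consistent with the observed announcement.

```python
from itertools import product

# Utility vectors over (w, x, y, z), keyed by (t1, t2); 0 = unprimed, 1 = primed.
U1 = {(0, 0): (5, 1, 0, 2), (0, 1): (1, 1, 0, 0), (1, 0): (1, 0, 2, 0), (1, 1): (5, 1, 0, 2)}
U2 = {(0, 0): (1, 0, 3, 2), (0, 1): (0, 1, 3, 2), (1, 0): (0, 1, 3, 2), (1, 1): (0, 1, 0, 1)}
MSG = (0, 1)
STRAT2 = list(product(MSG, repeat=2))  # (reply to announcement 0, reply to announcement 1)


def outcome(m1, m2):
    """Index of the outcome in (w, x, y, z) after announcements m1 and m2."""
    return 2 * m1 + m2


def step_agent1(F2):
    """Pairs (m1, t1) that are a best reply to some surviving pair of agent 2."""
    keep = set()
    for m1, t1 in product(MSG, MSG):
        for s2, t2 in F2:
            u = U1[(t1, t2)]
            if u[outcome(m1, s2[m1])] >= u[outcome(1 - m1, s2[1 - m1])]:
                keep.add((m1, t1))
                break
    return keep


def step_agent2(F1_history):
    """Pairs (s2, t2) that are sequentially rational under best rationalization."""
    keep = set()
    for s2, t2 in product(STRAT2, MSG):
        rational = True
        for m1 in MSG:
            # Types of agent 1 in the latest surviving set that announces m1.
            types = next(
                ts
                for ts in ({t1 for a, t1 in F if a == m1} for F in reversed(F1_history))
                if ts
            )
            if not any(
                U2[(t1, t2)][outcome(m1, s2[m1])] >= U2[(t1, t2)][outcome(m1, 1 - s2[m1])]
                for t1 in types
            ):
                rational = False
        if rational:
            keep.add((s2, t2))
    return keep


def strongly_rationalizable():
    F1 = set(product(MSG, MSG))
    F2 = set(product(STRAT2, MSG))
    history1 = [F1]
    while True:
        new1, new2 = step_agent1(F2), step_agent2(history1)
        if new1 == F1 and new2 == F2:
            return F1, F2
        F1, F2 = new1, new2
        history1.append(F1)


F1, F2 = strongly_rationalizable()
```

In this sketch the surviving pairs are exactly the truth-telling ones: agent 1 announces his type, and each payoff type of agent 2 announces his own type after either announcement of agent 1.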

The difference in the revelation properties of static and dynamic mechanisms (under

RCSBR) in Example 2.1 translates into a difference in terms of implementation. Consider

the non-constant epIC social choice function f defined by f (θ1 , θ2 ) = w, f (θ1 , θ2′ ) = x,

f (θ1′ , θ2 ) = y and f (θ1′ , θ2′ ) = z. The mechanism of Figure 2 robustly implements f , but

no static mechanism can robustly, or even robustly virtually implement f (all payoff type profiles are strategically indistinguishable by static mechanisms, hence only constant social choice

functions are rv-implementable by static mechanisms). Proposition 3 will provide a dynamic

mechanism that rv-implements any epIC social choice function in Example 2.1 if lotteries over

{w, x, y, z} are admitted as outcomes.

3 Environment and Preliminaries

There is a finite set I = {1, . . . , I} of at least two agents. Each agent i ∈ I has a nonempty and

finite payoff type space Θi . We let Θ denote the set of payoff type profiles (θ1 , . . . , θI ). More

generally, if (Zi )i∈I is a family of sets Zi , we let Z denote the Cartesian product ∏i∈I Zi .12 It

is also understood that z denotes (z1 , . . . , zI ) whenever zi ∈ Zi for all i ∈ I. If Zi = Ai × Bi for

all i ∈ I, we at times ignore the correct order of tuples and write ((a1 , . . . , aI ), (b1 , . . . , bI )) ∈ Z

for (ai , bi )i∈I ∈ Z.

There is a nonempty and finite set X of pure outcomes; the set of outcomes is the set

Y = {y ∈ R^{#X} : y ≥ 0, ∑_{n=1}^{#X} y_n = 1} of lotteries over X. We let ui (x, θ) denote the von

Neumann-Morgenstern utility that i derives from the pure outcome x if the payoff type profile

is θ ∈ Θ, and, in a slight abuse of notation, ui (y, θ) the expected utility that i derives from

lottery y if the payoff type profile is θ. If m, n ∈ N = {0, 1, . . .} and m > n, then {m, . . . , n}

is the empty set.

12 As an exception to this rule, Hi will denote the set of non-terminal histories at which i is active, and H ≠ ∏i∈I Hi the set of all histories (compare Definition 9).

3.1 Mechanisms

A (dynamic) mechanism Γ = ⟨H, (ℋi )i∈I , P, C⟩ is a finite extensive game form with perfect

recall that has no trivial decision nodes. The set of dynamic mechanisms includes the set of

static mechanisms or normal game forms as a proper subset. We relegate most definitions to

Appendix A, but summarize some important notation here. A mechanism’s first component,

H, is a finite set of histories h = (a1 , . . . , an ), which are finite sequences of actions. We

let ∅ denote the initial history and T the set of terminal histories. At non-terminal history

h = (a1 , . . . , an ) ∈ H\T , the agent determined by the player function P : H\T → I chooses

an action from the set {a : (h, a) ∈ H}. Here, (h, a) denotes the history (a1 , . . . , an , a). Once

a terminal history h ∈ T is reached the lottery C(h) ∈ Y obtains as the outcome of the

mechanism. The set ℋi partitions the set Hi of all histories at which i moves into information

sets H. Whenever i moves he knows the information set, but not the history he is at.

A strategy si for player i specifies an action for each information set H ∈ Hi . The set of

i’s strategies is Si . The terminal history induced by strategy profile s ∈ S is denoted by ζ(s).

We use the symbol ⪯ to indicate precedence among histories, and also to indicate precedence

among i’s information sets. We let Si (h) = {si ∈ Si : ∃s−i ∈ S−i , h ⪯ ζ(s)} be the set of i’s

strategies admitting history h, Si (H) be the set of i’s strategies admitting j’s information set

H, j ∈ I, and S−i (H) be the set of −i’s strategy profiles admitting H. Moreover, Σi = Si ×Θi ,

Σ−i = S−i × Θ−i , Σi (h) = Si (h) × Θi and Σ−i (H) = S−i (H) × Θ−i .

For any J ⊆ I, we let both H((sj , θj )j∈J ) and H((sj )j∈J ) denote the set of histories admitted by (sj )j∈J , and Hi ((sj , θj )j∈J ) = Hi ((sj )j∈J ) = {H ∈ Hi : ∃h ∈ H, h ∈ H((sj )j∈J )}

the set of i’s information sets admitted by (sj )j∈J . For A ⊆ Σ, H(A) denotes the union of

sets H(s, θ) and Hi (A) the union of sets Hi (s, θ), where both times (s, θ) ∈ A. Similarly for

A ⊆ Σi . Finally, we let lh denote the length of history h ∈ H and H =t = {h ∈ H : lh = t} the

set of histories with length t. The sets Hi=t , H ≤t etc. are defined analogously. Combinations

of such notation have the obvious meaning. For example, H =1 (s) is the singleton consisting

of the history of length 1 which lies on the path induced by strategy profile s.

3.2 Beliefs and Sequential Rationality

Player i’s beliefs about his opponents’ strategies and payoff types are captured by a family of

probability measures on Σ−i , with each measure representing i’s belief at one of his information

sets. Player i also holds a belief at the initial history, even if it does not comprise one of his

information sets. Formally, i’s beliefs are indexed by the members of H̄i = Hi ∪{{∅}} and form

a conditional probability system. For later, note that we let H̄i (s−i ) consist of the elements of

H̄i admitted by s−i etc.

Definition 1 (Rényi, 1955) A conditional probability system (CPS) on Σ−i is a function µi : 2^{Σ−i} × H̄i → [0, 1] such that

a) for all H ∈ H̄i , µi (·|H) is a probability measure on (Σ−i , 2^{Σ−i} ),

b) for all H ∈ H̄i , µi (Σ−i (H)|H) = 1,

c) for all H, H′ ∈ H̄i , if H′ ⪯ H then µi (A|H) µi (Σ−i (H)|H′ ) = µi (A|H′ ) for all A ⊆ Σ−i (H).

Condition b) requires that at information set H, agent i places zero (marginal) probability

on any strategy of −i which would have prevented that H occurs. Condition c) says that i

uses Bayesian updating “whenever applicable”: Suppose that H′ ⪯ H, and that at H′ , agent i believes that A will happen with probability µi (A|H′ ). The play proceeds and i finds himself at H. If H was no surprise to him, that is, if µi (Σ−i (H)|H′ ) > 0, he now believes in A with probability

µi (A|H) = µi (A|H′ ) / µi (Σ−i (H)|H′ ).

If, on the other hand, H did surprise him — if µi (Σ−i (H)|H′ ) = 0 —, Bayesian updating

“does not apply” and condition c) allows any µi (A|H) ∈ [0, 1], that is, any new estimate of the

likelihood of A. We let ∆(Σ−i ) denote the set of probability measures on Σ−i and ∆H̄i (Σ−i )

denote the set of conditional probability systems on Σ−i .
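Condition c) can be illustrated with a small computation. The following Python sketch is only an illustration: the function name `bayes_update`, the dictionary representation of beliefs and the toy prior are assumptions made here, not notation from the paper. It returns the belief at H that condition c) pins down when H is reached with positive probability from H′, and returns `None` when H is a surprise, in which case condition c) imposes no restriction.

```python
from fractions import Fraction


def bayes_update(prior, admits_H):
    """Belief at H implied by condition c).

    prior: dict mapping opponent pairs (s, theta) to probabilities at H'.
    admits_H: set of pairs consistent with reaching H, playing the role of Sigma_{-i}(H).
    """
    mass = sum(p for pair, p in prior.items() if pair in admits_H)
    if mass == 0:
        return None  # H is a surprise: Bayesian updating does not apply
    return {
        pair: (p / mass if pair in admits_H else Fraction(0))
        for pair, p in prior.items()
    }


# Hypothetical prior over (strategy, payoff type) pairs of the opponent.
prior = {("a", "t"): Fraction(1, 2), ("a", "t'"): Fraction(1, 4), ("b", "t"): Fraction(1, 4)}
posterior = bayes_update(prior, {("a", "t"), ("a", "t'")})
surprise = bayes_update({("b", "t"): Fraction(1)}, {("a", "t"), ("a", "t'")})
```

Here the posterior places probability 2/3 on ("a", "t") and 1/3 on ("a", "t'"), while the second call returns `None` because the prior assigns zero probability to every pair that admits H.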

Given a CPS µi ∈ ∆^{H̄i} (Σ−i ),

U_i^{µi} (si , θi , H) = ∑_{(s−i , θ−i ) ∈ Σ−i (H)} ui (C(ζ(s)), θ) µi ((s−i , θ−i )|H)

denotes agent i’s expected utility if he plays strategy si ∈ Si (H), is of payoff type θi and holds beliefs µi (·|H).

Definition 2 Strategy si ∈ Si is sequentially rational for payoff type θi ∈ Θi of player i with respect to the beliefs µi ∈ ∆^{H̄i} (Σ−i ) if for all H ∈ Hi (si ) and all s′i ∈ Si (H)

U_i^{µi} (si , θi , H) ≥ U_i^{µi} (s′i , θi , H).

We let ri : Θi × ∆H̄i (Σ−i ) ↠ Si denote the correspondence that maps (θi , µi ) to the set of

strategies that are sequentially rational for payoff type θi with beliefs µi , and ρi : ∆H̄i (Σ−i ) ↠

Σi denote the correspondence that maps µi to the set of strategy-payoff type pairs that includes

(si , θi ) if and only if si is sequentially rational for payoff type θi with beliefs µi . For each i ∈ I,

ri and ρi are nonempty-valued.

3.3 Strong Rationalizability

Battigalli (1999, 2003) defines strong rationalizability for multi-stage games. We extend his

definition to mechanisms in the obvious way.

Definition 3 For i ∈ I let F_i^0 = Σi and Φ_i^0 = ∆^{H̄i} (Σ−i ) and recursively define the set F_i^{k+1} of strongly (k + 1)-rationalizable pairs (si , θi ) for agent i by

F_i^{k+1} = ρi (Φ_i^k ),

and the set Φ_i^{k+1} of strongly (k + 1)-rationalizable beliefs for agent i by

Φ_i^{k+1} = {µi ∈ Φ_i^k : ∀H ∈ H̄i , Σ−i (H) ∩ F_{−i}^{k+1} ≠ ∅ ⇒ µi (F_{−i}^{k+1} |H) = 1},

k ∈ N. Finally, let F_i^∞ = ∩_{k=0}^∞ F_i^k be the set of strongly rationalizable strategy-payoff type pairs for player i, and Φ_i^∞ = ∩_{k=0}^∞ Φ_i^k be the set of strongly rationalizable beliefs for player i.

The strongly rationalizable strategies are determined by iteratively deleting never-best

sequential responses, where it is required that at each of his information sets an agent believes

in the highest degree of his opponents’ rationality that is consistent with the information set

(best-rationalization principle). For convenience, we let R_i^k (θi ) = {si ∈ Si : (si , θi ) ∈ F_i^k } denote the set of strongly (k-)rationalizable strategies for θi ∈ Θi , and R^k (θ) = ∏i∈I R_i^k (θi ), k ∈ N ∪ {∞}. The sets R_i^∞ (θi ) and Φ_i^∞ are nonempty for all i ∈ I and θi ∈ Θi .

4 Robust Virtual Implementation

A social choice function f : Θ → Y assigns a desired outcome to each payoff type profile. It

is robustly implementable if there exists a mechanism such that for every payoff type profile

θ, every strategy profile that is strongly rationalizable for θ leads to f (θ). A social choice

function is robustly virtually implementable if it can be robustly approximately implemented in the following sense, where ‖·‖ denotes the Euclidean norm on R^{#X} .

Definition 4 Social choice function f is robustly ε-implementable if there is a mechanism Γ such that ‖C(ζ(s)) − f (θ)‖ ≤ ε for all (s, θ) ∈ F^∞ . Social choice function f is robustly virtually implementable (rv-implementable) if it is robustly ε-implementable for every ε > 0.
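The distance condition in Definition 4 is straightforward to check once the lotteries induced by the strongly rationalizable pairs are known. The Python sketch below is a hedged illustration: the function names, the representation of lotteries as tuples, and the toy perturbation toward the uniform lottery are assumptions made here; computing F^∞ itself is outside its scope.

```python
import math


def robustly_eps_implements(induced, f, eps):
    """Check the Euclidean-distance condition of Definition 4.

    induced: iterable of (theta, lottery) pairs, one per strongly rationalizable
             strategy-type profile, where lottery stands for C(zeta(s)).
    f: dict mapping theta to the desired lottery f(theta).
    """
    return all(math.dist(lottery, f[theta]) <= eps for theta, lottery in induced)


def perturb(target, weight):
    """Hypothetical helper: mix `target` with the uniform lottery."""
    n = len(target)
    return tuple((1 - weight) * p + weight / n for p in target)


f = {"theta": (1.0, 0.0, 0.0, 0.0)}
induced = [("theta", perturb(f["theta"], 0.1))]
close_enough = robustly_eps_implements(induced, f, 0.1)
too_strict = robustly_eps_implements(induced, f, 0.05)
```

In this toy case the induced lottery lies at distance of roughly 0.087 from f(θ), so the condition holds for ε = 0.1 but fails for ε = 0.05.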

4.1 Necessary Conditions for Robust Virtual Implementation

The robust approach to implementation gives rise to the following incentive compatibility

condition.

Definition 5 Social choice function f is ex-post incentive compatible (epIC) if for all i ∈ I,

all θ ∈ Θ and all θi′ ∈ Θi

ui (f (θ), θ) ≥ ui (f (θi′ , θ−i ), θ).
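For a finite environment, Definition 5 can be verified by enumeration. The following Python sketch checks epIC for two agents with two payoff types each, using the utilities of Example 2.1 and the social choice function f that assigns w, x, y, z to the four payoff type profiles in that example. The coding of types as 0 and 1, the outcome indices and the function name `is_epic` are assumptions of this illustration.

```python
# Utility vectors over (w, x, y, z), keyed by (t1, t2); 0 = unprimed, 1 = primed.
U1 = {(0, 0): (5, 1, 0, 2), (0, 1): (1, 1, 0, 0), (1, 0): (1, 0, 2, 0), (1, 1): (5, 1, 0, 2)}
U2 = {(0, 0): (1, 0, 3, 2), (0, 1): (0, 1, 3, 2), (1, 0): (0, 1, 3, 2), (1, 1): (0, 1, 0, 1)}


def is_epic(f, utils):
    """f maps (t1, t2) to an outcome index; utils = (U1, U2)."""
    for (t1, t2), truth in f.items():
        lie1 = f[(1 - t1, t2)]  # outcome if agent 1 misreports
        lie2 = f[(t1, 1 - t2)]  # outcome if agent 2 misreports
        if utils[0][(t1, t2)][truth] < utils[0][(t1, t2)][lie1]:
            return False
        if utils[1][(t1, t2)][truth] < utils[1][(t1, t2)][lie2]:
            return False
    return True


f = {(0, 0): 0, (0, 1): 1, (1, 0): 2, (1, 1): 3}  # w, x, y, z as in Example 2.1
g = {(0, 0): 2, (0, 1): 1, (1, 0): 0, (1, 1): 3}  # hypothetical variant swapping w and y
f_is_epic = is_epic(f, (U1, U2))
g_is_epic = is_epic(g, (U1, U2))
```

The function f passes the check, whereas the hypothetical variant g fails it because payoff type θ1 facing θ2 would gain from misreporting.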

Bergemann and Morris (2005) show that epIC is necessary for robust implementation in

static mechanisms. Roughly, if f is robustly implementable then it is implementable regardless

of what agent i believes about −i’s payoff types. Payoff type θi must never have an incentive

to deviate, and in particular not if he holds the degenerate belief in θ−i . A payoff type θi

with this belief must prefer playing one of his rationalizable strategies and obtaining f (θ) over

imitating payoff type θi′ and obtaining f (θi′ , θ−i ). Generalizing this argument, one can show

that epIC is also necessary for rv-implementation in static mechanisms (BM, Theorem 2), and,

as we will see in Proposition 1, for rv-implementation in dynamic mechanisms.

Admitting dynamic mechanisms does not change the incentive compatibility condition. But

it weakens the second condition necessary for rv-implementation. For rv-implementation in

static mechanisms, this second condition is called robust measurability (BM). We now define

strong dynamic robust measurability and dynamic robust measurability. These conditions

are both weaker than robust measurability and will turn out to be sufficient and necessary,

respectively, for rv-implementation in dynamic mechanisms.

First, we generalize BM’s notion of strategic indistinguishability. We write θi ∼_i^Γ θi′ and say that θi and θi′ are strategically indistinguishable by the mechanism Γ if there exist θ−i , θ′−i ∈ Θ−i such that ζ(s) = ζ(s′ ) for some s ∈ R^∞ (θ), s′ ∈ R^∞ (θ ′ ). We write θi ∼i θi′ and say that θi and θi′ are strategically indistinguishable if θi ∼_i^Γ θi′ for every mechanism Γ. Otherwise, we call θi and θi′ strategically distinguishable.

Definition 6 Social choice function f is strongly dynamically robustly measurable (strongly

dr-measurable) if for all i ∈ I, θi , θi′ ∈ Θi and θ−i ∈ Θ−i , θi ∼i θi′ implies f (θi , θ−i ) = f (θi′ , θ−i ).

Strong dr-measurability is a direct generalization of BM’s robust measurability. Robust

measurability requires that f treats two payoff types the same if no static mechanism strategically distinguishes them, and strong dr-measurability requires this only if no mechanism at

all (static or dynamic) strategically distinguishes them. Proposition 3 will establish strong

dr-measurability as a sufficient condition for rv-implementability.

Strong dr-measurability is, however, not necessary for rv-implementation. Example 4.1 will

illustrate why. To obtain a necessary condition, we further weaken robust measurability by

introducing a notion based on the strategic indistinguishability of payoff type profiles instead

of payoff types. We write θ ∼Γ θ ′ if Γ is a mechanism and ζ(s) = ζ(s′ ) for some s ∈ R∞ (θ),

s′ ∈ R∞ (θ ′ ). We write θ ∼ θ ′ and say that θ and θ ′ are strategically indistinguishable if θ ∼Γ θ ′

for every mechanism Γ. Otherwise, we call θ and θ ′ strategically distinguishable. The binary

relations ∼ and ∼Γ are reflexive and symmetric, but not necessarily transitive.

Definition 7 Social choice function f is dynamically robustly measurable (dr-measurable) if

for all θ, θ ′ ∈ Θ, θ ∼ θ ′ implies f (θ) = f (θ ′ ).
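Given the terminal histories that are strongly rationalizably reached in a fixed mechanism Γ, the relation ∼Γ and the measurability requirement relative to it can be checked by enumeration. The Python sketch below is an illustration only: it works with a single mechanism, so it tests ∼Γ rather than ∼ (which quantifies over all mechanisms), and the function names and toy data are hypothetical.

```python
from itertools import combinations


def indistinguishable_pairs(reached):
    """Pairs of payoff type profiles that share a terminal history.

    reached[theta]: set of terminal histories zeta(s) with s strongly
    rationalizable for theta in the fixed mechanism.
    """
    return [(a, b) for a, b in combinations(reached, 2) if reached[a] & reached[b]]


def respects(f, pairs):
    """True if f assigns the same outcome to every indistinguishable pair."""
    return all(f[a] == f[b] for a, b in pairs)


# Hypothetical data: agent 1's second announcement ends the game.
reached = {
    (0, 0): {("m1", "m2")},
    (0, 1): {("m1", "m2'")},
    (1, 0): {("m1'",)},
    (1, 1): {("m1'",)},
}
pairs = indistinguishable_pairs(reached)
f = {(0, 0): "x", (0, 1): "y", (1, 0): "z", (1, 1): "z"}
g = {(0, 0): "x", (0, 1): "y", (1, 0): "z", (1, 1): "x"}
f_ok, g_ok = respects(f, pairs), respects(g, pairs)
```

In the toy data only the profiles (1, 0) and (1, 1) share a terminal history, so f passes the check and g does not.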

Example 4.1 There are two agents i ∈ {1, 2} with two payoff types each, Θi = {θi , θi′ }, and

three pure outcomes, X = {x, y, z}. Player 1 prefers “not z” when he is of payoff type θ1 and

z when he is of payoff type θ1′ :

u1 (x, θ1 , ·) = u1 (y, θ1 , ·) > u1 (z, θ1 , ·)

u1 (z, θ1′ , ·) > u1 (x, θ1′ , ·) = u1 (y, θ1′ , ·)

Player 2 is indifferent between all outcomes unless the payoff type profile is (θ1 , θ2 ), in which

case he favors x, or (θ1 , θ2′ ), in which case he favors y:

u2 (x, θ1 , θ2 ) > u2 (y, θ1 , θ2 ) = u2 (z, θ1 , θ2 )

u2 (y, θ1 , θ2′ ) > u2 (x, θ1 , θ2′ ) = u2 (z, θ1 , θ2′ )

u2 (x, θ1′ , ·) = u2 (y, θ1′ , ·) = u2 (z, θ1′ , ·)

Clearly (θ1′ , θ2 ) ∼ (θ1′ , θ2′ ),13 and therefore θ2 ∼2 θ2′ . The social choice function f given in Figure 3 is not strongly dr-measurable because f (θ1 , θ2 ) ≠ f (θ1 , θ2′ ) even though θ2 ∼2 θ2′ . But f is dr-measurable, and indeed robustly and therefore robustly virtually implementable via the mechanism Γ. This is because in Γ, (ϑ1 , θ1′ ) ∈ Σ1 and (ϑ′1 , θ1 ) ∈ Σ1 are eliminated via strict dominance: for any payoff type of agent 1, truth-telling strictly dominates lying. Hence if agent 2 gets to move he infers by forward induction that 1’s payoff type is θ1 . Given that he believes that he faces θ1 , any payoff type of 2 strictly prefers truth-telling over lying.

         θ2    θ2′
θ1       x     y
θ1′      z     z

[Game tree of Γ: agent 1 announces ϑ1 or ϑ′1 . After ϑ′1 the outcome is z. After ϑ1 , agent 2 announces ϑ2 , which leads to x, or ϑ′2 , which leads to y.]

Figure 3: f (top) and mechanism Γ that rv-implements f (bottom)

Robust measurability implies strong dr-measurability, which itself implies dr-measurability. In general, the converses of these implications are not true. Example 4.1 presented a social choice function that is dr- but not strongly dr-measurable, and a main implication of Section 5 will be that strong dr-measurability can be considerably weaker than robust measurability. Example 1.1 already described some environments in which all social choice functions are strongly dr-measurable, but only constant social choice functions are robustly measurable.

13 Formally, this follows from the proof of Proposition 5 which, if we let i = 2, applies without change to the current example.

We prove the following proposition in Appendix B.

Proposition 1 Every rv-implementable social choice function is epIC and dr-measurable.

4.2 Sufficient Conditions for Robust Virtual Implementation

In this subsection, we show that epIC and strong dr-measurability are sufficient for rv-implementation. Like BM and Artemov, Kunimoto, and Serrano (2013), we adapt the logic

behind the mechanisms of Abreu and Matsushima (1992a,b) in constructing our implementing

mechanisms. What is new is that our mechanisms are dynamic. This matters in particular

whenever we need to “combine” several mechanisms in such a way that the combined mechanism strategically distinguishes at least as many payoff type profiles as each of the individual

mechanisms (see the proofs of Propositions 2 and 3). Combining dynamic mechanisms can

change the information available at some information sets as well as which information sets

are admitted by strongly rationalizable strategies, and we need to make sure that this does

not affect which payoff type profiles are being strategically distinguished.

First, we let ȳ denote the uniform lottery that assigns probability 1/#X to all x ∈ X, and

recall the economic property used by BM.

Definition 8 (Economic Property) The economic property is satisfied if there exists a profile of lotteries (zi )i∈I such that, for each i ∈ I and θ ∈ Θ, both ui (zi , θ) > ui (ȳ, θ) and

uj (ȳ, θ) ≥ uj (zi , θ) for all j ≠ i.

The economic property allows us to single out and reward agent i. If ȳ is the reference

outcome, we can reward agent i without rewarding any other agent at the same time, namely

by substituting zi for ȳ. The economic property is satisfied in quasilinear environments (we can

reward i by transferring some of −i’s money to i) and in Example 2.1, if we admit lotteries.14
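The claim about Example 2.1 can be checked directly. The Python sketch below tests the two inequalities of Definition 8 for a given profile of reward lotteries, using the utilities of Figure 1 and, as candidate rewards, the degenerate lotteries on w for agent 1 and on z for agent 2. The coding of types and outcomes and the function names are assumptions of this illustration.

```python
from fractions import Fraction

# Utility vectors over (w, x, y, z), keyed by (t1, t2); 0 = unprimed, 1 = primed.
U1 = {(0, 0): (5, 1, 0, 2), (0, 1): (1, 1, 0, 0), (1, 0): (1, 0, 2, 0), (1, 1): (5, 1, 0, 2)}
U2 = {(0, 0): (1, 0, 3, 2), (0, 1): (0, 1, 3, 2), (1, 0): (0, 1, 3, 2), (1, 1): (0, 1, 0, 1)}


def expected(u_row, lottery):
    """Expected utility of a lottery over the pure outcomes."""
    return sum(Fraction(u) * p for u, p in zip(u_row, lottery))


def economic_property(utils, rewards):
    """Check Definition 8 for the reward lotteries rewards[i] = z_i."""
    n = len(rewards[0])
    y_bar = [Fraction(1, n)] * n  # uniform lottery
    for theta in utils[0]:
        for i, z_i in enumerate(rewards):
            if not expected(utils[i][theta], z_i) > expected(utils[i][theta], y_bar):
                return False  # z_i does not strictly reward agent i
            for j in range(len(utils)):
                if j != i and expected(utils[j][theta], y_bar) < expected(utils[j][theta], z_i):
                    return False  # z_i would also reward agent j
    return True


holds = economic_property((U1, U2), [(1, 0, 0, 0), (0, 0, 0, 1)])
swapped = economic_property((U1, U2), [(0, 0, 0, 1), (1, 0, 0, 0)])
```

With the rewards stated above the check succeeds; with the two lotteries swapped it fails, since for the profile (θ1 , θ2 ) agent 1 is exactly indifferent between z and the uniform lottery.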

Second, we recall some facts about the environment from BM (their Lemma 2) in Lemma

1, and generalize an observation of BM (their Lemma 4) to dynamic mechanisms in Lemma 2.

Lemma 1 (BM) There exist C0 > 0 and, if the economic property holds, c0 > 0 such that

a) |ui (y, θ) − ui (y ′ , θ)| ≤ C0 for all i ∈ I, y, y ′ ∈ Y and θ ∈ Θ.

b) ui (zi , θ) > ui (ȳ, θ) + c0 for all i ∈ I and θ ∈ Θ. Here, (zi )i∈I are the lotteries from the

economic property.

14 In Example 2.1, the degenerate lotteries placing probability one on w for agent 1 and on z for agent 2 satisfy the requirements of the economic property.

The existence of the uniform upper bound on utility differences in part a) of the preceding

lemma follows directly from the finiteness of I, X and Θ, and the existence of the minimal

utility difference between zi and ȳ in part b) from the finiteness of I and Θ. Lemma 2, proved

in Appendix B, implies that in any mechanism, the loss in utility from playing a strategy that

is not strongly (k + 1)-rationalizable is uniformly bounded below. It is a consequence of the

finiteness of Σ−i and Hi , the resulting compactness of Φki , k ∈ N, and the continuity of i’s

expected utility with respect to his belief system.

Lemma 2 For any mechanism Γ there exists ηΓ > 0 such that for any i ∈ I, k ∈ N, (si , θi ) ∉ F_i^{k+1} and µi ∈ Φ_i^k there are H ∈ Hi (si ) and s′i ∈ R_i^{k+1} (θi ) ∩ Si (H) such that

U_i^{µi} (s′i , θi , H) > U_i^{µi} (si , θi , H) + ηΓ .

Third, suppose that f is strongly dr-measurable (and epIC), and that f (θ) ≠ f (θ ′ ) and

f (θ̃) ≠ f (θ̃ ′ ). Since f is strongly dr-measurable it is dr-measurable. Thus there is a mechanism Γ which strategically distinguishes θ and θ ′ , and a mechanism Γ̃ which strategically

distinguishes θ̃ and θ̃ ′ . Potentially, Γ ≠ Γ̃. If we want to rv-implement f , a first step is to find

a single mechanism that strategically distinguishes both θ and θ ′ and θ̃ and θ̃ ′ . Does such a

mechanism exist?

If Γ and Γ̃ are static mechanisms, then letting the agents play Γ and Γ̃ simultaneously

strategically distinguishes all payoff type profiles that are strategically distinguishable by either

of the two mechanisms. For this to be true, it is irrelevant how we weight the outcomes of Γ

and Γ̃. In particular, BM (their Lemma 5) show that if C and C̃ are the outcome functions

of Γ and Γ̃, respectively, then it suffices to let the new, combined outcome function equal

εC + (1 − ε)C̃ for some ε ∈ (0, 1).
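For static mechanisms, the combined outcome function is simple to write down. The Python sketch below is a minimal illustration: mechanisms are represented as dictionaries from strategy profiles to lotteries, combined profiles are stored as pairs (profile in Γ, profile in Γ̃) rather than agent by agent, and the function name and toy data are hypothetical.

```python
from fractions import Fraction


def combine_static(C, C_tilde, eps):
    """Outcome function eps * C + (1 - eps) * C_tilde on pairs of strategy profiles."""
    return {
        (s, t): tuple(eps * p + (1 - eps) * q for p, q in zip(C[s], C_tilde[t]))
        for s in C
        for t in C_tilde
    }


# Hypothetical mechanisms over two pure outcomes.
C = {("a", "a"): (Fraction(1), Fraction(0)), ("a", "b"): (Fraction(0), Fraction(1))}
C_tilde = {("l",): (Fraction(1, 2), Fraction(1, 2))}
combined = combine_static(C, C_tilde, Fraction(1, 3))
```

Every entry of `combined` is again a lottery, and each agent’s choice in one component does not affect the outcome contributed by the other component, which is why the weight ε is irrelevant for distinguishability in the static case.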

If Γ or Γ̃ are genuinely dynamic, letting the agents play the mechanisms simultaneously

becomes impossible and some strategic interdependence between the mechanisms unavoidable.

Inevitably, at some information set an agent chooses after having observed some choices made

in the other mechanism. Such an interdependence generally changes the set of strongly rationalizable strategies. However, if we let the agents play Γ first and Γ̃ second and let ε be

close to 1, then the realized terminal histories remain unaffected despite possible changes in

the strongly rationalizable strategies, and the strategic distinguishability of θ and θ ′ and θ̃

and θ̃ ′ is preserved. This result has surprisingly profound roots and relies on recent work by

Chen and Micali (2011). We make it precise in the proof of the upcoming Proposition 2 (see

Appendix B).

We generalize from the case of two mechanisms and ask whether there is a mechanism which

reveals to the mechanism designer at least as much information about the true payoff type

profile as any other mechanism. Formally, we say that mechanism Γ is maximally revealing if

it strategically distinguishes any two payoff type profiles that are strategically distinguishable

by some mechanism, that is, if for all θ, θ ′ ∈ Θ, θ ≁ θ ′ implies θ ≁Γ θ ′ .

Proposition 2 There exists a maximally revealing mechanism.

Armed with the knowledge that a maximally revealing mechanism exists, we can now

establish strong dr-measurability and epIC as sufficient conditions for rv-implementation.

Proposition 3 Suppose the environment satisfies the economic property. Then every ex-post

incentive compatible social choice function that is strongly dr-measurable is rv-implementable.

We conclude this section by proving Proposition 3. Compared with the necessary conditions from Proposition 1, we make two additional assumptions. First, we assume the economic

property in order to guarantee the existence of small punishments and rewards which we use

in constructing our implementing mechanisms. This is much like in Abreu and Matsushima

(1992a,b), Artemov, Kunimoto, and Serrano (2013) and BM. Second, we assume strong dr-measurability instead of dr-measurability. Our mechanisms will resemble a direct mechanism

in which i expects any j ≠ i to announce his true payoff type θj or a θ̂j such that θ̂j ∼j θj .

By epIC, truth-telling is a best response for i if i believes that all j ≠ i tell the truth. Strong

dr-measurability ensures that truth-telling remains a best response even if i expects some j to

lie by announcing a θ̂j which is strategically indistinguishable from j’s true payoff type θj .

In Section 5, we will consider environments that we call QPCA environments. Any such

environment satisfies the economic property, and if its valuation functions are generic, we

will be able to find a mechanism that strategically distinguishes all payoff type profiles. In

this case, the difference between dr- and strong dr-measurability vanishes (both are trivially

satisfied by every social choice function), the additional assumptions of Proposition 3 have

no bite and an exact characterization of rv-implementation obtains: a social choice function

is rv-implementable if and only if it is epIC (Corollary 1). Maintaining the assumption of the

economic property, we will even be able to generalize this simple characterization to a broad

class of private consumption environments (Corollary 2).

Proof. Let f be an epIC and strongly dr-measurable social choice function and $\Gamma^* = (H^*, (\mathcal{H}_i^*)_{i\in I}, P^*, C^*)$ be a maximally revealing mechanism. Let $\delta > 0$ and $L \in \mathbb{N}\setminus\{0\}$ be such that

$$\delta^2 C_0 < \delta\,\eta_{\Gamma^*} \tag{1}$$

and

$$\frac{1}{L}\,C_0 < \delta^2\,\frac{1}{I}\,c_0, \tag{2}$$

where $C_0$ and $c_0$ are the constants from Lemma 1 and $\eta_{\Gamma^*}$ the constant from Lemma 2. The following mechanism $\Gamma = (H, (\mathcal{H}_i)_{i\in I}, P, C)$ will turn out to robustly $\sqrt{2}(\delta + \delta^2)$-implement


f. First, each agent is asked to submit his payoff type L times. An agent can lie, and his l-th

submission can differ from his m-th submission. His submissions are not revealed to the other

agents during the entire mechanism. Second, the agents play Γ∗. Formally, the set of histories is¹⁵

$$H = \{\, h : h = (\theta_1,\dots,\theta_I,h^*) \text{ for some } (\theta_i)_{i\in I} \in \Theta_1^L\times\dots\times\Theta_I^L,\ h^*\in H^* \,\}.$$

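The player function is defined by $P(\theta_1,\dots,\theta_{i-1}) = i$ and $P(\theta_1,\dots,\theta_I,h^*) = P^*(h^*)$ for $i\in I$ and $(\theta_1,\dots,\theta_I,h^*)\in H$. Agent i's information sets are $\mathcal{H}_i^{\emptyset} = \{(\theta_1,\dots,\theta_{i-1}) : \theta_j\in\Theta_j^L \text{ for } j<i\}$ and

$$[\theta_i,\mathcal{H}^*] = \{(\theta_1,\dots,\theta_I,h^*) : \theta_j\in\Theta_j^L \text{ for } j\neq i,\ h^*\in\mathcal{H}^*\}, \qquad \forall\,\theta_i\in\Theta_i^L,\ \mathcal{H}^*\in\mathcal{H}_i^*.$$

In summary, $\mathcal{H}_i = \{\mathcal{H}_i^{\emptyset}\} \cup \{[\theta_i,\mathcal{H}^*] : \theta_i\in\Theta_i^L,\ \mathcal{H}^*\in\mathcal{H}_i^*\}$. The agents' l-th submissions of

their payoff types determine nearly 1/L-th of the outcome via a direct mechanism. Hence, if all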

agents truthfully announce their payoff type L times, the outcome of the mechanism virtually

equals the outcome stipulated by f . Because f is strongly dr-measurable this is also true if the

agents lie, but their lies are “inconsequential.” We call payoff type θi ’s lie θ̂i inconsequential

if θi «i θ̂i , where «i denotes the transitive closure of ∼i . If on the other hand ¬(θi «i θ̂i ), then

there might exist a θ−i for which f (θi , θ−i ) ≠ f (θ̂i , θ−i ) and we call i’s lie “serious.” The small

part of the outcome that is not determined by direct mechanisms incentivizes the agents to not

ever seriously lie in their submissions of their payoff types. This part consists of the outcome

of Γ∗ , and to a smaller extent of some reward terms. More precisely, the outcome function

C : T → Y assigns the lottery

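$$C(h) = (1-\delta-\delta^2)\,\frac{1}{L}\sum_{l=1}^{L} f(\theta^l) \;+\; \delta\,C^*(h^*) \;+\; \delta^2\,\frac{1}{I}\sum_{i\in I} r_i(h)$$

to terminal history $h = ((\theta_i^1,\dots,\theta_i^L)_{i\in I}, h^*)$, where agent i's reward $r_i(h)$ is defined by

$$
r_i\bigl((\theta_i^1,\dots,\theta_i^L)_{i\in I},h^*\bigr)=
\begin{cases}
\bar y & \text{if } \exists m\in\{1,\dots,L\} \text{ s.t.}\\
 & \text{a) } h^*\notin H^*(R_i^{*,\infty}(\hat\theta_i)) \text{ for all } \hat\theta_i \text{ s.t. } \hat\theta_i «_i \theta_i^m, \text{ and}\\
 & \text{b) } \forall l\in\{1,\dots,m-1\}\ \forall j\neq i:\ h^*\in H^*(R_j^{*,\infty}(\hat\theta_j)) \text{ for some } \hat\theta_j \text{ s.t. } \hat\theta_j «_j \theta_j^l,\\
z_i & \text{otherwise.}
\end{cases}
$$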
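The outcome function is a convex combination of three kinds of lotteries, with weights that sum to one. A minimal sketch, assuming finite lotteries represented as dictionaries from outcomes to probabilities (a representation chosen here for illustration only; `mix` and `outcome_lottery` are hypothetical names):

```python
def mix(weighted_lotteries):
    """Convex combination of lotteries given as (weight, {outcome: prob}) pairs."""
    result = {}
    for weight, lottery in weighted_lotteries:
        for outcome, prob in lottery.items():
            result[outcome] = result.get(outcome, 0.0) + weight * prob
    return result


def outcome_lottery(delta, direct_outcomes, c_star, rewards):
    """Lottery C(h) for one terminal history h.

    direct_outcomes: the L lotteries f(theta^l), one per round of announcements
    c_star:          the lottery C*(h*) from the Gamma*-part
    rewards:         the I lotteries r_i(h), one per agent
    """
    L = len(direct_outcomes)
    I = len(rewards)
    parts = [((1 - delta - delta**2) / L, lot) for lot in direct_outcomes]
    parts.append((delta, c_star))
    parts += [(delta**2 / I, r) for r in rewards]
    return mix(parts)


# Toy example with degenerate lotteries (all values are made up).
example = outcome_lottery(
    delta=0.1,
    direct_outcomes=[{"x": 1.0}, {"x": 1.0}],
    c_star={"y": 1.0},
    rewards=[{"z": 1.0}, {"w": 1.0}],
)
print(example)
```

With a small δ almost all of the probability mass comes from the direct mechanisms, which is why truthful (or inconsequentially untruthful) announcements yield an outcome close to f.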

The reward term ri (h) either punishes agent i with ȳ or rewards him with the lottery zi

guaranteed to exist by the economic property. It punishes i exactly if one of his announcements

$\theta_i^m$ meets the following two conditions. First, the history $h^*$ observed in the Γ∗-part of Γ is not admitted by any strongly rationalizable strategy of $\theta_i^m$ or of an inconsequential lie of $\theta_i^m$ in the stand-alone mechanism Γ∗. Second, for all $l < m$, $h^*$ is admitted in Γ∗ by some strongly rationalizable strategies of $\theta_{-i}^l$ or of an inconsequential lie of $\theta_{-i}^l$.

¹⁵ For a finite sequence h with codomain A and $a_1,\dots,a_n\in A'$, let $(a_1,\dots,a_n,h)$ denote the finite sequence g with codomain $A\cup A'$ and length $n + l_h$ such that $g(k) = a_k$, $k = 1,\dots,n$, and $g(n+k) = h(k)$, $k = 1,\dots,l_h$.
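The two conditions of the reward term can be traced on a toy example. The sketch below rests on several assumptions that are not part of the paper: the indistinguishability relation of each agent is given as a finite list of symmetric pairs, the sets of Γ∗-histories admitted by each payoff type's strongly rationalizable strategies are supplied as precomputed data (`admitted`), and all names and data are hypothetical.

```python
def closure_classes(types, indistinguishable_pairs):
    """Map each type to its class under the transitive closure of a symmetric relation."""
    parent = {t: t for t in types}

    def find(t):
        while parent[t] != t:
            parent[t] = parent[parent[t]]  # path halving
            t = parent[t]
        return t

    for a, b in indistinguishable_pairs:
        parent[find(a)] = find(b)
    classes = {}
    for t in types:
        classes.setdefault(find(t), set()).add(t)
    return {t: frozenset(classes[find(t)]) for t in types}


def consistent(agent, announced, h_star, classes, admitted):
    """True if h_star is admitted by the announced type or by an inconsequential lie of it."""
    return any(h_star in admitted[agent][t] for t in classes[agent][announced])


def reward(i, announcements, h_star, classes, admitted):
    """Return 'punish' (y-bar) or 'reward' (z_i) for agent i."""
    others = [j for j in announcements if j != i]
    for m, theta_m in enumerate(announcements[i]):
        cond_a = not consistent(i, theta_m, h_star, classes, admitted)
        cond_b = all(
            consistent(j, announcements[j][l], h_star, classes, admitted)
            for l in range(m)
            for j in others
        )
        if cond_a and cond_b:
            return "punish"
    return "reward"


# Toy data: agent 1 has types a, b, c with a ~ b; agent 2 has types x, y.
classes = {
    1: closure_classes(["a", "b", "c"], [("a", "b")]),
    2: closure_classes(["x", "y"], []),
}
admitted = {
    1: {"a": {"h1"}, "b": {"h1", "h2"}, "c": {"h3"}},
    2: {"x": {"h1", "h3"}, "y": {"h2"}},
}

print(reward(1, {1: ["a", "a"], 2: ["x", "x"]}, "h2", classes, admitted))
print(reward(1, {1: ["a", "c"], 2: ["y", "x"]}, "h1", classes, admitted))
```

In the second call agent 1's second announcement is inconsistent with the observed history, but agent 2's first announcement was already inconsistent, so condition b) fails and agent 1 is not punished.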

The upcoming Lemma 3 implies that Γ strategically distinguishes any two payoff type

profiles that Γ∗ strategically distinguishes, and therefore that Γ is maximally revealing. For

si ∈ Si , let ϕi (si ) ∈ Si∗ be the strategy induced by the Γ∗ -part of si that is not prevented by

si itself. Formally, ϕi : Si → Si∗ satisfies

$$\varphi_i(s_i)(\mathcal{H}^*) = s_i\bigl([s_i(\mathcal{H}_i^{\emptyset}),\mathcal{H}^*]\bigr), \qquad \forall\,\mathcal{H}^*\in\mathcal{H}_i^*.$$

For convenience, let ϕ(s) = (ϕ1 (s1 ), . . . , ϕI (sI )) for any s ∈ S.
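The map from a strategy in Γ to its induced strategy in Γ∗ can be sketched as a dictionary lookup. This is only an illustration: strategies are assumed to be dictionaries, the key `ROOT` stands in for agent i's initial information set, and a key `(announcement, info_set)` stands in for the information set reached after that announcement. None of these names come from the paper.

```python
ROOT = "root"  # hypothetical label for agent i's initial information set


def induced_strategy(s_i, info_sets_star):
    """Restrict s_i to the Gamma*-information sets reachable given s_i's own announcement."""
    own_announcement = s_i[ROOT]
    return {H: s_i[(own_announcement, H)] for H in info_sets_star}


# Toy strategy: announce ("a", "b"), then act in two Gamma*-information sets.
s_i = {
    ROOT: ("a", "b"),
    (("a", "b"), "H1"): "left",
    (("a", "b"), "H2"): "right",
    (("a", "a"), "H1"): "right",  # prevented by s_i's own announcement
    (("a", "a"), "H2"): "right",  # prevented by s_i's own announcement
}

print(induced_strategy(s_i, ["H1", "H2"]))
```

The actions that `s_i` prescribes after announcements it never makes are discarded, which mirrors the phrase "not prevented by si itself."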

Lemma 3 For all (s, θ) ∈ F ∞ we have ζ ∗ (ϕ(s)) ∈ H ∗ (R∗,∞ (θ)).

We prove Lemma 3 in Appendix B. To gain some intuition for why the lemma holds,

note that at the time an agent reaches his information sets in the Γ∗ -part of Γ he has already

made the L announcements of his payoff type and can only influence two components of Γ’s outcome: $\delta C^*(h^*)$ and the reward terms $\delta^2\frac{1}{I}\sum_{i\in I} r_i(h)$. Suppose $\theta_i$ is certain that $\theta_{-i}$ follows extensions of the strategies in $R_{-i}^{*,k-1}(\theta_{-i})$ to Γ. By (1), seeking a more favorable reward by following a strategy in the Γ∗-part of Γ that is suboptimal in the stand-alone mechanism Γ∗

can never offset the loss the suboptimal strategy causes in δC ∗ (h∗ ). Hence, θi will only follow

extensions of the strategies in Ri∗,k (θi ). In the Γ∗ -part of Γ, we can eliminate at least as many

strategies from Fi∗,k−1 as in Γ∗ . Maybe we can actually eliminate strictly more strategies, but

we choose not to. This choice can change the set of strategies surviving the iterated removal

of never-best sequential responses, but Chen and Micali (2011) proved that the set of induced

terminal histories remains unaffected. As a consequence, any path of play observed in the

Γ∗ -part of Γ might be played in Γ∗ , as well.
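Conditions (1) and (2), on which this argument and the proof of Lemma 4 rely, can be met by first choosing δ small and then L large: (1) is equivalent to δ < ηΓ∗/C0 and (2) to L > I·C0/(δ²c0). The sketch below assumes that C0, c0 and ηΓ∗ are strictly positive (the lemmas defining them are stated elsewhere, so this is an assumption here); the function names and the parameter `delta_cap` are hypothetical.

```python
import math


def choose_delta_and_L(C0, c0, eta, num_agents, delta_cap=0.1):
    """Pick delta > 0 and a positive integer L satisfying conditions (1) and (2).

    (1) delta**2 * C0 < delta * eta           <=>  delta < eta / C0
    (2) C0 / L < delta**2 * c0 / num_agents   <=>  L > num_agents * C0 / (delta**2 * c0)
    Assumes C0, c0, eta > 0 (an assumption of this sketch).
    """
    if min(C0, c0, eta) <= 0 or num_agents < 1:
        raise ValueError("expected positive constants and at least one agent")
    delta = min(delta_cap, 0.5 * eta / C0)  # strictly below eta / C0
    L = math.floor(num_agents * C0 / (delta**2 * c0)) + 1  # strictly above the bound
    return delta, L


def satisfies_conditions(delta, L, C0, c0, eta, num_agents):
    """Check conditions (1) and (2) directly."""
    cond1 = delta**2 * C0 < delta * eta
    cond2 = C0 / L < delta**2 * c0 / num_agents
    return cond1 and cond2


# Made-up constants for illustration.
delta, L = choose_delta_and_L(C0=2.0, c0=0.5, eta=0.3, num_agents=3)
print(delta, L, satisfies_conditions(delta, L, 2.0, 0.5, 0.3, 3))
```

Lowering `delta_cap` tightens the approximation to f but forces a larger number L of announcement rounds, since the bound on L grows like 1/δ².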

The following lemma shows that as a consequence of Lemma 3, the agents will never

seriously lie.¹⁶

Lemma 4 $(s,\theta)\in F^{\infty}$ implies $s_i(\mathcal{H}_i^{\emptyset})^l «_i \theta_i$ for all $l\in\{1,\dots,L\}$ and all $i\in I$.

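Proof. Suppose the lemma is false. Pick $i\in I$, $\mu_i\in\Phi_i^{\infty}$ and $(s_i,\theta_i)\in\rho_i(\mu_i)$ such that $\neg\bigl(s_i(\mathcal{H}_i^{\emptyset})^m «_i \theta_i\bigr)$, where m is the minimal element in $\{1,\dots,L\}$ for which there are $i\in I$ and $(\hat s_i,\hat\theta_i)\in F_i^{\infty}$ such that $\neg\bigl(\hat s_i(\mathcal{H}_i^{\emptyset})^m «_i \hat\theta_i\bigr)$.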

As a first case, suppose that i believes that none of his opponents will seriously lie, that is, suppose that for all $(s_{-i},\theta_{-i})\in\Sigma_{-i}$, $\mu_i((s_{-i},\theta_{-i})\mid\mathcal{H}_i^{\emptyset})>0$ implies $s_j(\mathcal{H}_j^{\emptyset})^l «_j \theta_j$ for all $l\in\{1,\dots,L\}$ and all $j\neq i$. Then, given the beliefs $\mu_i$, the strategy $s_i'$ defined by $s_i'(\mathcal{H}_i^{\emptyset}) = (\theta_i,\dots,\theta_i)$ and $s_i'([\theta_i,\mathcal{H}_i^*]) = s_i([s_i(\mathcal{H}_i^{\emptyset}),\mathcal{H}_i^*])$ for all $[\theta_i,\mathcal{H}_i^*]$ gives strictly higher expected utility to payoff type $\theta_i$ at $\mathcal{H}_i^{\emptyset}$ than $s_i$, contradicting $(s_i,\theta_i)\in\rho_i(\mu_i)$: Because f is epIC and strongly dr-measurable, $s_i'$ maximizes the expected utility from $(1-\delta-\delta^2)\frac{1}{L}\sum_{l=1}^{L} f((\hat s_i(\mathcal{H}_i^{\emptyset})^l)_{i\in I})$ in $S_i$.¹⁷ The strategies $s_i$ and $s_i'$ yield the same expected utility from $\delta C^*(\zeta^*(\varphi(\hat s)))$. For any profile $(s_{-i},\theta_{-i})$ that i expects with strictly positive probability we have $s\in R^{\infty}(\theta)$, and Lemma 3 implies that the terminal history induced by both $\varphi(s)$ and $(\varphi_i(s_i'),(\varphi_j(s_j))_{j\neq i})$ is in $H^*(R^{*,\infty}(\theta))$. Hence for any such $(s_{-i},\theta_{-i})$, $r_i(\zeta(s_i',s_{-i})) = z_i$ while $r_i(\zeta(s)) = \bar y$. Finally, $r_j(\zeta(s_i',s_{-i})) = r_j(\zeta(s_i,s_{-i})) = z_j$ for any $j\neq i$ and any $(s_{-i},\theta_{-i})$ that i expects with strictly positive probability.

¹⁶ If one uses a mechanism with slightly more complicated rewards, one can even show that the agents will never announce payoff types that are strategically distinguishable from their true payoff types (see Müller, 2010).

As a second case, suppose that the set

$$\bigl\{\, l\in\{1,\dots,L\} : \exists (s_{-i},\theta_{-i})\in\Sigma_{-i},\ \exists j\neq i \text{ such that } \mu_i((s_{-i},\theta_{-i})\mid\mathcal{H}_i^{\emptyset})>0 \text{ and } \neg\bigl(s_j(\mathcal{H}_j^{\emptyset})^l «_j \theta_j\bigr) \,\bigr\}$$

is nonempty and n its minimum. Then $n\geq m$ is the smallest l for which i expects some $j\neq i$ to seriously lie. Define the strategy $s_i'$ by $s_i'(\mathcal{H}_i^{\emptyset}) = (\theta_i,\dots,\theta_i,s_i(\mathcal{H}_i^{\emptyset})^{n+1},\dots,s_i(\mathcal{H}_i^{\emptyset})^{L})$ and $s_i'([\theta_i,\mathcal{H}_i^*]) = s_i([s_i(\mathcal{H}_i^{\emptyset}),\mathcal{H}_i^*])$ for all $[\theta_i,\mathcal{H}_i^*]$. Then given $\mu_i$, $s_i'$ gives strictly higher expected utility to $\theta_i$ at $\mathcal{H}_i^{\emptyset}$ than $s_i$, contradicting $(s_i,\theta_i)\in\rho_i(\mu_i)$: Because f is epIC and strongly dr-measurable, $s_i'$ maximizes the expected utility from $(1-\delta-\delta^2)\frac{1}{L}\sum_{l=1}^{n-1} f((\hat s_i(\mathcal{H}_i^{\emptyset})^l)_{i\in I})$ in $S_i$. Strategy $s_i'$ yields the same expected utility as $s_i$ from $(1-\delta-\delta^2)\frac{1}{L}\sum_{l=n+1}^{L} f((\hat s_i(\mathcal{H}_i^{\emptyset})^l)_{i\in I}) + \delta C^*(\zeta^*(\varphi(\hat s)))$. Since agent i seriously lies for the “first” time “later” (if at all) under $s_i'$ than under $s_i$, agent i weakly prefers $r_j(\zeta(s_i',s_{-i}))$ over $r_j(\zeta(s_i,s_{-i}))$ for any $j\neq i$ and any $s_{-i}\in S_{-i}$. With probability $p>0$ agent i believes in profiles $(s_{-i},\theta_{-i})$ such that $\neg\bigl(s_j(\mathcal{H}_j^{\emptyset})^n «_j \theta_j\bigr)$ for some $j\neq i$. For such $(s_{-i},\theta_{-i})$, $r_i(\zeta(s_i',s_{-i})) = z_i$ and $r_i(\zeta(s)) = \bar y$. By Lemma 1 b) and (2), the expected utility difference between $z_i$ and $\bar y$ strictly outweighs the possible expected utility loss from playing $s_i'$ instead of $s_i$ in the n-th direct mechanism $(1-\delta-\delta^2)\frac{1}{L} f((\hat s_i(\mathcal{H}_i^{\emptyset})^n)_{i\in I})$. With probability $1-p$, i expects no j to seriously lie in his n-th announcement of his payoff type. In this case, as $s_i$ prescribes a serious lie in the m-th submission and hence leads to $r_i(\zeta(s_i,s_{-i})) = \bar y$ for any $s_{-i}$ under consideration, agent i expects $s_i'$ to lead to no worse reward than $s_i$. Moreover, in this case, because f is epIC and strongly dr-measurable, i believes that $s_i'$ will be at least as good as $s_i$ with respect to the term $(1-\delta-\delta^2)\frac{1}{L} f((\hat s_i(\mathcal{H}_i^{\emptyset})^n)_{i\in I})$.

By strong dr-measurability of f , θj′ «j θj for all j ∈ I implies f (θ) = f (θ ′ ), for any

θ, θ ′ ∈ Θ. Therefore, by Lemma 4, (s, θ) ∈ F ∞ implies

kC(ζ(s)) − f (θ)k ≤ δkC ∗ (ζ ∗ (ϕ(s))) − f (θ)k + δ2 k

√

1X