Gaussian Stochastic Processes - Engr207b: Linear Control Systems 2


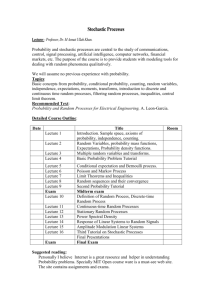

14 - Gaussian Stochastic Processes

• Linear systems driven by IID noise
• Evolution of mean and covariance
• Example: mass-spring system
• Steady-state behavior
• Stochastic processes
• Stationary processes
• State-space formulae
• Autocovariance
• Example: low-pass filter
• Systems on bi-infinite time
• Fourier series and the Z-transform
• The power spectral density

S. Lall, Stanford 2011.02.24.01

Linear systems driven by IID noise

consider the linear dynamical system

$$x(t+1) = Ax(t) + Bu(t) + v(t)$$

with
• the input $u(0), u(1), \dots$ is not random
• the disturbance $v(0), v(1), v(2), \dots$ is white Gaussian noise, with $\mathbf{E}\, v(t) = \mu_v(t)$ and $\operatorname{cov} v(t) = \Sigma_v$
• the initial state is random, $x(0) \sim \mathcal{N}(\mu_x(0), \Sigma_x(0))$, and independent of $v(t)$ for all $t$

view this as stochastic simulation of the system
• what are the statistical properties (mean and covariance) of $x(t)$?

Evolution of Mean and Covariance

we have $x(t+1) = Ax(t) + Bu(t) + v(t)$

taking the expectation of both sides, we have, as before,

$$\mu_x(t+1) = A\mu_x(t) + Bu(t) + \mu_v(t)$$

since $x(t)$ depends only on $x(0), v(0), \dots, v(t-1)$, it is independent of $v(t)$, and $Bu(t)$ is deterministic; so taking the covariance of both sides gives

$$\Sigma_x(t+1) = A\Sigma_x(t)A^T + \Sigma_v$$

i.e., the state covariance $\Sigma_x(t) = \operatorname{cov}(x(t))$ obeys a Lyapunov recursion

State Covariance

The solution to the Lyapunov recursion is

$$\Sigma_x(t) = A^t\Sigma_x(0)(A^t)^T + \sum_{k=0}^{t-1} A^k\Sigma_v(A^k)^T$$

because $x(t) = A^t x(0) + \sum_{k=0}^{t-1} A^{t-1-k}\big(Bu(k) + v(k)\big)$, where the $Bu(k)$ terms are deterministic and $x(0), v(0), \dots, v(t-1)$ are independent, so the covariance of the state is

$$\Sigma_x(t) = A^t\Sigma_x(0)(A^t)^T + \begin{bmatrix} A^{t-1} & \cdots & A & I \end{bmatrix}
\begin{bmatrix} \Sigma_v & & \\ & \ddots & \\ & & \Sigma_v \end{bmatrix}
\begin{bmatrix} (A^{t-1})^T \\ \vdots \\ A^T \\ I \end{bmatrix}
= A^t\Sigma_x(0)(A^t)^T + \sum_{k=0}^{t-1} A^k\Sigma_v(A^k)^T$$
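The mean and Lyapunov recursions are easy to check numerically. The sketch below is plain Python (no NumPy, to stay self-contained); the matrices `A`, `B`, `Sigma0`, `Sigma_v` and the input sequence are hypothetical example values, not taken from the notes. It runs both recursions for 20 steps and compares $\Sigma_x(20)$ with the closed-form sum.

```python
# Minimal sketch: propagate the mean and covariance of
#   x(t+1) = A x(t) + B u(t) + v(t),  E v(t) = mu_v,  cov v(t) = Sigma_v,  x(0) ~ N(mu0, Sigma0)
# and compare the covariance with the closed-form solution of the Lyapunov recursion.

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def transpose(X):
    return [list(row) for row in zip(*X)]

def add(X, Y):
    return [[p + q for p, q in zip(rx, ry)] for rx, ry in zip(X, Y)]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def propagate(A, B, mu0, Sigma0, mu_v, Sigma_v, u):
    """Run the mean and Lyapunov recursions for t = 0, ..., len(u)."""
    mus, Sigmas = [mu0], [Sigma0]
    for ut in u:
        mus.append(add(add(matmul(A, mus[-1]), matmul(B, [[ut]])), mu_v))
        Sigmas.append(add(matmul(matmul(A, Sigmas[-1]), transpose(A)), Sigma_v))
    return mus, Sigmas

def closed_form_cov(A, Sigma0, Sigma_v, t):
    """A^t Sigma0 (A^t)^T + sum_{k=0}^{t-1} A^k Sigma_v (A^k)^T."""
    Ak = identity(len(A))                      # holds A^k, starting at k = 0
    S = [[0.0] * len(A) for _ in A]
    for _ in range(t):
        S = add(S, matmul(matmul(Ak, Sigma_v), transpose(Ak)))
        Ak = matmul(Ak, A)
    return add(S, matmul(matmul(Ak, Sigma0), transpose(Ak)))   # here Ak = A^t

# Hypothetical example data (not from the notes)
A = [[0.9, 0.2], [0.0, 0.7]]
B = [[0.0], [1.0]]
mu0 = [[1.0], [0.0]]
Sigma0 = [[0.5, 0.0], [0.0, 0.5]]
mu_v = [[0.0], [0.0]]
Sigma_v = [[0.1, 0.0], [0.0, 0.2]]
u = [1.0] * 20

mus, Sigmas = propagate(A, B, mu0, Sigma0, mu_v, Sigma_v, u)
exact = closed_form_cov(A, Sigma0, Sigma_v, 20)
err = max(abs(p - q) for rp, rq in zip(Sigmas[-1], exact) for p, q in zip(rp, rq))
print(err)   # round-off level
```

Because $Bu(t)$ is deterministic it only shifts the mean, which is why `closed_form_cov` needs neither `B` nor `u`.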
Example: Mass-Spring System

[Figure: masses $m_1, m_2, m_3$ with springs $k_1, k_2, k_3$ and dampers $b_1, b_2, b_3$]

masses $m_i = 1$, springs $k_i = 2$, dampers $b_i = 3$

$$\dot x(t) = A_c x(t) + B_{c1} w(t) + B_{c2} u(t)$$

where

$$A_c = \begin{bmatrix}
0 & 0 & 0 & 1 & 0 & 0 \\
0 & 0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 0 & 0 & 1 \\
-4 & 2 & 0 & -6 & 3 & 0 \\
2 & -4 & 2 & 3 & -6 & 3 \\
0 & 2 & -2 & 0 & 3 & -3
\end{bmatrix}, \qquad
B_{c1} = \begin{bmatrix}
0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1
\end{bmatrix}, \qquad
B_{c2} = \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 1 \end{bmatrix}$$

so $x_1, x_2, x_3$ are the positions of the masses and $x_4, x_5, x_6$ their velocities
• $u(t)$ is a deterministic force applied to mass 3
• $w(t) \in \mathbb{R}^3$ is random forcing, $w(t) \sim \mathcal{N}(0, 0.2I)$, applied to all masses

discretization

$$x(t+1) = Ax(t) + B_1 w(t) + B_2 u(t)$$

let $v(t) = B_1 w(t)$, so

$$\mathbf{E}\, v(t) = 0, \qquad \operatorname{cov} v(t) = B_1\Sigma_w B_1^T$$

and we have

$$x(t+1) = Ax(t) + B_2 u(t) + v(t)$$

the inputs are

[Figure: deterministic input $u(t)$ and first component $w_1(t)$ of the random forcing, for $0 \le t \le 400$]

simulate three things
• the evolution of the mean, $\mu_x(t+1) = A\mu_x(t) + B_2 u(t) + \mu_v(t)$
• the evolution of the covariance, $\Sigma_x(t+1) = A\Sigma_x(t)A^T + \Sigma_v$
• the state trajectory for a particular realization of the random process

at each time $t$ plot
• the actual state $x(t)$ in this particular run
• the mean state $\mu_{x_i}(t)$
• the 90% confidence interval $[\mu_{x_i}(t) - h(t),\ \mu_{x_i}(t) + h(t)]$, where as usual

$$h(t) = \Big( \big(\Sigma_x(t)\big)_{ii}\, F^{-1}_{\chi^2_1}(0.9) \Big)^{1/2}$$

Example: Stochastic Simulation of Mass-Spring System

[Figure: position and velocity of mass 1 for $0 \le t \le 400$; each panel shows the mean of the state, the 90% confidence interval, and one realization]
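As a sketch of the confidence-interval computation, the code below treats one state coordinate as a scalar system with hypothetical parameters (`a`, `b`, `var_v`, `mu0`, `var0`, and the input `u` are illustrative, not the mass-spring values). It computes $h(t)$ using $F^{-1}_{\chi^2_1}(p) = \big(\Phi^{-1}((1+p)/2)\big)^2$, where $\Phi$ is the standard normal CDF, and estimates by Monte Carlo how often a realization lands inside $[\mu(t) - h(t), \mu(t) + h(t)]$; the fraction should come out close to 0.9.

```python
import random
from statistics import NormalDist

def chi2_1_inv(p):
    """Inverse CDF of chi-square with 1 degree of freedom: if Z ~ N(0, 1), then Z**2 ~ chi^2_1."""
    return NormalDist().inv_cdf((1.0 + p) / 2.0) ** 2

def half_width(var_ii, p=0.9):
    """h = (var_ii * F^{-1}_{chi^2_1}(p))^{1/2}; var_ii plays the role of (Sigma_x(t))_ii."""
    return (var_ii * chi2_1_inv(p)) ** 0.5

def empirical_coverage(a, b, var_v, mu0, var0, u, runs=5000, p=0.9, seed=0):
    """Scalar system x(t+1) = a x(t) + b u(t) + v(t), v(t) ~ N(0, var_v), x(0) ~ N(mu0, var0).
    Returns the fraction of samples x(t), over all runs and times, inside [mu(t) - h(t), mu(t) + h(t)]."""
    mus, variances = [mu0], [var0]
    for ut in u:                                   # mean and variance recursions
        mus.append(a * mus[-1] + b * ut)
        variances.append(a * a * variances[-1] + var_v)
    hs = [half_width(s, p) for s in variances]

    rng = random.Random(seed)
    hits = total = 0
    for _ in range(runs):
        x = rng.gauss(mu0, var0 ** 0.5)            # x(0)
        for t in range(len(u) + 1):
            if t > 0:                              # x(t) from x(t-1)
                x = a * x + b * u[t - 1] + rng.gauss(0.0, var_v ** 0.5)
            hits += abs(x - mus[t]) <= hs[t]
            total += 1
    return hits / total

print(round(chi2_1_inv(0.9), 4))   # about 2.7055
print(empirical_coverage(a=0.95, b=0.1, var_v=0.2, mu0=0.0, var0=0.1, u=[1.0] * 40))
```

Since each $x(t)$ is exactly Gaussian here, the interval holds 90% of the probability at every $t$; the Monte Carlo fraction differs from 0.9 only by sampling error.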
Example: Ellipsoids

[Figure: ellipsoids in the $(x_1, x_4)$ plane at times $t = 1, 6, 11, \dots, 56$]

Steady-State Behavior

the Lyapunov recursion is the same as the one we used for controllability analysis

if $A$ is stable (all eigenvalues strictly inside the unit circle), then the limit

$$\Sigma_{x\mathrm{ss}} = \lim_{t\to\infty} \Sigma_x(t) = \sum_{k=0}^{\infty} A^k\Sigma_v(A^k)^T$$

exists; $\Sigma_{x\mathrm{ss}}$ is called the steady-state covariance

as in controllability, this is the unique solution to the Lyapunov equation

$$\Sigma_{x\mathrm{ss}} - A\Sigma_{x\mathrm{ss}}A^T = \Sigma_v$$

if $\Sigma_v = BB^T$ then $\Sigma_{x\mathrm{ss}}$ is the controllability Gramian

Stochastic processes

A stochastic process is an infinitely long random vector. It has mean and covariance

$$\mathbf{E}\begin{bmatrix} x(0) \\ x(1) \\ x(2) \\ \vdots \end{bmatrix} = \begin{bmatrix} \mu_x(0) \\ \mu_x(1) \\ \mu_x(2) \\ \vdots \end{bmatrix}, \qquad
\operatorname{cov}\begin{bmatrix} x(0) \\ x(1) \\ x(2) \\ \vdots \end{bmatrix} = \begin{bmatrix} \Sigma_x(0,0) & \Sigma_x(0,1) & \cdots \\ \Sigma_x(1,0) & \Sigma_x(1,1) & \cdots \\ \Sigma_x(2,0) & \Sigma_x(2,1) & \cdots \\ \vdots & \vdots & \ddots \end{bmatrix}$$

• For each $\omega \in \Omega$, the random variable $x$ returns the entire sequence $x(0), x(1), \dots$
• If $x(0), x(1), \dots$ are Gaussian and IID, then $x$ is called white Gaussian noise (WGN). In this case, $\Sigma_x(i,j) = 0$ if $i \neq j$

Generating stochastic processes

Suppose $v(0), v(1), \dots$ is white Gaussian noise, with covariance $\operatorname{cov}(v(t)) = I$, and

$$x(t+1) = Ax(t) + Bv(t), \qquad y(t) = Cx(t), \qquad x(0) = 0$$

We have $y = Tv$, where $T$ is the Toeplitz matrix

$$\begin{bmatrix} y(0) \\ y(1) \\ y(2) \\ y(3) \\ \vdots \end{bmatrix} =
\begin{bmatrix} 0 & & & & \\ H(1) & 0 & & & \\ H(2) & H(1) & 0 & & \\ H(3) & H(2) & H(1) & 0 & \\ \vdots & & & & \ddots \end{bmatrix}
\begin{bmatrix} v(0) \\ v(1) \\ v(2) \\ v(3) \\ \vdots \end{bmatrix}$$

and $H(0), H(1), \dots$ is the impulse response

$$H(t) = \begin{cases} CA^{t-1}B & \text{if } t > 0 \\ 0 & \text{otherwise} \end{cases}$$
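A minimal way to compute the steady-state covariance $\Sigma_{x\mathrm{ss}}$ for a stable $A$ is to iterate the Lyapunov recursion from zero, which accumulates the partial sums of $\sum_k A^k\Sigma_v(A^k)^T$. The sketch below is illustrative only; `A` and `B` are hypothetical, and with $\Sigma_v = BB^T$ the result is the controllability Gramian. It checks the residual of $\Sigma_{x\mathrm{ss}} - A\Sigma_{x\mathrm{ss}}A^T = \Sigma_v$.

```python
def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def transpose(X):
    return [list(row) for row in zip(*X)]

def steady_state_cov(A, Sigma_v, tol=1e-13, max_iter=100_000):
    """Iterate S <- A S A^T + Sigma_v from S = 0 (partial sums of sum_k A^k Sigma_v (A^k)^T).
    Assumes A is stable; raises if the iterates have not settled after max_iter steps."""
    n = len(A)
    At = transpose(A)
    S = [[0.0] * n for _ in range(n)]
    for _ in range(max_iter):
        ASAt = matmul(matmul(A, S), At)
        S_new = [[ASAt[i][j] + Sigma_v[i][j] for j in range(n)] for i in range(n)]
        change = max(abs(S_new[i][j] - S[i][j]) for i in range(n) for j in range(n))
        S = S_new
        if change < tol:
            return S
    raise RuntimeError("iteration did not converge; is A stable?")

A = [[0.9, 0.2], [0.0, 0.7]]          # hypothetical stable A (eigenvalues 0.9 and 0.7)
B = [[0.0], [1.0]]                    # hypothetical B
Sigma_v = matmul(B, transpose(B))     # Sigma_v = B B^T, so S is the controllability Gramian
S = steady_state_cov(A, Sigma_v)

# residual of the Lyapunov equation S - A S A^T - Sigma_v
ASAt = matmul(matmul(A, S), transpose(A))
residual = max(abs(S[i][j] - ASAt[i][j] - Sigma_v[i][j]) for i in range(2) for j in range(2))
print(S)
print(residual)
```

Fixed-point iteration is the simplest choice for a small example; for larger problems a dedicated discrete Lyapunov solver (for example SciPy's `scipy.linalg.solve_discrete_lyapunov`) would normally be preferred.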
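The Toeplitz description $y = Tv$ can be checked on the scalar system $x(t+1) = 0.95\,x(t) + v(t)$, $y(t) = x(t)$, $x(0) = 0$ that the notes use in their example of $W = TT^T$. The sketch builds $T$ from the impulse response, compares $Tv$ with a direct simulation, and evaluates entries of $W = TT^T$ along one diagonal. For this system a short calculation gives $W(i+j, i) = 0.95^j(1 - 0.95^{2i})/(1 - 0.95^2)$, which becomes constant in $i$ as $i$ grows. The function names are illustrative.

```python
import random

def impulse_response(a, b, c, n):
    """H(0), ..., H(n-1) for x(t+1) = a x(t) + b v(t), y(t) = c x(t): H(t) = c a^(t-1) b for t > 0."""
    return [0.0] + [c * a ** (t - 1) * b for t in range(1, n)]

def toeplitz(H):
    """Lower-triangular Toeplitz matrix with T[i][j] = H(i - j) (zero above the diagonal)."""
    n = len(H)
    return [[H[i - j] if i >= j else 0.0 for j in range(n)] for i in range(n)]

a, b, c, n = 0.95, 1.0, 1.0, 200
T = toeplitz(impulse_response(a, b, c, n))

# y = T v versus a direct simulation from x(0) = 0, with unit-variance noise
rng = random.Random(1)
v = [rng.gauss(0.0, 1.0) for _ in range(n)]
x, y_sim = 0.0, []
for t in range(n):
    y_sim.append(c * x)        # y(t) = c x(t)
    x = a * x + b * v[t]       # x(t+1) = a x(t) + b v(t)
y_toep = [sum(T[i][j] * v[j] for j in range(i + 1)) for i in range(n)]
max_diff = max(abs(p - q) for p, q in zip(y_sim, y_toep))

def W(i, j):
    """Entry (i, j) of W = T T^T, which is cov(y) when cov(v(t)) = 1."""
    return sum(T[i][k] * T[j][k] for k in range(min(i, j) + 1))

print(max_diff)
print([round(W(i + 5, i), 4) for i in (10, 50, 100, 150)])   # settles as i grows
print(round(a ** 5 / (1 - a * a), 4))                         # limiting value along this diagonal
```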
Example

Let $W = \operatorname{cov}(y) = TT^T$. For example, suppose

$$x(t+1) = 0.95\,x(t) + v(t), \qquad y(t) = x(t), \qquad x(0) = 0$$

An image plot of $W(i,j)$ is below. Notice that it becomes constant along diagonals. Also plotted is $R(i) = W(i+100, 100)$.

[Figure: image plot of $W(i,j)$ for $1 \le i, j \le 200$, and plot of $R(i)$ for $-100 \le i \le 100$]

The Output Covariance

The covariance of the output, $W = \operatorname{cov}(y)$, satisfies

$$W(i,j) = \sum_{k=0}^{\infty} T_{ik}(T_{jk})^T = \sum_{k=0}^{\infty} H(i-k)\,H(j-k)^T$$

since $T(i,j) = H(i-j)$. Therefore, evaluating $W$ along the $j$'th diagonal (substituting $p = k - i$),

$$W(i+j, i) = \sum_{k=0}^{\infty} H(i+j-k)\,H(i-k)^T = \sum_{p=-i}^{\infty} H(j-p)\,H^T(-p)$$

and so, provided the sum converges (for example, when $A$ is stable),

$$\lim_{i\to\infty} W(i+j, i) = \sum_{p=-\infty}^{\infty} H(j-p)\,H^T(-p)$$

Let

$$R(j) = \sum_{p=-\infty}^{\infty} H(j-p)\,H^T(-p)$$

Then we have

$$\lim_{i\to\infty} \operatorname{cov}\begin{bmatrix} y(i) \\ y(i+1) \\ y(i+2) \\ y(i+3) \\ \vdots \end{bmatrix} =
\begin{bmatrix}
R(0) & R(-1) & R(-2) & R(-3) & \cdots \\
R(1) & R(0) & R(-1) & R(-2) & \cdots \\
R(2) & R(1) & R(0) & R(-1) & \cdots \\
R(3) & R(2) & R(1) & R(0) & \cdots \\
\vdots & & & & \ddots
\end{bmatrix}$$

• We must have $R(i) = R(-i)^T$
• As $i$ becomes large, the output covariance $W$ becomes Toeplitz

Asymptotic Stationarity

A Gaussian pdf is determined by its mean and covariance, and here the mean is zero (since $x(0) = 0$ and $v$ has zero mean). This means that the pdf of

$$\begin{bmatrix} y(i) \\ y(i+1) \\ y(i+2) \\ \vdots \\ y(i+N) \end{bmatrix}$$

tends to a limit as $i$ becomes large. The output process is therefore called asymptotically stationary.

Stationary Stochastic Processes

A stochastic process $y(0), y(1), \dots$ is called stationary if for every $i$ and every $N > 0$ the pdf of

$$\begin{bmatrix} y(i) \\ y(i+1) \\ y(i+2) \\ \vdots \\ y(i+N) \end{bmatrix}$$

is independent of $i$.
• If $y(0), y(1), \dots$ is stationary, then $W(i,j) = \operatorname{cov}\big(y(i), y(j)\big) = R(i-j)$ for some sequence of matrices $\dots, R(-1), R(0), R(1), \dots$ with $R(-k) = R(k)^T$
• Any segment of the signal $y(i), \dots, y(i+N)$ has the same statistical properties as any other segment of the same length
• $R$ is called the autocovariance of the process

State-Space Formulae

Suppose $v(0), v(1), \dots$ is white Gaussian noise, with covariance $\operatorname{cov}(v(t)) = I$, and

$$x(t+1) = Ax(t) + v(t), \qquad y(t) = Cx(t), \qquad x(0) \sim \mathcal{N}(0, \Sigma_x(0))$$

We have

$$\begin{bmatrix} x(0) \\ x(1) \\ x(2) \\ x(3) \\ \vdots \end{bmatrix} =
\begin{bmatrix} 0 & & & & \\ I & 0 & & & \\ A & I & 0 & & \\ A^2 & A & I & 0 & \\ \vdots & & & & \ddots \end{bmatrix}
\begin{bmatrix} v(0) \\ v(1) \\ v(2) \\ v(3) \\ \vdots \end{bmatrix} +
\begin{bmatrix} I \\ A \\ A^2 \\ A^3 \\ \vdots \end{bmatrix} x(0)
= P\begin{bmatrix} v(0) \\ v(1) \\ \vdots \end{bmatrix} + Jx(0)$$

Therefore $x(0), x(1), x(2), \dots$ is a stochastic process with covariance

$$\operatorname{cov}\begin{bmatrix} x(0) \\ x(1) \\ \vdots \end{bmatrix} = P\begin{bmatrix} \Sigma_v & & \\ & \Sigma_v & \\ & & \ddots \end{bmatrix}P^T + J\Sigma_x(0)J^T$$

where $\Sigma_v = I$ here (the formulas hold for any noise covariance $\Sigma_v$). Hence for $i \ge j$

$$\begin{aligned}
\operatorname{cov}\big(x(i), x(j)\big) &= \sum_{k=0}^{j-1} A^{i-1-k}\Sigma_v(A^{j-1-k})^T + A^i\Sigma_x(0)(A^j)^T \\
&= A^{i-j}\Big(\sum_{k=0}^{j-1} A^{j-1-k}\Sigma_v(A^{j-1-k})^T + A^j\Sigma_x(0)(A^j)^T\Big) \\
&= A^{i-j}\Sigma_x(j)
\end{aligned}$$

similarly, for $i \le j$ we have $\operatorname{cov}\big(x(i), x(j)\big) = \Sigma_x(i)(A^{j-i})^T$.

State Covariance

Hence we have the state covariance

$$\operatorname{cov}\begin{bmatrix} x(0) \\ x(1) \\ x(2) \\ x(3) \\ \vdots \end{bmatrix} =
\begin{bmatrix}
\Sigma_x(0) & \Sigma_x(0)A^T & \Sigma_x(0)(A^2)^T & \Sigma_x(0)(A^3)^T & \cdots \\
A\Sigma_x(0) & \Sigma_x(1) & \Sigma_x(1)A^T & \Sigma_x(1)(A^2)^T & \cdots \\
A^2\Sigma_x(0) & A\Sigma_x(1) & \Sigma_x(2) & \Sigma_x(2)A^T & \cdots \\
A^3\Sigma_x(0) & A^2\Sigma_x(1) & A\Sigma_x(2) & \Sigma_x(3) & \cdots \\
\vdots & & & & \ddots
\end{bmatrix}$$

As $t \to \infty$ we have $\Sigma_x(t) \to \Sigma_{x\mathrm{ss}}$, so

$$\lim_{t\to\infty} \operatorname{cov}\begin{bmatrix} x(t) \\ x(t+1) \\ x(t+2) \\ x(t+3) \\ \vdots \end{bmatrix} =
\begin{bmatrix}
\Sigma_{x\mathrm{ss}} & \Sigma_{x\mathrm{ss}}A^T & \Sigma_{x\mathrm{ss}}(A^2)^T & \Sigma_{x\mathrm{ss}}(A^3)^T & \cdots \\
A\Sigma_{x\mathrm{ss}} & \Sigma_{x\mathrm{ss}} & \Sigma_{x\mathrm{ss}}A^T & \Sigma_{x\mathrm{ss}}(A^2)^T & \cdots \\
A^2\Sigma_{x\mathrm{ss}} & A\Sigma_{x\mathrm{ss}} & \Sigma_{x\mathrm{ss}} & \Sigma_{x\mathrm{ss}}A^T & \cdots \\
A^3\Sigma_{x\mathrm{ss}} & A^2\Sigma_{x\mathrm{ss}} & A\Sigma_{x\mathrm{ss}} & \Sigma_{x\mathrm{ss}} & \cdots \\
\vdots & & & & \ddots
\end{bmatrix}$$

The Autocovariance

The autocovariance of the output $y$ is

$$R(i) = CA^i\Sigma_{x\mathrm{ss}}C^T \quad \text{for } i \ge 0$$

(with $R(-i) = R(i)^T$), where, for stable $A$, $\Sigma_{x\mathrm{ss}}$ is the unique solution to the Lyapunov equation

$$\Sigma_{x\mathrm{ss}} - A\Sigma_{x\mathrm{ss}}A^T = I$$

Example: Low-Pass Filter

Let's look at the low-pass filter

$$\hat G(z) = \frac{c}{(z - e^{-\lambda})^3}$$

where $\lambda = 0.1$.
• The breakpoint frequency is $\lambda = 0.1$ rad/s, i.e., $1/(20\pi)$ Hz, when the sampling period is $h = 1$.
• The constant $c$ is chosen such that $\hat G(1) = 1$, i.e., $c = (1 - e^{-\lambda})^3$.

[Figure: magnitude of $\hat G$ versus frequency on log-log axes, frequency from $10^{-3}$ to $10^{1}$, magnitude from $10^{-4}$ to $10^{0}$]
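The notes do not give a state-space realization of the low-pass filter, so the sketch below assumes one for illustration: a cascade of three first-order sections with pole $a = e^{-\lambda}$, i.e. $x_1(t+1) = a x_1(t) + v(t)$, $x_2(t+1) = a x_2(t) + x_1(t)$, $x_3(t+1) = a x_3(t) + x_2(t)$, $y(t) = c\,x_3(t)$. Because $v$ enters through $B = (1, 0, 0)$, the Lyapunov equation becomes $\Sigma_{x\mathrm{ss}} - A\Sigma_{x\mathrm{ss}}A^T = BB^T$, and then $R(i) = CA^i\Sigma_{x\mathrm{ss}}C^T$. The sketch checks that $\hat G(1) = 1$, that the realization has the impulse response of $c/(z-a)^3$ (namely $H(t) = c\binom{t-1}{2}a^{t-3}$ for $t \ge 3$), and that $R(0)$ agrees with $\sum_t H(t)^2$.

```python
import math

lam = 0.1
a = math.exp(-lam)            # pole of G(z) = c / (z - a)^3
c = (1.0 - a) ** 3            # chosen so that the DC gain G(1) equals 1

def G(z):
    return c / (z - a) ** 3

# Hypothetical realization (an assumption, not given in the notes): three first-order
# sections in cascade, each with pole a:
#   x1(t+1) = a x1(t) + v(t),  x2(t+1) = a x2(t) + x1(t),  x3(t+1) = a x3(t) + x2(t),  y(t) = c x3(t)
A = [[a, 0.0, 0.0], [1.0, a, 0.0], [0.0, 1.0, a]]
B = [1.0, 0.0, 0.0]
C = [0.0, 0.0, c]

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def dot(x, y):
    return sum(p * q for p, q in zip(x, y))

def H_state_space(t):
    """Impulse response C A^(t-1) B of the realization (0 for t <= 0)."""
    if t <= 0:
        return 0.0
    x = B[:]
    for _ in range(t - 1):
        x = matvec(A, x)
    return dot(C, x)

def H_series(t):
    """Impulse response from expanding c z^-3 (1 - a z^-1)^-3: c * C(t-1, 2) * a^(t-3) for t >= 3."""
    return c * math.comb(t - 1, 2) * a ** (t - 3) if t >= 3 else 0.0

def steady_state_cov(A, Q, iters=2000):
    """Approximate sum_k A^k Q (A^k)^T by iterating S <- A S A^T + Q from S = 0 (A stable)."""
    n = len(A)
    At = [list(r) for r in zip(*A)]
    S = [[0.0] * n for _ in range(n)]
    for _ in range(iters):
        ASAt = matmul(matmul(A, S), At)
        S = [[ASAt[i][j] + Q[i][j] for j in range(n)] for i in range(n)]
    return S

# unit-variance input entering through B, so the noise covariance is B B^T
Sigma_ss = steady_state_cov(A, [[bi * bj for bj in B] for bi in B])

def R(i):
    """Output autocovariance R(i) = C A^i Sigma_ss C^T for i >= 0."""
    w = matvec(Sigma_ss, C)       # Sigma_ss C^T
    for _ in range(i):
        w = matvec(A, w)          # A^i Sigma_ss C^T
    return dot(C, w)

print(G(1.0))
print(max(abs(H_state_space(t) - H_series(t)) for t in range(60)))
print(R(0), R(20))
```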
Example: Low-Pass Filter

The input, output, autocorrelation, and breakpoint frequency are below.

[Figure: input and output for $0 \le t \le 500$; autocorrelation for lags $-100$ to $100$; breakpoint frequency]
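To reproduce the flavor of this simulation, the sketch below drives a hypothetical cascade realization of $c/(z - e^{-\lambda})^3$ (three first-order sections, an assumption since the notes give no realization) with unit-variance white Gaussian noise, discards a burn-in period, and compares the sample autocovariance at a few lags with $R(i) = \sum_{k \ge 0} H(k+i)H(k)$, the scalar form of the impulse-response formula for $R(j)$. The sample length, seed, burn-in, and lags are arbitrary choices, and the estimates agree with the theory only up to statistical error.

```python
import math
import random

lam = 0.1
a = math.exp(-lam)
c = (1.0 - a) ** 3                      # so that G(1) = c / (1 - a)^3 = 1

def H(t):
    """Impulse response of G(z) = c / (z - a)^3: c * C(t-1, 2) * a^(t-3) for t >= 3, else 0."""
    return c * math.comb(t - 1, 2) * a ** (t - 3) if t >= 3 else 0.0

def R_theory(i, terms=2000):
    """Scalar form of R(i) = sum_p H(i - p) H(-p), i.e. sum_{k>=0} H(k + i) H(k), for i >= 0."""
    return sum(H(k + i) * H(k) for k in range(terms))

def simulate(n, seed=0, burn_in=1000):
    """Drive the filter with unit-variance white Gaussian noise; return n outputs after a burn-in."""
    rng = random.Random(seed)
    x1 = x2 = x3 = 0.0
    ys = []
    for t in range(n + burn_in):
        if t >= burn_in:
            ys.append(c * x3)            # y(t) = c x3(t)
        v = rng.gauss(0.0, 1.0)
        # hypothetical cascade realization: each section has pole a
        x1, x2, x3 = a * x1 + v, a * x2 + x1, a * x3 + x2
    return ys

def sample_autocov(y, i):
    """Biased sample autocovariance at lag i >= 0."""
    n = len(y)
    m = sum(y) / n
    return sum((y[t + i] - m) * (y[t] - m) for t in range(n - i)) / n

y = simulate(200_000)
for lag in (0, 10, 30, 60):
    print(lag, round(R_theory(lag), 5), round(sample_autocov(y, lag), 5))
```

Longer simulations shrink the gap between the sample and theoretical values roughly like one over the square root of the sample length.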