File Last Written: 12:54 13 Jun 1978 Artificial Intelligence Laboratory


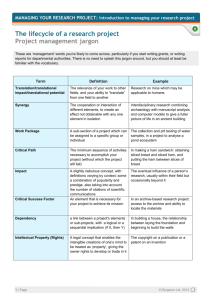

Name: Steve Tappel   Project: 1   Programmer: STT   File Name: SNMOM.XGP[1,STT]
Stanford University Artificial Intelligence Laboratory, Computer Science Department, Stanford, California

SEMANTIC NET MODELS OF HUMAN MEMORY

I. INTRODUCTION

It often happens that an information processing model for some aspect of human behavior closely resembles some state-of-the-art AI technique for building intelligent systems. Since the late 1960s, semantic net models of human long-term memory have been built that use nets very similar to those described in [ref: AIH article on semantic nets as rep'n for knowledge] for AI applications such as intelligent database retrieval and natural language understanding. Familiarity with the basic ideas of semantic nets covered in that article is assumed.

The semantic net models of memory we shall discuss (Ross Quillian's original "semantic memory" net and his later Teachable Language Comprehender; the Human Associative Memory model of John Anderson and Gordon Bower; and the Active Structural Network of the LNR research group) have achieved moderate success in fitting experimental data on human memory performance. All of these models depend on certain generally accepted facts about human memory, so we pause for a brief survey of them.

A partition of human memory into Short Term Memory (STM) and Long Term Memory (LTM) is now well established, based on a mass of experimental results. STM has a very small capacity, about 7 items [ref: Miller, 7 ± 2], and its contents are subject to rapid decay and/or loss through interference unless they are refreshed through a (conscious) process known as rehearsal. Rehearsal also appears to be necessary to transfer items from STM to LTM; various experimental situations yield estimates of 3 to 10 seconds per item as the time required for transfer.
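The two-store picture can be made concrete with a toy simulation. This is only a minimal sketch under stated assumptions, not a model proposed by any of the authors discussed here: STM is a buffer of 7 slots in which a new item displaces the oldest one, and an item is copied into LTM once it has received a fixed amount of rehearsal. The 5-second threshold is an arbitrary value chosen inside the quoted 3 to 10 second range, and the class and method names are hypothetical.

```python
from collections import deque

STM_CAPACITY = 7         # "about 7 items"
TRANSFER_SECONDS = 5.0   # assumed value inside the quoted 3-10 s per item


class TwoStoreMemory:
    """Toy two-store memory: a small STM buffer feeding an unbounded LTM."""

    def __init__(self, capacity=STM_CAPACITY, transfer_seconds=TRANSFER_SECONDS):
        self.stm = deque(maxlen=capacity)  # bounded short-term store
        self.rehearsal = {}                # item -> seconds of rehearsal so far
        self.ltm = set()                   # no size limit, nothing ever decays
        self.transfer_seconds = transfer_seconds

    def perceive(self, item):
        """Enter an item into STM; if STM is full the oldest item is displaced."""
        if item in self.stm:
            self.stm.remove(item)            # re-presentation refreshes the item
        elif len(self.stm) == self.stm.maxlen:
            displaced = self.stm.popleft()   # lost from STM unless already in LTM
            self.rehearsal.pop(displaced, None)
        self.stm.append(item)
        self.rehearsal.setdefault(item, 0.0)

    def rehearse(self, item, seconds):
        """Rehearse an item still in STM; enough rehearsal copies it into LTM."""
        if item not in self.stm:
            return False                     # too late: the item is no longer in STM
        self.rehearsal[item] += seconds
        if self.rehearsal[item] >= self.transfer_seconds:
            self.ltm.add(item)
        return True


mem = TwoStoreMemory()
mem.perceive("cat")
mem.perceive("dog")
mem.rehearse("cat", 6.0)           # long enough to reach LTM
for i in range(7):
    mem.perceive(f"item{i}")       # seven new items displace both "cat" and "dog"
print(sorted(mem.ltm))             # ['cat']
print(mem.rehearse("dog", 6.0))    # False: "dog" was lost before it was rehearsed
```

The sketch shows the two properties the text relies on: displacement from a tiny STM, and rehearsal as the route into an LTM that never loses anything.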
LTM has no known size limit, and it is unclear whether memory traces there, once laid down, ever fade away. It is clear that they often become difficult or impossible for the person to retrieve, and both everyday experience and the protocols obtained in recall experiments attest that a long and devious search may be required to recover a desired memory trace. Yet retrieval from LTM is usually done without conscious effort, even when a quite subtle connection or analogy to current experience is involved. These facts indicate that the organization of knowledge in LTM is complex, and that sophisticated models are called for. The models described in this article are all primarily concerned with how knowledge is represented in LTM and with the processes that encode knowledge into it and retrieve knowledge from it. Anything they say about STM is only incidental.

Early computer simulations of human behavior were aimed chiefly at human problem solving [ref: LT, HPS, etc.], which of course is still a major effort [ref: AIH article on human problem solving]. But while psychologists had done little study of human problem-solving processes before the work of Newell, Simon and others, the study of human memory has a long history. Ideas in the associationist tradition bear a particular resemblance to the recent semantic net models. There have been many varieties of associationist theory, beginning with Aristotle; however, Anderson and Bower give four features which seem to typify associationism:

1. Ideas, sense data, memory nodes, or similar mental elements are associated together in the mind through experience.
2. The ideas can ultimately be decomposed into a basic stock of "simple ideas."
3. The simple ideas are to be identified with elementary, unstructured sensations.
4. Simple, additive rules serve to predict the properties of complex associative configurations from the properties of the underlying simple ideas. [ref: HAM p. 10]

Apparently, none of the associationist theories included any notion of the type-token distinction, by which a single node can stand for an entire complex idea; this distinction was introduced only in the era of computer simulation, when the gross unwieldiness of purely simple-idea-to-simple-idea connections became apparent. Another improvement found necessary was to have several different kinds of association or link, i.e. the notion of a relation between ideas. With a few exceptions, the associationists had held that there is only one kind of link.

To some extent it is clear why computer models of human memory should resemble associationist theories. Structures of nodes ("simple ideas") and links ("associations") are naturally represented in computers, especially if a list-processing language is used. Also, the alternative Gestalt and Reconstructionist theories are notoriously vague about the specific mechanisms underlying memory, which makes them rather difficult to model. Whatever the reason, there has never been a computer model of a Gestalt or Reconstructionist theory of long-term memory.

In reading the system descriptions in the next three sections, it is well to keep in mind a few common features of the models, besides the fact that they are all semantic nets, and a few points of contrast between them.

Common Features

1. The representations are all very language-like. All take exclusively language inputs and have a parser of some kind. It appears that no one is trying to model human visual memory, or other perceptual memory. The reason is evidently that the perceptual codes are not yet known, though for vision at least they are being investigated [ref: Marr]. There is much greater confidence about the internal codes of language.
2. It is difficult to compare the models to human behavior. First, there is the question of conscious (or unconscious?) coding strategies that humans may use, so that a given input may be sent to memory in one of several forms, depending on expectations, whims, and other ill-understood factors. Second, one suspects, and the model-builders generally admit, that human memory probably includes multiple representation schemes, of which the model is at best only one.

Contrasts

3. Different kinds of knowledge are represented: definitions of verbs in terms of more primitive verbs, particular facts, general facts, and procedural knowledge (how to do things).
4. The intended breadth of coverage of the model varies. HAM is quite specific, dealing only with a certain aspect of long term memory; Quillian's memory model is part of a language comprehension system; the ASN is part of a general model of human cognition.

We will return to these points in the Comparisons section. The pioneer in semantic net models of memory was Ross Quillian, to whom both of the later groups acknowledge a debt. We examine his model first.

II. QUILLIAN (unfinished)

III. HAM

PRELIMINARY DISCUSSION

HAM (Human Associative Memory) is the name of a book, of the neo-associationist theory of human long term memory (LTM) proposed in that book, and of a working computer simulation of a large part of that theory. All three are the work of two cognitive psychologists, John R. Anderson and Gordon H. Bower, done at Stanford in the period 1971-1973. A fundamental claim on which the theory rests is that the mechanisms of long term memory performance may be separated into an executive, which determines the strategies, heuristics and tricks that a subject may use, and a strategy-free component common to all memory performance. Anderson and Bower state that

We shall assume that long-term memory, itself, is strategy-invariant, that probes are always matched to memory in the same way, that identical outputs will be generated to identical probes, and that a given input always is represented and encoded in the same manner. [ref: HAM p. ?]

This assumption places them squarely within the associationist tradition, as opposed to Gestalt and reconstruction theorists, who would deny that any memory structure can be described apart from the processes by which it is made and used. It is also the assumption which allows Anderson and Bower to set aside the seemingly endless variations of encoding and retrieval that people can call up, and to concentrate on uniform underlying processes that will (it is hoped) show through when the variations due to strategy are eliminated. But if the separation does not in fact exist, then in their words, "...this whole theoretical enterprise will come crashing down on our heads." Anderson and Bower were much concerned with developing a testable model and then carrying out stringent tests of it.

Figure 1 shows the HAM model of long-term memory.

[Figure 1: the HAM model. Components: auditory and visual receptors; auditory and visual buffers; linguistic parser; perceptual parser; language generator (speech or writing); input or probe; memory; working memory; executive.]

The theory and the computer simulation are concerned primarily with the part labelled "memory", and only secondarily with the others. The central features are (1) the representation of all LTM contents by means of overlapping binary trees, and (2) the proposed matching, storage and retrieval processes, which take place once an input or probe is presented to the memory and are hypothesized to be uniform in all circumstances.

KNOWLEDGE REPRESENTATION IN HAM

All knowledge in HAM is represented as propositions, encoded in binary trees.
For example, "In a park a hippie touched a debutante" would be represented as

[Figure 2: HAM proposition tree for "In a park a hippie touched a debutante"]

where the labels are interpreted as follows:

C   Context in which fact is true
F   Fact
L   Location
T   Time
S   Subject
P   Predicate
R   Relation
O   Object
∈   Set membership
⊂   Subset
∀   Generic

A proposition tree may or may not indicate a context for the fact stated, consisting of a location in space and/or time. The proposition without its context always has subject-predicate form, and in very simple cases the predicate is a single concept:

"The debutante is tall" → [tree diagram]

In more complex cases the predicate is a (binary) relation which the subject holds to some object. The object may be another proposition:

"The hippie knows the debutante is tall" → [tree diagram]

HAM is restricted to the statement of facts; unlike both Quillian's system and the ASN, it includes no definitions of verbs (or other predicates).

Every leaf node of the binary tree is connected by one of the quantifier links ∈, ⊂, or ∀ to some concept such as PARK or PAST. Referring to Figure 2, the node connected to PARK by an ∈ link stands for some particular park, and the node connected to PAST by an ∈ link stands for a particular time in the past. The ⊂ and ∀ links are used to express such propositions as "canaries are birds", "all pets are bothersome", and "all dogs chase some cats". It is perhaps worth noting that with the three quantifier links, HAM's representation is equivalent in power to the second-order predicate calculus.

The question arises why Anderson and Bower chose a propositional representation, and one so reminiscent of phrase structure trees for natural language, given their statement that

Both in the evolution of man and in the development of the child, the ability to represent perceptual data in memory emerges long before the ability to represent linguistic information. We believe that language attaches itself parasitically to this underlying conceptual system designed for perception. [HAM p. 154]

They even cite evidence for this view of the secondary nature of language. Their reasoning appears to be that the propositional representation is universal and also quite flexible. It is supposed to be adequate for almost anything that must be remembered, and "relatively indifferent to whether it is encoding perceptual or linguistic material" [HAM p. 155]. However, Anderson and Bower do not show what encoded perceptual material might look like. The interface between language and memory, they say, is easier to handle than "constructing the necessary perceptual system".

INPUT, STORAGE AND RETRIEVAL

In Figure 1, a linguistic parser and a "perceptual parser" are shown. In the actual HAM model, the only kind of input handled is a certain restricted class of English, in which no sentence is ambiguous and no backtracking need be done during parsing. The parser is not intended as a model of human parsing.

The parser converts each sentence into a binary tree just like those in the memory. Instead of simply dropping this tree into LTM as a new addition, HAM uses the MATCH and IDENTIFY procedures to find the maximum overlap of the new tree with existing structure in the memory net, then inserts the new tree so that it shares the common structure. Structure-sharing is essential in a model of human LTM because of the enormous mass of redundant information that would otherwise have to be stored. It also allows some simple inferences to be made automatically, as in this example:

input:     "A hippie touched a debutante."
           "The debutante is from Chicago."
question:  "Did a hippie do anything to someone from Chicago?"
answer:    "Yes, a hippie touched a debutante from Chicago."

MATCH starts with the leaf nodes that the parser has already identified with nodes in LTM. It attempts a bottom-up best match from each leaf node, independently and theoretically in parallel. (The program actually does them one at a time.)
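The bottom-up flavour of this encoding, and the payoff of structure sharing in the Chicago example, can be sketched in a few lines. This is a simplified illustration, not HAM's MATCH/IDENTIFY code: trees are nested 4-tuples using HAM's S/P/R/O labels, identical subtrees are stored once by interning them bottom-up (hash-consing), the ∈ links are reduced to a plain dictionary, and the parser is assumed to have already mapped both mentions of "the debutante" onto one individual node. The class name, method names and node names are all hypothetical.

```python
class PropositionMemory:
    """Binary proposition trees stored with maximal structure sharing."""

    def __init__(self):
        self.ids = {}        # structural key -> node id
        self.keys = []       # node id -> structural key
        self.facts = set()   # ids of top-level fact nodes
        self.member = {}     # individual -> concept (stands in for HAM's ∈ links)

    def intern(self, tree):
        """Store a tree bottom-up, reusing any identical subtree already present.

        A tree is a leaf name or a 4-tuple (label, left, label, right),
        e.g. ("S", subject, "P", predicate) or ("R", relation, "O", object).
        """
        if isinstance(tree, str):
            key = tree
        else:
            left_label, left, right_label, right = tree
            key = (left_label, self.intern(left), right_label, self.intern(right))
        if key not in self.ids:              # genuinely new structure: one new node
            self.ids[key] = len(self.keys)
            self.keys.append(key)
        return self.ids[key]

    def add_fact(self, subject, predicate):
        self.facts.add(self.intern(("S", subject, "P", predicate)))

    def holds(self, subject_id, relation, obj):
        """Is '<subject_id> <relation> <obj>' stored as a fact?"""
        pred = self.ids.get(("R", self.ids.get(relation), "O", self.ids.get(obj)))
        return self.ids.get(("S", subject_id, "P", pred)) in self.facts

    def find_action_on_patient(self, agent_concept, relation, obj):
        """E.g. 'Did a hippie do anything to someone from Chicago?'"""
        for fact_id in sorted(self.facts):
            _, subj, _, pred = self.keys[fact_id]
            pred_key = self.keys[pred]
            if self.member.get(self.keys[subj]) != agent_concept:
                continue
            if not isinstance(pred_key, tuple):
                continue                     # one-place predicate such as "tall"
            _, verb, _, patient = pred_key
            # The patient node is shared, so facts about it are directly reachable.
            if self.holds(patient, relation, obj):
                return self.keys[subj], self.keys[verb], self.keys[patient]
        return None


mem = PropositionMemory()
mem.member.update({"hippie-1": "hippie", "debutante-1": "debutante"})
mem.add_fact("hippie-1", ("R", "touch", "O", "debutante-1"))
mem.add_fact("debutante-1", ("R", "from", "O", "chicago"))
print(mem.find_action_on_patient("hippie", "from", "chicago"))
# ('hippie-1', 'touch', 'debutante-1')
print(len(mem.keys))   # 9: "debutante-1" is stored once and shared by both facts
```

Because the second sentence reuses the node for debutante-1, the question is answered by following shared structure rather than by any separate inference rule, which is the point the text makes about structure-sharing.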
At each matched node A, MATCH calls GET(A, -B), where B is the label on the input tree branch above A, and -B is the inverse label. GET returns a list of all nodes in LTM that have a type B link to node A, in decreasing order of recency (it can find them all because for every B link there is a -B link going the other way). MATCH then proceeds up (and eventually down) the tree in a recursive depth-first search, using the GET-list at each node. There is a time limit on searching from each leaf node, so each GET-list is searched only to a probabilistically determined depth, and even so the search may be cut short. When time is up, MATCH returns the best match that any of its (supposedly) parallel searches found. Once MATCH has returned a list of best-match node pairs, IDENTIFY identifies parts of the input tree with the matching structures in LTM and adds new nodes for the rest. Sometimes, to avoid unintended propositions appearing as side effects, not every matched pair of nodes can be identified.

Retrieval of facts from HAM is relatively simple (and clearly not as sophisticated as human retrieval based on loose analogies, etc.). A question is given to the parser, which converts it into a tree; in the case of yes-no questions the tree for, e.g., "Did a hippie touch a debutante in a park?" is identical to the tree for "In a park a hippie touched a debutante." In the case of who/what/when/where/which questions, the tree will have missing pieces. Exactly the same MATCH process used for encoding is now applied to find the best-matching structure in memory, which is given to an answer generator. If there was a full match, HAM answers positively; otherwise it answers with appropriate qualifications. An interesting thing happens if the question tree is the negation of something in memory: MATCH matches them, then the answer generator detects the negation and answers "no". If HAM finds no significant match, it says nothing.
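The role of inverse links and recency-ordered GET-lists, and the way a bounded search can fail even though the fact is stored, can be sketched as follows. This is a simplified illustration under stated assumptions, not HAM's implementation: links live in a dictionary keyed by (node, label), the inverse label is written with a leading minus sign, only a single GET-list is scanned rather than MATCH's full recursive search, and the random stopping rule with stop_prob=0.3 is an arbitrary assumption rather than HAM's published depth distribution. All names are hypothetical.

```python
import random
from collections import defaultdict


class LinkMemory:
    """Labelled links stored in both directions, newest first, never decaying."""

    def __init__(self):
        self.lists = defaultdict(list)   # (node, label) -> nodes, most recent first

    def add_link(self, source, label, target):
        self.lists[(source, label)].insert(0, target)
        self.lists[(target, "-" + label)].insert(0, source)   # inverse link

    def get(self, node, label):
        """GET(node, label); GET(A, "-B") lists every node with a B link to A."""
        return list(self.lists.get((node, label), []))


def bounded_scan(memory, node, label, wanted, rng, stop_prob=0.3):
    """Scan one GET-list in recency order, giving up at a random depth."""
    for candidate in memory.get(node, label):
        if candidate == wanted:
            return True
        if rng.random() < stop_prob:     # assumed stopping rule, not HAM's
            return False
    return False


def retrieval_rate(fan, trials=2000, seed=1):
    """Fraction of probes from 'debutante' that reach the first fact learned."""
    mem = LinkMemory()
    for i in range(fan):                 # fact-0 is learned first, then fan-1 others
        mem.add_link(f"fact-{i}", "S", "debutante")
    rng = random.Random(seed)
    hits = sum(bounded_scan(mem, "debutante", "-S", "fact-0", rng)
               for _ in range(trials))
    return hits / trials


for fan in (1, 2, 5):
    print(fan, retrieval_rate(fan))
```

Under this stopping rule the expected success rate is 0.7 ** (fan - 1): the more facts that share the probe node, the more often the older fact is missed, although no link has decayed. This is the interference-style account of forgetting that HAM relies on.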
Because of limits on the time and depth to which GET-lists are searched, HAM may fail to answer a question even if the knowledge needed is in memory. This is one mechanism by which HAM accounts for experimental data on human forgetting.

EXPERIMENTAL TESTS

Anderson and Bower compare a great mass of experiments on human memory and retrieval, some done by themselves, with predictions of the HAM model. We will concentrate here on those dealing with phenomena of forgetting, because it is fascinating that HAM, in which links once made never decay, nevertheless accounts well for experimental data on human forgetting. It does so, like associationist psychologists in recent decades, in terms of interference. In the 20th century, particularly in America, the associationist framework was applied with considerable success as an explanation for interference phenomena in learning. A major concern of Anderson and Bower in HAM was to develop a model sufficient to cover encoding and retrieval based on meaning (instead of the stimulus-response framework used in that work) without losing the ability to account for the experimental results on interference.

HAM also yields predictions for errors in recall of sentences having elements in common, for retrieval times for simple propositions, and for the effects of negation and quantification on retrieval times, among others. In general it does well, but it is unable to account for a few of the results. It is very impressive for a single theoretical scheme to cover so wide a range.

CONCLUSION

Anderson and Bower, as cognitive psychologists, aimed in HAM primarily to construct a system with enough representational power to be plausible as a model of human memory, and at the same time accurate in its predictions of human performance in various verbal learning and retrieval tasks.
They hypothesized a strategy-free component of human LTM, with uniform encoding and access, which they could model apart from the intelligent tricks and strategies that people use. The propositional representation they chose has so far proven versatile in encoding not only sentences but also things like paired nonsense syllables, and together with matching and structure-sharing it has accounted quite naturally for a surprisingly wide range of experimental data. The assertion that the HAM representation is adequate for perceptual as well as linguistic information is so far untested.

As a semantic net, HAM lacks semantics. Nothing is attached to the verbs and nouns to indicate what they mean: there are no definitions and no attached procedures, since developing these had not been Anderson and Bower's interest in HAM. Consequently, while HAM accepts and encodes sentences, it certainly cannot be said to understand them, and it is not altogether clear that the binary-tree representation would work well should HAM (or something like it) be extended to a "semantic memory" system.

IV. THE ACTIVE STRUCTURAL NETWORK

INTRODUCTION

The LNR group, named for the original experimenters Peter Lindsay, Donald Norman and David Rumelhart at the University of California at San Diego, is pursuing research in cognitive psychology by designing and testing computer-based models of the representation of knowledge. The design of the representation has been shaped by a large body of psychological experimentation, and has led to the current ACTIVE STRUCTURAL NET model of long term memory. The ASN is characterized by

1. a semantic net as representation for knowledge about many different domains and actions, and
2. a large component of knowledge in the form of procedures, represented within the net.

The LNR group believes that procedural information is an important component of human knowledge structure, and has taken care that its model reflects this. Further, Norman and Rumelhart state (p. 159) that

A basic tenet of our approach to the study of cognitive processes is that only a single system is involved. In psychological investigations, the usual procedure is to separate different areas of study: memory, perception, problem solving, language syntax, semantics. We believe that a common cognitive system underlies these areas, and that although they are partly decomposable the interactions among the different components are of critical importance.

It is then something of a distortion to present their ASN as a model of long term memory, but we shall do so nonetheless, by the simple expedient of not discussing its other aspects except as they bear on memory. The reader is warned.

The network itself shares many of the features of semantic nets in general, differing from the usual sort chiefly through the representation of executable plans along with facts and general knowledge. These plans can also be examined as data, or simulated, causing an internal representation of the action sequence to be constructed.

STRUCTURE OF THE ACTIVE STRUCTURAL NETWORK

The ASN is built up out of predicates, which have one or more arguments. One example would be dog(subject); another is take(subject, object, from, at-time). Predicates correspond to nouns or verbs, though often there is no English word corresponding to a particular predicate in the ASN. Each predicate is represented by one type node and any number of token nodes, with "isa" links from noun tokens to their type node and "act" links from verb tokens. All this is shown in the small net of Figure 3, together with the abbreviation that is generally used.

[Figure 3: a small net of type and token nodes with "isa" and "act" links, and its usual abbreviated form]

Predicates can be defined in terms of other predicates, much as in Quillian's system, using the "iswhen" link (which corresponds to Quillian's first kind of link).
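The type/token organization just described can be sketched as a small data structure. This is a minimal sketch under assumptions, not MEMOD's node space: each predicate gets one type node recording its argument slots, each token gets an "isa" link (nouns) or "act" link (verbs) to its type node plus one link per bound argument, and the "iswhen" field is only a placeholder that this sketch never expands. The class names, method names and token naming scheme are hypothetical; argument bindings are passed as a dict because "from" is a Python keyword.

```python
from dataclasses import dataclass, field


@dataclass
class TypeNode:
    name: str
    args: tuple            # argument slots, e.g. ("subject", "object", "from", "at-time")
    kind: str              # "noun" tokens get "isa" links, "verb" tokens get "act" links
    iswhen: object = None  # placeholder for a definition; not expanded in this sketch


@dataclass
class TokenNode:
    ident: str
    links: dict = field(default_factory=dict)   # link label -> node or value


class ActiveNet:
    def __init__(self):
        self.types = {}
        self.tokens = []
        self.counts = {}

    def define(self, name, args=(), kind="verb", iswhen=None):
        self.types[name] = TypeNode(name, tuple(args), kind, iswhen)

    def token(self, name, bindings=None):
        """Create a token of predicate `name`, linked to its single type node."""
        type_node = self.types[name]
        bindings = dict(bindings or {})
        unknown = set(bindings) - set(type_node.args)
        if unknown:
            raise ValueError(f"{name} has no argument(s) {sorted(unknown)}")
        self.counts[name] = self.counts.get(name, 0) + 1
        link = "isa" if type_node.kind == "noun" else "act"
        tok = TokenNode(f"{name}-{self.counts[name]}", {link: type_node, **bindings})
        self.tokens.append(tok)
        return tok

    def tokens_of(self, name):
        type_node = self.types[name]
        return [t for t in self.tokens
                if t.links.get("isa") is type_node or t.links.get("act") is type_node]


net = ActiveNet()
net.define("person", kind="noun")
net.define("ball", kind="noun")
net.define("take", ("subject", "object", "from", "at-time"))
bob, bill = net.token("person"), net.token("person")
ball = net.token("ball")
took = net.token("take", {"subject": bob, "object": ball, "from": bill, "at-time": "past"})
print(took.ident, took.links["act"].name)          # take-1 take
print([t.ident for t in net.tokens_of("person")])  # ['person-1', 'person-2']
```

Keeping one type node per predicate means that anything attached to it, such as a definition reached by an "iswhen" link, is shared by every token.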
An important function of the ASN is to analyze verbs into a small set of primitive predicates, so that semantically equivalent surface forms are mapped into identical "deep" representations in the net, and so that the implication structure of predicates is made explicit. The work of Schank [ref] has been quite influential in this regard. Here are some of the primitives used, with their arguments:

ABLE (agent, proposition)                       agent is able to make the proposition true
CAUSE (event, result)
CHANGE (from-state, to-state, at-time)
DO (agent)
LOC (object, at-location, from-time, to-time)
POSS (subject, object, from-time, to-time)     possession

Using the primitives, we can define "get" and then define "take" in terms of "get":

[Figure 4: definitions of "get" and "take"]

By attaching procedures to the primitives and interpreting predicates as "programs" or "plans" built out of the primitive procedures, the net itself is made active. We now look at how this is done.

MEMOD AND VERBWORLD

MEMOD, standing for Memory Model, is the computer implementation of the ASN. It consists of three major components: the node space, a parser and an interpreter.

[Figure 5: the components of MEMOD]

In keeping with Norman and Rumelhart's tenet that "there is only one system", the three components of MEMOD are very closely tied. The node space contains syntactic and semantic information for guiding parsing, in the form of predicates associated with English verbs (or nouns). Suppose the sentence "Bob took the ball from Bill" were input. The predicate for "take", shown in Figure 4, has four arguments. The parser is given information on the number and type of arguments to expect (some are optional), and finds the corresponding sentence parts. These are bound as arguments to a token of the "take" node, and then the token is interpreted. This causes the definition of "take", pointed to by an "iswhen" link from the "take" node, to be interpreted with those same arguments, and so on.
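The recursive interpretation just described, in which each defined predicate is replaced by its "iswhen" definition until only primitives remain, can be sketched as follows. The definitions of "get" and "take" below are our own guesses at the general shape (get as a CHANGE between two POSS states; take as CAUSE of DO plus get); they are not copied from Figure 4, and all time arguments are omitted. Function names are hypothetical.

```python
PRIMITIVES = {"CAUSE", "CHANGE", "DO", "POSS"}

# Hypothetical definitions; time arguments omitted. Variables start with "?".
DEFINITIONS = {
    "get": (("?subject", "?object", "?from"),
            ("CHANGE", ("POSS", "?from", "?object"),        # from-state
                       ("POSS", "?subject", "?object"))),   # to-state
    "take": (("?subject", "?object", "?from"),
             ("CAUSE", ("DO", "?subject"),
                       ("get", "?subject", "?object", "?from"))),
}


def reduce_to_primitives(expr, bindings=None):
    """Bind arguments and expand defined predicates until only primitives remain."""
    bindings = bindings or {}
    if isinstance(expr, str):
        return bindings.get(expr, expr)           # variable or constant
    head, *args = expr
    args = [reduce_to_primitives(a, bindings) for a in args]
    if head in PRIMITIVES:
        return (head, *args)
    params, body = DEFINITIONS[head]              # follow the "iswhen" link
    return reduce_to_primitives(body, dict(zip(params, args)))


def earlier_states(structure):
    """Collect the from-state of every CHANGE: things that held beforehand."""
    if not isinstance(structure, tuple):
        return []
    found = [structure[1]] if structure[0] == "CHANGE" else []
    for part in structure[1:]:
        found.extend(earlier_states(part))
    return found


took = reduce_to_primitives(("take", "Bob", "ball", "Bill"))
got = reduce_to_primitives(("get", "Bob", "ball", "Bill"))
print(took)
# ('CAUSE', ('DO', 'Bob'), ('CHANGE', ('POSS', 'Bill', 'ball'), ('POSS', 'Bob', 'ball')))
print(got == took[2])           # True: the reduced "get" is literally part of "take"
print(earlier_states(took))     # [('POSS', 'Bill', 'ball')]
```

Once "Bob took the ball from Bill" is reduced this way, the fact that Bill previously possessed the ball is sitting in the structure and can be read off directly.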
Procedures attached to the most primitive predicate nodes carry out net structure-building operations, so that an internal representation of the sentence is built up as parsing proceeds. By the time parsing is completed, the entire sentence has been assimilated into the node space.

MEMOD can also accept definitions of new predicates in restricted English. The node space initially contains only primitive predicates. The user can input a definition of "get" in terms of CHANGE and POSS, then a definition of "take" in terms of CAUSE, DO and "get". After that, MEMOD can parse and store sentences involving "get" and "take".

Definition of new verbs is a major feature of the language comprehension system called Verbworld, implemented inside MEMOD. Any input fact that is stated using defined verbs is reduced by Verbworld to the primitives CHANGE, etc., according to the verb definitions. This allows semantically equivalent surface forms to map onto identical "deep" structures in the node space, and also makes many inferences explicit, such as that if Bob took the ball from Bill then at some time in the past Bill possessed the ball. Verbworld is able to answer simple questions by relying on these inferences-by-definition plus a little retrieval-time inference. In what is called partial comprehension, Verbworld leaves some verbs unreduced, which allows ambiguities in the original sentence to be maintained and is intuitively pleasing as a model of human memory (to some people, anyway). However, it makes question answering much more difficult because of the different ways a given fact might be represented.

PLANS AND SIMULATION IN THE ASN

Interpretable nodes in the ASN can guide parsing and construct new nodes and links. They can also represent plans for action in the external world, something that is clearly part of human LTM.
The LUIGI system [ref], also developed within MEMOD, stores facts and plans pertaining to "Kitchenworld", which is really computer-simulated but is external to LUIGI proper. LUIGI consists of two parts:

1. A database containing information about objects found in a kitchen, and a set of procedures (recipes, actually) for manipulating those objects.
2. A set of procedures for answering questions using the database.

LUIGI is extendible in the same fashion as MEMOD and Verbworld, by defining new predicates, which for LUIGI are generally new recipes, e.g. "bake" or "(make a) sandwich". It can then be told to carry out a plan ("Make a baked Virginia ham and very sharp cheddar cheese sandwich") and performs actions on Kitchenworld accordingly. Or it can be asked a hypothetical question like "What utensils do you need to make cookies?" LUIGI simulates the plan (recipe), and as the simulation proceeds a representation of the sequence of actions involved is constructed in node space (NOT in Kitchenworld). Then by processing this representation, e.g. by scanning it to note any utensil used, LUIGI finds the answer.

THE ASN AS A MODEL OF HUMAN MEMORY

The LNR group has done some experimental work in human memory, perception, language use and problem solving. As of 1975, little of it bears on the adequacy of the ASN as a model of human LTM. One experiment [ref: Gentner chapter in EIC] studied the order in which children aged 3 yr. 6 mo. to 8 yr. 6 mo. acquire the verbs (1) "give", "take"; (2) "trade", "pay"; and (3) "buy", "sell", and "spend money". The ASN definitions of these verbs lead to a predicted acquisition order (1), (2), (3), because verbs in (2) are defined in terms of those in (1), and likewise for (3) and (2). The children's performance bore out the predictions very well, but the test is hardly specific enough to provide much support for the particular ASN representation.
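The prediction can be stated as a simple computation: give each verb a definitional depth (0 if it is built directly from primitives, otherwise one more than the deepest verb it is defined with) and predict that shallower verbs are acquired first. In the sketch below, which specific verb uses which is a hypothetical assumption for illustration; only the group-level pattern, (2) defined in terms of (1) and (3) in terms of (2), comes from the text. Definitions are assumed to be acyclic.

```python
from functools import lru_cache

# Hypothetical "defined in terms of" edges. Only the group-level pattern
# (group 2 uses group 1, group 3 uses group 2) is taken from the text.
USES = {
    "give": (), "take": (),                          # group (1)
    "trade": ("give", "take"), "pay": ("give",),     # group (2)
    "buy": ("pay", "trade"), "sell": ("pay", "trade"),
    "spend money": ("pay",),                         # group (3)
}


@lru_cache(maxsize=None)
def depth(verb):
    """Definitional depth: 0 for verbs built directly from primitives."""
    return 1 + max((depth(v) for v in USES[verb]), default=-1)


def predicted_order(verbs):
    """Group verbs by depth; shallower definitions are predicted to come first."""
    stages = {}
    for verb in verbs:
        stages.setdefault(depth(verb), []).append(verb)
    return [sorted(stages[d]) for d in sorted(stages)]


print(predicted_order(USES))
# [['give', 'take'], ['pay', 'trade'], ['buy', 'sell', 'spend money']]
```

As the text observes, many different representations would yield this same ordering, which is why the result gives only weak support to the ASN in particular.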
In another study, of the semantic elements of movement, it was found necessary to add several new ad hoc primitives to the ASN set in order to give an adequate framework for analyzing subjects' recall of a story containing many descriptions of movement.

From these experiments not much can be said about the ASN. It remains largely unevaluated as a memory model. It is certainly an ambitious design with many intriguing features. Using a quite ordinary semantic net structure of nodes and links, by attaching procedures to primitive nodes and writing an interpreter for predicates, the LNR group obtains a model of cognition in which

Comprehension of a sentence or answering of a question proceeds in a uniform manner, without the need for any "intelligent" executive program: The intelligence of the system is distributed throughout the semantic network data base. [Norman & Rumelhart p. 179]

As intelligent agents, the MEMOD, Verbworld and LUIGI systems are rather interesting but not really very powerful or knowledge-rich; here too, the potential of the ASN scheme is not known.

V. COMPARISON OF THE MODELS

First, a quick review of the three semantic memory models that have been described.

Both the early Quillian model and his later Teachable Language Comprehender represented semantic knowledge for language comprehension in the form of definitions of words. Each word was defined in terms of others at the same level as itself; as in a dictionary, none was more primitive than the others. The chief method for language comprehension was the intersection search, which sought a (semantic) connection between two words by which the relationship between them in a sentence or phrase might be understood. Intersection search is simple yet seems to perform reasonably well in TLC, and Quillian advocates this kind of parallel breadth-first search as a mechanism used in the human memory net for similar tasks.
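Intersection search can be sketched as breadth-first spreading from both words at once, tagging every node reached with the word it was reached from, and stopping when a link joins two nodes carrying different tags. This is a minimal sketch, not Quillian's or TLC's code: a single FIFO queue interleaves the two spreads to approximate parallel search, links are treated as undirected, and the tiny word net is hypothetical.

```python
from collections import deque


def build_net(edges):
    """Undirected associative net: each link can be followed either way."""
    net = {}
    for x, y in edges:
        net.setdefault(x, []).append(y)
        net.setdefault(y, []).append(x)
    return net


def _path_to_origin(parent, node):
    path = []
    while node is not None:
        path.append(node)
        node = parent[node][1]
    return path[::-1]                      # origin ... node


def intersection_search(net, a, b):
    """Spread breadth-first from a and b at once; return a connecting path."""
    if a == b:
        return [a]
    parent = {a: (a, None), b: (b, None)}  # node -> (origin tag, predecessor)
    frontier = deque([a, b])               # one queue interleaves both spreads
    while frontier:
        node = frontier.popleft()
        origin = parent[node][0]
        for nxt in net.get(node, []):
            if nxt not in parent:
                parent[nxt] = (origin, node)
                frontier.append(nxt)
            elif parent[nxt][0] != origin:  # tagged from the other side: intersection
                path = _path_to_origin(parent, node) + _path_to_origin(parent, nxt)[::-1]
                return path if path[0] == a else path[::-1]
    return None                            # no connection found


net = build_net([("cry", "sound"), ("cry", "sad"), ("cry", "tears"),
                 ("comfort", "sad"), ("comfort", "help"),
                 ("sad", "feeling"), ("tears", "eye")])
print(intersection_search(net, "cry", "comfort"))   # ['cry', 'sad', 'comfort']
print(intersection_search(net, "cry", "rock"))      # None
```

The path returned is the connection that would then be checked against the sentence or phrase to decide how the two words are related.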
Anderson and Bower intended their Human Associative Memory model as a testable model for the "strategy-free component" of human LTM. They required a representation sufficient to encode things (such as universal statements, "All men are mortal") which the usual sort of associationist theory could not handle, and hypothesized propositions, in the form of binary trees, as the basic representation in human memory. HAM was tested against data from many verbal learning, recognition and recall experiments, and it predicted or explained most of the results. There were enough failures, though, to indicate that HAM would need revision.

Norman, Rumelhart and the rest of the LNR research group have been developing an ambitious general model of human cognition, in which LTM is modelled as an Active Structural Network. In this network, nodes and links are both data and interpretable "program". In language comprehension, for instance, the main verb of a sentence corresponds to a predicate node in the net; interpretation of this node provides the parser with information on the case frame (subject, object, etc.) of the verb, and further interpretation of the net causes an internal encoding of the sentence to be added to the net. The ASN can also represent plans, either to be carried out as actions on the external world or to be simulated within the net so as to answer questions about what would happen if some plan were carried out.

Recall the two common features and two points of contrast between the models that were suggested in the Introduction. We look at these again now, with reference to specific features of the models.

Common Features

1. The language-like representation schemes. Quillian and the LNR group explicitly aimed at supporting language comprehension with their semantic net memories, so this could be expected there. There is no such easy accounting for the HAM representation, which so resembles phrase structure trees.
However, since 1974 one of the developers of HAM, John Anderson, has developed a new "theory about human cognitive functioning" called ACT, with considerable attention given to language comprehension. ACT is a computer simulation model consisting of a propositional network model of LTM very much like HAM's (a revision of it, in fact) and a production system operating on the network. See [ref: AIH article on PS models of memory] or [Anderson 1976] for more information.

2. Difficulty of testing the models. John Anderson relates how one person "...described his impression of HAM when he first dealt with it as a graduate student at Stanford: It seemed like a great lumbering dragon that one could throw darts at and sting but which one never could seem to slay." [Anderson 1976, p. 531]. Complicated models of cognitive processes can generally be bent to accommodate troublesome data as they appear, until the sheer amount of such data makes the model untenable. There has been enough testing of HAM that Anderson at least is convinced it is wrong on some points, and he incorporated some changes in ACT. Collins and Quillian [Collins and Quillian 1969] made some predictions based on a superset-chaining inference procedure used in TLC which have since been shown false (see pages 381-383 of [Anderson & Bower 1973] for a summary). But the main parts of the model, the representation scheme and the intersection-search method, have not been decisively tested. The Active Structural Net has hardly been tested at all. It is partly a question of research strategy: one can develop a model and then spend a lot of time doing experimental tests of it, or one can concentrate on getting a plausible model to perform as impressively as possible, and hope that it will cast light on human cognition.

Contrasts

3. Different kinds of knowledge represented. There are striking differences here.
TLC is dictionary-like, a web of definitions with a few facts also included; HAM includes only particular and general facts, with no definitions at all; the ASN has both, as well as representations for plans and actions. The ASN defines predicates by reducing them to fixed primitive predicates, while TLC defines them all in terms of each other. HAM can represent quantified statements quite well. TLC can represent "All birds have feathers" by attaching "has feathers" to the unit for "bird", so that any particular bird or subclass of birds will inherit the property, but it cannot represent nested quantifiers as in "All philosophers read some books". The ASN would represent "All birds have feathers" by setting up a demon to attach the property "has feathers" to any new node representing a bird! Overall, the ASN covers the widest range. It remains to be seen whether the many kinds of knowledge it represents can all be used effectively.

4. The intended breadth of coverage varies. (unfinished)

VI. CONCLUSION (unfinished)

VII. REFERENCES

Ross Quillian, "Semantic Memory", in Marvin Minsky (ed.), Semantic Information Processing. Cambridge, Mass.: MIT Press, 1968.

Ross Quillian, "The Teachable Language Comprehender", CACM, 1969, 12, 459-476.

A. Collins and Ross Quillian, "Retrieval Time from Semantic Memory", Journal of Verbal Learning and Verbal Behavior, 1969, 8, 240-247.

A. Collins and Ross Quillian, "How to Make a Language User", in E. Tulving and W. Donaldson (eds.), Organization of Memory. New York: Academic Press, 1972.

John Anderson and Gordon Bower, Human Associative Memory. Washington, D.C.: Winston, 1973.

John Anderson, Language, Memory and Thought. Hillsdale, New Jersey: Lawrence Erlbaum Associates, 1976.

Donald Norman, David Rumelhart and the LNR Research Group, Explorations in Cognition. San Francisco: Freeman, 1975.