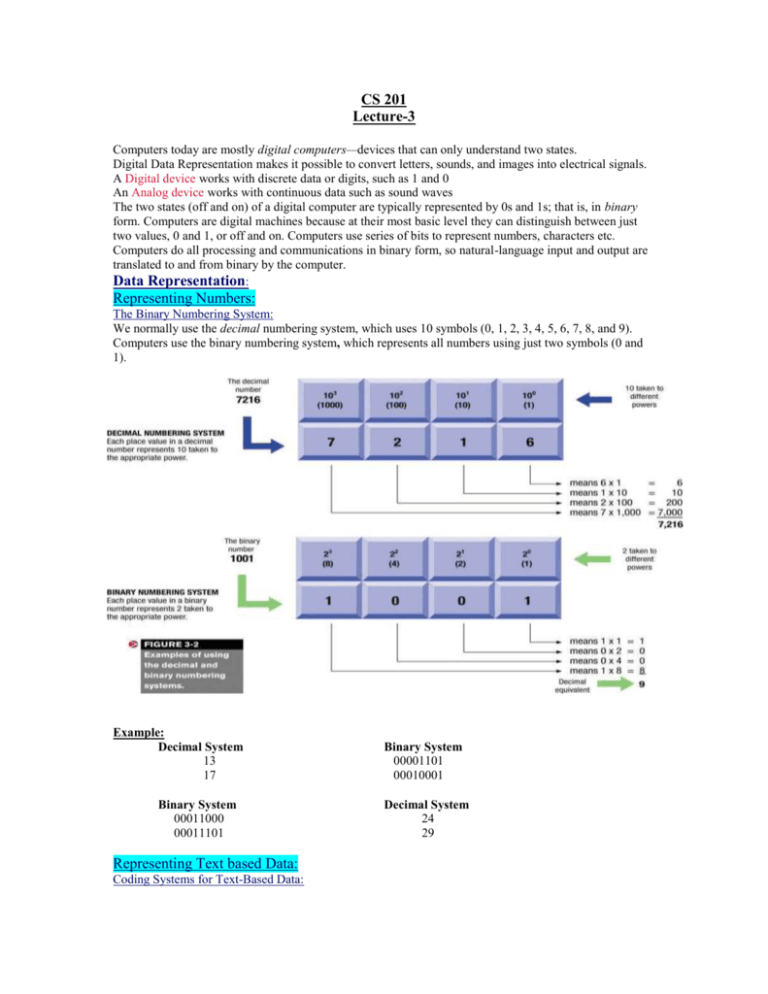

CS 201 Lecture-3

Computers today are mostly digital computers: devices that can understand only two states. Digital data representation makes it possible to convert letters, sounds, and images into electrical signals.

A digital device works with discrete data, or digits, such as 1 and 0. An analog device works with continuous data, such as sound waves.

The two states (off and on) of a digital computer are typically represented by 0s and 1s, that is, in binary form. Computers are digital machines because at their most basic level they can distinguish between just two values, 0 and 1, or off and on. Computers use series of bits to represent numbers, characters, and other data. Computers do all processing and communication in binary form, so natural-language input and output are translated to and from binary by the computer.

Data Representation:

Representing Numbers (the Binary Numbering System):
We normally use the decimal numbering system, which uses 10 symbols (0, 1, 2, 3, 4, 5, 6, 7, 8, and 9). Computers use the binary numbering system, which represents all numbers using just two symbols (0 and 1).

Example:
Decimal 13 = Binary 00001101
Decimal 17 = Binary 00010001
Decimal 24 = Binary 00011000
Decimal 29 = Binary 00011101

Representing Text-Based Data (Coding Systems for Text-Based Data):
Among the most widely used coding systems for text-based data are ASCII and EBCDIC. Computers can only understand numbers, so an ASCII or EBCDIC code is the numerical representation of a character such as 'a' or '@', or of an action of some sort.

ASCII: American Standard Code for Information Interchange. ASCII was developed by ANSI (American National Standards Institute).
EBCDIC: Extended Binary-Coded Decimal Interchange Code. EBCDIC was developed by IBM and is mostly used on IBM mainframes.

EBCDIC represents each character as a unique combination of 8 bits. The original ASCII standard uses 7 bits (128 combinations), but ASCII characters are normally stored in 8-bit bytes and written as 8 bits (for example, 'A' is 01000001), and extended ASCII variants use all 8 bits. A group of 8 bits allows 256 (2^8) combinations. Refer to figure 3-3 in the book.

Bit: A bit is the smallest unit of data in a computer.
A bit has a single binary value, either 0 or 1.
Byte: 8 bits = 1 byte.
Nibble: Half a byte (four bits) is called a nibble.
Kilobyte (KB): 1,000 bytes.
Megabyte (MB): 1 million bytes.
Gigabyte (GB): 1 billion bytes.
Terabyte (TB): 1 trillion bytes.
(When storage is counted in powers of 2, as is common for memory, 1 KB is 1,024 bytes, 1 MB is 1,024 KB, and so on, so the figures above are approximate.)

Unicode: A longer code (up to 32 bits per character; the UTF-32 encoding always uses 32) that can represent text-based data in virtually any written language. 32 bits allow 2^32 (about 4.3 billion) combinations; Unicode itself defines just over a million possible characters (code points).

Representing Graphics Data:
Graphics data is often stored as a bitmap, in which the color to be displayed at each pixel is stored in binary form.
Bitmap: a grid of hundreds of thousands of dots (pixels) arranged to represent an image. The color of each pixel is represented by a combination of 0s and 1s.
Monochrome graphics: contain only two possible colors (typically black and white), so each pixel needs just 1 bit.
Grayscale: each pixel is a shade of gray anywhere between pure white (11111111) and pure black (00000000); with 8 bits per pixel this gives 256 shades.
Color images: computers often represent color graphics with 16 colors (4 bits per pixel), 256 colors (8 bits per pixel), or about 16.8 million colors (24 bits per pixel). The more bits used to color-code each pixel, the more colors are available and the higher the picture quality, at the cost of a larger file.
Images that need to be resized are better stored as vector-based graphics, which describe shapes mathematically rather than pixel by pixel and can therefore be scaled without losing quality.

Representing Audio Data:
When you speak into a microphone, sound waves ripple past it, causing a vibration. The vibration creates a change in voltage in the wire that runs from the microphone to the computer. This fluctuating voltage is an analog representation of the sound, because it changes smoothly from one amplitude to the next, encompassing all values in between.
Inside the computer there is a special device called an analog-to-digital converter (ADC), usually part of the sound card. At regular intervals the ADC measures the microphone's analog signal and outputs a number representing the amplitude of the signal at that precise instant. This is called a "sample." Before long there is a huge number of samples, all arranged in chronological order: a kind of "spot-map" of the original waveform. This is how digital audio is created.
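Going back to the decimal and binary values in the Example under Representing Numbers, those conversions can be reproduced by repeatedly dividing by 2 and reading the remainders backwards. Below is a minimal Python sketch. `to_binary` and `from_binary` are illustrative names of our own, and the default 8-digit width is an assumption chosen to match the 8-bit examples.

```python
def to_binary(n: int, width: int = 8) -> str:
    """Convert a non-negative integer to a zero-padded binary string
    using repeated division by 2."""
    if n < 0:
        raise ValueError("only non-negative integers are handled here")
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # remainder = next bit, least significant first
        n //= 2
    bits = "".join(reversed(digits)) or "0"
    return bits.zfill(width)  # pad on the left to the requested width


def from_binary(bits: str) -> int:
    """Convert a binary string back to decimal."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift one place left, then add the new bit
    return value


for decimal in (13, 17, 24, 29):
    bits = to_binary(decimal)
    print(decimal, "->", bits, "->", from_binary(bits))
```

In everyday Python the built-in `format(13, "08b")` gives the same result, `'00001101'`; the explicit loop is only there to show the division-by-2 method.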
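The text coding systems described earlier (ASCII, EBCDIC, and Unicode) can be compared by looking up the code each one assigns to the same character. This is a minimal sketch using Python's built-in codecs. One assumption: EBCDIC exists in several code pages, and `cp037` (US/Canada) is used here only as a representative. `text_codes` and `bits8` are hypothetical helper names.

```python
def text_codes(ch: str) -> dict:
    """Return a character's code in ASCII and EBCDIC (None if that coding
    system has no code for it) plus its 4-byte UTF-32 encoding."""

    def single_byte(encoding: str):
        try:
            return ch.encode(encoding)[0]
        except UnicodeEncodeError:
            return None  # this coding system cannot represent the character

    return {
        "ascii": single_byte("ascii"),
        "ebcdic": single_byte("cp037"),   # cp037: one common EBCDIC code page (assumption)
        "utf32": ch.encode("utf-32-be"),  # big-endian, no byte-order mark: always 4 bytes
    }


def bits8(code) -> str:
    """Show a one-byte code as 8 bits, or 'n/a' if there is no code."""
    return "n/a" if code is None else format(code, "08b")


for ch in ("A", "a", "@", "€"):
    codes = text_codes(ch)
    print(f"{ch!r}: ASCII {bits8(codes['ascii'])}  "
          f"EBCDIC {bits8(codes['ebcdic'])}  UTF-32 {codes['utf32'].hex()}")

print(2 ** 7, 2 ** 8, 2 ** 32)  # combinations for 7, 8 and 32 bits: 128 256 4294967296
```

The same letter gets different codes in ASCII and EBCDIC ('A' is 65 in ASCII and 193 in cp037), which is why text must be converted when it moves between systems. The euro sign has code point 8364, too large for any 8-bit code, so only the Unicode encoding can represent it.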
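The color-depth figures under Representing Graphics Data all follow from one rule: n bits per pixel allow 2^n different colors, and the raw bitmap grows with every extra bit. Here is a minimal Python sketch of that arithmetic. The 800x600 image size and the tiny letter-T bitmap are hypothetical examples, and `bitmap_bytes` ignores file headers, row padding, and compression.

```python
def colors_for_depth(bits_per_pixel: int) -> int:
    """Number of distinct colors (or gray shades) one pixel can take."""
    return 2 ** bits_per_pixel


def bitmap_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Raw, uncompressed bitmap size rounded up to whole bytes
    (ignores file headers, row padding and compression)."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8


for bits in (1, 4, 8, 24):
    print(f"{bits:2d} bits/pixel: {colors_for_depth(bits):>10,} colors, "
          f"800x600 image = {bitmap_bytes(800, 600, bits):>9,} bytes")

# A tiny hypothetical monochrome (1-bit) bitmap of the letter T:
# each 1 is a black pixel, each 0 a white pixel.
letter_t = ["11111", "00100", "00100", "00100"]
for row in letter_t:
    print("".join("#" if bit == "1" else "." for bit in row))
```

Going from 8 to 24 bits per pixel takes the palette from 256 colors to about 16.8 million, and it also triples the raw size of the same image.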
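The ADC step described above (measure the amplitude at regular intervals, then output a number) can be simulated in a few lines. This is a simplified Python sketch and makes several assumptions. The "analog" input is a hypothetical 440 Hz sine wave whose amplitude lies between -1 and 1. The sampling rate of 8,000 samples per second and the 8-bit sample size are illustrative choices only; CD audio, for comparison, uses 44,100 samples per second at 16 bits. `sample_and_quantize` and `tone_440hz` are our own names.

```python
import math
from typing import Callable


def sample_and_quantize(
    signal: Callable[[float], float],
    sample_rate_hz: int,
    duration_s: float,
    bits: int = 8,
) -> list[int]:
    """Simulate an ADC: measure `signal` at regular intervals (sampling) and
    round each measurement to one of 2**bits integer levels (quantization)."""
    levels = 2 ** bits
    n_samples = round(sample_rate_hz * duration_s)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate_hz                      # the precise instant of this sample
        amplitude = max(-1.0, min(1.0, signal(t)))  # clamp to the assumed range [-1, 1]
        # Map [-1, 1] linearly onto the integer levels 0 .. levels - 1.
        samples.append(round((amplitude + 1) / 2 * (levels - 1)))
    return samples


def tone_440hz(t: float) -> float:
    """Hypothetical analog input: a pure 440 Hz sine wave."""
    return math.sin(2 * math.pi * 440 * t)


samples = sample_and_quantize(tone_440hz, sample_rate_hz=8000, duration_s=0.002)
print(len(samples), "samples:", samples)
```

The printed list is the "spot-map": numbers in chronological order, each stored in 8 bits, that together trace the shape of the wave.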
Digital audio samples, unlike analog information, can be saved in a computer file, and MP3 compression makes these audio files much smaller.
When the time comes to play the recording back, the samples are sent to a digital-to-analog converter (DAC), again usually part of the sound card. The DAC "connects the dots," creating a smoothly changing signal between the samples. The result is an approximation of the original waveform.
If you compare a segment of the original waveform with the same segment after the DAC has reconstructed it from the digital samples, the two waveforms are clearly different. Shouldn't they also sound different? How is it that a digital recording can sound identical to the original? The human ear is marvelously sensitive, but it has limits. If you take very accurate samples of a sound often enough, there comes a point where the ear can no longer tell the difference between the original and the recording.

Representing Video Data:
Video data is displayed as a collection of frames, each of which is a still graphical image. When the frames are projected one after the other (for example, at 30 frames per second), the illusion of movement is created.

Machine Language:
Machine language is the binary-based code used to represent program instructions. Most programmers rely on language translators to translate their programs into machine language for them.
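The DAC's "connecting the dots," and the claim that sampling often enough makes the reconstruction indistinguishable, can be illustrated numerically. This is a minimal Python sketch under stated assumptions. It joins neighbouring samples with straight lines (linear interpolation), which is only a simplified stand-in, since real converters typically smooth their output with filtering. The original waveform is a hypothetical 440 Hz sine, the samples are kept as unquantized floats, and `reconstruct` and `max_error` are our own names.

```python
import math


def reconstruct(samples: list[float], sample_rate_hz: float, t: float) -> float:
    """'Connect the dots': estimate the signal at time t by drawing a straight
    line between the two neighbouring samples (linear interpolation)."""
    position = t * sample_rate_hz        # fractional sample index
    if position <= 0:
        return samples[0]
    i = int(position)
    if i >= len(samples) - 1:
        return samples[-1]               # past the last sample: hold its value
    frac = position - i                  # fraction of the way from sample i to i + 1
    return samples[i] + (samples[i + 1] - samples[i]) * frac


def tone_440hz(t: float) -> float:
    """Hypothetical original waveform: a 440 Hz sine wave."""
    return math.sin(2 * math.pi * 440 * t)


def max_error(sample_rate_hz: int, duration_s: float = 0.01) -> float:
    """Largest gap between the original and the reconstruction,
    checked halfway between consecutive samples."""
    n = round(sample_rate_hz * duration_s)
    samples = [tone_440hz(i / sample_rate_hz) for i in range(n + 1)]
    worst = 0.0
    for i in range(n):
        t = (i + 0.5) / sample_rate_hz
        worst = max(worst, abs(tone_440hz(t) - reconstruct(samples, sample_rate_hz, t)))
    return worst


for rate in (4000, 8000, 44100):
    print(f"{rate:>6} samples/s -> max error {max_error(rate):.5f}")
```

The reconstructed wave never matches the original exactly, but the gap shrinks quickly as the sampling rate rises, which is the numerical side of the point above about the ear's limits.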