2E: Use of computers in traditional essay examinations

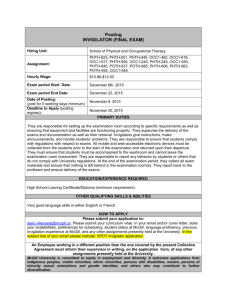

CUGSC: 22 November 2007 A/02/01 HSS UGSC: 07/08 2E

The problem

Most of our students complete most of their written assignments using a word processor, but they are still asked to handwrite responses in examinations. Many students have learned to write and to spell using a word processor, but at a time when they are already stressed we remove these familiar tools from them. Further, the cognitive process involved in constructing an essay using a word processor almost certainly differs from a wholly paper-based process. The ability to copy and paste, and to go back and review words and phrases already used, is not practically available when handwriting text. This leads to fundamental differences in how students approach the task of writing an essay.

Good practice in assessment design demands that the assessment method aligns with the learning opportunities presented and the learning outcomes intended. If we have not made very clear that students should practise writing timed, handwritten essays during the course, then it is unfair to expect them to do this in the examination. Many existing courses within our institution fail to meet this underpinning criterion of good practice.

The proposed solution

Allow students to type examination responses on a laptop. No other part of the examination process needs to change. The questions are still issued on paper; the responses are saved in an encrypted format and can be printed and circulated for marking. The proposal is to replace pens and green books with a computer and a reliable, easy-to-use piece of software.

The software proposed is called Exam4 and was developed by a US company called Extegrity. Exam4 has a long record of being used in high-stakes exams across the USA, where it has proved to be stable and reliable.
The software ‘locks the computer down’ so that students are unable to access the internet or the hard disk, or to read information from an accessory device such as a USB stick. It provides a simple word processor with basic formatting abilities: bold, italic, underline and justification (the intention is not to test students’ word processing skills). The availability of other functionality, such as spell checking or global cut and paste, and the location to which finished exams are submitted are determined on an exam-by-exam basis.

Issues, Questions and Responses

Is the system secure and reliable?

Yes. The exam questions are not part of the software, so question security is no different from traditional contexts. The software takes regular snapshots of work in progress, so if a student deleted all their work just before submitting the exam, their work could be retrieved. All data is stored in an encrypted form and the decryption algorithm can only be accessed by the authorised staff member. The software has a proven record of reliability and its security has not been compromised to date.

Is the idea acceptable to students?

Some students like the idea and others are sceptical. EUSA are broadly supportive of exploring the methodology, while still retaining some doubts and raising some questions. As with any assessment method, students should be given ample opportunity to practise and become familiar with the tools to be used, and should not encounter them for the first time in the exam hall. There is no reason why students cannot have access to the software prior to the examination, since the exam question is not held in the software.

Doesn’t it favour students who can type faster?

Yes, of course, but handwritten exams favour students who handwrite faster. Writing at high speed for 3 hours is not a skill many students will practise, and some students may have real physical problems doing it.
Further, Augustine-Adams et al (2001) demonstrated that a student typing an examination will typically perform slightly better than a student handwriting an examination. This is partly due to writing more, but there is also considerable evidence that the quality of word-processed essays is better than the quality of handwritten essays (Hartley & Tynjala, 2001; Ferris, 2002; Goldberg et al, 2003).

Can we offer students a choice of writing or typing?

Probably not, until we can be sure that the choice made has no implications for the mark awarded. Research demonstrates clearly and consistently that a typed text will be marked more harshly than an identical piece of handwritten text (MacCann et al, 2002; Russell & Tao, 2004).

What about the pragmatics of running an exam like this?

There is no one way to run an essay exam on computer: we can adjust the set-up arrangements for each case. There is no technical reason why students could not use their own laptops for the exam, which would have the advantage of familiarity. Providing power is currently problematic for large numbers of students, but we hope to power a floor in Adam House shortly to provide a venue. Until then, extension cables would need to be taped securely to the floor to provide power.

All details about where files are saved are embedded into the exam software; nothing depends on students saving their work with a specific name or to a specific location. The exam scripts can be saved to network or local drives as desired; students can also be issued with an encrypted copy of their examination if desired. Exam scripts can be decrypted and printed question by question for distribution to different markers. Emergency procedures should be prepared in advance for how to act in the case of fire, power cuts or other highly unusual eventualities.

References

Augustine-Adams K, Hendrix B & Rasband J (2001) Pen or printer: can students afford to handwrite their exams? Journal of Legal Education 51, 118-129.

Ferris S (2002) The effects of computers on traditional writing. Journal of Electronic Publishing 8. http://www.press.umich.edu/jep/08-01/ferris.html

Goldberg A, Russell M & Cook A (2003) The effect of computers on student writing: a meta-analysis of studies from 1992 to 2002. Journal of Technology, Learning and Assessment 2, 1-51.

Hartley J & Tynjala P (2001) New technology, writing and learning, in Writing as a learning tool. Kluwer, Dordrecht.

MacCann R, Eastment B & Pickering S (2002) Responding to free response examination questions: computer versus pen and paper. British Journal of Educational Technology 33, 173-188.

Russell M & Tao W (2004) Effects of handwriting and computer print on composition scores. Practical Assessment, Research and Evaluation 9. http://PAREonline.net/getvn.asp?v=9&n=1