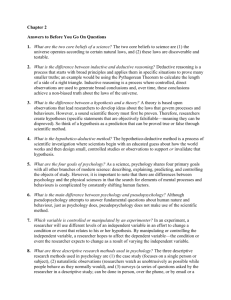

A Review of Basic Statistical Concepts
