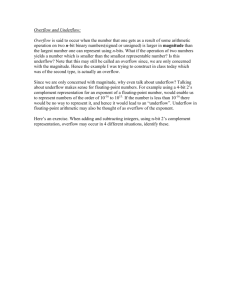

Rounding coefficients and artificially underflowing terms in non
