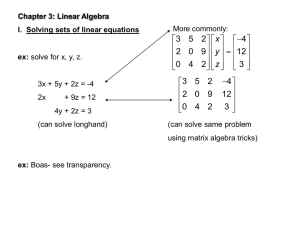

Solutions - BYU Math Dept.
