The Representation of Numbers on Modern Computers
Dan Moore


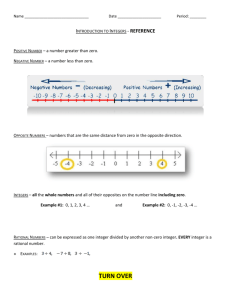

Jan. 20, 2016

Numbers and characters are stored as binary bits, each taking the value 0 or 1, whether in a computer's memory, on a hard drive or on the surface of a DVD/CD. The basic unit of storage today is the BYTE, which is 8 bits. Most computer languages store a single character (such as an 'a' or a '^') in one byte. (NOTE: 4 BYTES make a WORD of 32 bits.)

Computers have special circuits on their CPU chips to handle two types of numbers and their arithmetic:
a. Integers: -2, 0, 32457, etc.
b. Decimal Numbers (called Floating Point Numbers) such as 3.14159265, 1.41421356, etc.

Integers: Most modern computers store integers in a 32-bit WORD, whose bit patterns run from 0 to $2^{32}-1$. To allow negative integers, almost all modern computers use two's complement: bit patterns from 0 to $2^{31}-1$ represent themselves, while patterns from $2^{31}$ to $2^{32}-1$ represent (pattern $-\,2^{32}$). For example, the pattern with all 32 bits set represents -1.

Integer arithmetic is exact as long as the results lie between $-2^{31}$ and $2^{31}-1$. If an arithmetic result lies outside this range, the computer typically adds or subtracts enough multiples of $2^{32}$ to bring the result back into this 'legal' range! (Think of a clock: 11 o'clock + 2 hours = 1 o'clock!) Integer division also discards any fractional part, so 1/2 = 0 (7/8 = 0!) and 22/7 = 3.

In most computer languages you can also specify integer types that are one, two or eight BYTES in size, with ranges $-2^{7} \le i \le 2^{7}-1$ (= 127), $-2^{15} \le i \le 2^{15}-1$ (= 32,767) and $-2^{63} \le i \le 2^{63}-1$ (= 9,223,372,036,854,775,807!). The smaller types are used to save space where the range is known to be small (like the dot locations on an LCD screen), while the 8-byte type can be used for extended integer operations such as encryption and decryption. The (default) range for a one-WORD integer is -2,147,483,648 to 2,147,483,647, so 12! = 479,001,600 fits but 13! = 6,227,020,800 does not! You can add, subtract, multiply and divide integers and the result is always an integer!
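Because Python's built-in `int` never overflows, the sketch below simulates fixed-width two's-complement integers so the rules above can be tried out directly. The helpers `int_range`, `wrap_int`, `to_bits` and `factorial_32` are hypothetical names chosen for illustration, not a standard API. One caveat: Python's `//` rounds toward negative infinity while C and Java truncate toward zero, so they only agree on integer division when the operands are non-negative, as in the examples here.

```python
# Python ints are unbounded, so this sketch *simulates* fixed-width
# two's-complement integers. All helper names are hypothetical.


def int_range(bits: int) -> tuple:
    """Smallest and largest value of a signed integer that is `bits` wide."""
    return -(1 << (bits - 1)), (1 << (bits - 1)) - 1


def wrap_int(value: int, bits: int = 32) -> int:
    """Add or subtract multiples of 2**bits until `value` lies in the signed range."""
    modulus = 1 << bits
    half = 1 << (bits - 1)
    return (value + half) % modulus - half


def to_bits(value: int, bits: int = 32) -> int:
    """Unsigned bit pattern that stores `value` in two's complement."""
    return value & ((1 << bits) - 1)


def factorial_32(n: int) -> int:
    """n! as a 32-bit integer would hold it, wrapping after every multiply."""
    result = 1
    for k in range(2, n + 1):
        result = wrap_int(result * k)
    return result


if __name__ == "__main__":
    for bits in (8, 16, 32, 64):
        print(bits, "bits:", int_range(bits))
    print(hex(to_bits(-1)))            # all 32 bits set represents -1
    print(wrap_int(2**31 - 1 + 2))     # largest int + 2 wraps round, like the clock
    print(1 // 2, 7 // 8, 22 // 7)     # integer division drops the fraction
    print(factorial_32(12), factorial_32(13))
```

Running it prints the four ranges, `0xffffffff`, `-2147483647`, `0 0 3` and finally `479001600 1932053504`: 13! silently wraps to a plausible-looking but wrong value, which is why integer overflow is easy to miss.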
Decimal Numbers (Floating Point Numbers): Scientific computing requires quantities that are not conveniently expressed as integers (like π!). Modern computers approximate decimal numbers in the form $0.d_1 d_2 d_3 \ldots d_N \times 10^{E}$ (actually $0.1 b_2 b_3 \ldots b_N \times 2^{E}$, with binary digits $b_i$!). These numbers are usually squeezed into 4 or 8 bytes, which limits both the number of accurate decimal places ($N$) and the range of exponents ($E$, the size of the number).

When a decimal number is squeezed into 4 bytes, $N \approx$ 6-7 and $|E| \lesssim 38$, i.e. magnitudes from about $10^{-38}$ to $10^{38}$. (Avoid computing $\hbar^2$ in this form: $\hbar \approx 1.05 \times 10^{-34}$, so $\hbar^2 \approx 1.1 \times 10^{-68}$ is far too small and underflows.) When it is squeezed into 8 bytes, $N \approx$ 15-17 and $|E| \lesssim 308$. However, both types of decimal numbers have an exact ZERO! 8-byte decimal numbers are called DOUBLE PRECISION numbers and are the type most commonly used for scientific computation.

Arithmetic using floating point numbers is approximate! At most $N$ digits of accuracy are preserved each time you use +, -, *, / or ** (^). These errors in floating point arithmetic are called ROUNDING ERRORS. If the result of an arithmetic operation on two decimal numbers is too small to fit into 4 or 8 bytes, it is usually set to ZERO (and perhaps a 'Floating Point underflow' flag is set!). If the result is too big to fit into 4 or 8 bytes, a 'Floating Point overflow' flag is set and the result is set to INF (Floating Point Infinity!). If you tell the computer to do an illegal operation, such as dividing zero by zero or taking the square root of a negative number, the result is a NaN (Not a Number!).
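The sketch below tries the floating point rules above in Python, whose `float` is an 8-byte double on essentially all platforms. As an assumption for illustration, 4-byte arithmetic is simulated by rounding through `struct.pack("f", ...)`; the helper `to_float32` and the constant name `HBAR` are hypothetical. Note that Python itself raises `ZeroDivisionError` for `0.0/0.0` and `ValueError` for `math.sqrt(-1.0)` instead of returning NaN, so the NaN here comes from `inf - inf`, another operation IEEE 754 treats as invalid.

```python
import math
import struct
import sys


def to_float32(x: float) -> float:
    """Round a Python float (an 8-byte double) to the nearest 4-byte float.

    struct.pack("f", ...) does the rounding; it raises OverflowError if x is
    finite but too large for 4 bytes, and gives 0.0 for values far too small.
    """
    return struct.unpack("f", struct.pack("f", x))[0]


HBAR = 1.054571817e-34  # reduced Planck constant in J*s

# Precision: a 4-byte float keeps about 7 digits of pi, a double about 16.
pi32 = to_float32(math.pi)

# Underflow: hbar**2 ~ 1.1e-68 fits in a double but not in 4 bytes.
hbar32 = to_float32(HBAR)
hbar_sq_32 = to_float32(hbar32 * hbar32)  # product is exact in double, then rounded once
hbar_sq_64 = HBAR * HBAR

# Rounding error: 0.1, 0.2 and 0.3 have no exact binary representation.
rounded_sum = 0.1 + 0.2

# Overflow: the largest double times 2 becomes INF.
overflowed = sys.float_info.max * 2.0

# NaN: inf - inf is an invalid operation.
not_a_number = math.inf - math.inf

if __name__ == "__main__":
    print("double digits (float_info.dig):", sys.float_info.dig)
    print("normal double range:", sys.float_info.min, "to", sys.float_info.max)
    print("pi as float32:", pi32, " pi as double:", math.pi)
    print("hbar^2 float32:", hbar_sq_32, " double:", hbar_sq_64)
    print("0.1 + 0.2 =", rounded_sum, " equal to 0.3?", rounded_sum == 0.3)
    print("max * 2 =", overflowed)
    print("inf - inf =", not_a_number, " equal to itself?", not_a_number == not_a_number)
    print("exact zero:", 5.0 - 5.0 == 0.0)
```

The single-precision value of π agrees with the double only to about 7 significant digits, and $\hbar^2$ comes out as exactly `0.0` in 4 bytes while the double keeps it. A NaN is also the one value that is not equal to itself, so `math.isnan` is the reliable way to test for it.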