Instruction Level Parallelism - a historical perspective

advertisement

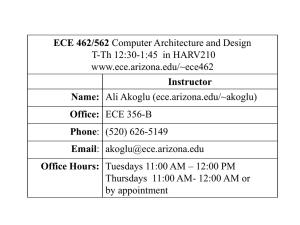

Instruction Level Parallelism - a historical perspective Roland Ibbett Roland Ibbett Instruction Level Parallelism - a historical perspective Instruction Level Parallelism I I Types of Instruction Level Parallelism I Parallel Function Units I Vector Processors I Superscalar Processors I VLIW Processors I SIMD Systems Where Next? Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units The Floating-point Arithmetic Problem - 1 I Instruction pipelines can operate at the speed of fixed-point arithmetic I Floating-point arithmetic is more complex: • exponent subtraction • mantissa shifting • mantissa addition • normalisation I To match fl-point execution rate to instruction pipeline rate, need parallel or pipelined arithmetic units, or both. Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units I Parallel function units were originally introduced as a means of matching function execution rate to instruction issue rate I Require use of two-address or three-address instruction format I c.f. one-address systems: I Each arithmetic operation requires the result from the previous operation before it can start Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units MEMORY Typical Write Back Registers Microprocessor Architecture Integer Unit Instruction Fetch Instruction Decode Fl−pt Unit Memory Access Multiply Unit Divide Unit I I Hardware must ensure that instructions still appear to be executed in sequential order Two main techniques: I I Common Data Bus + Tomasulo’s Algorithm (IBM) Scoreboards (CDC) Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units Tomasulo’s Algorithm www.icsa.inf.ed.ac.uk/research/groups/hase/models/tomasulo Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units Systems With Scoreboards I CDC 6600 - introduced in early 1960s I designed to solve problems well beyond contemporary capability I major innovation: parallel function units I CDC 7600 - introduced in late 1960s I upwardly compatible with 6600 I Cray-1 - introduced in 1976 I logically an extension of 7600 by addition of vector instructions I RISC Architectures - “typical” system: MIPS Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units Data Hazards - RAW, WAW, WAR I RAW (Read-After-Write) I an instruction requires the result of a previously issued, but uncompleted instruction: flow dependency I WAW (Write-After-Write) I an instruction tries to write its result to the same register as a previously issued, but uncompleted instruction: output dependency I WAR (Write-After-Read) I an instruction tries to write to a register which has not yet been read by a previously issued, but uncompleted instruction: anti dependency Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units DLX Simulation Model Roland Ibbett Instruction Level Parallelism - a historical perspective Code Optimisation Naive Code Improved Code LF F1 4(R1) LF F2 36(R1) MULTF F3 F1 F2 ADDF F0 F0 F3 ADDI R1 R1 4 SEQI R3 R1 32 BEQZ R3 loop LF F1 4(R1) LF F2 36(R1) ADDI R1 R1 4 MULTF F3 F1 F2 SEQI R3 R1 32 ADDF F0 F0 F3 BEQZ R3 loop Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units The Floating-point Arithmetic Problem - 2 I CDC 6600 Performance I I I I I I + 1/4 clocks = 0.25 Flops/Clock × 2/10 clocks = 0.2 Flops/Clock Total = 0.45 Flops/Clock Max Issue Rate = 1/clock (100 ns) Max MIPS = 10, Max MFLOPS = 4.5 CDC 7600 Performance I I I I I I + 1/clock = 1.0 Flops/Clock × 1/2 clocks = 0.5 Flops/Clock Total = 1.5 Flops/Clock Max Issue Rate = 1/clock (27.5 ns) Max MIPS = 36.4, Max MFLOPS = 36.4 Floating-point performance is limited by I I Instruction issue rate Entry rate of results into registers Roland Ibbett Instruction Level Parallelism - a historical perspective Parallel Function Units The Floating-point Arithmetic Problem - 3 I I How can architectural performance (FLOPS/CLOCK rate) be increased? Stop issuing so many instructions I I many FLOPS per instruction VECTOR instructions I Multiple register entry paths I CRAY-1 I FL-pt instructions are of the form: Vi = Vj ± Vk Vi = Vj × Sk Si = Sj ± Sk Roland Ibbett Instruction Level Parallelism - a historical perspective Vector Processors Cray-1 Processor Organisation Roland Ibbett Instruction Level Parallelism - a historical perspective Vector Processors The Cray-1 Scoreboard I An instruction is issued only when it is guaranteed to complete: I A, S registers Only 1 result per clock may be entered into registers, e.g. a 3-clock instruction with an S result register cannot be issued in the clock following the issue of a 4-clock S instruction I V registers Separate input multiplexers for each V register allow each to receive 1 result per clock Roland Ibbett Instruction Level Parallelism - a historical perspective Vector Processors Reservations I I Each register has a Reservation Bit A and S registers I I I set when an instruction is issued which will deliver a result to the register reset when result is entered V registers I I I set when an instruction is issued that will deliver results to the register OR when an instruction is issued that reads the register (V register technology allowed only one read or write access in 1 clock cycle) reset when last element is read/written Roland Ibbett Instruction Level Parallelism - a historical perspective Vector Processors Exception: I as a data element arrives it can also be routed back as an input to a function unit: Chaining I Example: I V0 ← Memory V1 ← V0 × S1 V3 ← V1 + V2 Roland Ibbett Instruction Level Parallelism - a historical perspective Vector Processors Roland Ibbett Instruction Level Parallelism - a historical perspective Vector Processors - Cray1 Performance Roland Ibbett Instruction Level Parallelism - a historical perspective Vector Processors - Cray1 Performance Roland Ibbett Instruction Level Parallelism - a historical perspective Superscalar Processors In a superscalar processor, multiple instructions are issued in one cycle. An example system is the Alpha processor: Roland Ibbett Instruction Level Parallelism - a historical perspective Superscalar Processors I Instruction Unit (Ibox) I I I I I I fetches instructions checks resources maintains state for all pipeline stages to track outstanding writes controls stalls/aborts/restarts issues dual instructions Instruction Issue I An instruction pair can contain one from each of the sets (but only one load/store or branch per pair): Integer Operate Floating Operate Fl-pt Load/Store Integer Load/Store Fl-pt Branch Integer Branch BR/BSR/JSR Roland Ibbett Instruction Level Parallelism - a historical perspective Superscalar Processors Superscalar Processor Features I Multiple issue is an implementation feature: I I I I Alpha 21064 is dual issue Alpha 21264 issues 6 instructions per cycle i.e. instruction format is unchanged (c.f. VLIW) BUT: instructions must have certain features: I I fixed length branches on register values, not CCs Roland Ibbett Instruction Level Parallelism - a historical perspective VLIW Processors (VLIW = Very Long Instruction Word) I multiple instructions, fixed format I requires complex compiler optimisation e.g. Intel-HP IA-64* I I I I I 128-bit word 3 x 32-bit instructions + template template indicates instruction dependencies features include: I I I Predication Control Speculation ∗“The IA-64 Architecture at Work”, Carole Dulong, IEEE Computer, Vol 31, No 7, July 1998 Roland Ibbett Instruction Level Parallelism - a historical perspective VLIW Processors Predication I I Predicate: binary tag that permits conditional execution of an instruction value depends on the outcome of a conditional statement I I I P = true - instruction executes normally P = false - instruction is issued but results are not written to registers or memory IA-64 uses a full predication model I Compiler can append a predicate to all instructions Roland Ibbett Instruction Level Parallelism - a historical perspective VLIW Processors if-then-else Roland Ibbett Instruction Level Parallelism - a historical perspective Simulation Model Roland Ibbett Instruction Level Parallelism - a historical perspective SIMD Array Systems I An alternative to the temporal parallelism of pipelined vector processing is the use of an array of processing elements (PEs) to provide spatial parallelism I In an SIMD array system multiple PEs execute data instructions I PEs operate in lockstep, controlled by an array control unit (ACU), but can be individually disabled I The ACU executes scalar and program control instructions and issues SIMD instructions to the array I PEs are typically interconnected by a NEWS network I An early example was the ICL DAP I Later SIMD machines include the TMC Connection Machines Roland Ibbett Instruction Level Parallelism - a historical perspective ICL DAP Architecture Roland Ibbett Instruction Level Parallelism - a historical perspective ICL DAP Processing Element Roland Ibbett Instruction Level Parallelism - a historical perspective ICL DAP Store Organisation Roland Ibbett Instruction Level Parallelism - a historical perspective DAP Arithmetic Modes Roland Ibbett Instruction Level Parallelism - a historical perspective The TMC Connection Machine Roland Ibbett Instruction Level Parallelism - a historical perspective SIMD Simulation Model www.icsa.inf.ed.ac.uk/research/groups/hase/models/simd Roland Ibbett Instruction Level Parallelism - a historical perspective SIMD Instructions in µprocessors I An example is Pentium III SSE instructions I I I I I I I SSE = Streaming SIMD Extensions Instruction set contains new data type: 128-bit word of four 32-bit floating-point operands Processor contains eight 128-bit SSE registers packed instructions perform four operations simultaneously scalar instructions operate on l.s. operand Designed for use in 3D graphics applications Most systems offering high performance graphics use Graphics Processing Units as co-processors I I GPU’s are essentially SIMD systems HPC systems are starting to use GPUs Roland Ibbett Instruction Level Parallelism - a historical perspective Amdahl’s Law I Assume proportion p of a program is executed in parallel I Proportion executed serially = (1 − p) I If Ts = time to execute with no parallelism, then time to execute the same code with degree of parallelism = N is I Ts + (1 − p).Ts N maximum speedup (Ts /Tp ) is thus N and the relative speed-up S/N is Tp = p. I I S Ts Ts = = N NTp pTs + N(1 − p)Ts = 1 p + N(1 − p) Roland Ibbett Instruction Level Parallelism - a historical perspective Amdahl’s Law 1 0.9 N=4 N = 16 N = 64 N = 128 0.8 0.7 0.6 Relative 0.5 speed-up 0.4 0.3 0.2 0.1 0 0 0.2 0.4 0.6 0.8 Degree of parallelisation Roland Ibbett 1 Instruction Level Parallelism - a historical perspective Where Next? I Moore’s Law I I I I Use of Silicon Real Estate I I I The capacity of memory chips quadruples every 3 years Since much of this increase has been due to smaller silicon feature sizes, processor speeds have increased and processor architectures have also become more complex When will Moore’s Law stop working? High Performance Computing High Throughput Computing Has ILP come to the end of the road? I I problem of branches will not go away memory wall problem I GPUs and chip multiprocessors I Multithreaded architectures - Sun Niagara Roland Ibbett Instruction Level Parallelism - a historical perspective