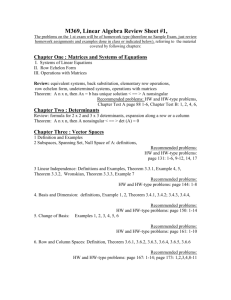

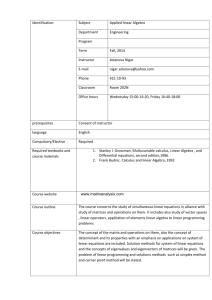

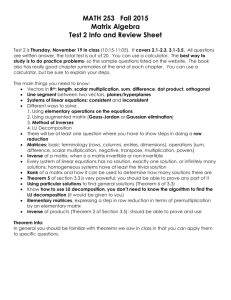

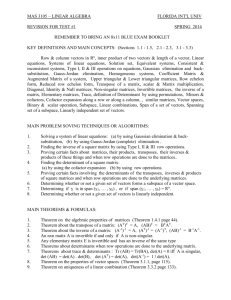

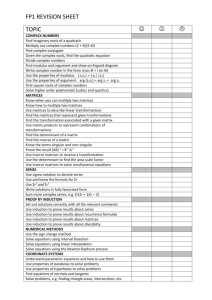

Elementary Linear Algebra
