ADVANCING TOPIC ONTOLOGY LEARNING THROUGH TERM EXTRACTION

Blaž Fortuna (1), Nada Lavrač (1, 2), Paola Velardi (3)

(1) Jožef Stefan Institute, Jamova 39, 1000 Ljubljana, Slovenia

(2) University of Nova Gorica, Vipavska 13, 5000 Nova Gorica, Slovenia

(3) Università di Roma “La Sapienza”, 113 Via Salaria, Roma RM 00198, Italy

Tel: +386 1 4773900; e-mail: Blaz.Fortuna@ijs.si

ABSTRACT

This paper presents a novel methodology for topic ontology learning from text documents. The proposed methodology, named OntoTermExtraction, is based on OntoGen, a semi-automated tool for topic ontology construction, upgraded by using an advanced terminology extraction tool in an iterative, semi-automated ontology construction process. This process consists of (a) document clustering to find the nodes in the topic ontology, (b) term extraction from document clusters, (c) populating the term vocabulary and keyword extraction, and (d) choosing the concept names by comparing the best-ranked terms with the extracted keywords. The approach is illustrated on a case study analysis of the ILPNet2 publications data.

1 Introduction

OntoGen [1, 2] is a semi-automated, data-driven ontology construction tool, focused on the construction and editing of topic ontologies. In a topic ontology, each node is a cluster of documents, represented by keywords (topics), and nodes are connected by relations (typically, the SubConcept-Of relation). The system combines text mining techniques with an efficient user interface aimed at reducing the user’s time and the complexity of ontology construction. In this way it presents a significant improvement over existing manual and relatively complex ontology editing tools, such as Protégé [3], whose use is hindered by the lack of ontology engineering skills of the domain experts constructing the ontology.

Concept naming suggestion (i.e. description of a document cluster through a set of relevant terms) plays a central part in the OntoGen system. Concept naming helps the user in evaluating clusters and organizing them hierarchically. This facility is provided by employing unsupervised and supervised methods for generating the suggestions.

Despite the well-elaborated and user-friendly approach to concept naming currently provided by OntoGen, the approach was limited to single-word keyword suggestions and by the use of very basic text lemmatization in the OntoGen text preprocessing phase.

This paper aims at improving the ontology construction process through improved concept naming, using terminology extraction as implemented in the advanced TermExtractor tool [7,8]. The improved ontology construction process, proposed in this paper, consists of the following steps:

(a) document clustering to find the nodes in the topic ontology,

(b) terminology extraction from document clusters,

(c) population of the terminology vocabulary and advanced keyword extraction, and

(d) choice of concept names by comparing the best-ranked terms with the extracted keywords.

The proposed approach is illustrated on a case study analysis of the ILPNet2 publications database [4,5], a database of publications in the area of Inductive Logic Programming, extensively gathered for the period of about 20 years.

The paper is structured as follows. Section 2 describes the ILPNet2 domain used to illustrate the proposed approach to ontology construction. Section 3 describes the background technologies, as implemented in the OntoGen and TermExtractor tools. Section 4 presents the proposed methodology, through a detailed description of the individual steps of the advanced ontology construction process, illustrated by the results achieved in the analysis of the ILPNet2 database.

2 The ILPnet2 database

The domain we analyzed is the scientific publications database of the ILPnet2 Network of Excellence in Inductive Logic Programming [4]. ILPNet2 consisted of 37 project partners composed mainly of universities and research institutes. The entities we analyze are ILP publications. The ILPnet2 database is publicly available on the Web and contains information about ILP publications between the years 1971 and 2003. The publication data is in the BibTeX format, available in files at http://www.cs.bris.ac.uk/~ILPnet2/Tools/Reports/Bibtexs/2003, ... (one file for each year 2003, 2002, …).

The first stage of the data-driven ontology construction process is data acquisition and preprocessing. The data was acquired with the wget utility and converted into the XML format. For easier data management in the exploratory analysis of the social network of authors of ILP publications [5], it was convenient to put the data into a relational database format, using the Microsoft SQL Server.

One of the tasks accompanying the database population was the normalization of authors’ names. While this was crucially needed for social network analysis (described in [5]), this step is not needed for the experiments in ontology construction described in this paper, as ontology construction uses only document titles and abstracts, preprocessed using a predefined list of stop-words and the Porter stemmer.
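The preprocessing of titles and abstracts mentioned for the ILPnet2 data (stop-word removal followed by stemming) could be sketched roughly as below. This is only an illustration under stated assumptions: the stop-word list is a tiny hypothetical sample (the predefined list is not given in the paper), and `crude_stem` is a simple suffix stripper used as a stand-in, not an implementation of the Porter algorithm.

```python
import re
from typing import List

# Tiny illustrative stop-word list; the predefined list used in the paper is not given.
STOP_WORDS = {"a", "an", "and", "for", "from", "in", "of", "on", "the", "to", "with"}

# Suffixes removed by the stand-in stemmer, tried in this order (longest first).
SUFFIXES = ("ations", "ation", "ings", "ing", "es", "ed", "s")


def crude_stem(word: str) -> str:
    """Strip at most one common suffix (a stand-in for, not a port of, Porter)."""
    for suffix in SUFFIXES:
        # Keep at least three characters so that short words survive intact.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text: str) -> List[str]:
    """Lowercase, keep alphabetic tokens, drop stop-words, then stem."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [crude_stem(t) for t in tokens if t not in STOP_WORDS]


if __name__ == "__main__":
    print(preprocess("Learning logic programs from the examples"))
```

In practice a full Porter stemmer would replace `crude_stem`; the surrounding pipeline would stay the same.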

3 Background technologies

3.1 OntoGen

The two main characteristics of the OntoGen system [1,2,6] are the following.

Semi-Automatic. The system is an interactive tool that aids the user during the ontology construction process. It suggests concepts, relations between the concepts, and concept names, automatically assigns instances to the concepts, visualizes instances within a concept and provides a good overview of the ontology to the user through concept browsing and various kinds of visualizations. At the same time the user is always in full control of the system and can affect the ontology construction by accepting or rejecting the system’s suggestions or manually editing the ontology.

Data-Driven. Most of the aid provided by the system is based on the underlying data, provided by the user typically at the beginning of the ontology construction process. The data reflects the structure of the domain for which the user is building the ontology. The data is usually a document corpus, where ontological instances are either documents themselves or named entities occurring in the documents. The system supports automated extraction of instances (used for learning concepts) and co-occurrences of instances (used for learning relations between the concepts) from the data.

Major features of the system serve one or both of the two major design goals of OntoGen: (1) visualization and exploration of existing concepts from the ontology, and (2) addition of new concepts or modification of existing concepts using simple and straightforward machine learning and text mining algorithms.

The main window of the system (see Figure 1) provides multiple views of the ontology. A tree-based view is intuitive for most users and is a natural way to represent a concept hierarchy. This view is used to show the folder structure and as a visualization offering a one-glance view of the whole ontology. Each concept from the ontology is further explained by the most informative keywords describing the target concept, automatically extracted by employing unsupervised and supervised learning methods.

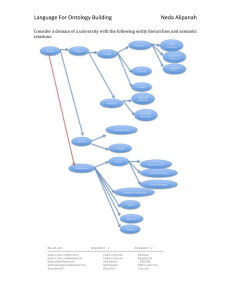

A sample ontology in the form of a tree-based concept hierarchy is shown in Figure 2. Both the first and the second level of the concept hierarchy were constructed using the k-means clustering algorithm: the first level was split into 7 concepts and each of these concepts was then further split into three sub-concepts. The hierarchical structuring is user-triggered. At each level, k-means is invoked for various user-defined values of k; the user then selects the preferred k, and the documents are divided into k sub-clusters accordingly.
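The clustering step described above can be sketched as follows. This is a minimal sketch and not OntoGen's implementation: it assumes documents are already tokenized, uses TF-IDF weighting with cosine similarity, and all function names (`tfidf_vectors`, `kmeans`, and the helpers) are hypothetical.

```python
import math
import random
from collections import Counter, defaultdict
from typing import Dict, List

Vector = Dict[str, float]


def normalize(vec: Vector) -> Vector:
    """Scale a sparse vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {w: v / norm for w, v in vec.items()} if norm else dict(vec)


def tfidf_vectors(docs: List[List[str]]) -> List[Vector]:
    """Turn tokenized documents into unit-length TF-IDF vectors."""
    n = len(docs)
    df = Counter(word for doc in docs for word in set(doc))
    return [
        normalize({w: c * math.log(n / df[w]) for w, c in Counter(doc).items()})
        for doc in docs
    ]


def cosine(a: Vector, b: Vector) -> float:
    """Dot product, which equals cosine similarity for unit-length vectors."""
    if len(a) > len(b):
        a, b = b, a
    return sum(v * b.get(w, 0.0) for w, v in a.items())


def kmeans(vectors: List[Vector], k: int, iters: int = 20, seed: int = 0) -> List[int]:
    """Assign each vector to the most similar of k centroids until labels stabilize."""
    rng = random.Random(seed)
    centroids = [dict(v) for v in rng.sample(vectors, k)]
    labels = [-1] * len(vectors)
    for _ in range(iters):
        new_labels = [
            max(range(k), key=lambda c: cosine(v, centroids[c])) for v in vectors
        ]
        if new_labels == labels:
            break
        labels = new_labels
        for c in range(k):
            total: Dict[str, float] = defaultdict(float)
            for v, lab in zip(vectors, labels):
                if lab == c:
                    for w, x in v.items():
                        total[w] += x
            if total:  # keep the old centroid if the cluster became empty
                centroids[c] = normalize(total)
    return labels


if __name__ == "__main__":
    docs = [["logic", "program"], ["logic", "program", "clause"],
            ["decision", "tree"], ["decision", "tree", "split"]]
    print(kmeans(tfidf_vectors(docs), k=2))
```

To mimic the user-triggered procedure, `kmeans` would be run for several values of `k` on the documents of the selected concept, and the user would keep the partition that looks most meaningful.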

While this procedure of ontology construction is elegant and simple for the user, quite some effort is needed to understand the content and the meaning of the selected concepts. This is especially striking when comparing the second level concepts, for example the sub-concepts of the concept named logic_program, program, and inductive_logic in Figure 2 with the sub-concepts of the concept logic program in Figure 3, which shows the concept hierarchy developed by the novel concept naming methodology based on TermExtractor.

Figure 1. The user gets suggestions for the sub-concepts of the selected concept (left bottom part); the ontology is visualized as a tree-based concept hierarchy in a textual mode (left upper part) and in a graphical mode (right part).

3.2 TermExtractor

The TermExtractor tool [7,8] for automatic extraction of terms (possibly consisting of several words, as opposed to single keywords) from documents works as follows. Given a collection of documents from the desired domain, TermExtractor first extracts a list of candidate terms (frequent multi-word expressions). In the second step it evaluates each of the candidate terms using several scores, which are then combined, and the candidates are ranked according to the combined score. The output is the set of candidates whose score exceeds a given threshold.

Documents from contrast domains are used as extra input for term evaluation and serve as a control group for measuring the term significance. The following scores are used to evaluate candidate terms in the second step (normalized score values are in the [0,1] interval):

Domain relevance is high if the term is significantly more frequent in the domain of interest than in other domains.

Domain consensus is high if the term is used consistently across the documents from the domain.

Lexical cohesion is high if the words composing the term are found more frequently within the term than alone in the documents.

Structural relevance is high for terms that are emphasized in the documents (e.g. appear in the title).

Miscellaneous: a set of heuristics is used to remove generic modifiers (e.g. "large" in "large knowledge base").

The combined score is a weighted convex combination of the individual scores.
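As a rough illustration of the ranking step, the sketch below combines per-term scores with a weighted convex combination (non-negative weights summing to 1) and keeps the candidates whose combined score exceeds a threshold. The weights, the score names and the example values are hypothetical; the weights actually used by TermExtractor are not stated here.

```python
from typing import Dict, List, Tuple

# Hypothetical weights; they only need to be non-negative and sum to 1.
WEIGHTS: Dict[str, float] = {
    "domain_relevance": 0.3,
    "domain_consensus": 0.3,
    "lexical_cohesion": 0.2,
    "structural_relevance": 0.2,
}


def combined_score(scores: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted convex combination of the individual scores (each in [0, 1])."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * scores[name] for name in weights)


def select_terms(
    candidates: Dict[str, Dict[str, float]], threshold: float
) -> List[Tuple[str, float]]:
    """Rank candidates by combined score and keep those above the threshold."""
    ranked = sorted(
        ((combined_score(s), term) for term, s in candidates.items()), reverse=True
    )
    return [(term, score) for score, term in ranked if score > threshold]


if __name__ == "__main__":
    # Illustrative score values only, not taken from the paper.
    candidates = {
        "term a": {"domain_relevance": 1.0, "domain_consensus": 0.5,
                   "lexical_cohesion": 0.5, "structural_relevance": 0.0},
        "term b": {"domain_relevance": 0.1, "domain_consensus": 0.1,
                   "lexical_cohesion": 0.1, "structural_relevance": 0.1},
    }
    print(select_terms(candidates, threshold=0.5))
```

Because the weights are convex and every individual score lies in [0,1], the combined score also lies in [0,1], which keeps a single threshold meaningful across terms.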

4 OntoTermExtraction methodology

4.1 Motivation

There are several ways in which a vocabulary can be acquired. In some domains there already exist established vocabularies (e.g. EUROVOC used for annotating European legislation, AGROVOC used for annotating agricultural documents, ASFA used within UN FAO, DMOZ created collaboratively to categorize web pages, etc.). Another option is automatic extraction of terms from documents, which is especially attractive for the domains where there is no established vocabulary.

Figure 2. Ontology constructed by the standard OntoGen approach from ILPnet2 publications data, using the k-means clustering algorithm without any help from the pre-calculated vocabulary extracted by TermExtractor.

Concept and concept name suggestions play a central part in every ontology construction system. OntoGen provides unsupervised and supervised methods for generating such suggestions [1,2,6]. Unsupervised learning methods automatically generate a list of possible sub-concepts for the currently selected concept by using k-means clustering and latent semantic indexing (LSI) techniques. Supervised learning methods, on the other hand, require the user to have a rough idea about a new topic¹, which is identified through a query returning the documents. The system automatically identifies the documents that correspond to the topic, and the selection can be further refined by user-computer interaction through an active learning loop, using a machine learning technique for semi-automatic acquisition of the user's knowledge.

While OntoGen originally used only the input documents for proposing concept suggestions and term extraction techniques for providing help at naming the concepts, it should be noted that the whole process can be significantly improved by constructing a predefined vocabulary from the domain of the ontology under construction. The vocabulary can be used to support the user during hierarchical ordering of concepts, and to create concept descriptions, thus helping concept evaluation.

¹ Hereafter we name concepts the document clusters generated by the k-means clustering algorithm, while a topic is a description of the concept, e.g. a term or a set of terms that best identify the document cluster.

4.2 Steps in the proposed OntoTermExtraction methodology for concept naming

The advanced ontology construction process, proposed in this paper, consists of the following steps:

(a) document clustering to find the nodes in the ontology (described in Section 3.1),

(b) terminology extraction from document clusters, using TermExtractor (described in Section 3.2),

(c) populating the term vocabulary and keyword extraction (described in Section 4.3),

(d) choosing the concept name (topic) by comparing the best-ranked terms with the extracted keywords (described in the ILPNet2 application in Section 5).

4.3 Populating the terms and keyword extraction

For each term from the vocabulary, a classification model is needed which can predict whether the term is relevant for a given document cluster. In this paper we use a centroid-based nearest neighbor classifier [6], which was developed for fast classification of documents into taxonomies. We use this approach since it scales well to larger collections of terms (hundreds of thousands of terms). A training set of documents is needed to generate a classification model. In some cases vocabularies already come with a set of documents annotated by the terms. In this case these documents can be used for training the term models. When no annotated documents are available, information retrieval can be applied for finding documents to populate the terms.
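The idea of a centroid-based nearest neighbor classifier for terms can be sketched as below. This is a simplified illustration and not the classifier of [6]: it assumes tokenized documents, uses plain term-frequency vectors instead of TF-IDF for brevity, and the names `train_term_models` and `rank_terms` are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Mapping, Tuple

Vector = Dict[str, float]


def unit(vec: Mapping[str, float]) -> Vector:
    """Scale a sparse vector to unit length (an all-zero vector becomes empty)."""
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {w: v / norm for w, v in vec.items()} if norm else {}


def centroid(docs: List[List[str]]) -> Vector:
    """Unit-length sum of the unit-length bag-of-words vectors of the documents."""
    total: Dict[str, float] = defaultdict(float)
    for tokens in docs:
        for w, v in unit(Counter(tokens)).items():
            total[w] += v
    return unit(total)


def train_term_models(training: Dict[str, List[List[str]]]) -> Dict[str, Vector]:
    """One centroid per term, built from the documents that populate the term."""
    return {term: centroid(docs) for term, docs in training.items()}


def rank_terms(
    cluster_docs: List[List[str]], models: Dict[str, Vector]
) -> List[Tuple[str, float]]:
    """Rank terms by cosine similarity between cluster centroid and term centroid."""
    c = centroid(cluster_docs)
    scored = [
        (term, sum(v * model.get(w, 0.0) for w, v in c.items()))
        for term, model in models.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    models = train_term_models({
        "decision tree": [["decision", "tree", "split"], ["tree", "node"]],
        "inverse resolution": [["inverse", "resolution", "clause"]],
    })
    print(rank_terms([["tree", "split", "node"]], models))
```

Classification then costs one sparse dot product per term, which is one plausible reason such a model remains practical for very large vocabularies.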

In this paper we propose using two different techniques to populate terms extracted by TermExtractor.

Let T be the set of terms automatically extracted from document clusters:

The first technique uses the ILPnet2 collection. Each term t ∈ T was issued in turn as a query and the top-ranked documents (according to cosine similarity, using TFIDF word weighting) were used to populate the term.

The second technique did not use the ILPnet2 collection and relied on Google web search instead [9]. A query was generated from each term t by taking its words and attaching an extra keyword "ILP" to limit the search to ILP-related web pages. For example, if t is "inductive logic programming", the query is "ILP inductive logic programming". The query is then sent to Google and snippets of the returned search results are used to populate the term.
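Both population techniques can be illustrated with a short sketch. It makes several assumptions: documents are raw strings tokenized on alphabetic characters, the IDF formula is a common smoothed variant chosen here for convenience, and the function names are hypothetical. The web-search call itself is not shown; only the query string is built.

```python
import math
import re
from collections import Counter
from typing import Dict, List

Vector = Dict[str, float]


def tokenize(text: str) -> List[str]:
    """Lowercase and keep alphabetic tokens only."""
    return re.findall(r"[a-z]+", text.lower())


def unit(vec: Vector) -> Vector:
    """Scale a sparse vector to unit length (an all-zero vector becomes empty)."""
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {w: v / norm for w, v in vec.items()} if norm else {}


def populate_from_collection(term: str, docs: List[str], top_n: int = 3) -> List[int]:
    """Indices of the top_n documents most similar to the term (TF-IDF, cosine)."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(w for tokens in tokenized for w in set(tokens))
    # Smoothed IDF (an assumption), so words found in every document keep some weight.
    idf = {w: math.log((1 + n) / (1 + c)) + 1.0 for w, c in df.items()}

    def tfidf(tokens: List[str]) -> Vector:
        tf = Counter(t for t in tokens if t in idf)
        return unit({w: c * idf[w] for w, c in tf.items()})

    query = tfidf(tokenize(term))
    sims = [
        sum(v * doc.get(w, 0.0) for w, v in query.items())
        for doc in map(tfidf, tokenized)
    ]
    ranked = sorted(range(n), key=lambda i: sims[i], reverse=True)
    return ranked[:top_n]


def build_web_query(term: str, domain_keyword: str = "ILP") -> str:
    """Prefix the term with a domain keyword to focus the web search."""
    return f"{domain_keyword} {term}"


if __name__ == "__main__":
    docs = [
        "inductive logic programming systems",
        "decision tree learning",
        "logic programs and inverse resolution",
    ]
    print(populate_from_collection("inductive logic programming", docs, top_n=2))
    print(build_web_query("inductive logic programming"))
```

The documents (or snippets) returned for a term then serve as its training set for the term model.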

The ILP vocabulary prepared in this way was used as an extra input to OntoGen, besides the collection of the articles. We tried both approaches but in this report we only show the results of the second technique, because retrieval from the whole web turned out to be a richer resource than just the ILPnet2 collection. Details on how the vocabulary looked and how it was applied in the ILP ontology construction are described in Section 5.

5 ILPnet2 vocabulary and ontology construction

5.1 Vocabulary extraction

As described in the previous section, we used TermExtractor to automatically extract the vocabulary for the ILP domain from the ILPnet2 collection of ILP publications. Table 1 shows the 11 top-ranked terms (out of 97) extracted from ILPNet2 documents.

Table 1: Top-11 terms extracted from ILPNet2

| Term | Weight | Domain Relevance | Domain Consensus | Lexical Cohesion |
|---|---|---|---|---|
| inductive logic | 0.928 | 1.000 | 0.968 | 0.557 |
| logic programming | 0.924 | 1.000 | 0.988 | 0.293 |
| inductive logic programming | 0.893 | 1.000 | 0.966 | 0.181 |
| background knowledge | 0.825 | 1.000 | 0.737 | 0.835 |
| logic program | 0.824 | 1.000 | 0.867 | 0.203 |
| experimental result | 0.785 | 1.000 | 0.777 | 0.221 |
| machine learning | 0.776 | 1.000 | 0.691 | 0.672 |
| data mining | 0.757 | 1.000 | 0.572 | 1.000 |
| refinement operator | 0.742 | 1.000 | 0.613 | 0.714 |
| decision tree | 0.722 | 1.000 | 0.557 | 0.894 |
| inverse resolution | 0.718 | 1.000 | 0.594 | 0.684 |

All the terms were populated using Google web search. As an example, here are the top 5 snippets that were returned for the query "ILP predictive accuracy":

Boosting Descriptive ILP for Predictive Learning in Bioinformatics -- general, this means that a higher predictive accuracy can be achieved. Thirdly, although some predictive ILP systems may produce multiple classification ...

Imperial College Computational Bioinformatics Laboratory (CBL) -- Results on scientific discovery applications of ILP are separated below ... Progol's predictive accuracy was equivalent to regression on the main set of 188 ...

Evolving Logic Programs to Classify Chess-Endgame Positions -- indicate that in the cases where the ILP algorithm performs badly, the introduc-. tion of either union or crossover increases predictive accuracy. ...

Estimating the Predictive Accuracy of a Classifier -- the predictive accuracy of a classifier. We present a scenario where meta- ..... Workshop on Data Mining, Decision Support, Meta-Learning and ILP, 2000. ...

-*-BibTeX ... -- An outline of the theory of ILP is given, together with a description of Golem .... Performance is measured using both predictive accuracy and a new cost ...

For each query the snippets of the first 1000 results were used. The snippets served as input for term modeling, described in Section 4.3. The models generated for each term, using this data, were then used for generating the concept suggestions and name suggestions in OntoGen.

5.2 Ontology learning

First the ILPnet2 collection and vocabulary were loaded into OntoGen. The collection was imported as a directory of files, where each document was a separate ASCII text file (File -> New ontology -> Folder). The vocabulary was loaded using the Tools -> Context menu.

After experimenting with different numbers of clusters and with the help of concept visualization, a partition into seven concepts using the k-means clustering algorithm was chosen. For all seven concepts, the first-ranked term suggested from the vocabulary extracted by TermExtractor was selected as the concept name. This indicates that term extraction and population succeeded in ranking the terms in a meaningful way. This is also illustrated by the following list of discovered concepts, each given with the best-ranked concept name proposed by TermExtractor, followed by the second best-ranked concept name (in parentheses) and the list of most important keywords, as chosen originally by OntoGen:

Learning system (learning algorithm) -- learning, system, rule, language, methods, machine_learning, machine, approach, ilp, grammars

Decision tree (logical decision tree) -- order, inductive, trees, order_logic, discovery, decision, application, decision_trees, database, experiments

Structured data (chemical structure) -- structural, data, machine, predict, examples, relations, machine_learning, mining, definitions, knowledge

Clausal theory (theory revision) -- theories, refinement, inverse, resolution, predicates, operators, inverse_resolution, invention, refinement_operators, revision

Relational database (inductive learning) -- ilp, generalization, relations, model, algorithm, constraints, integrating, rule, agent, evaluation

Figure 3 Ontology constructed on top of ILPnet2 dataset using the pre-calculated terminology.

By checking the publication years of articles from different concepts it was possible to analyse the evolution of topics. For example, we can notice that the most frequent years in the concepts clausal theory, concept learning and logic program were around 1994, the concepts structured data and learning system were most frequent around the year 2000, and the concepts decision tree and relational database appear to be the most recent, in the years following 2000. Each of the concepts was further split into sub-concepts using suggestions from the vocabulary, which resulted in the two-level taxonomy shown in Figure 3.

6 Conclusions

We presented a novel concept naming methodology applicable in advanced ontology construction, and illustrated the improved concept naming facility on the ontology of topics extracted from the ILPNet2 scientific publications database. Concept naming suggestions support the user in the tasks of concept discovery and concept naming, and keep the constructed ontology more consistent and aligned with the established terminology in the domain.

Acknowledgement This work was supported by the Slovenian Research Agency and the IST Programme of the EC under NeOn (IST-4-027595-IP), PASCAL (IST-2002-506778) and ECOLEAD.

References

[1] B. Fortuna, D. Mladenić, M. Grobelnik. Semi-automatic construction of topic ontologies. In: Ackermann et al. (eds.) Semantics, Web and Mining. LNCS (LNAI), vol. 4289, pp. 121–131. Springer, 2006.

[2] B. Fortuna, M. Grobelnik, and D. Mladenić. Semi-automatic Data-driven Ontology Construction System. In: Proc. of the 9th International multi-conference Information Society IS-2006, Ljubljana, Slovenia, 2006.

[3] The Protégé project 2000. Available online at http://protege.stanford.edu.

[4] ILPNet2 publications database. Available online at http://www.cs.bris.ac.uk/~ILPnet2/

[5] S. Sabo, M. Grčar, D.A. Fabjan, P. Ljubič, N. Lavrač. Exploratory analysis of the ILPnet2 social network. In Proc. of the 10th International multi-conference Information Society IS-2006, Ljubljana, Slovenia, 2007.

[6] M. Grobelnik, D. Mladenić. Simple classification into large topic ontology of web documents. In Proc. of the 27th International Conference Information Technology Interfaces, Dubrovnik, Croatia, pp. 188–193, 2005.

[7] The TermExtractor tool. Available online through a Web interface at http://lcl2.uniroma1.it/termextractor.

[8] F. Sclano and P. Velardi. TermExtractor: A Web application to learn the common terminology of interest groups and research communities. In Proc. of the 9th Conf. on Terminology and Artificial Intelligence (TIA 2007), Sophia Antipolis, France, 2007.

[9] M. Grcar, E. Klien. Using Term-matching Algorithms for the Annotation of Geo-services. Web Mining 2.0, Workshop at ECML 2007.