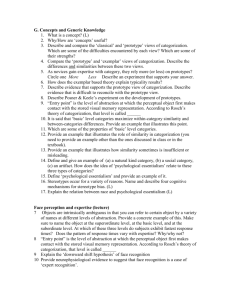

Simulating the Evolution of Color Categories using Individual Reinforcement Learning Models in Populations of Artificial Agents

Jungkyu Park
Mentors: Sean Tauber, Kimberly A. Jameson, Louis Narens, Natalia Komarova, Dominik Wodarz

Languages are dynamic meaning systems used for pragmatic communication. They take form and evolve primarily for purposes of accurate information sharing and communication between users. Many pragmatic factors affect how shared meaning and communication systems evolve. One way to investigate such factors involves simulating communication interactions between individuals in artificial agent populations. This project investigates color categorization communications in silico, using reinforcement learning communication games to examine how different population categorization solutions can be generated, stabilized, and evolved from interactions within artificial agent populations. In particular, the project examines the consequences of manipulating network structures of communicating agents, variations in agent populations, and how these manipulations impact population categorization solutions that emerge. Results from a variety of simulation configurations support two conclusions: (i) communication games that occur within fixed local-neighborhood population networks increase the likelihood that categorization solutions will achieve a stochastically stable equilibrium state, and (ii) optimal categorization solutions, in some situations, are improved by using dynamic models with features that simulate agent “die-off” and “birth” within a population. These results provide important new perspectives for analyzing results from simulated categorization solutions and for understanding the effects of simulation parameters leading to optimal results.
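
To make the ingredients named in the abstract concrete, here is a minimal sketch of a reinforcement learning communication game on a fixed local-neighborhood network with optional agent turnover. It is a deliberately simplified naming game, not the project's actual model: the ring topology, the urn-style reinforcement rule, and every name and parameter (`simulate`, `new_agent`, `radius`, `turnover`, the chip and term counts) are assumptions introduced only for illustration.

```python
import random

def new_agent(n_chips, n_terms):
    # Assumed representation: one urn of term weights per color chip,
    # with every (chip, term) pair starting at the same weight.
    return [[1.0] * n_terms for _ in range(n_chips)]

def choose_term(agent, chip, rng):
    # Sample a term with probability proportional to its current weight.
    weights = agent[chip]
    return rng.choices(range(len(weights)), weights=weights)[0]

def simulate(n_agents=10, n_chips=8, n_terms=3, rounds=20000,
             radius=1, turnover=0.0, seed=0):
    """Run the toy game; requires n_agents > radius so that a speaker
    is never paired with itself on the ring."""
    rng = random.Random(seed)
    agents = [new_agent(n_chips, n_terms) for _ in range(n_agents)]
    offsets = [d for d in range(-radius, radius + 1) if d != 0]
    successes = 0
    for _ in range(rounds):
        # Fixed local neighborhood: the hearer sits within `radius`
        # positions of the speaker on a ring (hypothetical topology).
        speaker = rng.randrange(n_agents)
        hearer = (speaker + rng.choice(offsets)) % n_agents
        chip = rng.randrange(n_chips)
        s_term = choose_term(agents[speaker], chip, rng)
        h_term = choose_term(agents[hearer], chip, rng)
        if s_term == h_term:
            # Successful communication reinforces the shared term
            # for this chip in both agents.
            agents[speaker][chip][s_term] += 1.0
            agents[hearer][chip][h_term] += 1.0
            successes += 1
        if rng.random() < turnover:
            # "Die-off" and "birth": a random agent is replaced by a
            # naive newcomer with uniform weights.
            agents[rng.randrange(n_agents)] = new_agent(n_chips, n_terms)
    return agents, successes / rounds
```

Calling `simulate(turnover=0.0)` and `simulate(turnover=0.001)` with the same seed gives a rough way to compare a static population against one with agent replacement; the returned agreement rate is the fraction of rounds in which speaker and hearer chose the same term. Whether such a toy reproduces either of the abstract's conclusions is not something this sketch establishes.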