instructions for Option 2


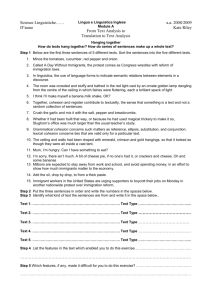

Spring 2011 CS 544 Final Assignment

Given by Eduard Hovy

Write your own text summarizer.

Part 0: Preparation

Step 1: Get 10 texts of the same genre (I recommend news articles). Place each sentence on a new line. Identify 6 Development texts and 4 final Evaluation texts. Set aside the Evaluation texts for Part II.

Part I: Implement a simple text summarization engine (25 points)

The input should be

- a query of M words (0 ≤ M < 4) indicating the reader’s interests
- a document of N sentences (one sentence per line)

and the output should be a summary of 0.3N sentences.

Step 1: Build at least three sentence scoring modules (see below).

Step 2: Build the framework in which the modules fit: accept input (text and query); activate each module; collect its scores for each sentence; at the end, combine the scores for each sentence across all modules; produce output (top-scoring 1/3 of the number of sentences) with their scores; order the sentences as you think they should be ordered; save the result as the summary.

Step 3: Test the framework on the 6 Development texts, and decide how you want to combine the scores to get the best-sounding output summaries. Change your combination formula (and whatever else you want to change).

EXTRA CREDIT if you use a machine learning method to determine optimal score weights.

The modules:

REQUIRED: EITHER

- Choose 2 simple (word/position-based) topic scoring methods (word frequency counting of various forms, position, title, and cue phrase methods, query overlap method). Up to 5 points for each method.
- Choose 1 complex (cross-sentence) topic scoring method (cross-sentence overlaps of various kinds, lexical chains, discourse structure, etc.). Up to 10 points.

OR (hard): Implement the information extraction frame/template method that includes at least 3 frames with at least 4 slots per frame. Up to 20 points.
OPTIONAL: Up to 5 points for redundancy checking, pronoun replacement, and sentence order manipulations that improve the coherence of the summary.

REQUIRED: Up to 5 points for the method of integrating the scores of the various modules. The more advanced the method, the more points: simply adding the various modules’ scores gives 1 point; weighting them in some way is better, but requires an analysis showing how and why the particular weighting factors were determined.

Part II: Implement a summary evaluator (15 points)

The input is an ideal summary and a system summary. The output is the Precision and Recall scores.

Step 1: Build the evaluator: Input is two summaries (ideal, of length A sentences, and system, of length B sentences). First count every sentence in the system summary that also occurs in the ideal summary (= C). Then compute

Recall = (# correct sentences in system output) / (# sentences in ideal summary) = C/A

Precision = (# correct sentences in system output) / (total # sentences in system output) = C/B

(If you want, you can do fancy things with the scoring to take into account the actual system score value for each sentence.)

Step 2: Create three ideal summaries: for the 4 Evaluation texts, extract the N sentences (about 0.3 times the total number for each text) that you consider the most important. Rank them from 1 to N, and save them together with their rank scores. You should use the SAME query when your system generates the summary as when you generate the gold standard. You can pick just one query per text, although of course it’s more fun to have more than one query (and hence more than one summary) per text!

Part III: Apply it! (10 points)

Step 1: Run your summarizer: Feed the 4 Evaluation texts into your summarizer and save its output.

Step 2: Run the evaluator: For each Evaluation text, input your summary (with rank scores) and the system’s summary (with sentence scores) and output the overlap score.
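The Precision and Recall definitions in Part II, Step 1 can be sketched in a few lines of Python. This is only an illustration, not a reference solution: the function name `precision_recall` is hypothetical, and the sketch assumes that two sentences count as the same only when their text is identical after stripping surrounding whitespace. It ignores the rank and score values.

```python
def precision_recall(ideal, system):
    """Return (precision, recall) for two lists of sentences.

    A = number of sentences in the ideal summary
    B = number of sentences in the system summary
    C = number of system sentences that also occur in the ideal summary
    """
    # Assumption: exact string match after trimming whitespace.
    ideal_sentences = [s.strip() for s in ideal if s.strip()]
    system_sentences = [s.strip() for s in system if s.strip()]
    ideal_set = set(ideal_sentences)

    c = sum(1 for s in system_sentences if s in ideal_set)
    a = len(ideal_sentences)
    b = len(system_sentences)

    # Guard against empty summaries to avoid division by zero.
    recall = c / a if a else 0.0
    precision = c / b if b else 0.0
    return precision, recall


if __name__ == "__main__":
    ideal = ["A.", "B.", "C."]
    system = ["A.", "C.", "D.", "E."]
    p, r = precision_recall(ideal, system)
    print(f"Precision = {p:.2f}, Recall = {r:.2f}")
```

In the small example, C = 2, A = 3 and B = 4, so Precision is 2/4 and Recall is 2/3.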
Please email ALL the following to hovy@isi.edu by Saturday May 7:

- The code of each topic scoring module
- A trace-through of at least 2 texts for each topic scoring module (can be the same texts) showing the output scores assigned to each unit
- The code of the score integration module, with a discussion/description of how the weightings (if any) were determined
- The input and final output, with their Precision and Recall scores, of at least 2 texts, including the scores of the output sentences
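For orientation, here is one possible layout of the topic scoring modules and the score integration module listed above, following the Part I framework (accept text and query, run each module, combine the per-sentence scores, keep about 0.3N sentences). It is a minimal sketch under several assumptions, not the expected solution: all function names are hypothetical, the tokenizer is a plain regular expression with no stop-word handling, each module's scores are divided by that module's maximum before weighting, and the weights in the demo are arbitrary placeholders. Only simple word/position-based modules are shown; the complex cross-sentence module is omitted.

```python
import re
from collections import Counter


def tokenize(sentence):
    """Lowercase a sentence and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", sentence.lower())


def frequency_score(sentences, query):
    """Average document-wide frequency of the words in each sentence."""
    counts = Counter(w for s in sentences for w in tokenize(s))
    scores = []
    for s in sentences:
        words = tokenize(s)
        scores.append(sum(counts[w] for w in words) / len(words) if words else 0.0)
    return scores


def position_score(sentences, query):
    """Earlier sentences score higher (a common heuristic for news text)."""
    n = len(sentences)
    return [1.0 - i / n for i in range(n)]


def query_overlap_score(sentences, query):
    """Number of distinct query words that appear in each sentence."""
    query_words = set(tokenize(query))
    return [float(len(query_words & set(tokenize(s)))) for s in sentences]


def normalize(scores):
    """Scale scores so that the largest one is 1.0."""
    top = max(scores, default=0.0)
    return [x / top if top > 0 else 0.0 for x in scores]


def summarize(sentences, query, modules, ratio=0.3):
    """Combine module scores as a weighted sum and keep the top sentences.

    `modules` is a list of (scoring_function, weight) pairs.
    Returns (sentence, combined_score) pairs in original document order.
    """
    totals = [0.0] * len(sentences)
    for module, weight in modules:
        for i, x in enumerate(normalize(module(sentences, query))):
            totals[i] += weight * x
    k = int(round(ratio * len(sentences)))
    best = sorted(range(len(sentences)), key=lambda i: totals[i], reverse=True)[:k]
    return [(sentences[i], totals[i]) for i in sorted(best)]


if __name__ == "__main__":
    document = [
        "The city council approved a new budget on Monday.",
        "The budget raises funding for public schools.",
        "Council members debated the plan for three hours.",
        "Rain is expected later this week.",
        "Schools will receive the new funding in the fall.",
        "A local bakery won a regional award.",
        "The mayor praised the council vote.",
        "Traffic was heavy downtown.",
        "Teachers welcomed the budget decision.",
        "The next council meeting is in June.",
    ]
    # Placeholder weights; the assignment asks for an analysis of how they are chosen.
    modules = [(frequency_score, 1.0), (position_score, 1.0), (query_overlap_score, 1.0)]
    for sentence, score in summarize(document, "schools budget", modules):
        print(f"{score:.2f}\t{sentence}")
```

Keeping every module behind the same `(sentences, query)` signature means a new scoring method can be added to the `modules` list without touching the combination code, and printing each module's raw scores per sentence gives the trace-through requested above.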