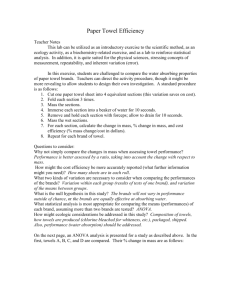



STAT 460 Lecture 15: Review (10/27/2004)

1) Goal: Draw valid conclusions (with a degree of uncertainty) about the relationship between explanatory variable(s) and an outcome variable, in the face of limited experimental resources and the randomness characteristic of the world.

2) One detailed example: an experiment to study the effects of a "teamwork" vs. a "technical" intervention on productivity improvement in small manufacturing firms.

3) Principles of Statistics
a) A statistical view of the world
[Figure: the population and a random sample drawn from it.]
The Population: contains N subjects; its variables are unobserved. Some of this population's parameters: $\mu_{\text{control}}$, $\beta_{0,\text{control}}$, $\beta_{\text{empcnt,control}}$; $\mu_{\text{teamwork}}$, $\beta_{0,\text{team}}$, $\beta_{\text{empcnt,team}}$; $\mu_{\text{technical}}$, $\beta_{0,\text{tech}}$, $\beta_{\text{empcnt,tech}}$; $\sigma^2_{\text{rx only}}$, $\sigma^2_{\text{rx, empcnt}}$, $\sigma^2_{\text{rx only, multiple teachers}}$.
A Sample: contains n randomly chosen subjects, with observed explanatory and outcome variables. Some statistics for this sample: $\bar{X}_{\text{control}}$, $\hat\beta_{0,\text{control}}$, $\hat\beta_{\text{empcnt,control}}$; $\bar{X}_{\text{teamwork}}$, $\hat\beta_{0,\text{team}}$, $\hat\beta_{\text{empcnt,team}}$; $\bar{X}_{\text{technical}}$, $\hat\beta_{0,\text{tech}}$, $\hat\beta_{\text{empcnt,tech}}$; $s^2_{\text{rx only}}$, $s^2_{\text{rx, empcnt}}$, $s^2_{\text{rx only, mult}}$.
Each sample statistic estimates the corresponding population parameter.
b) Hypothesis testing
i) ANOVA: Let μi, for i = 1 to k, be the population mean of the outcome for group i. H0: μ1 = … = μk vs. H1: at least one population mean differs.
ii) Regression: Let β0 be the population value of the intercept and β1 the population value of the slope of a line representing the mean outcome over a range of values of explanatory variable 1. H0: β0 = 0 vs. H1: β0 ≠ 0; H0: β1 = 0 vs. H1: β1 ≠ 0; etc.
iii) ANOVA decision rule: reject H0 if F_experimental > F_critical, which is equivalent to p < α.
iv) Regression decision rule: reject H0 if |t| > t_critical, which is equivalent to p < α.
v) If we choose α = 0.05, then when the null hypothesis is true we will falsely reject it (make a type 1 error) only 5% of the time. (This says little about power: it only guarantees that we will correctly reject the null at least 5% of the time when the null is false.)
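The equivalence of the two decision rules in iii), and the 5% type 1 error rate in v), can be checked by simulation. Below is a minimal Python sketch, assuming k = 3 groups with the example's group sizes (30, 35, 25) and normal data sharing one mean and SD (the values 0.7 and 0.3 are arbitrary, not from the lecture). With k = 3 the numerator df is 2, and then the F tail probability has the closed form P(F > f) = (1 + 2f/ν)^(−ν/2), where ν is the denominator df, so no statistics library is needed. All function names are hypothetical.

```python
import random
import statistics


def one_way_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on raw data."""
    means = [statistics.fmean(g) for g in groups]
    all_values = [y for g in groups for y in g]
    grand_mean = statistics.fmean(all_values)
    k, n_total = len(groups), len(all_values)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def p_value_df1_2(f, df_within):
    """Exact P(F > f) when the numerator df is 2: (1 + 2f/df_within)^(-df_within/2)."""
    return (1 + 2 * f / df_within) ** (-df_within / 2)


def f_critical_df1_2(alpha, df_within):
    """Solve p_value_df1_2(x, df_within) = alpha for the critical value x."""
    return (df_within / 2) * (alpha ** (-2 / df_within) - 1)


def simulate_type1_rate(sizes=(30, 35, 25), alpha=0.05, reps=4000, seed=460):
    """Fraction of null data sets in which H0 is (falsely) rejected."""
    rng = random.Random(seed)
    rejections = mismatches = 0
    for _ in range(reps):
        # H0 is true: every group has the same mean and SD (arbitrary values).
        groups = [[rng.gauss(0.7, 0.3) for _ in range(n)] for n in sizes]
        f, df_between, df_within = one_way_f(groups)
        if df_between != 2:
            raise ValueError("closed-form p-value needs exactly 3 groups")
        reject_by_p = p_value_df1_2(f, df_within) < alpha
        reject_by_f = f > f_critical_df1_2(alpha, df_within)
        mismatches += reject_by_p != reject_by_f  # rules should always agree
        rejections += reject_by_p
    return rejections / reps, mismatches


if __name__ == "__main__":
    rate, mismatches = simulate_type1_rate()
    print("estimated type 1 error rate:", rate)
    print("decision-rule disagreements:", mismatches)
```

The estimated rate should land near 0.05, and the disagreement count should be zero, because the p-value decreases as F increases. For more than three groups the closed form no longer applies, and a library routine such as SciPy's `scipy.stats.f.sf` would be needed.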
c) Characteristics of problems we can deal with so far:
i) A quantitative outcome variable and a categorical explanatory variable (ANOVA).
ii) A quantitative outcome variable with quantitative explanatory variables (regression), or with one explanatory variable of each type (ANCOVA form of regression).
iii) We require the following assumptions to build a specific model that we can analyze:
(1) the outcome is normally distributed with the same variance at each set of explanatory variable values;
(2) the subjects (actually, the errors) are independent;
(3) the explanatory variables can be measured with reasonable accuracy and precision;
(4) for regression, the relationship between the quantitative explanatory variables and the outcome is linear on some scale;
(5) for one-way ANOVA, the k population means can have any values, i.e., there is no set pattern of relationship between the outcome and the explanatory variable.
d) Examples of data we have not learned to deal with:
e) Experiments vs. observational studies
i) In experiments, the treatment assignments are controlled by the experimenter. Randomization balances potential confounding variables across the treatment groups.
ii) In randomized experiments, association can be interpreted as causation.
iii) In observational studies, causation can run in either direction or be due to a third variable.
f) The assumptions (fixed x, independent errors, normality with the same variance σ² for any set of explanatory variable values, plus linearity for regression models) are needed to calculate a "null sampling distribution" for any statistic.
i) This gives the frequency distribution of that statistic over repeated samples when the null hypothesis is true, thus allowing calculation of the p-value.
ii) The sampling distributions under alternative hypotheses require more information and more difficult calculations.
iii) Note that we do not require normality or equal variance for the explanatory variables.
g) The p-value is the probability of getting a sample statistic (e.g., ANOVA F or regression t) that is as extreme as, or more extreme than, the one we got for this experiment if the null hypothesis were true.
h) The one-way ANOVA F value has a particularly nice interpretation. MS_between, in the numerator, estimates σ² from the model if the null hypothesis is true, and something bigger otherwise. MS_within, in the denominator, estimates σ² regardless of whether the null hypothesis is true. So F is around 1 when the null hypothesis is true and bigger otherwise.
i) Degrees of freedom (df) count the amount of information in a statistic by subtracting the number of "constraints" from the number of component numbers in the statistic. Use df to check that an analysis was set up correctly, or to obtain certain information about an analysis from statistical output. E.g., the df of MS_between is k−1 (one less than the number of groups being compared). In the example, 3 treatment groups and 90 firms give 3−1 = 2 df between groups and 90−3 = 87 df within groups.

4) Experimental Design concepts
a) Generalizability
i) Assure that the population from which samples of subjects could be drawn is not too restricted. Assure that treatment and environmental conditions are not too restrictive.
ii) Be more and more careful in stating conclusions as they apply to populations broader and broader than the one the sample came from.
iii) Balance this against increased control for increased power.
b) Power is the chance that the null hypothesis will be rejected for some specific alternative hypothesis. In one-way ANOVA, e.g., power is increased by increasing the F statistic,
$F = \frac{MS_{\text{between}}}{MS_{\text{within}}} \approx \frac{\sigma^2 + n\,(\text{effects})^2}{\sigma^2}$,
where n is the number of subjects per group and "effects" measures how far apart the group population means are. So:
i) Don't study small effects.
ii) Decrease σ² by decreasing subject, treatment, environmental and measurement variation.
iii) Increase the sample size.
c) Interpretability: don't make alternative explanations easy to defend.
i) Use a control.
ii) Use blinding of subject and/or experimenter.
iii) Use randomization to prevent confounding.

5) EDA is used to find mistakes, become familiar with the data and coding, anticipate results, and roughly check for appropriate models.
a) All models: check descriptive statistics for quantitative variables and frequency tables for categorical variables.
b) ANOVA: side-by-side boxplots of the outcome by the categorical explanatory variable.
[Figure: side-by-side boxplots of Productivity by Treatment: control (N=30), teamwork (N=35), technical (N=25).]
c) Regression: scatterplot with the outcome on the y-axis.
d) ANCOVA: scatterplot with separate symbols for each group.
[Figure: scatterplot of Productivity vs. Employee Count, with separate symbols for control, teamwork and technical.]

6) Analysis details
a) ANOVA
Response: Productivity. Treatment: Control (0), Teamwork (1), Technical (2).

The MEANS Procedure, Analysis Variable: Productivity

| Treatment | N Obs | Mean | Std Dev | N | Minimum | Maximum |
|---|---|---|---|---|---|---|
| 0 | 30 | 0.5533333 | 0.2823709 | 30 | -0.0500000 | 0.9900000 |
| 1 | 35 | 0.6237143 | 0.3365402 | 35 | -0.1100000 | 1.2200000 |
| 2 | 25 | 1.0324000 | 0.2769067 | 25 | 0.4400000 | 1.4800000 |

The ANOVA Procedure
Class Level Information: Treatment has 3 levels (values 0, 1, 2). Number of observations: 90.
Dependent Variable: Productivity

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 2 | 3.59417575 | 1.79708787 | 19.54 | <.0001 |
| Error | 87 | 8.00333981 | 0.09199241 | | |
| Corrected Total | 89 | 11.59751556 | | | |

R-Square 0.309909, Coeff Var 42.49257, Root MSE 0.303303, Productivity Mean 0.713778.

| Source | DF | Anova SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Treatment | 2 | 3.59417575 | 1.79708787 | 19.54 | <.0001 |

b) Regression and ANCOVA
The REG Procedure, Model: MODEL1, Dependent Variable: Productivity

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 5 | 4.66103 | 0.93221 | 11.29 | <.0001 |
| Error | 84 | 6.93649 | 0.08258 | | |
| Corrected Total | 89 | 11.59752 | | | |

Root MSE 0.28736, Dependent Mean 0.71378, Coeff Var 40.25939, R-Square 0.4019, Adj R-Sq 0.3663.

Parameter Estimates (each DF = 1)

| Variable | Parameter Estimate | Standard Error | t Value | Pr > abs(t) | Standardized Estimate | 95% Lower Limit | 95% Upper Limit |
|---|---|---|---|---|---|---|---|
| Intercept | 0.79282 | 0.09866 | 8.04 | <.0001 | 0 | 0.59662 | 0.98902 |
| EmployeeCount | -0.00578 | 0.00202 | -2.87 | 0.0052 | -0.58066 | -0.00978 | -0.00177 |
| Teamwork | 0.01054 | 0.14145 | 0.07 | 0.9408 | 0.01431 | -0.27075 | 0.29183 |
| TechSkill | 0.17951 | 0.13708 | 1.31 | 0.1939 | 0.22398 | -0.09309 | 0.45211 |
| TeamEmployee | 0.00300 | 0.00244 | 1.23 | 0.2220 | 0.32180 | -0.00185 | 0.00785 |
| TechEmployee | 0.00686 | 0.00243 | 2.82 | 0.0060 | 0.63719 | 0.00202 | 0.01169 |

7) Residual analysis
[Figure: unstandardized residuals vs. Employee Count, by Treatment (legend: control, teamwork, technical, Total Population).]
[Figure: unstandardized residuals vs. Log10(Employee Count), by Treatment (same legend).]
[Figure: Normal P-P Plot of Regression Standardized Residual, Dependent Variable: Productivity (expected vs. observed cumulative probability).]

8) Transformation and Interpretation
Coefficients (Dependent Variable: Productivity)

| Model 1 | B | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | 1.417 | .284 | | 4.989 | .000 |
| Teamwork | -.035 | .409 | -.047 | -.085 | .932 |
| Technical Skills | -.618 | .388 | -.772 | -1.593 | .115 |
| Teamwork/Log10(Employee Count) interaction | .126 | .248 | .303 | .509 | .612 |
| tech/Log10(Employee Count) interaction | .710 | .243 | 1.471 | 2.919 | .005 |
| Log10(Employee Count) | -.564 | .182 | -.495 | -3.091 | .003 |

Model Summary: R = .654, R Square = .427, Adjusted R Square = .393, Std. Error of the Estimate = .28122.

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 4.954 | 5 | .991 | 12.529 | .000 |
| Residual | 6.643 | 84 | .079 | | |
| Total | 11.598 | 89 | | | |
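As a check in the spirit of 3i), the one-way ANOVA table in 6a can be rebuilt from the MEANS output alone, because SS_between = Σ n_i(ȳ_i − ȳ)² and SS_within = Σ (n_i − 1)s_i². The Python sketch below does this and also evaluates the section 8 log-scale model at a chosen employee count. It assumes that Teamwork and Technical Skills are 0/1 indicator variables with control as the baseline group; the output suggests this but does not state it. The dictionary and function names are hypothetical, and the p-value uses the closed form for 2 numerator df (3 groups).

```python
import math

# Per-group summaries copied from the PROC MEANS output: (n, mean, SD).
GROUPS = {
    "control": (30, 0.5533333, 0.2823709),
    "teamwork": (35, 0.6237143, 0.3365402),
    "technical": (25, 1.0324000, 0.2769067),
}


def anova_from_summaries(groups):
    """Rebuild a one-way ANOVA table from per-group (n, mean, SD)."""
    rows = list(groups.values())
    k = len(rows)
    n_total = sum(n for n, _, _ in rows)
    grand_mean = sum(n * m for n, m, _ in rows) / n_total
    # Between-group SS: weighted squared deviations of the group means.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in rows)
    # Within-group SS: each SD is sqrt(SS_i / (n_i - 1)).
    ss_within = sum((n - 1) * s ** 2 for n, _, s in rows)
    df_between, df_within = k - 1, n_total - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "df": (df_between, df_within, n_total - 1),
        "ss": (ss_between, ss_within, ss_between + ss_within),
        "F": ms_between / ms_within,
        "R2": ss_between / (ss_between + ss_within),
        "root_mse": math.sqrt(ms_within),
    }


def p_value_df1_2(f, df_within):
    """Exact P(F > f) for an F distribution with 2 numerator df."""
    return (1 + 2 * f / df_within) ** (-df_within / 2)


# Unstandardized coefficients (B) from the section 8 output.
COEF = {
    "const": 1.417, "log": -0.564,
    "team": -0.035, "team_x_log": 0.126,
    "tech": -0.618, "tech_x_log": 0.710,
}


def predicted_mean(group, employees):
    """Mean productivity from the log10 model, assuming 0/1 indicator coding."""
    x = math.log10(employees)
    mean = COEF["const"] + COEF["log"] * x  # control (baseline) line
    if group == "teamwork":
        mean += COEF["team"] + COEF["team_x_log"] * x
    elif group == "technical":
        mean += COEF["tech"] + COEF["tech_x_log"] * x
    return mean


if __name__ == "__main__":
    table = anova_from_summaries(GROUPS)
    p = p_value_df1_2(table["F"], table["df"][1])
    print("df:", table["df"])
    print("SS:", [round(v, 5) for v in table["ss"]])
    print("F = %.2f, p = %.2g" % (table["F"], p))
    print("R-square = %.6f, Root MSE = %.6f" % (table["R2"], table["root_mse"]))
    diff = predicted_mean("technical", 100) - predicted_mean("control", 100)
    print("technical - control at 100 employees: %.3f" % diff)
```

The recomputed df (2, 87, 89), sums of squares, F, R-Square and Root MSE should match the SAS output up to rounding of the printed means and SDs. Under the assumed coding, the technical-minus-control difference for a firm with E employees is −0.618 + 0.710·log10(E); at E = 100 that is −0.618 + 0.710 × 2 = 0.802, and each ten-fold increase in employee count raises this difference by about 0.71.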